3.0 Introducing Quality Management

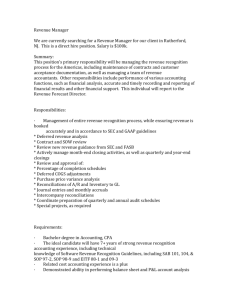
