2006 AARON, Colorist: from Expert System to Expert


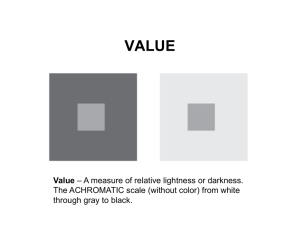

Harold Cohen, Emeritus Professor, University of California at San Diego. October 2006

My eleven-year-old daughter Zana (1) enjoys perfectly normal color vision, and, like any other normally perceptive person, she believes that color is a property of things. Consequently, she was quite put out when I told her that color isn’t really a property of things at all, it’s a property of vision. In the real world, I said, where the things are, there’s light, but not color. You need the right kind of eyes to see color, and animals that don’t have the right kind of eyes see the world in black and white and grey. I don’t see how that can be, she said, (2) how can they know what’s going on around them if they can’t see the colors? Oh, that’s easy, I said, as a matter of fact you and I know what’s going on mostly by how light or dark things are, even though we’re seeing in color. The color doesn’t add a whole lot. I don’t get it, she said. Look, I’ll show you, (3) I said. Well, she said, I think it does add a lot and I’m glad I have the right kind of eyes. Flowers are much nicer when they’re colored. You’re right, I said. (4) Daddies can be awfully pedantic. Of course she was right. It can’t make a difference to an eleven-year-old to say that roses only reflect light of a particular frequency instead of saying that roses are red. In fact, it didn’t make much difference to me, either, when I was painting. The principal property of the stuff I was squeezing out of the tubes was its color, and if one tube was labeled yellow ochre and another cadmium green, the difference between their contents was a difference in color; it would never have occurred to me to think about it as a difference in the frequency of the reflected light.
That state of mind started to change for me when I moved from painting to computing, and found that in place of the thirty or forty colors I had at my disposal I was stuck with the three so-called primaries common to all electronic displays: red, green and blue. Wait, though, I’m getting ahead of myself. I moved from painting to computing more than thirty-five years ago. My state of mind couldn’t have started to change then, because there weren’t any color displays to work on thirty-five years ago. I started with the much more general goal of writing a program that could function as an artist; an autonomous entity capable of generating original artworks; as autonomously, at least, as I could figure out how to make it. The result was a program I called AARON. I’ve been trying ever since to increase its autonomy. It was close to fifteen years later before color entered AARON’s world. Autonomy isn’t an absolute, of course. To the degree that AARON makes most of its images at night, while I’m asleep, (5) evidently it is more autonomous now than it was then, and its power as a colorist is quite apparent. (6) In fact, it’s a much stronger and a much more inventive colorist than I ever was myself. Its autonomy doesn’t extend to exercising judgment about what it’s doing, but then, I’m reminded every morning, when I have to decide which of the hundred or so images to discard and which few to keep and to print (7), that exercising judgment concerning the program’s use of color is quite difficult. Color is surely the least likely area of expertise for a computer program, especially one without a visual system. As I tried to explain to my daughter, color is fundamentally visual in the precise sense that it’s a property of the perceptual system that interprets the various wavelengths and amplitudes of the light from the real world. Animals that don’t have the right kind of retina don’t see color and a program without any kind of retina doesn’t see anything.
Exercising judgment is difficult also because our relationship to color is curiously problematic. We can see it and we can respond to it, yet we’re almost incapable of imagining it. You might suppose that’s because we’re mostly not experts. But, no; not even expert colorists can imagine the appearance of complex color relationships. No one proceeds by constructing an internal representation – an imagined version – of a complex color scheme and then telling someone else how to execute it; not until now, anyway, and, as you will see, what AARON has been given is not at all like what one might suppose an imagined scheme to be. The human artist needs to monitor the developing color scheme as each new color is added, to see it happening on the canvas, because he can’t reliably picture in his mind how that new color is going to affect the color scheme as a whole. He rarely gets it exactly right first time and he proceeds stepwise, correcting as he goes. Obviously, then, visual feedback is central to what the human colorist does. AARON has no visual system. No visual system, no visual feedback. No feedback, no basis for continuous correction. I got a handle on the problem of color about twenty years ago, after several years of frustrating lack of insight, when it became clear to me that if AARON didn’t have the hardware upon which my own expertise rested, then the standard expert system approach of emulating my own expertise was a nonstarter. I needed to build a system based on the resources AARON did have, which included, if I could devise a way of representing individual colors, an entirely un-human ability to build and maintain an internal model of arbitrarily complex color schema. But how to generate those colors? I needed to devise a set of rules flexible enough and robust enough to apply across the full range of unpredictable compositions that the program was capable of generating.
That rule-based coloring system served AARON very well for the next twenty years, but that isn’t what I want to talk about – it has been adequately described in several papers that can be got off the web. Enough here to say that in those twenty years it grew to be very long and very detailed; so long and detailed, finally, that introducing new rules, or making changes in the existing rules without breaking something was becoming more and more difficult. And a few months ago I replaced the entire system with a remarkably simple algorithm that not only performed at least as well as its predecessor, but opened up a much wider range of coloring strategies than had been possible before. It’s that algorithmic system I want to talk about. It really is simple; it could have been written in a couple of hours by any novice programmer, if he had known what to write. But its structure and its development are deeply embedded in the technology of color, and here’s where my daughter’s perfectly reasonable view of color as being simply a property of objects has to give way to a fuller understanding of how color gets to be perceived. If I’m to explain how and why this new algorithmic approach works as well as it does I’m going to have to start with a little background. Here’s a brief review, then, of what we know about color and about the various ways it can be represented and specified. To begin with, as I told my daughter, color is what we see; the sensations generated by the retina in response to the varying wavelength and amplitude of the light that’s reflected from the objects in the world. (8) It follows, then, that a full physical description of what we see as a color requires only three properties of the light itself, all of which can be represented by a graph showing the distribution of wavelengths against their amplitudes. Those properties go under a variety of names in various color theories; I’m going to refer to them as hue, brightness and saturation.
The hue is where the peak of the graph falls on the visible spectrum, while the saturation is a measure of how much of the total energy of the sample falls within how narrow a band. And brightness – where the sample would fall on a scale from black to white, how it would be recorded on black and white film, roughly speaking, how it would be perceived by an animal without color vision – is simply the total energy of the sample, which is represented here by the area under the curve. (8) For those of you who can remember black-and-white movies or who still use black and white film in your prehistoric cameras, it should be clear, as I showed Zana, that the brightness component provides most of the information we need about the world, and it’s not coincidental that the human eye has ten times as many receptors for light intensity – brightness – as it does for color. From a perceptual standpoint it remains far more important than either hue or saturation, even when we’re responding to the colors we see. From a perceptual standpoint also, it should be noted that there are many factors influencing how we deal with what we see. From my own position, one of the most important of these relates to the fact that vision, which works primarily in terms of brightness differentiation, functions primarily to provide information about the world we live in; it even has special features for emphasizing the edges of the objects with which we share that world. For the artist making representations of the world, that strong identification of brightness with objects means that color will always carry a secondary role; the more immediately the viewer recognizes what the image represents, the less important color will be to his perception of the image. At the extreme, it will be regarded as merely descriptive, an essentially minor property of what is being represented.
(If you think back to the history of the use of color in the last century, you’ll see that color finds its fullest expression with abstract painters, while only one or two notable exceptions to the norm, most particularly Bonnard and Klimt, contrive to keep color at a very high level of importance in a representational context.) This issue will explain a great deal about AARON’s subject matter. My interest in plant forms began (*9*) as a way of providing AARON with an ambiance for its newly acquired knowledge of the human figure. Over the years (*10*) it took over as the main subject (*11*) and in the most recent decade, as my preoccupation with color came to dominate, I found myself zooming in on the plant material until (*12*) the images began to teeter on an edge between representation and non-representation. I don’t consider them as abstractions exactly, because they retain their formal origins in plant growth. But they are far enough away from overt representation to break the perceptual link to brightness-domination and allow the viewer to recognize color as a primary element. (Interestingly enough, and as you will see, (*13*) the new algorithm seems to have made it possible to reintroduce brightness as an element without denying the primacy of color.) For any artist, representational or otherwise, manipulating color implies manipulating the three properties of light – the frequency, brightness and saturation. It’s rarely necessary to think in these analytical terms, however, and it would rarely be advantageous to do so, because we can’t manipulate them directly in any case. How we can manipulate them depends upon the medium. (14) The painter has a wide range of differently-colored physical materials to be squeezed out of tubes and mixed together. 
The computer artist uses viewing devices of one sort or another – CRT, LCD or plasma panels – that generate the color of the individual pixels from the relative settings of the three so-called primaries, red, green and blue. So you might say that the primary materials for the painter are the various pigments in the tubes of paint, while the primary materials for the computer artist are red, green and blue. Could the painter manage if he had only red, green and blue paint? Certainly not: (15) the three suffice for the display because mixing them there is additive – as more of each primary is activated it adds to the total energy of what you see on the screen. Crazy as it might sound until you see it for yourself, you get the brightest color, yellow, by mixing red and green. On the other hand, mixing paint is subtractive; physical materials act as filters, and the more filters you have the less light they will allow to pass. So mixing red and green paint produces (16) … well, it’s still sort of yellow-orange in this case, but it’s so degraded that we call it something else. Now, when I started to write the coloring rules for AARON I accepted that my primary materials were determined by the output device – the screen – so the output side of each rule would have to be an RGB specification that generated the desired values of the three primary characteristics of the color – the hue, brightness and saturation – within the color scheme as a whole. As you may imagine, finding two RGB mixtures that satisfied the desired relationship of all three characteristics was non-trivial. Mixing red, green and blue was still a bit like mixing paint – though rather like an inside-out version – but it took years to acquire the level of expertise in manipulating this three-color system that I’d had previously with my thirty or forty different physical colors. In any electronic display system, everything comes down to red, green and blue.
But I’d be in a much better position, I thought, if I could stop thinking in terms of red, green and blue and started to think more directly in terms of what their manipulation was supposed to accomplish; that is, if I could manipulate the hue, brightness and saturation directly. And, as it happens, RGB isn’t the only game in town; the Lisp system I use also has HLS, a mode widely used for computer graphic applications, that requires a color to be specified in terms of its hue, lightness and saturation and takes care internally of the conversion to the unavoidable red, green and blue. That sounded like exactly what I needed; and it almost was. It was only when I started to rewrite AARON’s coloring rules to provide HLS output, that I realized that the lightness component in HLS wasn’t quite the same as my notion of brightness. (17) Here’s a physical model that makes clear what HLS actually means and why lightness and brightness aren’t quite the same thing. As you see, you can think of HLS as a system in which a filter of some hue sits between a light source and the viewer. Lightness is a measure of the energy of the light source and saturation is the thickness of the filter. In my hue/brightness/saturation model, brightness represents the level of light energy arriving at the viewer’s retina, after it has passed through the filter. In the HLS model lightness is the available energy of the light source itself, before its brightness is reduced by the filter. The difference wasn’t critical – after all, the behavior of light doesn’t change because one adopts a new way of describing it – but it was obvious that lightness and saturation were both involved in determining brightness, and consequently that they couldn’t be considered separately, as I’d been able to consider the red, green or blue components of a color separately. What was much less obvious was how the resultant perceived color would be affected by different combined values of lightness and saturation.
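The interplay of lightness and saturation can be tried out with any HLS implementation. The following is a minimal sketch in Python rather than AARON's Lisp, using the standard `colorsys` module for the HLS-to-RGB conversion. The helper names are hypothetical, and the weighted sum used as a stand-in for perceived brightness (the common 0.299/0.587/0.114 luma weights) is an assumption for illustration, not the definition of brightness used in this talk.

```python
import colorsys

def hls_to_rgb255(hue_deg, lightness, saturation):
    """Convert hue in degrees plus lightness/saturation in 0..1 to 8-bit RGB."""
    r, g, b = colorsys.hls_to_rgb((hue_deg % 360) / 360.0, lightness, saturation)
    return tuple(round(c * 255) for c in (r, g, b))

def approx_brightness(hue_deg, lightness, saturation):
    """Rough stand-in for perceived brightness (assumed luma weights)."""
    r, g, b = colorsys.hls_to_rgb((hue_deg % 360) / 360.0, lightness, saturation)
    return 0.299 * r + 0.587 * g + 0.114 * b

# Same lightness, different saturation: the estimated brightness differs,
# so lightness alone does not fix how bright the color appears.
gray_blue = approx_brightness(240, 0.5, 0.0)
pure_blue = approx_brightness(240, 0.5, 1.0)
```

Holding lightness at 0.5 and raising the saturation of a blue hue from 0 to 1 lowers the estimated brightness considerably, which is one way to see why the two values cannot be chosen independently.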
It took me a while to develop a good intuitive grasp of how that would work, and I couldn’t re-write AARON’s rules without it. As things turned out, though, I didn’t rewrite the entire rule-base, because, long before I’d finished the job, I started to suspect that there may be a quite different way of going about things. I was motivated, in part, by a growing discomfort about what I was beginning to see as a hard limit on AARON’s autonomous expertise; the fact that whatever expert knowledge it had, it had only implicitly, available to it only through the rules I wrote. I thought it should have that knowledge available in explicit form, and consequently I saw one of my first tasks in building a new system to provide some of that knowledge. Let me explain: (18) if you look closely at any of AARON’s images, you’ll find that every single patch of color has three components; there’s the main color, and then there are two variants of that color which are used for the edges of the form, offset from it to some specifiable degree in any or all of the three primary characteristics. Rather than specifying the actual colors to be used – as I’d done with the RGB-based system – it should be possible to say something like “under such-and-such circumstances, shift the hue barely perceptibly for part of the edge.” And of course it is possible, provided that the program knows what “barely perceptibly” means. Well, what does it mean? Assuming that the spectrum is approximated by 360 colors, each a mixture of only two of the three primaries, (19) we can define the minimal perceptual shift for any color to be the number of steps you need to take, on one side or the other, before you begin to note a difference. That turns out to be anything but constant as you move along the spectrum; (20) you may not be able to distinguish between colors two or three steps apart in one place, while the change between two adjacent colors in another place sticks out like a sore thumb.
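As a rough illustration of the 360-step spectrum and of what a "barely perceptible" hue shift could look like in code, here is a small Python sketch. It assumes fully saturated hues at mid lightness, which gives each step at most two non-zero primaries. The function names are hypothetical, and the shift table must be supplied by the caller: the measured data is not reproduced in this text, so the uniform table in the usage lines is only a placeholder and not real perceptual data.

```python
import colorsys

def spectrum_360():
    """Return 360 fully saturated RGB triples, one per degree of hue."""
    return [colorsys.hls_to_rgb(step / 360.0, 0.5, 1.0) for step in range(360)]

def shift_hue(step, min_shift_table, direction=1):
    """Move a hue by the minimal perceptible number of steps at that point.

    min_shift_table[i] is the number of steps needed at hue i before a
    difference becomes visible; direction is +1 or -1 around the circle.
    """
    return (step + direction * min_shift_table[step % 360]) % 360

# Placeholder only: a real table would vary along the spectrum.
placeholder_table = [2] * 360
edge_hue = shift_hue(359, placeholder_table)
```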
As you may imagine, assembling this minimal perceptual shift data – that’s where this slide comes from – was no fun. I saw it as critical to the design of the first algorithmic version of the program, but it became less of an issue as I continued to work on the program and strategy shifted. In the current version – version three – the change from the center of a colored patch to its edge is controlled by a single contrast variable which is set for the entire image, and that seems to work just fine. Conversely, I was hardly aware at the outset of what has subsequently emerged as the central driving force behind the first version. It was the dawning suspicion that one could only go so far in developing a color scheme by picking individual colors because a color scheme can only be characterized adequately by the relationships between the colors, and that one needed to focus directly upon the nature of the relationships rather than the colors. In designing the first version of the new system, then, I bypassed entirely the specification of individual colors that had characterized the earlier system, and allowed those colors to emerge from the relationships the program generated in terms of hue, lightness and saturation. That needs to be explained. Hues were determined by subject matter. (21) It didn’t much matter what hue the program chose for leaves or flowers, but the distances between the hues for different elements – flowers, tendrils, branches and so on – did matter, (22) and to fix those distances for AARON’s entire output I settled on an additive series shifted to a random starting point. Every image would then have the same spacing of hues but no two of them would have the same hues or, consequently, the same colors. For the lightnesses and saturations to be associated with each of these hues, the program simply generated two lists of random values (23). These were then the only values that could be used for the entire image.
As each plant element came up for coloring – leaf, flower, whatever – its hue would be whatever had been prescribed for it and its lightness and saturation would be drawn randomly from the respective list of values. If there are seven object-related hues allocated to an image, then, and seven values in each of the two lists, each hue can appear in any of forty-nine variants, and a color scheme as a whole could involve almost three hundred and fifty distinct colors if there were that many objects to color. So, although all the leaves on a tree would have the same hue, no two of them were very likely to have the same color; just as no two leaves on a real tree under real lighting conditions would appear to be exactly the same color. It could hardly be simpler. It’s so simple it would be laughable but for the fact that these half-dozen lines of code worked at least as well as a heavy-weight expert system that had been refined continuously for more than a decade. Evidently limiting the lightness and saturation to a small set of fixed values provides coherence to the color scheme as a whole, though the basis for that coherence is anything but evident to the viewer. But what do I mean when I say that it worked at least as well as the previous version? It may not be entirely obvious to people in the engineering disciplines, but in the art-making game we don’t think about finding the “best” solution to a problem; we think about generating the widest possible range of excellent solutions. One limitation of that range under the old system of hand-made RGB rules was that it almost never produced grays. To generate grays in the RGB system you need close-to-equal amounts of red, green and blue, and I always found that difficult to specify while satisfying all the other requirements. Now if you look at my HLS model (24), you’ll see that zero saturation means literally that no hue is present, and you have a neutral gray controlled entirely by the lightness.
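The first version of the algorithm, as described above, can be sketched in a few lines. This is a Python illustration, not AARON's Lisp code. The function names are hypothetical, and the hue offsets are passed in by the caller because the actual series is not given here; the offsets in the usage lines are invented purely for the example.

```python
import random

def make_palette_v1(elements, hue_offsets, list_size=7, rng=random):
    """Preamble: fix hue spacing, then draw the two lists of random values."""
    start = rng.randrange(360)  # random starting point on the hue circle
    hues = {name: (start + offset) % 360
            for name, offset in zip(elements, hue_offsets)}
    lightnesses = [rng.random() for _ in range(list_size)]
    saturations = [rng.random() for _ in range(list_size)]
    return hues, lightnesses, saturations

def color_element_v1(name, palette, rng=random):
    """Development: prescribed hue, random lightness and saturation from the lists."""
    hues, lightnesses, saturations = palette
    return hues[name], rng.choice(lightnesses), rng.choice(saturations)

# Hypothetical elements and offsets, for illustration only.
palette = make_palette_v1(["leaf", "flower", "tendril"], [0, 30, 50])
leaf_color = color_element_v1("leaf", palette)
```

With seven values in each list, every hue has 7 × 7 = 49 possible lightness/saturation variants, and seven hues give 343 possible colors, which matches the "almost three hundred and fifty" mentioned above.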
With the new algorithm, then, the inclusion of variously neutral grays in a color scheme simply depended on what numbers were in the saturation list and which ones were selected for individual colors. And it quickly became apparent that from the same set of numbers the algorithm could generate a much wider range of color schemes than its predecessor could; not only schema (25) with both very pure and very gray colors, but also some strange, (26) almost monochromatic, compositions quite unlike anything AARON had ever generated before (27). So far, so good: a much extended range, generated simply by the numbers in a couple of lists. But just where on that range a particular image would fall was random, just as the numbers were. I thought the program should have some degree of control in the matter; that it should be able to choose where on the range it wanted to operate. By this time I had a more developed sense of how the various settings for lightness and saturation determine the kind of color they’ll generate. At one end, with both lightness and saturation close to their maximum values, we get relatively pure colors. At the other end, when both of them have very low values it means there is very little light and very little hue, so we have essentially dark grays. In between these extremes, (28) we can vary the brightness of the grays by varying lightness while keeping saturation constant. And we can vary the grayness by leaving the lightness alone and varying the saturation. In between, where both lightness and saturation are somewhere near the middle of their ranges of values, we get a palette of muted colors; not too saturated, not too light and not too dark. In short, the program can generate a lot of different coloring possibilities simply by picking random values for lightness and saturation, but the only way for it to choose among those possibilities is for it to control the ratios between the two sets of values.
Well, it can hardly do that over the infinite number of combinations of individual values in the lightness and saturation lists. But let’s suppose, now, that instead of dealing with those numbers individually, we group the numbers in each list into three ranges. (29) The high-value range may contain random numbers between .8 and 1.0, for example, the middle range between .4 and .65 and the low range between .15 and .35. Then we have (30) nine different ways of pairing the ranges; nine classes of lightness to saturation ratios, each of which will give rise to a distinct type of color scheme. Now, we’re unlikely to use all nine of these combinations in a single image, and we certainly don’t want a composition to be controlled by just one of them. An image comprised of exclusively high saturation colors is apt to look crude rather than vivid, just as an exclusively light color scheme will look weak rather than subtle. The vividness of a color is most effective in the presence of more muted colors, just as light colors need some dark colors to focus attention on their lightness. So AARON should probably use two or three of these range-ratios, but it needs to control how much each of them gets used within a single image. And if we want it to generate the widest gamut of outcomes from any particular set of ratios, we don’t want the mode of control to be too deterministic; we don’t want it to apply a low-low combination every fourth time, for example, and a high-high combination for the rest of the time. Rather, we want the program to specify only the average frequencies with which each of the range-ratios is chosen, leaving it to chance to determine which will actually be used on any particular occasion.
Actually, that has long been AARON’s way of making choices; it calls a preference function like this – (31) (prefs 60 ‘a’ 30 ‘b’ 10 ‘c’) – that guarantees only a statistical likelihood that ‘a’ will be returned sixty percent of the time; (that is, ‘a’ will be returned if a random number between 0 and 100 is less than 60). The new version, using essentially the same strategy to select from among predetermined range-ratios, uses a script like this: (32) (4 'hh 3 'hl 2 'lh) where the first character of each range symbol indicates the lightness range and the second indicates the saturation range. So if the 'hl range is selected, as it will be 1/3 of the time in this example, the lightness will be randomly selected from its high range and the saturation from its low range. On to the second version of the algorithm, then. Once again, it has two phases, a preamble in which the various controls are set up, and the development, in which those controls are applied element by element until the image is complete. And, as before, the first step in the preamble deals with the spacing of hues; a sequence of numbers is randomly located somewhere on the 360-point circle and the resultant hues are assigned to the different elements of the composition – leaves, tendrils and so on. Three ranges of random values are then assigned to lightness and to saturation, one each for high, medium and low values. (33) These are the only values permitted for the entire composition, as they were for the first version; but now the selection is further restricted to the specified ranges. The final step in the preamble is to compose a preference script, which will determine subsequently how those ranges get selected.
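The preference function and the range-based selection of the second version can be sketched as follows. This is an illustrative Python rendering, not AARON's Lisp. The range limits are the example figures quoted above; the key "m" for the middle range, the number of values held per range, and all function names are assumptions made for the sketch.

```python
import random

# Example limits quoted in the text; "m" for the middle range is an assumed key.
RANGES = {"h": (0.8, 1.0), "m": (0.4, 0.65), "l": (0.15, 0.35)}

def prefs(script, rng=random):
    """Return one item from (weight, item) pairs with probability weight/total."""
    total = sum(weight for weight, _ in script)
    roll = rng.random() * total
    cumulative = 0
    for weight, item in script:
        cumulative += weight
        if roll < cumulative:
            return item
    return script[-1][1]  # guard against floating-point edge cases

def make_range_values(values_per_range=3, rng=random):
    """Preamble: draw the only values permitted in each range for one image."""
    return {key: [rng.uniform(lo, hi) for _ in range(values_per_range)]
            for key, (lo, hi) in RANGES.items()}

def pick_lightness_saturation(script, light_values, sat_values, rng=random):
    """Development: choose a range pair, then a value from each chosen range."""
    pair = prefs(script, rng)  # e.g. "hl": high lightness, low saturation
    return pair, rng.choice(light_values[pair[0]]), rng.choice(sat_values[pair[1]])

script = [(4, "hh"), (3, "hl"), (2, "lh")]  # the (4 'hh 3 'hl 2 'lh) script
light_values = make_range_values()
sat_values = make_range_values()
pair, lightness, saturation = pick_lightness_saturation(script, light_values, sat_values)
```

Only the long-run frequencies are fixed by the script; any single call may return any of the listed pairs.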
(34) A script like the one we just looked at – (4 'hh 3 'hl 2 'lh) – specifies that in the development stage, 4/9ths of the time both lightness and saturation should use values from their high ranges; a third of the time lightness should be high and saturation low; and 2/9ths of the time lightness should be low and saturation high. Again, though, let me stress that 4/9ths, 1/3rd and 2/9ths represent preferences, not absolutes; though given the large number of selections required in a complex image I assumed that the distribution of ranges would average out quite close to the preferences. Well, in practice they weren’t as close as I thought they would be. But, more to the point, the intent of the algorithm wasn’t really to control how frequently the various range-combinations would be selected, but rather to control the distribution of the different range-ratios within the physical structure of the image; the amount of space, that is, that would accrue to each one. That’s quite different, of course, and in making its preference-based selections in that second version the program was indifferent to whether it was about to color a very large leaf or a very thin branch. That led, then, to the third, current version of the algorithm. AARON still doesn’t concern itself with the size of an element when it’s selecting a color for it, but now it keeps track of how much space actually gets used by each of the range-ratios in the coloring process. Eighty percent of the time it uses the regular frequency-based selection for the next area to be colored, (35) but every fifth time it compares the use records of all of the active range-ratios to the usage called for in the preference script – (this isn’t the script I just showed you, obviously). The third column has the original integer weightings converted into real proportions, the fourth has the current space usage of each range-pair and the final column has the difference between the two.
The program then chooses the range-pair that has fallen furthest behind its preference to replace the frequency-based selection for the next area to be colored. As you can see from the numbers, the strategy works surprisingly well, with usage remaining remarkably close to the preferences. And what that means, of course, is that the kind of coloring produced is predictably linked to the preference script that generated it. That’s a significant improvement for what appears to be a relatively small change to a simple algorithm. But, in fact, what I’ve presented as a simple change of strategy has actually changed the very nature of the algorithm in a fundamental way, in that what was truly a simple method, blind to its own production, is now required to sample and evaluate data from an unpredictably developing domain in order to stay on track. Indeed, I’m not even sure whether the term ‘algorithm’ is appropriate any longer. Perhaps ‘meta-algorithm’ might be a better term. Whatever I call it, however, it represents fifty years of accumulated expertise as a colorist – together with twenty years of accumulated expertise in programming – all collapsed into a few lines of code. I have still to think through the implications of what that means to our understanding of the nature of human expertise. And that’s pretty much the whole story to date. Or rather, it’s the whole of the story with respect to color, which is only one part of the larger story of AARON; how it does what it does. I haven’t even mentioned how it generates the forms it uses or how it controls composition, for example, both of them having a profound effect upon how the viewer reads the image. Even while teetering on the edge of representation, there’s a distinct difference in reading (36) when the branching structure of the tree is much in evidence, compared to those cases where the branches are almost entirely hidden (37).
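The area-tracking correction of the third version, described earlier in this section, can be sketched like this. It is a Python illustration under stated assumptions, not AARON's code: the class and method names are hypothetical, "every fifth time" is implemented with a simple call counter, and the caller is assumed to report the area of each element after coloring it.

```python
import random

class RangeRatioChooser:
    """Frequency-based choice, corrected every fifth call by recorded area use."""

    def __init__(self, script, rng=random):
        total = sum(weight for weight, _ in script)
        self.pairs = [pair for _, pair in script]
        self.weights = [weight for weight, _ in script]
        # Integer weightings converted into target proportions of space.
        self.target = {pair: weight / total for weight, pair in script}
        self.used_area = {pair: 0.0 for pair in self.pairs}
        self.calls = 0
        self.rng = rng

    def _by_frequency(self):
        return self.rng.choices(self.pairs, weights=self.weights, k=1)[0]

    def _furthest_behind(self):
        total = sum(self.used_area.values())
        if total == 0:
            return self._by_frequency()
        # Largest shortfall: target share minus share of space used so far.
        return max(self.pairs,
                   key=lambda p: self.target[p] - self.used_area[p] / total)

    def choose(self):
        self.calls += 1
        if self.calls % 5 == 0:
            return self._furthest_behind()
        return self._by_frequency()

    def record(self, pair, area):
        """Report how much space the element just colored actually occupied."""
        self.used_area[pair] += area

chooser = RangeRatioChooser([(4, "hh"), (3, "hl"), (2, "lh")])
first_pair = chooser.choose()
chooser.record(first_pair, area=12.5)  # hypothetical area of the colored element
```

The choice itself still ignores the size of the element about to be colored; only the periodic comparison against recorded usage pulls the distribution of space back toward the script.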
And one of the most important factors is what isn’t there – the shapes and sizes of the bits of background that show through the trees. So a lot rests upon how large the program allows any single element to be (38) and upon how much space it allows the complete trees to occupy. (39) Even the proportions of the framing rectangle (40) play their part in determining how the viewer identifies what he sees and how he prioritizes his perception of its elements. That’s all far beyond the scope of a single one-hour talk, however, and I’ll conclude this one with a few of the images AARON made (41) one night a couple of weeks ago. It had finished a hundred and twenty-nine images and was still at it when I interrupted the next morning. (42) Then it took me about the same time to examine them all that it took AARON to make them. They were all of the quality of those I’m showing you, and I discarded most of them simply because there was no point in keeping so many; but I (43) was quite unable to get the remaining number down below thirty, even though I knew that only a few of those thirty would ever get printed (44). If, as I’ve said, the goal in the arts is to generate a rich output of excellent work, not to find the single “best” solution to some problem, then I clearly have no reason for discomfort; not on that score, anyway. (45) From the standpoint of increasing the program’s autonomy, on the other hand, I do still have reason for discomfort. I’ve provided AARON’s preference scripts; I’ve provided the crucial limits within which the program sets the values for the three ranges for lightness and saturation. (46) I’m the one who has to decide which of its images to keep and which to discard. I’ve devised the new form of the program, while AARON remains incapable of self-modification. What would drive self-modification when it remains incapable of assessing what it has already done?
(47) As I’ve indicated, AARON’s performance involves a relatively large number of controlling variables. Their values can be recorded and considered, but they don’t constitute a useful description of the images to which they give rise. I have access to the images; AARON has access only to the numbers that produced them. Does that mean that I need to devise a descriptive scheme for its images that corresponds to what I see, that AARON can understand? And do I then need to correlate different combinations of variable values with my own assessment of the images to which they give rise? Is that actually possible? I don’t know; it seems like a major problem. But I also don’t know whether there may exist some much simpler route to self-assessment; some “meta-algorithmic” approach as different from this conventional view of the problem as the new AARON is different from its predecessors. If so, I have no idea what it may be; I hope I’ll recognize it, if and when I stumble into it.