Effect of Pre-paid Incentives on Response Rates

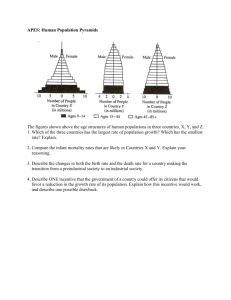

The Effect of Partial Incentive Pre-Payments on Telephone Survey Response Rates

Todd Robbins, Abt Associates Inc.
Ting Yan, Abt Associates Inc.
Donna Demarco, Abt Associates Inc.
Erik Paxman, Abt Associates Inc.
Rhiannon Patterson, Abt Associates Inc.

Paper presented at the 2003 Annual Conference of the American Association for Public Opinion Research, May 15-18, 2003, Nashville

Direct inquiries to: Todd Robbins, Survey Director, Abt Associates, 55 Wheeler St., Cambridge, MA 02138, 617-520-3062, todd_robbins@abtassoc.com

Please do not cite without first contacting Todd Robbins (todd_robbins@abtassociates.com)

Abstract

We conducted a randomized experiment to assess the effect on interview response rates of differing methods for providing incentive payments to respondents to a telephone survey. This experiment is part of a larger economic impact evaluation of 600 individuals nationwide participating in Individual Development Account (IDA) programs funded by the Assets for Independence Act (AFIA). In this program participants open special savings accounts that are eligible for matched savings if the money is used to purchase homes, start businesses or pursue additional education. As part of the congressionally-mandated evaluation and longitudinal study of this program we randomly assigned different response incentive plans to 600 Individual Development Account program participants. All respondents receive $35 for completing a 30-minute telephone interview. Half of the sample, however, was randomly chosen to receive ten of the 35 dollars in an advance letter that introduces the respondents to the study, asks for their participation and informs them that upon the completion of the interview they will receive a twenty-five dollar check. The other half of the sample received the advance letter without a ten-dollar check, but with the assurance that they will receive $35 once they have completed the interview. The first wave of interviewing was completed in March of 2003.
Preliminary data show that overall there is no statistically significant response rate difference between the two groups. Existing literature suggests that pre-paid incentives may yield higher response rates. Our data do not support this hypothesis. This paper will discuss our findings, including a determination as to whether pre-paid incentives reduce the number of calls needed to secure a completed interview.

Introduction

Survey organizations periodically offer material and monetary incentives to respondents to induce cooperation (Dillman 2000; Nederhof 1983). Numerous studies have examined the effects of such incentives on data quality (Davern, Rockwood, Sherrod, and Campbell 2003; Nederhof 1983; James and Bolstein 1990; Willimack, Schuman, Pennell and Lepkowski 1995), survey costs (Berk, Mathiowetz, Ward, and White 1987; Singer, Van Hoewyk, and Maher 2000) and response rates (Berk, Mathiowetz, Ward, and White 1987; Willimack, Schuman, Pennell and Lepkowski 1995; James and Bolstein 1990). Additionally, researchers have studied how other aspects of study design, such as advance and follow-up mailings, interact with incentive plans to affect response rates (James and Bolstein 1990; Gunn and Rhodes 1981).

Although the effects of incentives on data collection modes other than mail surveys are not as well documented (Willimack, Schuman, Pennell and Lepkowski 1995; Singer, Van Hoewyk, Gebler, Raghunathan and McGonagle 1999), there is published evidence that incentives have a positive effect on response rates for interviewer-mediated surveys, such as face-to-face interviewing (Willimack, Schuman, Pennell and Lepkowski 1995) and telephone interviewing (Cantor, Allen, Cunningham, Brick, Slobasky 1997; Singer, Van Hoewyk, and Maher 2000; Singer, Van Hoewyk, Gebler, Raghunathan and McGonagle 1999; Gunn and Rhodes 1981).
Studies assessing the differences between pre-paid incentives and promised incentives for interviewer-mediated surveys are even more difficult to find. Singer and her coauthors’ (1999) systematic review of 34 studies looking at the effect of incentives on interviewer-mediated surveys reports that prepaid incentives yield higher response rates than do promised incentives. Additionally, Singer, Van Hoewyk, and Maher (2000) report on their experiments conducted during a two-year telephone survey called the Survey of Consumer Attitudes. In this experiment the authors provide various incentive and pre-notification plans to their monthly dialing cohorts. These variations included promised incentive amounts of five or ten dollars, advance letters sent or not sent, prepaid vs. promised incentives, and conditions where interviewers are ‘blind’ to the various incentive conditions. In one experiment a five dollar bill is included with an advance letter to a random half of the sample. This pre-payment condition does lead to higher respondent cooperation.

We conducted an incentive experiment during a 14-month telephone interviewing effort from January of 2002 to February 2003. We compared an incentive condition that included both a prepaid and promised incentive to a plan that only promised an incentive once the interview was completed. We also conducted a preliminary examination of the relationship between incentive plans and the interviewer effort required to complete an interview. This paper describes the experiment and reports our findings.

Study Background

The incentive experiment was conducted as part of a larger evaluation of the Individual Development Account (IDA) program offered through the U.S. Department of Health and Human Services Assets for Independence Act (AFIA, Public Law 105-285, enacted on October 27, 1998).
The Assets for Independence Demonstration program provides federal funding for state and local individual development account (IDA) demonstration projects nationwide. Under this program, IDAs are personal savings accounts that enable low-income persons to combine their own savings with matching public or private funds for first-time home purchase, business start-up or expansion, or post-secondary education. The national evaluation of the AFIA demonstration program is composed of two parts: an impact study and a process study. The two components of the evaluation work together to present a portrait of IDA account holders and the grantee organizations implementing the IDA projects. The impact study will examine the effects of the demonstration on participants, based on a three-year longitudinal survey of 600 IDA participants nationwide. It will examine the extent to which AFIA-funded IDAs affect participant savings and asset accumulation. The primary program effects that this analysis will assess are as follows: increase in savings; increase in homeownership; increase in post-secondary education; and increase in self-employment. A series of secondary effects will also be examined, as follows: increase in home equity; increase in earned income; and reduction in consumer debt. 
The survey instrument, which draws questions primarily from the Survey of Income and Program Participation (SIPP) core module and selected SIPP topical modules, has been designed to collect information for the following purposes: to specify outcome measures with respect to employment status, earned income, savings, homeownership, business ownership, vehicle ownership, post-secondary educational attainment, consumer debt, and receipt of major means-tested benefits (including the earned income tax credit); to specify explanatory variables with respect to race/ethnicity, marital status, presence of children, and household composition; and to identify IDA-related program services received and to assess the factors promoting or hindering the use of one’s IDA.

Sample Selection

Our survey research sample consists of a randomly selected national sample of 600 AFIA account holders who opened their IDA accounts during 2001. The sample was selected at two points in time. The first-half survey sample consisted of 300 randomly selected adults who opened their accounts in AFIA projects nationwide during the six-month reference period January-June 2001. A “reserve sample” of 300 was also selected at this time. Primary and reserve samples, each also 300 in size, were subsequently selected from accounts opened during July-December 2001.

We constructed the sampling frame (the list of accounts from which the sample was selected) by sending a letter to each AFIA grantee that received a FY 1999 or FY 2000 AFIA grant and requesting a complete listing of their current AFIA account holders and the date on which each IDA account was opened. The lists of account holders provided by all grantees in response to this request were then used to identify those accounts opened during January-June 2001.
In compiling the sampling frame, we excluded accounts held with two “grandfathered” state grantees because their IDAs are administered under pre-existing state policies that differ from the AFIA rules and regulations.

The sample for each six-month period was selected using a simple random sampling method, in which each account in the associated sampling frame has an equal probability of selection. For example, for the first-half sample we used a sampling frame that consisted of 1,227 accounts distributed by the month of account opening. Thus, the selection probability was 300/1,227, or 0.244. Sample selection for the second half of the year mirrored our procedures for the January-June sample. This sampling frame, however, consisted of 1,356 accounts that were opened between July and December of 2001. The selection probability was 300/1,356, or 0.221.

Our sampling approach anticipated that some cases selected into the primary sample would be found ineligible for the survey. Cases were considered ineligible for the survey under any of the following circumstances: the respondent is less than 18 years of age at the time of the interview; the respondent has no recollection of opening an IDA with the identified grantee or subgrantee organization; or the respondent indicates that the month of account opening is more than two months prior to the listed month (i.e., the month indicated by the grantee or subgrantee organization). Each case in the primary sample deemed ineligible for interviewing was replaced with another case randomly selected from the accounts opened in the corresponding listed month. To provide a source of these replacement cases, a “reserve sample,” equal in size to the primary sample, was selected for each month.
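The selection probabilities quoted in this section can be checked with a few lines of Python. The sketch below is illustrative only: the account identifiers are hypothetical placeholders, the function name is our own, and the draw ignores the month-by-month matching of replacement cases described above. It simply draws a primary simple random sample and then a reserve sample from the accounts that remain.

```python
import random


def draw_primary_and_reserve(frame, n, seed=0):
    """Draw a primary simple random sample of size n, then a reserve
    sample of size n from the accounts not chosen for the primary sample."""
    rng = random.Random(seed)                  # fixed seed for a repeatable draw
    shuffled = rng.sample(frame, len(frame))   # random permutation of the frame
    return shuffled[:n], shuffled[n:2 * n]


# Hypothetical account IDs standing in for the 1,227 first-half accounts.
first_half_frame = list(range(1, 1228))
primary, reserve = draw_primary_and_reserve(first_half_frame, 300)

# Selection probabilities as described in the text.
p_primary_first = 300 / 1227          # first-half primary sample
p_primary_second = 300 / 1356         # second-half primary sample
p_reserve_first = 300 / (1227 - 300)  # first-half reserve sample

print(round(p_primary_first, 3), round(p_primary_second, 3), round(p_reserve_first, 3))
```

Because every permutation of the frame is equally likely, each account has the same chance of landing in the first 300 positions, which is what the simple random sampling design requires.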
For example, for the first-half sample, the selection probability for the reserve sample was 300/(1,227-300), or 0.324.

Survey Protocol

Each of the survey respondents received $35 for completing the first annual telephone interview, which occurred approximately 12 months after the account opening and lasted approximately 30 minutes. These account holders will be interviewed again at 24 and 36 months after their IDA account openings. During this first round, we interviewed 499 participants.

The sample members in each monthly cohort were randomly assigned to two groups of equal size. Half of each month’s sample received Incentive Plan A. This incentive plan consisted of a $10 check with an advance letter that described the purpose of the study, promised an additional $25 upon completion of the interview, reminded them of the specific IDA program name that they joined, and asked for their participation. The advance letters were sent via first-class mail, five business days prior to dialing each month’s sample. The remaining half of each month’s sample received Incentive Plan B. This group did not receive a prepaid incentive, but received an advance letter that promised a $35 check upon completion of the telephone interview and was otherwise identical to the letter sent to Plan A sample members. Both types of letters included a toll-free phone number so respondents could call our phone center either to complete the survey or to tell us the best times to contact them.

To help with the interviewing, we used a CATI (Computer Assisted Telephone Interview) system. The system required interviewers to remind account holders during the introduction that they would receive either a $25 or a $35 check (depending on the type of incentive plan) upon completion of the interview. We used directory assistance and Experian databases to obtain better contact information for respondents whom we could not initially locate. Phone center staff had four weeks to contact each respondent.
The names of the respondents who could not be reached within the four-week period were forwarded to an expert telephone locator, who spent an additional month attempting to locate and contact these respondents. Initial refusals were also handed over to the telephone locator, who was also trained in refusal conversion.

Effect of Pre-paid Incentives on Response Rates

For this first wave of interviewing, data collection began on January 22, 2002 and continued through March 15, 2003. The overall final response rate across both incentive plans A and B was 83%. The final response rate for Plan A ($10 prepayment + $25 promised) was 81% while the final response rate for Plan B (promised incentive of $35) was 85%.

Figure 1 shows the sample size and the number of completed interviews for each incentive plan.

[Figure 1: Sample and Completes by Incentive Plans. Plan A ($10 prepayment): sample 300, completes 243. Plan B (no prepayment): sample 300, completes 256.]

Figure 2 shows the final response rates by incentive plan.

[Figure 2: Response Rates by Incentive Plans. Plan A ($10 prepayment): 81.00%. Plan B (no prepayment): 85.33%.]

The response rate for Plan B is slightly higher than the response rate for Plan A, a result that seems to contradict most of the previous work on pre-paid incentives. However, a chi-square test (Table 3) indicated no statistically significant difference between the response rates for Plan A and Plan B respondents. The p-value for the chi-square test is 0.156, which falls considerably above the conventional significance threshold of 0.05. As a result we fail to reject the null hypothesis of equal response rates between incentive plans A and B.

Table 3: Chi-square statistics (sample size = 600)

| Statistic | DF | Value | Prob. |
|---|---|---|---|
| Chi-Square | 1 | 2.0119 | 0.1561 |
| Likelihood Ratio Chi-Square | 1 | 2.0166 | 0.1556 |
| Continuity Adj. Chi-Square | 1 | 1.7143 | 0.1904 |
| Mantel-Haenszel Chi-Square | 1 | 2.0086 | 0.1564 |

Effect of Incentives on Interviewer Effort

We also examined the results of our data collection effort to determine whether either of our incentive plans affects the interviewer effort required to complete an interview. For this experiment we defined interviewer effort as the number of dialings required to complete an interview. There is some precedent for this in the literature on incentives and survey costs (Berk et al. 1987; Singer et al. 2000). Singer and her co-authors found that the data from the Survey of Consumer Attitudes showed that a pre-paid $5 incentive “significantly reduced the number of calls to close out a case” (p. 185). The pre-payment also reduced the number of interim refusals, leading the authors to conclude that the prepaid incentives were cost-effective.

Our experiment focuses on the number of calls to achieve a completed interview. We calculated the average number of calls to complete an interview for each of the two incentive plans. We did not, however, calculate the average number of calls to close out a case. The average number of calls needed to complete an interview for Plan A sample members ($10 pre-payment + $25 promised) was 8.79, while the average number of calls needed for Plan B sample members ($35 promised) was 7.63 (see Figure 4).

[Figure 4: Mean Number of Dialings by Incentive Plans. Plan A ($10 prepayment): 8.79. Plan B (no prepayment): 7.63.]

A two-sample t-test was performed on the difference in the mean number of calls required to achieve a completed interview under each incentive plan. As shown in Table 5, we again fail to reject the null hypothesis at α = 0.05. The incentive plans have no apparent impact on the mean number of calls to achieve a completed interview.
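As a cross-check on the response-rate comparison, the Pearson and continuity-adjusted chi-square values in Table 3 can be recomputed from the counts in Figure 1 (243 of 300 completes under Plan A, 256 of 300 under Plan B). The following is a minimal sketch using only the Python standard library; the function names are our own, and it is not the software used for the original analysis.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Return (Pearson, continuity-adjusted) chi-square statistics
    for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    diff = abs(a * d - b * c)
    pearson = n * diff ** 2 / denom
    # Yates continuity correction: shrink |ad - bc| by n/2 before squaring.
    adjusted = n * max(diff - n / 2, 0) ** 2 / denom
    return pearson, adjusted


def p_value_df1(statistic):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(statistic / 2))


# Counts taken from Figure 1: completes and non-completes for each plan.
plan_a_completes, plan_a_sample = 243, 300
plan_b_completes, plan_b_sample = 256, 300

pearson, adjusted = chi_square_2x2(
    plan_a_completes, plan_a_sample - plan_a_completes,
    plan_b_completes, plan_b_sample - plan_b_completes,
)
p_pearson = p_value_df1(pearson)
p_adjusted = p_value_df1(adjusted)

print(f"Pearson chi-square = {pearson:.4f}, p = {p_pearson:.4f}")
print(f"Continuity-adjusted chi-square = {adjusted:.4f}, p = {p_adjusted:.4f}")
```

With one degree of freedom the chi-square tail probability reduces to a complementary error function, so no statistics package is needed for this check.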
Table 5: t-test on mean number of calls

| If variances are | t-statistic | DF | Pr > t |
|---|---|---|---|
| Equal | 1.464 | 497 | 0.1439 |
| Not equal | 1.458 | 479.18 | 0.1454 |

Discussion

The findings may at first seem curious given that the existing research suggests that prepaid monetary incentives positively affect response rates, even for interviewer-mediated surveys. Our survey research sample consisted of a random list of IDA program participants. These participants are low-income, as they must either be income-eligible for the federal earned income tax credit (EITC) or be receiving (or eligible for) benefits or services under a state’s Temporary Assistance for Needy Families (TANF) program, and have assets of less than $10,000 (excluding the value of one’s primary dwelling and one motor vehicle). Most of the participants are working. Given the nature of the program (to encourage savings), and the demographics of the survey participants (low income), we can assume that $35 is a strong incentive to complete the interview, as shown by our overall response rate of 83%. We hypothesized that $10 in advance would be a strong motivator to complete the interview. Our findings, however, do not show an effect of the $10 pre-paid advance in the direction we might expect. The $10 pre-paid advance coupled with the promised incentive of $25 is not significantly more effective than the straight promise of $35.

Since the literature suggests otherwise, what may account for the divergence of our findings from previous ones? First of all, from the experimental design point of view, we did not provide the entire incentive amount when we sent the pre-payment in the advance letter to the Plan A sample. Instead, only a fraction ($10) was sent, with a promise to send an additional $25 once the interviews were completed. The combined effects of payment amount together with payment time may be the reason that our experimental findings diverge from previous studies.
We may have seen a different outcome if we had offered the entire $35 in advance to half of our sample instead of the $10 advance payment and the promise of another $25.

Second, we can only hypothesize about the psychological factors that could have contributed to the lack of impact of the $10 incentive on the response rate. For instance, the $10 advance incentive may not have added to respondents’ confidence that they would be paid the promised incentive once they completed the interview, or the $10 prepayment may not have been large enough to persuade the respondents to take the time to speak to an interviewer at some later, unspecified time. In addition, the topic of the survey might be deemed salient enough that respondents either have the interest to do the survey or feel obliged to do the survey as an assumed condition of continued participation in the IDA program. This is consistent with the leverage-salience theory of survey participation posited by Groves, Singer and Corning (2000). According to Groves, Singer and Corning, respondents assign different salience and leverage to survey design features. When they perceive the topic of the survey itself, for instance, to be very important, monetary incentives (a design feature given less leverage) would play a less significant role in their decision to cooperate and participate. As a result, respondents who are more interested in the topic generally show a smaller incentive effect. In our experiment, the respondents are already committed to the IDA program. Furthermore, we confirmed our legitimacy in the advance letter by naming the specific agency that administers the individual accounts. Both attributes are deemed more salient and given more leverage than monetary incentives. As a result, the positive effect of an incentive may diminish.

Third, published experiments offered much smaller monetary incentives. We suspect that a ceiling effect exists when it comes to the incentive amount.
Statistically significant differences may disappear when higher incentives are offered. The mail survey literature does show that response rates increase as the incentive amount increases, but most of these ‘experimental’ increases in response rates, and in the total incentives offered, are small. It is possible that a kind of diminishing return occurs when incentives over a certain threshold are offered.

Conclusion

This paper summarizes an experiment we conducted to supplement published data about the effects of pre-paid incentives on interviewer-mediated surveys. In our experiment, we instituted two types of incentive plans: one in which a portion of the incentive was prepaid, while the remainder was promised and delivered after the interview; and one in which the entire incentive was promised and delivered once the interview had been completed. We then examined data collected during a 14-month telephone interviewing effort to determine whether incentive type affects response rates and/or the effort required to complete interviews. The results of our experiment indicate no significant difference in either response rates or effort required to complete an interview.

Researchers must continue to untangle the effects of various incentive plans on telephone survey response rates. We were unable to test any other incentive plans, such as a ‘no-incentive’ condition, varying total incentive amounts, or fully pre-paid incentive amounts. More of these varying conditions should be tested in future work. In particular, although other evidence suggests that higher monetary incentives are more effective in raising response rates than lower amounts, we do not know at what point a ceiling effect is reached and higher incentive amounts (whether pre-paid or not) become less effective.

References

Berk, Marc L., Nancy A. Mathiowetz, Edward P. Ward and Andrew A. White. 1987. “The Effect of Prepaid and Promised Incentives: Results of a Controlled Experiment.” Journal of Official Statistics 3: 449-457.

Cantor, D., Bruce Allen, Patricia Cunningham, J. Michael Brick, Renee Slobasky, Pamela Giambo, and Genevieve Kenny. 1997. “Promised Incentives on a RDD Survey.” Proceedings of the Eighth International Workshop on Household Survey Nonresponse. 219-228. Mannheim, Germany.

Church, Allan H. 1993. “Estimating the Effect of Incentives on Mail Survey Response Rates: A Meta-Analysis.” Public Opinion Quarterly 57: 62-70.

Davern, Michael, Todd H. Rockwood, Randy Sherrod, and Stephen Campbell. 2003. “Prepaid Monetary Incentives and Data Quality in Face-to-Face Interviews.” Public Opinion Quarterly 67: 139-147.

Dillman, Don A. 2000. Mail and Internet Surveys: The Tailored Design Method. New York: John Wiley and Sons, Inc.

Groves, Robert M., Eleanor Singer, and Amy Corning. 2000. “Leverage-Salience Theory of Survey Participation: Description and an Illustration.” Public Opinion Quarterly 64: 299-308.

Gunn, Walter J., and Isabelle N. Rhodes. 1981. “Physician Response Rates to a Telephone Survey: Effects of Monetary Incentive Level.” Public Opinion Quarterly 45: 109-115.

James, Jeannine M., and Richard Bolstein. 1990. “The Effect of Monetary Incentives and Follow-up Mailings on the Response Rate and Response Quality in Mail Surveys.” Public Opinion Quarterly 54: 346-361.

Nederhof, Anton J. 1983. “The Effects of Material Incentives in Mail Surveys: Two Studies.” Public Opinion Quarterly 47: 103-111.

Singer, Eleanor, John Van Hoewyk and Mary P. Maher. 2000. “Experiments with Incentives in Telephone Surveys.” Public Opinion Quarterly 64: 171-188.

Singer, Eleanor, John Van Hoewyk, Nancy Gebler, Trivellore Raghunathan, and Katherine McGonagle. 1999. “The Effect of Incentives on Response Rates in Interviewer-Mediated Surveys.” Journal of Official Statistics 15: 217-230.

Willimack, Diane K., Howard Schuman, Beth-Ellen Pennell and James M. Lepkowski. 1995. “Effects of a Prepaid Nonmonetary Incentive on Response Rates and Response Quality in a Face-to-Face Survey.” Public Opinion Quarterly 59: 78-92.