Ch 4 Jackson

advertisement

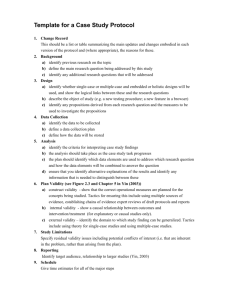

CHAPTER 4 VALIDITY

OBJECTIVES
- In this chapter, we discuss:
  o the methods used to estimate validity with norm-referenced tests
  o the relationship between reliability and validity
  o factors that influence validity
- The methods used to estimate validity with criterion-referenced tests are discussed at the end of the chapter.
- At the completion of this chapter, you should be able to:
  1. define validity and outline the methods used to estimate it
  2. describe the influence of test reliability on validity
  3. identify the factors that influence validity
  4. select a valid criterion score based on measurement theory

INTRODUCTION
- Certain characteristics are essential to measurement; without them, little faith can be put in the measurement and little use can be made of it.
- Two of these characteristics are reliability and objectivity (Chapter 3).
- A third is validity, which according to the AERA is the most important of the three.

THE OLD DEFINITION
- A test or instrument is valid when it measures what it is supposed to measure.
- Leg extension exercises are valid indicators of quadriceps strength, and the SAT, GRE, and other I.Q.
based tests are valid indicators of intelligence and/or knowledge.
- Biceps curls, on the other hand, are not valid indicators of speed or leg strength.
- Before a test can be valid, it must be reliable and objective. Biceps curls are valid for arm strength, so they must be reliable; they are not valid for speed or leg strength, yet they are still reliable measures.

THE NEW DEFINITION
- Validity applies at the level of test score interpretation: it is the interpretations based on the test scores that are valid, not the test itself.
- For example, with push-ups, it is the interpretation of the amount of strength based on push-up scores that is valid.
- Under either definition, reliability must be established before validity can exist.
- This chapter presents an overview of validity, not comprehensive coverage.

VALIDITY AND NORM-REFERENCED TESTS
- Validity must be discussed in terms of interpretations based on scores from norm-referenced or criterion-referenced tests.
- Validity exists when an instrument measures what it is supposed to measure.
- We cannot validate a test itself; we collect data (measure) to validate the interpretations made from the measurements.
- An example is skinfold measurement used to estimate percent body fat:
  o To interpret the percent fat estimate from skinfolds, we need validity evidence to support our conclusions.
  o If we say that a person has 40% body fat based on skinfold measurements, we need to establish a relationship between skinfolds and percent body fat (Chapter 9).
  o If we say that the person with 40% body fat has too much fat and is at risk for health problems, we need to establish a relationship between health and percent body fat.
- In addition to being reliable and objective, a valid test is also relevant.
  o Relevant means that the test suits the situation in which it is being used. For example, the biceps curl test is not a valid indicator of speed, so it is also not relevant to the measurement of running speed.
- Validity has two major components: relevance and reliability.
- There are three
types of relevance: content-related, criterion-related, and construct-related. These lead to four types of validity: logical, concurrent, predictive, and construct.

Types and Estimation
- There are three basic types of validity evidence: content validity evidence, criterion validity evidence, and construct validity evidence.
- Several sources suggest that there is really only one type of validity, construct validity; all others are simply different methods of estimating construct validity.
- Under the older definition of validity, discussions centered on different types of validity; currently, we discuss types of validity evidence.
- Validity can be estimated either logically or statistically.
  o In behavioral and education-related research, the trend is toward logical validity.
  o In the sciences, the statistical approach is still the preferred method.
- The commonly used approaches to establishing validity evidence are described below.

Content Validity Evidence
- This is a logical approach, or logical type of evidence, and has typically been referred to as logical validity.
- It is determined by examining what is supposed to be measured and what is actually being measured.
  o For example, a knowledge test gives scores that provide valid interpretations when the questions are reliable, are based on the material taught, and are based on the objectives of the instructional unit.
- It used to be known as “content validity”: what is the content of the instrument?
- Sometimes this type of evidence is easy to assess (a 100-yd dash for speed, a lifting test for strength, etc.); other times it is a little more difficult, and a panel of experts may be needed to judge all aspects of the instruction or of what to include.
- The AERA suggests that several types of validity evidence (content-related, criterion-related, and construct-related) be presented together rather than relying on content validity evidence alone.
- This type of validity evidence rests solely on subjective interpretation of the material included in the instruction.

Criterion-related Validity Evidence
- This is determined through the
correlation between the scores for a test and a specified criterion.
- This is a more traditional procedure for examining validity evidence.
- The correlation coefficient is the validity coefficient and is interpreted as an estimate of the validity of interpretations based on the test scores.
- The closer the validity coefficient is to 1, the stronger the validity evidence; the lower the validity coefficient, the weaker the criterion-related validity evidence.
- Remember from Chapter 2 that a correlation coefficient can range from -1 to +1; a criterion-related validity coefficient should not be negative.
- The most important aspect of this type of validity evidence is the selection of the criterion: the better the criterion, the better the validity evidence (which does not necessarily mean a higher validity coefficient).

Expert Ratings
- One criterion measure is the subjective ratings of one or more expert judges.
- Using two or more judges increases reliability, because the ratings do not depend on a single judge.
- High objectivity among judges is crucial.
- Ratings usually take the form of a group ranking or a point scale.
  o For group rankings, each judge ranks the individuals from best to worst. This works well as long as the groups are of manageable size; once the groups become too large, a point scale is preferred.
  o On a point scale, each person is assigned a point value. Typically, a point scale yields stronger validity evidence.

Tournament Standings
- This is a second criterion.
- Compute a correlation (the Pearson product-moment correlation coefficient or another appropriate correlation from Chapter 2) between the test scores and the tournament standings.
- This assumes that tournament standings are good indicators of performance.
- The NCAA basketball tournament is a good example.

Predetermined Criteria
- The criterion is a score from a known and well-accepted instrument.
- The validity evidence is shown by the correlation between the scores from the test being examined and
the criterion.
- This is a good approach when trying to improve the measurement of something.
- Ideally, the criterion should be what is widely accepted as the “gold standard,” the best test available for that particular measurement.
  o If no gold standard is available, choose the best criterion available for the situation; this choice will affect the validity evidence.
- Three examples are body composition, fitness assessment (oxygen consumption), and physical activity.
  o For body composition, the “gold standard” is hydrostatic weighing or DXA. These tests are expensive and not practical if you need to test many individuals or have limited resources. Skinfold measurements for estimating percent body fat were developed using a predetermined criterion, hydrostatic weighing.
  o For oxygen consumption, the “gold standard” is a maximal treadmill test with a metabolic analysis system. This test is also expensive, may be uncomfortable for the subjects, and may not be practical for testing a large number of subjects. Field tests (mile run, 12-minute run, 20-meter shuttle run) and non-exercise tests were developed using a predetermined criterion, the maximal treadmill test.
  o For physical activity, there is no current “gold standard.” Methods of measuring physical activity include motion sensors (accelerometers, pedometers), self-report questionnaires, direct observation, and physiological measurements (doubly labeled water). Because there is no “gold standard,” the validity evidence obtained from any of these methods is compromised.

Construct Validity Evidence
- Content and criterion-related validity approaches are established procedures for obtaining validity evidence.
- The construct approach is newer and more complex.
  o It was first introduced in 1954 by the APA and has been used extensively in psychology.
  o Its use in the health sciences is increasing.
- A construct is something
that is abstract, not something that is directly measurable; attitudes, personality, and motivation are examples of abstract constructs.
- This approach is based on the scientific method:
  1. the idea that one of these constructs can be measured by an instrument
  2. a theory developed to explain the construct and the test(s) that measure it
  3. statistical analyses done to confirm or fail to confirm the theory
- Construct validity evidence is determined by the extent to which the theoretical and statistical information support each other.
- An example from the book is a test of swimming ability:
  o The question to answer is: do individuals who score higher on the test have higher swimming ability?
  o Administer the test to intermediate and advanced swimmers. Because advanced swimmers are better swimmers, we have construct validity evidence if the advanced swimmers have a higher mean score than the intermediate swimmers.
  o You would test this using an independent-samples t-test (Chapter 2).
  o We need to define the population for testing, and all individuals tested should have similar characteristics; this will strengthen the validity evidence.
- From a more abstract perspective, a frequently used statistical procedure is factor analysis, which identifies the related constructs underlying a measurement instrument.

Validation Procedure Example
- We have learned:
  o that all types of validity evidence could be considered some form of construct validity evidence
  o that there are several indicators of validity evidence
  o that the types of validity evidence are not equally strong
- When conducting a validity study, all forms of validity evidence presented should be examined in relation to the study you are considering.
- A good model is a validation study published in a research journal or a book chapter on a specific type of validity.
- One example in your text refers to a chapter written by two of the co-authors of the measurement text. The
reference to the chapter is: Mahar, M. T., and D. A. Rowe. 2002. Construct validity in physical activity research. In G. J. Welk (Ed.), Physical activity assessment for health-related research. Champaign, IL: Human Kinetics.
- The chapter centers on the measurement of physical activity (Chapter 12).
- It is organized into three stages of construct validation and the type of validity evidence needed at each stage:
  o definitional evidence stage
  o confirmatory evidence stage
  o theory testing stage
- The Definitional Evidence Stage:
  o This stage examines the extent to which the operational domain of the test represents the theoretical domain of the construct.
  o A content (logical) approach is used to gather this type of evidence.
  o In this stage, we examine the properties of the test rather than attempting to interpret the test scores.
  o The construct must be defined before the test to measure it is developed. Depending on the type of physical activity, there can be several definitions of physical activity; one must be chosen before developing the test.
  o The definition we select will ultimately affect our validity evidence: depending on the definition, we can under-represent or over-represent the physical activity level of the group.
  o This can happen because of factors outside the boundaries of the definition, some of which may be beyond the researcher's control; these factors need to be considered when selecting the final definition.
- The Confirmatory Evidence Stage:
  o This stage attempts to confirm the definition of the construct from stage one.
  o This can be accomplished through any of the methods discussed under criterion-related or construct validity evidence.
  o The data analysis approaches include factor analysis for internal evidence, a multitrait-multimethod matrix (MTMM) for convergent (similarity) and discriminant (difference) evidence, correlational techniques for criterion-related evidence,
and known-difference methods.
  o We discussed the third and fourth methods previously; the first two are advanced statistical techniques.
- The Theory Testing Stage:
  o The theory for the measured construct is tested using the scientific method.
  o This is an important part of a well-conducted validation procedure.
  o At this stage, several other constructs influence the construct being measured; these are referred to as determinants (correlates).
  o In turn, the construct itself influences other constructs; these are referred to as outcomes of the construct.
  o At this stage, we analyze both the determinants and the outcomes of the construct, and the results of these analyses provide the validity evidence.

Factors Affecting Validity

Selected Criterion Measure
- The magnitude of the validity coefficient can be affected by several factors.
- One of the most important influences is the selection of the criterion measure.
- Several possible criterion measures have been suggested, and it is reasonable to expect each of them to yield different validity evidence in different situations, although the results should be close.

Characteristics of the Individuals Tested
- Validity evidence does not necessarily transfer across different groups.
- Specific validity evidence applies only within the defined group in which it was obtained.
- Generalizing validity evidence to other groups can be acceptable, but the evidence should also be tested in those groups.

Reliability
- The validity coefficient is directly related to the reliability coefficients of the measures involved.
- The maximum validity coefficient can be defined as:

$$r_{xy,\max} = \sqrt{r_{xx}\, r_{yy}}$$

  where $r_{xy}$ is the correlation coefficient between the test and the criterion (the validity coefficient), and $r_{xx}$ and $r_{yy}$ are the reliability coefficients of X and Y, respectively.

Objectivity
- Objectivity is also called rater reliability.
- If two different people cannot agree on a score, the outcome lacks objectivity.
- This reduces the validity coefficient and the validity evidence.

Lengthened Tests
- Reliability increases as a test
increases in length, and validity will be positively influenced by such changes.

Size of the Validity Coefficient
- No rigid standards exist for the size of the validity coefficient.
- Depending on the situation, 0.90 may be set as desirable, while 0.80 could be set as a minimum.
- Sometimes lower coefficients are acceptable, for instance if no other testing is available or you are developing a model to predict performance.

VALIDITY AND THE CRITERION SCORE

Selecting the Criterion Score
- Remember that a criterion score is the score used to indicate a person's ability.
- Unless reliability is perfect, the criterion score is a better indicator of ability if more than one trial is used.
- The criterion score must be selected on the basis of reliability and validity.

The Role of Preparation
- Preparation is an important determinant of the validity of a criterion score; better preparation leads to higher validity coefficients.
- You get a better indicator of true ability when the individuals being tested fully understand what is expected of them.
  o For performance tests, this involves a practice trial.
  o For written tests, this involves detailed instructions.

VALIDITY AND CRITERION-REFERENCED TESTS
- The definition of validity for criterion-referenced tests is the same as for norm-referenced tests, but the techniques used to estimate the validity coefficient are different.
- Remember that with criterion-referenced tests, we classify individuals as yes/no, pass/fail, etc.
- One technique is known as domain-referenced validity.
  o The domain is the behavior or ability the test is supposed to measure.
  o It is a form of logical validity: the test is valid if it measures what it is supposed to measure.
- Decision validity, another technique, is based on the accuracy of the test's classifications. Decision validity (C) is calculated as:

$$C = \frac{A + D}{A + B + C + D}$$

  where A, B, C, and D are the cell counts of the double-entry classification table (Table 4.2); A and D are the correct classifications:

                                   Test Classification
                                   Proficient     Nonproficient
  Actual           Proficient          A                B
  Classification   Nonproficient       C                D

- Using the Table 4.2 data (A = 40, B = 5, C = 10, D = 20), decision validity is:

$$C = \frac{40 + 20}{40 + 5 + 10 + 20} = \frac{60}{75} = .80$$

- The closer to 1, the better; at a minimum, decision validity should be .60 (60%).
- The phi coefficient, computed from the same double-entry classification table, is another validity estimate; it is typically lower than the decision validity estimate. It is calculated as:

$$\phi = \frac{AD - BC}{\sqrt{(A + B)(C + D)(A + C)(B + D)}}$$

- Using our example:

$$\phi = \frac{(40)(20) - (5)(10)}{\sqrt{(40 + 5)(10 + 20)(40 + 10)(5 + 20)}} = \frac{750}{1299.04} = .58$$

- Values of phi range from -1.0 to 1.0 and are interpreted the same way as correlation coefficients.
- Because the classification system is dichotomous, there is less variability, which leads to lower values than the decision validity estimate.
- The classification validity of criterion-referenced standards for youth health-related fitness tests could be investigated to determine the accuracy of classification; this would be a type of concurrent validity evidence (in the present).
- We could also investigate whether these children maintain the same fitness levels as they enter adulthood; this would be a type of predictive validity evidence (in the future).
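The decision validity and phi calculations for the Table 4.2 data can be checked with a short script. This is a minimal Python sketch, assuming the cell layout of the double-entry classification table (A and D are the correct classifications, B and C the misclassifications); `decision_validity` and `phi_coefficient` are hypothetical helper names, not functions from the text.

```python
import math


def decision_validity(a: int, b: int, c: int, d: int) -> float:
    """Proportion of correct classifications: (A + D) / (A + B + C + D)."""
    total = a + b + c + d
    if total == 0:
        raise ValueError("the classification table is empty")
    return (a + d) / total


def phi_coefficient(a: int, b: int, c: int, d: int) -> float:
    """Phi for a 2 x 2 table: (AD - BC) / sqrt((A+B)(C+D)(A+C)(B+D))."""
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    if denominator == 0:
        # An empty row or column leaves phi undefined.
        raise ValueError("phi is undefined when a row or column total is 0")
    return (a * d - b * c) / denominator


# Table 4.2 data: A and D are correct classifications, B and C are errors.
A, B, C, D = 40, 5, 10, 20
print(f"decision validity = {decision_validity(A, B, C, D):.2f}")  # 0.80
print(f"phi = {phi_coefficient(A, B, C, D):.2f}")  # 0.58
```

Note that phi uses the off-diagonal cells (B and C) in its numerator, so it penalizes misclassification more sharply than decision validity does, which is consistent with the lower value.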
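For criterion-related validity evidence, the validity coefficient is the correlation between test scores and criterion scores, and the reliability of both measures caps how high that coefficient can be (the square root of r_xx times r_yy). The Python sketch below computes a Pearson product-moment correlation by hand and that ceiling. The skinfold and hydrostatic-weighing numbers and the reliabilities of .81 and .64 are made up purely for illustration; they are not data from the text, and the function names are hypothetical.

```python
import math
from statistics import mean


def validity_coefficient(test_scores, criterion_scores):
    """Pearson product-moment correlation between test and criterion scores."""
    if len(test_scores) != len(criterion_scores) or len(test_scores) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx, my = mean(test_scores), mean(criterion_scores)
    sxy = sum((x - mx) * (y - my) for x, y in zip(test_scores, criterion_scores))
    sxx = sum((x - mx) ** 2 for x in test_scores)
    syy = sum((y - my) ** 2 for y in criterion_scores)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when all scores are equal")
    return sxy / math.sqrt(sxx * syy)


def max_validity(r_xx: float, r_yy: float) -> float:
    """Upper bound on the validity coefficient: sqrt(r_xx * r_yy)."""
    return math.sqrt(r_xx * r_yy)


# Hypothetical percent-fat values: skinfold estimate vs. hydrostatic weighing.
skinfold = [12.0, 18.5, 25.0, 30.5, 22.0, 15.5]
hydrostatic = [13.5, 17.0, 27.0, 31.0, 20.5, 16.0]
print(f"validity coefficient r_xy = {validity_coefficient(skinfold, hydrostatic):.2f}")

# Hypothetical reliabilities of .81 (test) and .64 (criterion).
print(f"maximum possible r_xy = {max_validity(0.81, 0.64):.2f}")  # 0.72
```

The ceiling shows why an unreliable criterion limits the validity evidence no matter how good the test is: with a criterion reliability of .64, even a perfectly reliable test could not exceed a validity coefficient of .80.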
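For the swimming-ability example under construct validity evidence, the known-group comparison can be sketched as a pooled-variance (Student's) independent-samples t-test. This Python sketch uses hypothetical scores for the advanced and intermediate groups; `independent_t` is a hypothetical helper name. In practice you would compare the resulting t against a critical value from a t table (Chapter 2) or use a statistics package.

```python
import math
from statistics import mean, variance


def independent_t(group1, group2):
    """Student's independent-samples t-test with pooled variance.

    Returns (t, degrees_of_freedom); group1 is the group expected to score higher.
    """
    n1, n2 = len(group1), len(group2)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least 2 scores")
    pooled_var = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(group1) - mean(group2)) / standard_error, n1 + n2 - 2


# Hypothetical swimming-test scores for the two known groups.
advanced = [8, 9, 7, 9, 8]
intermediate = [5, 6, 4, 6, 5]
t, df = independent_t(advanced, intermediate)
print(f"t = {t:.2f}, df = {df}")  # t = 5.67, df = 8
```

A large positive t supports the construct validity evidence (advanced swimmers score higher); a t near zero or negative would fail to support it.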