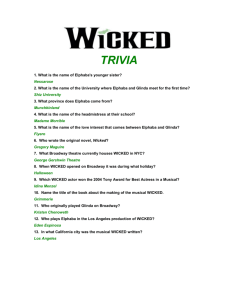

1.3 Wicked problems

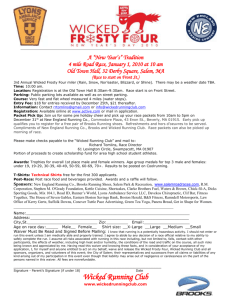
