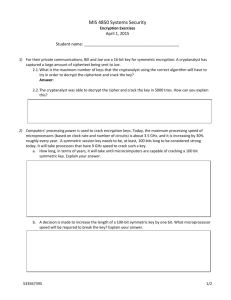

Experiment 4 - Percepts and Concepts Laboratory
