Human Body/Head Orientation Estimation and Crowd Motion Flow Analysis From a Single Camera

Ovgu Ozturk

June 2010, Tokyo

The University of Tokyo

Supervisor: Kiyoharu Aizawa

Co-supervisor: Toshihiko Yamasaki

Acknowledgments

It has been a long way and there were really tough times, but I had my friends beside

me whenever I needed them. So the deepest appreciation goes to those special heroes in

various parts of my life in Japan.

I would like to express my deepest gratitude to my supervisor, Prof. Kiyoharu Aizawa, for his patient guidance, insightful comments and valuable advice throughout this study. He provided a peaceful atmosphere, and his considerateness helped us to overcome obstacles and achieve our goals.

My sincere thanks and appreciation go to my co-supervisor, Dr. Toshihiko Yamasaki, for his diligence, his extensive knowledge, and the gentle atmosphere he created by saying “good morning” every day. This thesis would not have been possible without his support in a number of ways.

Dr. Chaminda de Silva was there from the beginning till the end with his continuous

support. I am heartily thankful to him for sharing his experiences about life and research

with me.

I am indebted to many of my lab colleagues for their support during the projects and experiments, and in dealing with life in Japan. I enjoyed the time spent with them.

My lasting appreciation and gratitude go to the Japanese Government for providing me with a MEXT scholarship to pursue my education in Japan.

With deepest love and gratitude, I would like to thank my family, the most precious in my life.

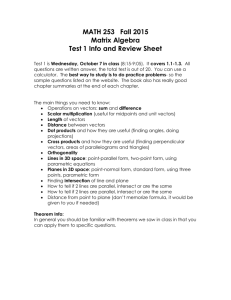

Table of Contents

List of Figures

List of Tables

Abstract

List of Publications

Introduction

1.1 Motivation

1.2 Objectives

1.3 Organization of the Thesis

Human Tracking and Body/Head Orientation Estimation

2.1 Introduction

2.1.1 Related Work

2.2 System Overview

2.3 Human Tracking by using Particle Filters

2.4 Human Body/Head Orientation Estimation Algorithm

2.6 Experimental Results

2.7 Conclusions and Future Work

Dominant Motion Flow Analysis In Crowds

3.1 Introduction

3.2 System Overview

3.3 SIFT Motion Flow Vector Generation

3.4 Hierarchical Clustering of Local Motion Flow Vectors

3.5 Constructing Global Dominant Motion Flows from Local Motion Flows

3.6 Experimental Results

3.7 Discussion and Conclusions

Future Footsteps: An intelligent interactive system for public entertainment

4.1 Introduction

4.1.1 Related Work

4.2 System Overview

4.2.1 System Architecture

4.2.2 Calibration

4.3 Real-time Tracking of Multiple Humans

4.3.1 Background Subtraction and Blob Extraction

4.3.2 Association of Blobs

4.4 Analysis of Tracking Results and Visualization of Footsteps

4.4.1 Prediction of Future Footsteps

4.4.1 Visualization of Future Footsteps

4.5 Experimental Results

4.5.1 Results from Various Situations

4.5.2 User Study

4.7 Conclusions

4.8 Future Work

Conclusions

Discussions and Future Work

References

List of Figures

Figure 1. An example view from a market place.

Figure 2. An example view from a street

Figure 3. Visual focus attention of people in a market place

Figure 4. Overview of Body/Head Orientation Estimation System

Figure 5. Edge and color orientation histograms.

Figure 6. Grouping of head-shoulder contours

Figure 7. SIFT motion flow vectors around head region.

Figure 8. Addition of SIFT motion flow vectors.

Figure 9. Tracking of a person and estimation of head/body orientation.

Figure 10. Image patches of various head-shoulder regions.

Figure 11. Experimental results.

Figure 12. Experimental results of challenging cases.

Figure 13. Structured/unstructured crowd scene examples.

Figure 14. System Overview

Figure 15. SIFT motion flow vector.

Figure 16. SIFT motion flow vectors in a given image region.

Figure 17. SIFT motion flows for 100 frames and 400 frames

Figure 18. Motion flow map and local regions for the entire scene.

Figure 19. Hierarchical clustering of motion flow vectors.

Figure 20. Dividing into local regions and creating an orientation histogram for each.

Figure 21. Hierarchical Clustering Steps

Figure 22. Local regions and motion flow maps.

Figure 23. Local dominant motion flows.

Figure 24. Neighborhood schema for local dominant flow vectors.

Figure 25. Connecting local flows to obtain global flows.

Figure 27. Global dominant motion flows.

Figure 26. Input data

Figure 28. Combining global flows one step more.

Figure 29. Ground truth.

Figure 30. Depicted future footstep of a girl.

Figure 31. General view of the area from the camera.

Figure 32. Placement of the system in the airport.

Figure 33. Inside of the box.

Figure 34. The placement of the camera and the box.

Figure 35. System architecture

Figure 36. The calibration step is displayed.

Figure 37. Blob extraction: Example input scenes and enlarged view of a partial area in the input scene.

Figure 38. Blob extraction for a child.

Figure 39. Example extracted regions of adults

Figure 40. Example extracted regions of children

Figure 41. Blob tracking during three consecutive frames.

Figure 42. Association of blobs stored in the data structure.

Figure 43. Gradually disappearing images of a foot.

Figure 44. Various foot shapes used in the system.

Figure 45. Experimental results: visualization of future footsteps for various people.

Figure 46. Experimental results: various future footsteps.

Figure 47. Experimental results: various future footsteps.

Figure 48. Visualization of mostly followed paths from top-view

Figure 49. User Reaction: a woman is jumping right and left to play with the displayed footsteps.

Figure 50. User Reaction: a little girl is exploring and trying to step on the footsteps.

List of Tables

Abstract

In the last few decades, the automation of descriptive and statistical analysis of human behavior has become a significant research topic. Owing to advances in video technology, many researchers have focused on detecting and analyzing human motion from video cameras. They have tried to develop intelligent systems that contribute to automatic control and alarm systems and to automatic data evaluation and processing. In this respect, analysis of human behavior is important for many applications, such as marketing, social behavior modeling, security systems and human-robot interaction. To build such systems, the main tasks are detecting humans in a given scene, counting them, tracking their motion and analyzing their motion trajectories. In addition, recent studies try to understand human face and body gestures, such as smiling, walking, jogging and waving a hand. So far, there has been significant progress in detecting humans, tracking their motion in public spaces and understanding their gestures.

To analyze a given scene in more detail, the next step is to measure people’s focus of attention, which remains an unsolved problem. The visual focus of attention of humans is defined as the direction they are heading in or the direction they are looking in during their motion. Humans show their attention by walking towards that direction or by turning their head to that direction. The paths they walk can give us information about their interests in the environment. Hence, the orientation of their body and head can give us a hint about their visual focus of attention. In crowd scenes, the most common motion paths can give us information about the popularity of places in the environment.

Currently, a large number of studies try to solve the body/head orientation estimation problem by using multiple cameras or multiple sensors, or by placing markers on the body. These approaches are often too impractical or expensive to deploy in common public places. Our aim is to extract as much useful information as possible for human motion analysis in a given public scene from a single camera. This is very challenging because of the articulation of the human body, the variety of poses and the limited data available from one view. On the other hand, by using only a single camera, we can build portable, low-cost systems with less complexity.

In our research we focus on two major problems. First, we studied the estimation of the visual focus of attention of people and developed a system that tracks people and estimates their body and head orientation. Second, we analyzed crowd scenes and proposed a method to calculate the dominant motion flows that can handle very complex situations. Later, as an application of our work, we presented an interactive human tracking system as part of a digital art project that was exhibited in Haneda Airport in Tokyo for one month.

The “Tracking of Humans and Estimation of Body/Head Orientation” part addresses the problem of determining the body and head orientation of a person while tracking the person in an indoor environment monitored by a single top-view camera. We capture the top view of the scene from a very high place, so the resolution of the data is low. We estimate the body/head orientation of a person by analyzing the head-shoulder contour and by using the motion flows of distinctive image features (SIFT features) around the head region. Experimental results show the successful application of our method, with a maximum error of five degrees.

Detecting dominant motion flows is one of the major problems in understanding the content of a crowded scene. In our work, we focus on analyzing crowd motion for both structured and unstructured crowds, where the motion of the people is very complex and unpredictable. We propose a hierarchical clustering of instantaneous motion flow vectors, accumulated over a long time, to find the most frequently followed patterns in the scene. Experimental results demonstrate the successful extraction of dominant motion flows in challenging real-world scenes.

An intelligent interactive public entertainment system, which employs multiple-human tracking from a single camera, has been developed. The proposed system robustly tracks people in an indoor environment and displays their predicted future footsteps in front of them in real time. To evaluate its performance, the proposed system was exhibited during a public art exhibition in an airport. The system successfully tracked multiple people in the environment and displayed the footstep animations in front of them. Many people participated in the exhibition; they showed surprise, excitement and curiosity, and they tried to control the display of the footsteps by making various movements.

List of Publications

Book Chapter

1. 未来の足跡「Footprint of Your Future」in 空気の港「Digital Public Art in Haneda

Airport」, O. Ozturk, T. Matsunami, M.Togashi, K.Sawada, T. Ohtani, Y. Suzuki, T.

Yamasaki, K. Aizawa published by 美術出版社

Journal Articles

1. "Real-time tracking of multiple humans and visualization of their future footsteps in

public indoor environments", O. Ozturk, Y. Suzuki, T. Yamasaki, K. Aizawa,

Multimedia Tools and Applications, Special Issue on Intelligent Interactive

Multimedia Systems and Services, Springer. (submitted on March 11)

2. “Human Tracking and Visual Focus of Attention Estimation From a Single Camera

In Indoor Environments”, O. Ozturk, T. Yamasaki, K. Aizawa, EURASIP Journal on

Image and Video Processing (to be submitted)

Reviewed Conference Papers

1. “Detecting Dominant Motion Flows In Unstructured/structured Crowd Scenes”, O. Ozturk, T. Yamasaki, K. Aizawa, International Conference on Pattern Recognition, ICPR2010, Istanbul, Turkey.

2. “Can you SEE your “FUTURE FOOTSTEPS?”, O. Ozturk, T. Matsunami, Y. Suzuki, T. Yamasaki, K. Aizawa, Proceedings of International Conference on Virtual Reality, VRIC2010, April 7-11, 2009, Laval, France.

3. “Tracking of Humans and Estimation of Body/Head Orientation from Top-view

Single Camera for Visual Focus of Attention Analysis”, O. Ozturk, T. Yamasaki, K.

Aizawa, THEMIS2009 Workshop held within ICCV 2009, Sept 27-Oct 4.

4. “Content-aware Control for Efficient Video Transmission of Wireless Multi-camera

Surveillance Systems”, O. Ozturk, T. Yamasaki, K. Aizawa, PhD Forum,

ICDSC2007, September, Vienna.

5. “Human Visual Focus of Attention Analysis by Tracking Head Orientation with

Particle Filters”, O. Ozturk, T. Yamasaki, K. Aizawa, International Conference on

Advanced Video and Signal-based Surveillance, AVSS2010, Boston, USA. (to be submitted March 26)

Non-reviewed Conference Papers

1. “Multiple Human Tracking and Body Orientation Estimation by using Cascaded

Particle Filter from a Single Camera”, O. Ozturk, T. Yamasaki, K. Aizawa, P-3-23,

PCSJ/IMPS2009, Oct. 7- Oct. 9, 2009, Shizuoka.

2. “Content-aware Video Transmission for Wireless Multi-camera Surveillance”, O.

Ozturk, T. Hayashi, T. Yamasaki, K. Aizawa, P-5-02, PCSJ/IMPS2007, Oct. 31- Nov.

2, 2007, Shizuoka.

3. “Content-aware Spatio-Temporal Rate Control of Video Transmission for Wireless

Multi-camera Surveillance”, O. Ozturk, T. Yamasaki, K. Aizawa, IEICE2008,

D-11-20, March 18-21, Kita-Kyushu.

Chapter 1

Introduction

Analysis of human behavior in public places is an important topic that has attracted much attention from researchers, designers, companies and organizations. It is critical to know how humans move in public spaces, how they react to their surroundings and how they show attention to objects of interest. For example, recognizing pedestrian behavior at bus stops, crossings or stations is useful for security. Observing people who look at bulletin boards or commercial screens, or customers who walk around market stands, can provide information about recent trends, marketing strategies and effective advertising methods. Intelligent human-computer interfaces can be developed by analyzing the user’s body motions. Studying the general characteristics of the behavior of humans or crowds can provide a measure for distinguishing normal from abnormal situations and for detecting emergencies.

In this respect, analyzing human motion can give us useful feedback for building autonomous intelligent environments and systems that improve the quality of life in many different ways. One of the most useful and efficient ways to understand human motion is to use image and video processing methods. Video cameras are now installed everywhere and have become a part of everyday life. There are security cameras both indoors and outdoors, and many people use web cameras with their computers. It is now simple and cheap to obtain image or video data of a person or an environment. Hence, tracking humans and understanding their motion via computer vision techniques is of great service to many.

1.1 Motivation

Recently, there has been significant progress in computer vision in analyzing scenes captured by video cameras and extracting useful information for automatic decision making. Video cameras are installed in markets, stations and shops, and cameras are mounted on computers, automatic machines and robots. An image or a video of the scene can be captured and then processed to acquire meaningful information.

There is a considerable body of research in this area. It is now possible to detect humans in an image and to recognize different objects. Furthermore, there are algorithms to count humans and even to track their motion under various circumstances. Given an image of a scene, the presence of people and their identity can be extracted. Their body pose and the type of their motion can be analyzed to some extent. There are systems that detect simple actions, such as walking, running, raising hands or kneeling down. Other systems track moving people and find their motion paths.

As a next step in scene analysis, more detailed analysis of human motion remains unsolved. For example, measuring people’s focus of attention is still an open problem. In this regard, our research focuses on two major problems. The first is to estimate the visual focus of attention of people while tracking them. The second is to detect the dominant motion flows in crowd scenes.

The visual focus of attention of humans is defined as the direction they are heading in or the direction they are looking in during their motion. Depending on the camera view, this definition can vary. Considering the general view of a shopping area, we can say that humans are attracted by their destination or by the objects around them. They show their attention by walking towards that direction or by turning their head to that direction. The paths they walk can give us information about their interests in the environment. Hence, the orientation of their body and head can give us a hint about their visual focus of attention. In crowd scenes, it is impossible to investigate each person’s movement in detail or to acquire enough information from each person to evaluate their body/head orientation. For crowd scenes, the most common motion paths can give us information about the popularity of places in the environment.

In our research, we formulate the problem as estimating the visual focus of attention of people from a single camera. Currently, a large number of studies try to solve the same problem by using multiple cameras or multiple sensors, or by placing markers on the body. These approaches are often too impractical or expensive to deploy in common public places. On the other hand, by using only a single camera, we can build portable, low-cost systems with less complexity. Figures 1 and 2 give example images of scenes that represent our problem setting.

1.2 Objectives

In our work, we focused on two major problems. First, we studied the estimation of the visual focus of attention of people and developed a system that tracks people and estimates their body and head orientation. Second, we analyzed crowd scenes and proposed a method to calculate the dominant motion flows that can handle very complex situations. Later, as an application of our work, we participated in a digital art project that was exhibited in Haneda Airport in Tokyo for one month. There, we developed a real-time multiple-human tracking system that visualizes people’s predicted future footsteps during their motion. Below, the main characteristics and major contributions of each part of the research are introduced briefly.

Tracking of Humans and Estimation of Body/Head Orientation: This part addresses the problem of determining the body and head orientation of a person while tracking the person in an indoor environment monitored by a single top-view camera. The challenge is that human appearance varies widely with the position relative to the camera and with pose articulation. In this work, a two-level cascaded particle filter approach is introduced to track humans. Color cues are used at the first level, and edge-orientation histograms are used to support the tracking at the second level. To determine body and head orientation, a combination of Shape Context and SIFT features is proposed. Body orientation is calculated by matching the upper region of the body with predefined shape templates, which gives the orientation to within a range of pi/8. Then, optical flow vectors of SIFT features around the head region are calculated to evaluate the direction and type of motion of the body and head. We present experimental results showing that body and head orientation are successfully estimated. A discussion of various motion patterns and of future improvements for more complicated situations is given.
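As a rough illustration of the two orientation ideas named above, the following Python sketch sums a set of flow vectors into one direction and maps that direction onto sectors of width pi/8. It is only a sketch under stated assumptions: the function names, the use of a plain vector sum and the choice of 16 sectors centred on multiples of pi/8 are illustrative and are not taken from the thesis.

```python
import math

BIN_WIDTH = math.pi / 8   # sector width named in the text
NUM_BINS = 16             # assumption: the full circle split into pi/8 sectors


def mean_flow_angle(flows):
    """Angle in [0, 2*pi) of the summed (dx, dy) flow vectors.

    Returns None when the vectors cancel out and no direction is defined.
    """
    sx = sum(dx for dx, _ in flows)
    sy = sum(dy for _, dy in flows)
    if math.hypot(sx, sy) < 1e-9:
        return None
    return math.atan2(sy, sx) % (2 * math.pi)


def orientation_bin(angle):
    """Index of the pi/8-wide sector centred on the nearest multiple of pi/8."""
    return int(round(angle / BIN_WIDTH)) % NUM_BINS
```

For example, `orientation_bin(mean_flow_angle(flows))` gives a coarse direction label for the flow vectors collected around one head region, and a `None` angle signals that the features did not move consistently.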

Detecting dominant motion flows in crowds: Detecting dominant motion flows in crowd scenes is one of the major problems in video surveillance. This is particularly difficult in unstructured crowd scenes, where the participants move randomly in various directions. In our work, we present a novel method that uses the flow vectors of SIFT features to calculate the dominant motion flows in both unstructured and structured crowd scenes. SIFT features can represent the characteristic parts of objects, allowing robust tracking under non-rigid motion. First, flow vectors of SIFT features are calculated at certain intervals to form a motion flow map of the video. Next, this map is divided into equally sized square regions, and in each region the dominant motion flows are estimated by clustering the flow vectors. Then, the local dominant motion flows are combined to obtain the global dominant motion flows. Experimental results demonstrate the successful application of the proposed method to challenging real-world scenes.
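The region-wise step described above can be sketched in a few lines of Python. This is a simplified stand-in and not the method of the thesis: instead of hierarchical clustering, it takes the peak of an orientation histogram in each square cell, and the function name, the `(x, y, dx, dy)` input layout and the default of eight direction bins are assumptions made for illustration.

```python
import math
from collections import defaultdict


def local_dominant_flows(vectors, cell_size, num_bins=8):
    """Estimate one dominant direction per square cell of the flow map.

    vectors: iterable of (x, y, dx, dy) flow vectors in image coordinates.
    Returns {(col, row): (angle, count)}, where angle is the centre of the
    most populated direction bin and count is the number of votes it received.
    """
    bin_width = 2 * math.pi / num_bins
    hist = defaultdict(lambda: [0] * num_bins)
    for x, y, dx, dy in vectors:
        if dx == 0 and dy == 0:
            continue  # a stationary feature carries no direction
        cell = (int(x // cell_size), int(y // cell_size))
        angle = math.atan2(dy, dx) % (2 * math.pi)
        hist[cell][int(angle // bin_width) % num_bins] += 1

    result = {}
    for cell, counts in hist.items():
        best = max(range(num_bins), key=counts.__getitem__)
        result[cell] = ((best + 0.5) * bin_width, counts[best])
    return result
```

A histogram peak keeps only one direction per cell, whereas clustering can keep several competing flows in the same region, which matters for unstructured crowds.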

An intelligent interactive system for public entertainment: In this work, an interactive entertainment system that employs multiple-human tracking from a single camera is presented. The proposed system robustly tracks people in an indoor environment and displays their predicted future footsteps in front of them in real time. The system is composed of a video camera, a computer and a projector. There are three main modules: tracking, analysis and visualization. The tracking module extracts people as moving blobs by using an adaptive background subtraction algorithm. Then, the location and orientation of their next footsteps are predicted. The future footsteps are visualized by a high-paced continuous display of foot images at the predicted location to simulate the natural stepping of a person. To evaluate its performance, the proposed system was exhibited during a public art exhibition in an airport. People showed surprise, excitement and curiosity, and they tried to control the display of the footsteps by making various movements.
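The prediction step can be pictured with a minimal Python sketch. It assumes constant velocity between the last two tracked blob centroids, which is an illustrative simplification and not necessarily the prediction rule used in the exhibited system; the function name and the `lead_frames` parameter are hypothetical.

```python
import math


def predict_footstep(track, lead_frames=5):
    """Extrapolate where to draw the next footstep for one tracked person.

    track: list of (x, y) blob centroids, oldest first.
    Returns (x, y, heading) with heading in radians, or None when the
    track is too short or the person is standing still.
    """
    if len(track) < 2:
        return None
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = x1 - x0, y1 - y0
    if vx == 0 and vy == 0:
        return None
    return (x1 + lead_frames * vx, y1 + lead_frames * vy, math.atan2(vy, vx))
```

The heading returned here would set the rotation of the projected foot image, and a larger `lead_frames` value places the footstep further ahead of the person.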

Figure 1. An example view from a market place.

Figure 2. An example view from a street

1.3 Organization of the Thesis

The rest of this thesis is organized as follows. Chapter 2 describes the developed

algorithms to track humans and estimate their body/head orientation. Chapter 3 explains

our algorithm to detect dominant motion flows. Chapter 4 introduces an interactive

application project, “Future Footsteps”, which utilizes real-time multiple-human

tracking. In each chapter, the recent related advances in the field are given in detail. The

developed algorithms are explained and experimental results for various situations are

presented. Additionally, in Chapter 4 user studies about the interactive system are

introduced. Chapter 5 concludes the work presented in the thesis, followed by the

discussions and future improvements summarized in Chapter 6.

Chapter 2

Human Tracking and Body/Head Orientation Estimation

2.1 Introduction

Analysis of human behavior in public places is an important topic that has attracted much attention from researchers, designers, companies and organizations. It is critical to know how humans move in public spaces. So far, a huge amount of research has addressed detecting, counting and tracking humans. However, few studies have gone one step further to detect the visual focus of attention. In this work, we address this relatively untouched problem and focus on tracking humans and estimating their visual focus of attention in indoor environments. More specifically, we would like to know which direction a person is looking in while wandering in an indoor environment. We present a tracking system that keeps track of a person under random motion, and we propose a new method to find the orientation of the body, and moreover the orientation of the head, while tracking the person.

Figure 3. Visual focus attention of people in a market place

Our contribution is two-fold. First, we have developed a two-level cascaded particle filter tracking system to track body motion. Human appearance in the image is modeled as an elliptic region, which is effective and adaptable for tracking the target in any pose seen from any angle. The appearance model of the target is constructed in two ways: the first uses a random color histogram, and the second uses an edge orientation histogram. The color histogram forms the basis for tracking and is calculated at each iteration. The edge orientation histogram is used at intervals and is only updated when necessary. Our second contribution concerns how to calculate the direction of head movements and track the head motion throughout the video frames. We use the Shape Context \cite{Seemann, ShapeContext} approach to detect the basic body orientation and propose an optical flow approach that uses distinctive features, SIFT features \cite{Sift}, around the head region. The displacement of the distinctive features around the head region indicates the local motion. It is then combined with the change in the center of mass to evaluate the overall motion of the body and head.

This chapter describes our approach and gives initial experimental results. We discuss various cases, including both successful and insufficient situations, and seek ways to further improve the algorithm. Our work is part of a project in an airport in Tokyo, and our human tracking and orientation estimation system will be used to measure the visual focus of attention of the audience during an art exhibition. For the time being, occlusions are excluded, and humans in the images are assumed not to be carrying big bags on their shoulders, which would disturb the head-shoulder triangle.

The rest of the chapter is organized as follows. The next section gives an overview of the system, and the following sections explain our tracking and orientation estimation algorithms. Experimental results and related discussion are presented in Section 2.6, followed by conclusions and future work in Section 2.7.

2.1.1 Related Work

In the area of computer vision, considerable progress has been made in automatic detection \cite{Wu,Zhang,Sabzmeydani,Zhao,Wojek}, counting \cite{VideoSecurity,Counting} and tracking of humans \cite{Yang,YuanLi,Han}. However, most of the previous efforts have relied on two main groups of constraints: the first is on the motion path and the second is on the body shape. Some researchers \cite{Seemann,VideoSecurity,Counting} used gates or passages where human motion is predictable and linear most of the time. Besides, the majority of the tracking algorithms used constant shape features of the human body \cite{Wu,Sabzmeydani,Counting,Zhao}, such as the $\Omega$ shape of the head and shoulders or a combination of body parts (head, torso, legs) \cite{Wu,Zhao}. However, in general indoor public places such as marketplaces and exhibition areas, where people walk randomly, there is an extensive range of human poses seen from the camera and unpredictable changes in motion direction. Although tracking has been achieved to some extent, estimation of the human body and head orientation while tracking still remains unsolved.

To the best of our knowledge, this work is the first attempt to extract the orientation of both the body and head of wandering humans in a public space by using only video data. Glas et al. \cite{LaserTracking} study a similar problem by combining video data and laser scanner data. Their work extracts the position of the two arms and head from a top-view appearance and finds the orientation of the human body in the scene. Using laser scanners increases the cost and limits the portability of the system. In our work, we use only a single camera and aim to detect not only body orientation but also head orientation, which would not be possible with a laser scanner system as in \cite{LaserTracking}. O. Ba and J. Odobez \cite{Odobez02} explore the head orientation detection problem with single and multiple cameras. They train on various poses of the human head from the front view and combine the detection results from multiple cameras to estimate the head orientation. Matsumoto et al. \cite{Matsumoto} focus on gaze detection from a single face image to find the focus of attention. More advanced research was conducted by Smith et al. \cite{TrackingVFOA}, who used a single camera and computer vision techniques to track the head orientation of people passing in front of an outdoor advertisement.

Our case is more complicated, since we target the general case with motion of the body and head in any direction. We work with data captured from a single top-view camera in an indoor public environment. Since privacy is a critical issue, only top-view capturing is permitted, which yields less personal data and hence higher privacy protection. As a result of top-view capturing, the appearance of the human body changes depending on its position relative to the camera and on the orientation of the body. There is little distinctive data and a wide range of human poses. We define estimating the visual focus of attention as detecting the orientation of the body and following the head movements to find where the person is looking.

2.2 System Overview

We intend to automatically track humans wandering in an indoor environment and analyze their behavior. The environment selected for this work is a marketplace inside an airport. The environment is monitored by a single camera mounted sufficiently high to provide a top view of the scene. Figure 3 shows an example scene from our experiments in the marketplace. The data used in the experiments was captured in HD mode, providing a resolution of 1440x1080 pixels for each frame. The average resolution of a human appearance is 70x90 pixels. In this work, we focus on people who are walking around or standing still and looking around. It is assumed that people do not carry big bags on their shoulders. Occlusion handling and generalization of the approach to various behaviors are left as future work.

Figure 4. Overview of Body/Head Orientation Estimation System

Our system is composed of two main modules and an initialization step, as illustrated in Figure 4. The initialization step is composed of four parts. First, blobs indicating the human regions in the scene are extracted by a background subtraction algorithm. For each blob, color histogram and edge-orientation histogram models are generated to describe the appearance of the target human during the tracking process. Then, the initial body orientation is calculated by using shape context matching. After this, the tracking process starts, and at each frame the target is tracked by a particle filter based on the color-histogram model. At intervals of 10 frames, the displacement of the center of mass of the blob, the change in its area and the optical flow of the SIFT features are observed to evaluate the change in the orientation of the body and head. If an orientation change is detected, the new orientation is estimated, the edge-orientation histogram is updated, and the tracking process starts again for another 10-frame interval. During each tracking interval, the particle filter uses the color histogram at each iteration, and the edge-orientation histogram is used once every three iterations to validate the tracking process.

The Blob Detection, Color Histogram Generation and Edge-orientation Histogram Generation parts of the initialization are described below. The steps of Blob Detection are given in Table 1.

Blob Detection:

1. Use H, S, V color components and take the difference of the input and background image

2. Apply thresholding

3. Majority voting of H, S, V color values

4. Median filtering

5. Morphological opening

6. Find blobs with area larger than a threshold

7. For each blob, assign an ellipse (center, orientation, major axis length, minor axis length)

Table 1. Blob Detection
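Steps 1 to 3 of Table 1 could be sketched roughly as follows. This is only an illustration under stated assumptions: the function name `foreground_mask`, the per-channel threshold values and the "at least two of three channels" voting rule are hypothetical choices, not values taken from this work, and the circular nature of hue is ignored for simplicity. Median filtering, morphological opening and ellipse fitting (steps 4 to 7) are not shown.

```python
def foreground_mask(frame_hsv, background_hsv, thresholds=(10, 40, 40)):
    """Return a binary mask (nested lists of 0/1) of likely foreground pixels.

    frame_hsv, background_hsv: 2D nested lists of (h, s, v) tuples of equal size.
    thresholds: assumed per-channel difference thresholds (hypothetical values).
    """
    mask = []
    for row_frame, row_bg in zip(frame_hsv, background_hsv):
        mask_row = []
        for pixel, bg_pixel in zip(row_frame, row_bg):
            # Steps 1-2: per-channel absolute difference, then thresholding.
            votes = sum(
                abs(a - b) > t for a, b, t in zip(pixel, bg_pixel, thresholds)
            )
            # Step 3: majority voting over the H, S, V decisions (assumed 2 of 3).
            mask_row.append(1 if votes >= 2 else 0)
        mask.append(mask_row)
    return mask
```

In a practical system the remaining steps would typically be delegated to an image processing library; the sketch only makes the voting logic concrete.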

Color Histogram Generation:

Figure 5 shows the color histogram and edge-orientation histogram generation. We use a color histogram of 18 bins in total, with 6 bins for each color component of the RGB color space. For each component, the 0-255 value range is divided equally into 6 bins. A predetermined number (N = 120) of points is chosen randomly inside the elliptic region, and each color component of each point contributes to the corresponding bin with its value.
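A minimal sketch of this histogram construction is given below. It assumes that the pixels inside the elliptic region have already been collected into a list, that each sampled point adds a count of one to the bin of each of its components, and that the histogram is normalized to sum to one; the function name `color_histogram` and these details are illustrative assumptions rather than the exact procedure used here.

```python
import random


def color_histogram(pixels, n_bins=6, n_samples=120, seed=None):
    """Build an 18-bin RGB histogram from randomly sampled points.

    pixels: list of (r, g, b) tuples with integer values in 0..255, assumed to
            be the pixels lying inside the elliptic region.
    Returns a list of 3 * n_bins values: R bins first, then G, then B.
    """
    rng = random.Random(seed)
    hist = [0.0] * (3 * n_bins)
    for _ in range(n_samples):
        point = rng.choice(pixels)
        for channel, value in enumerate(point):
            # Integer arithmetic maps 0..255 onto bins 0..n_bins-1.
            bin_index = value * n_bins // 256
            hist[channel * n_bins + bin_index] += 1.0
    total = sum(hist)
    # Normalization is an assumption; it makes histograms comparable.
    return [h / total for h in hist]
```

Sampling a fixed number of points keeps the cost per candidate region constant, independent of the size of the ellipse.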

Figure 5. Edge and color orientation histograms.

Edge-orientation Histogram Generation:

Sobel edge detection is used. The orientation and strength of the edges are calculated similarly to the method in [Yang]. An edge orientation histogram is defined by dividing the [-pi/2, pi/2] range into 8 bins, and each edge contributes to the corresponding bin in proportion to its strength.
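The following sketch illustrates one way such a strength-weighted histogram could be computed on a grayscale patch. It assumes that the orientation is taken as the arctangent of the ratio of the vertical to the horizontal Sobel response, which lies in [-pi/2, pi/2]; the function name and this particular convention are assumptions for illustration.

```python
import math


def edge_orientation_histogram(gray, n_bins=8):
    """Strength-weighted edge orientation histogram over [-pi/2, pi/2].

    gray: 2D nested list of intensities. Border pixels are skipped.
    """
    height, width = len(gray), len(gray[0])
    hist = [0.0] * n_bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # 3x3 Sobel responses in the horizontal and vertical directions.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            strength = math.hypot(gx, gy)
            if strength == 0:
                continue
            # Orientation folded into [-pi/2, pi/2] (assumed convention).
            theta = math.atan(gy / gx) if gx != 0 else math.pi / 2
            bin_index = min(int((theta + math.pi / 2) / math.pi * n_bins),
                            n_bins - 1)
            hist[bin_index] += strength
    return hist
```

Weighting by strength means that weak, noisy gradients contribute little to the model, while strong contour edges dominate it.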

2.3 Human Tracking by using Particle Filters

In our system, we employ the particle filter approach, one of the most popular tracking methods in computer vision. The particle filter, also known as the condensation filter, is a Bayesian sequential importance sampling technique that recursively approximates the posterior probability density function (pdf) over the state space using a finite set of weighted samples. Target objects are defined by their observation models, which are used to measure the observation likelihood of the samples in the candidate region.

The particle filter tracking approach basically consists of two steps: prediction and update. Given all available observations $Z_{1:t-1} = \{Z_1, \ldots, Z_{t-1}\}$ up to time $t-1$, the prediction stage uses the probabilistic system transition model $p(X_t \mid X_{t-1})$ to predict the posterior at time $t$ as:

$$p(X_t \mid Z_{1:t-1}) = \int p(X_t \mid X_{t-1})\, p(X_{t-1} \mid Z_{1:t-1})\, dX_{t-1} \tag{1}$$

At time $t$, the observation $Z_t$ is available, and the state can be updated using Bayes' rule:

$$p(X_t \mid Z_{1:t}) = \frac{p(Z_t \mid X_t)\, p(X_t \mid Z_{1:t-1})}{p(Z_t \mid Z_{1:t-1})} \tag{2}$$

where $p(Z_t \mid X_t)$ is described by the observation equation.

The posterior $p(X_t \mid Z_{1:t})$ is approximated by a finite set of $N$ samples $\{X_t^i\}_{i=1,\ldots,N}$ with importance weights $w_t^i$. As shown in \cite{Monte}, the weights become the observation likelihood $p(Z_t \mid X_t^i)$. After some iterations of prediction and update steps, when the number of samples decreases below a certain threshold, samples are re-sampled with equal weights.
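The update and re-sampling steps can be sketched generically as below. This is a textbook-style illustration and not the implementation used in this work: the resampling trigger is assumed to be the effective sample size falling below a fraction of N, and the names `update`, `resample` and `ess_fraction` are hypothetical.

```python
import random


def normalize(weights):
    """Scale weights so they sum to one (assumes a non-zero total)."""
    total = sum(weights)
    return [w / total for w in weights]


def effective_sample_size(weights):
    """Common degeneracy measure for normalized weights."""
    return 1.0 / sum(w * w for w in weights)


def resample(particles, weights, rng):
    """Draw N particles with probability proportional to their weights."""
    n = len(particles)
    chosen = rng.choices(particles, weights=weights, k=n)
    return chosen, [1.0 / n] * n


def update(particles, weights, likelihood, rng, ess_fraction=0.5):
    """Re-weight particles by the observation likelihood, then resample
    with equal weights if the effective sample size is too low."""
    weights = normalize(
        [w * likelihood(p) for p, w in zip(particles, weights)]
    )
    if effective_sample_size(weights) < ess_fraction * len(particles):
        particles, weights = resample(particles, weights, rng)
    return particles, weights
```

Here `likelihood` stands for the observation model $p(Z_t \mid X_t^i)$ evaluated for one particle; in a tracker of this kind it would be derived from a histogram comparison.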

In our work, we combine two types of observation models, the color histogram and the edge-orientation histogram, in a two-level cascaded approach. The object to be tracked is assigned an elliptic region, similar to the work in \cite{Sebastian}. At each iteration, N = 300 particles are sampled as candidates. For each particle, a color histogram is calculated from 120 randomly sampled points inside the elliptic region around the particle, as shown in Figure 5. Then, every n iterations (n = 3 was found to be the most appropriate), the edge-orientation histogram of the head region is generated and used to support tracking.

The state space is defined as $X = (x, \dot{x}, y, \dot{y}, h_x, h_y, \theta)$, where the states are given as follows:

$x, y$: center of the ellipse

$\dot{x}, \dot{y}$: velocity of the center in two dimensions

$h_x, h_y$: axis lengths of the ellipse

$\theta$: orientation of the ellipse

State equations are:

$$x_t = x_{t-1} + k\,\dot{x}_{t-1} \tag{3}$$

$$\dot{x}_t = \dot{x}_{t-1} \tag{4}$$

$$y_t = y_{t-1} + k\,\dot{y}_{t-1} \tag{5}$$

$$\dot{y}_t = \dot{y}_{t-1} \tag{6}$$

$$h_{x,t} = h_{x,t-1} \tag{7}$$

$$h_{y,t} = h_{y,t-1} \tag{8}$$

$$\theta_t = \theta_{t-1} \tag{9}$$

State variables are assumed to be affected by Gaussian noise, where appropriate covariance values are assigned empirically considering the kinematics of the ellipse. The Bhattacharyya distance is used to compare the histogram of the sample points with the histogram of the object.
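As a rough illustration, the state transition and the histogram comparison might look as follows. The sketch assumes a constant-velocity model for the ellipse center with a step factor k, independent Gaussian noise per state variable, and the commonly used form sqrt(1 - BC) of the Bhattacharyya distance for normalized histograms; the function names and the noise parameterization are hypothetical.

```python
import math
import random


def propagate(state, k=1.0, sigma=None, rng=random):
    """Predict the next state (x, vx, y, vy, hx, hy, theta).

    The center moves with constant velocity scaled by k; axis lengths and
    orientation are carried over. sigma holds an assumed standard deviation
    of the Gaussian noise for each of the seven state variables.
    """
    x, vx, y, vy, hx, hy, theta = state
    sigma = sigma or (0.0,) * 7
    predicted = (x + k * vx, vx, y + k * vy, vy, hx, hy, theta)
    return tuple(
        v + (rng.gauss(0.0, s) if s > 0 else 0.0)
        for v, s in zip(predicted, sigma)
    )


def bhattacharyya_distance(p, q):
    """Distance between two normalized histograms: sqrt(1 - sum(sqrt(p*q)))."""
    coefficient = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return math.sqrt(max(0.0, 1.0 - coefficient))
```

A distance of 0 indicates identical histograms and a distance of 1 indicates histograms with no overlapping bins, so a smaller distance would correspond to a higher observation likelihood for the particle.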

2.4 Human Body/Head Orientation Estimation Algorithm

Human Body/Head Orientation Estimation Module is composed of three main parts:

1. Motion Vector Detection

2. SIFT Optical Flow

3. Body Orientation Check

The "Body Orientation Assignment" part of the initialization module and the "Body Orientation Check" part use the same orientation estimation algorithm. We implement a simple but effective method, shape context matching \cite{Poppe,Wojek,ShapeContext}, to determine the orientation of the body by comparing the head region of a person with previously defined templates. Figure 6 shows the groups that compose the category set for possible appearances of the head-shoulder triangle region in the scene. In our experiments, the camera monitors the scene from a very high position, and the camera's position corresponds to the bottom of the captured scene. Figure 6 shows two types of camera placement; our system is the one with the camera at the side. If the camera were placed in a central position on the ceiling, the number of categories would be doubled to include the other half. There are 13 groups in the set, which is used to categorize all possible cases of the head region. Groups with names ending in r are constructed by mirroring the image about the y-axis. The Canny edge detection algorithm is used to detect the edges on the boundary of the upper half of the body, and then shape context matching is conducted to find the corresponding group.

Figure 6. Grouping of head-shoulder contours

Tracking starts with the initially assigned orientation. Then, for each 10-frame interval, the displacement vector (DV) of the center of the tracked region is calculated from the particle filter result, and the resultant optical flow vector, called "SOFV", is computed from SIFT features around the head region. The head region is chosen because it is the most stable part of the body across various poses and is not affected by shape changes caused by the person's clothing. A very basic logic is then applied to group the patterns of motion:

1. If both DV and SOFV are close to zero, the person is standing in the same position with no orientation change.

2. If DV is close to zero but SOFV is larger than a threshold, the person is standing still, but the orientations of the body and head are changing (the person is turning right or left in place, or is only moving his or her head).

3. If both DV and SOFV are larger than the defined thresholds, the person is stepping in some direction.

4. The remaining combination, DV larger than a threshold with SOFV close to zero, is not logically possible.

The motion type is evaluated, and the orientations of the head and body are estimated from the DV and SOFV vectors as explained below. At certain intervals, the body orientation is checked with shape context matching to support the estimation process.
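The four-way grouping above can be written compactly as a small decision function. The sketch below is illustrative only: the threshold values are left as parameters because they are not specified here, and the function name and the returned labels are hypothetical.

```python
import math


def classify_motion(dv, sofv, dv_threshold, sofv_threshold):
    """Group the motion pattern from the displacement vector (DV) and the
    resultant SIFT optical flow vector (SOFV), both given as (dx, dy)."""
    dv_large = math.hypot(*dv) > dv_threshold
    sofv_large = math.hypot(*sofv) > sofv_threshold
    if not dv_large and not sofv_large:
        return "standing_still"        # case 1: no motion, no orientation change
    if not dv_large and sofv_large:
        return "turning_in_place"      # case 2: body/head orientation changing
    if dv_large and sofv_large:
        return "stepping"              # case 3: stepping in some direction
    return "inconsistent"              # case 4: not expected to occur
```

Returning an explicit label for the fourth case, rather than ignoring it, makes it easy to flag intervals where the tracker and the feature flow disagree.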

How to calculate SOFV: During the tracking process, 10 frames is chosen as the interval length for evaluating the change in orientation. SIFT features are examined over sub-intervals of length 3, 3 and 4. In other words, SIFT features are detected and tracked at the 3rd, 6th and 10th frames of the interval to construct the optical flow vectors, as shown in Figure 7. The values 10 and 3 for the interval and sub-interval lengths are set empirically to maintain SIFT feature correspondence between frames. If the sub-interval length is smaller than 3, the vector length is too small to provide information about the direction change; if it is larger, too few SIFT features are matched between two frames.

Figure 7. SIFT motion flow vectors around head region.

In Figure 7, a 35x35 pixel image of the head region in frame x is displayed. The 10-frame interval starts with frame x and ends with frame x+10. Red marks show the SIFT features detected in frame x, and blue marks show the SIFT features in frame x+3 matched to those in frame x. Likewise, yellow marks show the features of frame x+6 matched with those of frame x+3. Finally, white marks show the SIFT features in frame x+10 that match the ones in frame x+6. On the right of the image, the optical flow vectors are displayed. We divide the 2D space into 4 regions as shown and call them -R, -L, +L, +R. For each region, we calculate the average of the optical flow vectors and the average position of the vectors in that region, and call them $V_{-R}, V_{-L}, V_{+L}, V_{+R}$ and $c_{-R}, c_{-L}, c_{+L}, c_{+R}$.

SOFV Calculation:

1. Calculate the average vectors resulting from the optical flows of SIFT features in each region.

2. Choose the two regions with the largest average vector length (regions -R and -L in the example).

3. Calculate the average positions of the optical flow vectors for each region.

4. By comparing the average positions, add the two dominant vectors chosen in step 2 to find SOFV:

$$\mathrm{SOFV} = V_1 + V_2 \tag{10}$$
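The steps above can be sketched as follows, under explicit assumptions. The four regions are taken here to be the quadrants of the flow direction (L or R from the sign of the horizontal component, + or - from the sign of the vertical component), which is a guess at the partition shown in the figure. The way the average positions are compared in step 4 is not specified, so the sketch only returns them and forms the plain sum of the two dominant average vectors. All names are hypothetical.

```python
import math


def region_of(vector):
    """Assign a flow vector to one of -R, -L, +L, +R (assumed quadrant rule)."""
    dx, dy = vector
    side = "R" if dx >= 0 else "L"
    sign = "+" if dy >= 0 else "-"
    return sign + side


def compute_sofv(flows):
    """flows: list of ((px, py), (dx, dy)) position/displacement pairs.

    Returns (sofv, dominant_regions, summary) where summary maps each
    non-empty region to (average vector, average position).
    """
    groups = {}
    for position, vector in flows:
        groups.setdefault(region_of(vector), []).append((position, vector))

    summary = {}
    for name, items in groups.items():
        n = len(items)
        avg_vec = (sum(v[0] for _, v in items) / n,
                   sum(v[1] for _, v in items) / n)
        avg_pos = (sum(p[0] for p, _ in items) / n,
                   sum(p[1] for p, _ in items) / n)
        summary[name] = (avg_vec, avg_pos)

    # Step 2: the two regions with the largest average vector length.
    dominant = sorted(summary,
                      key=lambda r: math.hypot(*summary[r][0]),
                      reverse=True)[:2]
    # Step 4 (simplified): sum of the dominant average vectors, Eq. (10).
    sofv = (sum(summary[r][0][0] for r in dominant),
            sum(summary[r][0][1] for r in dominant))
    return sofv, dominant, summary
```

For example, three flows pointing left and slightly up or down would make the two left regions dominant, and the resulting SOFV would point to the left.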

Figure 8. Addition of SIFT motion flow vectors

There is a global motion opposing the local rotational motion. Such motion patterns cannot be calculated at the moment. In the example in Figure 7, the red line shows the average vector in -R, the yellow line shows the average vector in -L and the black line shows the average vector in +R. Since there are no vectors in region +L, its resultant average vector is zero. The resultant SOFV is shown with a bold blue line.

Figure 9. Tracking of a person and estimation of head/body orientation.

2.6 Experimental Results

In our experiments, we use real video data captured at a marketplace in an airport. The data was captured in HD mode at 1440x1080 resolution, and an average human appearance occupies around 70x90 pixels.

Figure 9 shows the tracking result of a person over 720 frames (24 seconds). In the figure, the tracked person is shown inside a yellow rectangle. The red line shows the center of the tracked region at each iteration and forms a trajectory line. The elliptic regions resulting from the particle filter tracking are shown in green around the trajectory of the motion. For the sake of clarity, not all iterations are displayed; the results are shown once every 10 frames. Tracking starts at frame 131, with the person heading upwards. He walks a few steps forward, then stops and gazes around by turning right in place, which is a clockwise rotation seen from the top. Then he turns left in place back to the point where he started, making a counter-clockwise rotation. This takes place between frames 175 and 470 and is depicted in the figure where the green ellipses accumulate. He then continues in his original direction, walking upwards until he comes to another stopping point at frame 740. During this scenario the person is tracked successfully. The initial body orientation is groupLeft, with an angle of pi/8. This orientation is maintained during the straight motion; at the two stopping points, the rotation angle is calculated by using SOFV vectors and shape context.

Figure 10. Image patches of various head-shoulder regions.

Here, we first present a set of experiments to show the effectiveness of shape context matching and the 5-group classification approach. We chose 30 random appearances of various people in the video and applied shape context matching to categorize them. Then, we explain the experimental results of three different cases of tracking and estimating the orientation of the body and head.

Figure 10 shows the head regions of 30 different appearances of humans in the captured video. When shape context matching was applied, 24 of the 30 were classified correctly, an 80% matching rate. The six mismatched cases were from GroupRight, GroupRightDiagonal, GroupLeft and GroupLeftDiagonal. They shared a common characteristic: the head circle was not clear enough to play a strong distinctive role in the outline of the shape, so they were mismatched with groupStraight. For these cases the orientation is mistaken, and they should be studied further to improve the matching algorithm.

In Figure 11, two examples are given. Figure 11a illustrates a person turning left during a 10-frame interval. Green lines show the edge contours for the 1st, 3rd, 6th and 10th frames of the interval to display the motion. On the right, the detected and tracked SIFT features are displayed with marks in the order red, blue, yellow and white, as explained in the previous section. The final SOFV vector is depicted in blue, with the resultant turning angle shown in black. Displacement vectors of the SIFT features accumulate in the regions +L and -L, indicating a motion towards the left. Figure 11b shows a difficult case, a head movement with a small angle. The person stops and turns slightly to the right in place. The resultant SOFV vector correctly indicates a rotation consistent with his motion, which is the angle shown with the black arrow between the red and blue lines.

Figure 11. Experimental results.

In Figure 12, two cases are presented. The one at the top shows a rotation of a person towards the right, which is a clockwise rotation seen from the camera. The orientation of the head is successfully tracked. The one at the bottom presents a case where there is more than one motion to be analyzed. SIFT features are affected both by the motion of the head and by the motion of the body. The center of the body also moves slightly upwards. Hence, the resultant SIFT feature displacement vectors result not only from the orientation change but also from the global displacement. To solve this case, experiments are still being conducted that consider the effect of the global motion vector in the calculations of SIFT features.

Figure 12. Experimental results of challenging cases.

2.7 Conclusions and Future Work

In this work, we have presented a human tracking and body/head orientation estimation algorithm for a top-view single camera. A cascaded particle filter was utilized successfully to track people under random walking directions and standing patterns. A new approach combining shape context matching and optical flow of SIFT features was proposed to detect orientation changes of the body and head. The system presented in this chapter is an initial part of a project on tracking wandering people and detecting their body/head orientations. In the future, we plan to improve it further so that it can be applied to more complex situations in public places.

In our experiments, we have shown the effectiveness of our method for tracking humans and their body and head orientation under random walking sequences. Tracking is successfully achieved with the two-step cascaded particle filter. The tracking algorithm works under various conditions, even when there are some partial occlusions. However, to detect the orientation of the body and head, the upper half of the body seen from the camera is very important, and any object blocking the head triangle region decreases the success rate. In some of these cases the algorithm still works well, since most of it depends on a general evaluation of the displacement vectors of the features. The only part strongly affected by distracting objects is the shape context matching, which depends heavily on the edge contour of the boundary. There are still various cases to be studied, such as people walking with big bags or occlusion problems when people walk very close to each other. They are left as future work.

In our work, SIFT features were chosen for the algorithm after several experiments with Kanade-Lucas-Tomasi (KLT) features and the recently popular SURF \cite{Surf} features. KLT features were not distinctive enough. When KLT features are detected in each image, they appear on parts of the clothes as well as on the head region, but the number of KLT features in the head region is not sufficient. On the other hand, the number of detected SURF features is not sufficient on the body region. Among all, SIFT features give very promising results.

One important issue to be discussed here is performance. Performance evaluation of the system is one of our near-future goals. At the moment, our system works well for one person; however, it is intended to work for multiple people in the environment at the same time. This raises important performance issues, which should be studied carefully. The interval rates for body/head orientation calculation and the edge detection and shape context matching algorithms are expected to be improved to obtain the best overall performance.

Chapter 3

Dominant Motion Flow Analysis In Crowds

3.1 Introduction

Dominant motion patterns in videos provide significant information with a wide range of applications. Since motion patterns are formed by individual motions or interacting motions of crowds, they help to analyze the social behavior in a given environment in the video. Furthermore, they are useful in public place design and in activity analysis for security purposes.

Over the years, many studies have tried to find motion patterns by using individual object tracking and trajectory classification methods. However, in real-world situations, high-density crowds are the most common case, and it is not always possible to track individual objects. Crowd scenes can be divided into two groups, unstructured and structured scenes, as in Figure 13. Structured crowds are the ones where the main motion tracks are defined by environmental conditions, such as escalators, crosswalks, etc. Unstructured crowds are those where objects can move freely in any direction, following any path. So far, very few researchers have attempted to solve the complexity of the crowd scenes that are structured. Detecting dominant motion flows in unstructured crowds still remains a challenging task.

(a) Structured Crowd Scenes

(b) Unstructured Crowd Scenes

Figure 13. Structured/unstructured crowd scene examples.

To solve the problem of calculating the dominant motion flows in both unstructured and structured crowds, we propose a new approach with two distinctive contributions. First, our approach utilizes the motion flows of the SIFT features in a scene. Unlike the corner-based features commonly used in other studies, SIFT features can represent characteristic parts of the objects. Therefore, their tracking consistency and accuracy are higher during complex motions. Second, we propose a hierarchical clustering framework to deal with the complexity of unstructured motion flows. The entire scene is divided into equally sized local regions. In each local region, flow vectors are classified into groups based on their orientation. Then, location-based classification is applied to find the spatial accumulation of the vectors. Finally, local dominant motion flows are connected to obtain global dominant motion flows.
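The first two levels of this hierarchy, dividing the scene into local regions and grouping flow vectors by orientation, might be sketched as follows. The square cell size, the number of orientation bins and the function name are assumptions made for illustration; the location-based classification and the linking of local flows into global flows are not shown.

```python
import math
from collections import defaultdict


def group_flows(flows, cell_size, n_orientation_bins=8):
    """Group flow vectors by local region and by orientation.

    flows: list of ((px, py), (dx, dy)) position/displacement pairs.
    cell_size: side length in pixels of the square local regions (assumed).
    Returns a dict mapping ((cell_x, cell_y), orientation_bin) to the list
    of flows that fall into that region and orientation group.
    """
    groups = defaultdict(list)
    for (px, py), (dx, dy) in flows:
        cell = (int(px // cell_size), int(py // cell_size))
        # Direction of the flow mapped to [0, 2*pi), then quantized.
        angle = math.atan2(dy, dx) % (2 * math.pi)
        orientation_bin = (
            int(angle / (2 * math.pi) * n_orientation_bins) % n_orientation_bins
        )
        groups[(cell, orientation_bin)].append(((px, py), (dx, dy)))
    return dict(groups)
```

Grouping by orientation before any averaging avoids the situation where opposite flows inside the same region cancel each other out.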

Related Work:

Tracking individual objects and constructing their trajectories is a common approach to find the global motion flows, as in [1, 6]. However, for crowd videos, continuous tracking of individual objects is not possible because of occlusions or tracking failures. Another approach is to employ instantaneous flow vectors of image features in the entire image [3-5, 11]. These methods use corner-based features, which are not reliable under non-rigid motion, affine transformation or noise. Hence, these studies consider only structured motions and do not work for unstructured crowds. The method in [4] uses neighborhood information, but it fails when a region contains flows in multiple directions that cancel each other. The authors of [7] propose floor fields, which are applicable to structured crowds.

In their work, Brostow et al. [8] track simple image features and cluster them probabilistically to represent the motion of individual entities. The algorithm works for detecting and tracking individuals under various motions. However, the crowds in their examples fall into the category of structured crowds. Hence, the complexity is lower than that of unstructured crowds such as those in our examples, and occlusions are at acceptable levels.

In [11, 12], Lin et al. utilize a dynamic near-regular texture paradigm for tracking groups of people. They try to handle occlusion and rapid movement changes. However, the type of crowd considered in the paper is still structured, and the flow of the marching motion is predictable. Another approach, which tracks individuals in crowded scenes using selective visual attention, is proposed by Yang et al. [13]. Only the work in [2] considers unstructured crowd scenes and deals with complex crowds, but it also tries to track individual targets.

Grimson et al. [12] give one of the early examples of activity analysis by tracking moving objects and learning the motion patterns. They use the tracked motion data to calibrate the distributed sensors, to construct rough site models, to classify detected objects, and to learn common patterns of activity for different object classes. Johnson et al. [5] used neural networks to model motion paths from trajectories. In [3], the authors accumulate the trajectories to describe the most frequently followed paths and then use this information to find unusual behaviors of pedestrians. Similarly, Wang et al. [9] use a trajectory-based scene model and classification. They propose similarity measures for trajectory comparison to segment a scene into semantic regions. Vaswani et al. [10] modeled the motion of all the moving objects by analyzing the temporal deformation of the “shape” constructed by joining the locations of the objects in each frame. Long-term trajectory-based approaches are only applicable when continuous tracking of objects is possible. Therefore, they do not work for unstructured crowds, especially when there are severe occlusions.

In trajectory analysis, sinks are defined as the endpoints of the trajectories [2, 4,

shah]. Stauffer [6] defined a transition likelihood matrix and iteratively optimized the

matrix for the estimation of sources/sinks. Wang et al. [9] estimated the sinks using the

local density velocity map in trajectory clustering. However, when continuous

tracking is interrupted by occlusions or noisy data, trajectory calculation will result in

false sinks. For unstructured crowds, long-term tracking of objects is not possible. As a

result, sinks cannot be defined reliably. Therefore, short term motion flow vector based

approaches are the most promising solutions to analyze complex motions with severe

occlusions.

Wright and Pless [6] determine persistent motion patterns by a global joint distribution of independent local brightness gradient distributions and model this with a Gaussian mixture model. This approach assumes all motion in a frame to be coherent; independent motions, such as pedestrians moving independently, violate this assumption. Ali and Shah [1] present an approach inspired by particle dynamics, where they first determine spatial flow boundaries by advecting particles through the optical flow field and subsequently perform graph-cut based image segmentation. Their image sequences do not contain overlapping motions. Andrade et al. [2] use features based on linear PCA of optical flow vectors as input for a temporal model.

Crowd motion analysis can also be applied to understand the behavior of biological populations. For example, Betke et al. [2] proposed an algorithm to track a dense crowd of bats in thermal imagery. Li et al. [3] have recently developed an algorithm for tracking thousands of cells in phase contrast time-lapse microscopy images.

The next section gives the overview of the system. In Section 3.3, the

definition and construction of a SIFT motion flow vector are given. In Section 3.4,

generation of dominant local motion flow vectors by hierarchical clustering of SIFT

motion flows is explained. After that, the next section describes how local motion flows

are combined to obtain global motion flows. Experimental results are presented giving

examples of both structured and unstructured crowd data sets. Finally, the chapter is

closed with conclusions and future directions of the work.

3.2 System Overview

In our study, the main goal is to analyze the behavior of crowds by finding the most popular flow paths of the crowd motion. This may apply to cars on highways or crossings, or it may apply to pedestrians. Usually people move along predetermined paths; for instance, they follow escalators, roads and sidewalks. However, when the environment does not define such paths, people choose their own way and walk along random paths. As mentioned earlier, these kinds of crowds show unstructured motion characteristics. The flow of the motion is not predictable, and the occlusion level is usually very high. In dense crowds, tracking of individuals is not easily achievable, and tracking results are erroneous. Hence, continuous tracking of crowd motion is not a viable solution. Instead, short-term motion flows can help to describe the motion in a local region of the given scene for a short period of time.

Figure 14. System Overview

Short-term motion flows are represented by the displacement vectors of specific

features of the image between successive frames. Low-level image features are tracked

between successive frames of the video. The displacement vectors of those features

describe the flow of the motion in that local region. They represent instantaneous

motions in the image. Accumulating these vectors over a long period of time and merging

them yields useful information about the overall motion in the entire image.

However, short-term motion flows represent various kinds of motion in the case of

unstructured crowds. For complex motions, there will be many flow vectors in many

directions after accumulating all the information for a long period of time. As a result,

there will be a huge and complex data set of flow vectors to process in order to analyze the

motion. This forms the most challenging part of the problem. To deal with the

complexity in the entire scene, we first divide the scene into smaller image

regions. In each region, we classify the motion flow vectors to obtain the local dominant

motion flow groups. We then merge the motion flow vectors in each group to obtain the local

representative dominant motion flow vector for that group. Figure 14 shows the

system overview of our approach. After the local motion flows are detected, they are

connected to obtain the global dominant motion flows in the entire scene. We use SIFT

image features to track and generate instantaneous motion flow vectors, because

in our experiments with KLT, SURF, and SIFT, the SIFT features gave the best results. Overall,

there are three main steps:

1. SIFT Motion Flow Vector Generation

2. Hierarchical Clustering of Local Motion Flow Vectors

3. Constructing Global Dominant Motion Flows from Local Motion Flows

These steps are explained in detail in the next sections.

Figure 15. SIFT motion flow vector

3.3 SIFT Motion Flow Vector Generation

In this work, SIFT features are used to calculate the motion flows. SIFT

features are known to be one of the best features that are robust under various

transformations. They can be used to continuously track the foreground objects over

many frames. Thus, instead of calculating the motion flows at each frame, we track the

features at certain intervals as shown in Figure 15.

This provides two advantages. First,

it reduces the noise coming from the background and unstable points. Second, the computed

motion flow vectors can be used directly without any pre- or post-processing.

In our experiments we have tried various kinds of well-known image features,

such as KLT[ref], SIFT[ref], SURF[ref], and Harris corners[ref]. Three criteria are important

when deciding which image features to use to represent the motion flow in the image:

1. The features should be distinctive enough to represent a part of each object

(person) in the image, so that the motion vectors do not represent random flow

(coming from noise, background, or instantaneous data) but are

created in accordance with the motion of people.

2. There should be a sufficient number of extracted features in the local region. The

density of the features (number of extracted and correctly tracked features per area)

should be sufficient to represent the amount of motion in the area.

3. The features should be robust enough to be tracked over many frames.

One of the key contributions of this work is that instead of tracking image

features in each video frame, we track image features at a fixed interval. The reason for

this is two-fold. First, we want to obtain motion flow vectors that have

meaningful length and direction information. If the length of a vector is too short, then

its orientation information will be too weak or noisy to describe the flow

in that region correctly. Second, given the continuity of the

motion over a certain period of time, the flow vectors generated in each frame will carry

similar information to the flow vector generated after skipping a few frames. By

skipping frames, we do not lose information, while gaining an advantage in terms of

time and computational complexity.

Figure 16. SIFT motion flow vectors in a given image region.

Each video is segmented into intervals of length “d”. SIFT features extracted

in a frame are matched to the corresponding features in the frame that follows after the

interval d. After that, the most representative feature displacement vectors are chosen by

thresholding. The displacement vectors of the features over a certain threshold are

defined as flow vectors. Figure 15 shows the definition of a flow vector. Figure 16

depicts the flow vectors.

A flow vector is represented as F(x, y, Θ, t, L), where:

x, y : center of mass

Θ : orientation

t : frame number

L : length

Figure 17. SIFT motion flows for (a) 100 frames and (b) 400 frames

In our approach, the choice of the interval length “d” is very important. In

experiments with various video data and interval lengths, an interval of three frames

was most often the most informative, ensuring tracking continuity and a sufficient

number of features.
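The construction of flow vectors from matched features can be sketched as follows. This is only an illustrative sketch, not the implementation used in the experiments: the names FlowVector, frame_pairs, make_flow_vectors and the parameter min_length are hypothetical, the matched point pairs are assumed to come from any SIFT matcher, the center of mass is assumed to be the midpoint of the displacement, and the thresholding is assumed to act on the vector length.

```python
import math
from typing import List, NamedTuple, Tuple

Point = Tuple[float, float]


class FlowVector(NamedTuple):
    """F(x, y, theta, t, L) as defined in the text."""
    x: float       # center of mass, assumed here to be the midpoint
    y: float
    theta: float   # orientation in degrees, in [0, 360)
    t: int         # frame number of the first frame of the pair
    length: float  # displacement length in pixels


def frame_pairs(num_frames: int, d: int = 3) -> List[Tuple[int, int]]:
    """Pairs (t, t + d) of frame indices whose features are matched."""
    return [(t, t + d) for t in range(0, num_frames - d, d)]


def make_flow_vectors(matches: List[Tuple[Point, Point]], t: int,
                      min_length: float = 2.0) -> List[FlowVector]:
    """Turn matched feature positions into flow vectors.

    Displacements shorter than min_length (an assumed threshold) are
    dropped, since their orientation is too noisy to be informative.
    """
    flows = []
    for (x0, y0), (x1, y1) in matches:
        dx, dy = x1 - x0, y1 - y0
        length = math.hypot(dx, dy)
        if length < min_length:
            continue
        theta = math.degrees(math.atan2(dy, dx)) % 360.0
        flows.append(FlowVector((x0 + x1) / 2.0, (y0 + y1) / 2.0,
                                theta, t, length))
    return flows
```

With d = 3, a ten-frame clip yields the frame pairs (0, 3), (3, 6) and (6, 9), and a nearly static feature (for example, one that moves half a pixel) produces no flow vector.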

Figure 17(a) and (b) demonstrate a part of an unstructured crowd scene with

two different motion flow maps depicted for different durations. Motion flows are

calculated for 100 frames and 400 frames with interval length 3. Accumulation of flow

vectors can be seen in certain orientations. However, if the variety of orientations in the

region increases, the flow map becomes very complicated. The complexity increases in

Figure 17(b). When the entire scene is considered, the amount of data and the complexity are

much higher. The overall motion flow map is shown in Figure 18.

Figure 18. Motion flow map and local regions for the entire scene.

The data containing all motion flow vectors is very large and highly varied

in orientation, spreading over the entire image. It is difficult to analyze this

data and cluster it into meaningful groups. In this case, an effective solution is to divide

the entire image into regions. Instead of dealing with the whole data set at once, dividing it into

smaller groups and clustering the vectors locally gives effective and meaningful results.

Figure 18 shows an example of a division of the entire scene into equally sized square

local regions.
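The division into equally sized square regions can be illustrated with a short sketch. The function name assign_to_regions and the parameter region_size are hypothetical, and each flow vector is assumed to be a tuple whose first two entries are its x and y position in pixels.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def assign_to_regions(flows: Sequence[Sequence[float]],
                      region_size: float
                      ) -> Dict[Tuple[int, int], List[Sequence[float]]]:
    """Group flow vectors by the square grid cell containing their position.

    Keys are (row, col) cell indices; only cells that actually contain
    flow vectors appear in the result.
    """
    regions = defaultdict(list)
    for flow in flows:
        x, y = flow[0], flow[1]
        col = int(x // region_size)
        row = int(y // region_size)
        regions[(row, col)].append(flow)
    return dict(regions)
```

Each entry of the returned dictionary can then be clustered independently of the others.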

In each local region, motion flow vectors are clustered to obtain the dominant

motion flows in that region. Motion flow vectors have position, orientation and strength

as parameters. If a general clustering is applied by using these parameters all at the same

time, the results will not be informative and will not serve our aim of calculating the dominant

motion flows. Common clustering methods [3] in the literature will not work effectively.

Instead, we give priority to some parameters, such as orientation, and introduce a

hierarchical clustering method to detect the dominant motion flows in the region, which

is explained in the next section.

(a) System flow

(b) Orientation groups

Figure 19. Hierarchical clustering of motion flow vectors.

3.4 Hierarchical Clustering of Local Motion Flow Vectors

Detecting dominant motion flows is defined as finding the orientation and

spatial distribution of the most frequently followed paths in a scene during a given period. If the

motion of the objects in a video is organized, then a single orientation

can be assigned to each location. However, in crowd videos, especially of unstructured

crowds, participants move in various directions at different times. Each spatial location

holds more than one orientation depending on the time. It is not possible to find the

dominant flows with existing methods [3, 4, 11].

Figure 20. Dividing into local regions and creating an orientation histogram for each.

(a) Orientation-based

(b) Spatial

(c) Dominant motion flows

Figure 21. Hierarchical Clustering Steps

In this work, the entire scene is divided into smaller regions, in which flow

vectors are easier to separate into meaningful groups. Then, the flow vectors in each

region are clustered with a two-step hierarchical approach to find the local dominant

motion flows. Figures 20 and 21 show the hierarchical clustering steps. Finally, local

dominant motion flows are connected to compute the global dominant motion flows.

Orientation is the most significant information when classifying

the flow vectors. In each local region, flow vectors are first classified into one of the

four main orientation groups. Figure 19 shows the grouping of orientations. To achieve

this, an orientation histogram is calculated and the major groups are chosen to represent the

region. For example, in Figure 21(a), there are two orientation groups,

depicted in blue and green. The second step is spatial clustering. Flow vectors in each

orientation group are clustered based on their location. Hence, accumulations of the

vectors in the region are detected, as in Figure 21(b). For this, the “Self-Tuning Spectral

Clustering” method is applied, considering the evaluation results in [3]. Here,

the choice of the number of clusters plays an important role in the results. In our algorithm,

the dimensions of the local regions and the number of clusters are very significant for

obtaining the correct representation of the flow in the area.
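The orientation-based first step can be sketched as below. The exact boundaries of the four orientation groups are given in Figure 19 and are not reproduced here; this sketch assumes four 90-degree sectors centered on 0, 90, 180 and 270 degrees. The function names and the min_fraction parameter, which decides when a histogram bin counts as a major group, are likewise assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def orientation_group(theta_deg: float) -> int:
    """Map an orientation in degrees to one of four groups (0 to 3).

    Assumed layout: group 0 covers [-45, 45), group 1 covers [45, 135),
    group 2 covers [135, 225) and group 3 covers [225, 315).
    """
    return int(((theta_deg + 45.0) % 360.0) // 90.0)


def major_orientation_groups(thetas: Sequence[float],
                             min_fraction: float = 0.2
                             ) -> Tuple[List[int], List[int]]:
    """Build the four-bin orientation histogram of a local region and
    return it together with the groups holding at least min_fraction
    of the flow vectors."""
    hist = [0, 0, 0, 0]
    for theta in thetas:
        hist[orientation_group(theta)] += 1
    total = len(thetas)
    if total == 0:
        return hist, []
    major = [g for g, count in enumerate(hist)
             if count / total >= min_fraction]
    return hist, major
```

The flow vectors of each major group would then be passed separately to the spatial clustering step.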

The dimensions of the local region can be decided by considering the number

of motion flows per area and the average strength of the motion flow vectors. Deciding

the number of clusters for each step is more straightforward. For orientation-based

clustering, there are four orientation groups, so the number of clusters can be

one, two, three, or four. By looking at the orientation histogram, the number of clusters is

easily determined. For spatial clustering, “one”, “two”, “three” and “four” are each given as

input to the clustering algorithm, and the value giving the best grouping results is chosen

as the number of clusters. To measure how well a given number of clusters provides

meaningful results, we investigate the ratio of the distance between the cluster centers to

the local region dimensions. If the resultant groups are very well separated,

there is a high chance of good clustering.

Figure 22. Local regions and motion flow maps.

Figure 23. Local dominant motion flows.

After clustering, local dominant motion flows are calculated by computing the

average location, average orientation and total number of the flow vectors in each group.

So, the local dominant motion flow for each group is described by L(x, y, w, Θ). “w”

denotes the number of vectors and is depicted by the width of the flow vector. Figure

21(c) shows three dominant motion flows calculated in the region. The center of the