

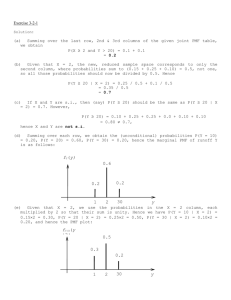

Stat 61 - Homework #4 --- SOLUTIONS (10/8/07)

1. Mathematics applied to real life.

In roulette, if you bet one dollar on a single number, your probability of winning is 1/38. You are offered 35-1 odds, which means that you stand to win 35 dollars or lose 1 dollar. Let the random variable $X$ be your profit in dollars from such a bet (so that $X$ is either $+35$ or $-1$). The pmf is:

| $x$ | $+35$ | $-1$ |
|---|---|---|
| $p(x)$ | $1/38$ | $37/38$ |

But the problem is easier if you write $X = 36Y - 1$, where $Y$ is a Bernoulli random variable with parameter $p = 1/38$ and this pmf:

| $y$ | $1$ | $0$ |
|---|---|---|
| $p(y)$ | $1/38$ | $37/38$ |

Then you have the benefit of the standard formulas for Bernoulli r.v.'s:

$$E(Y) = p = \frac{1}{38}, \qquad \operatorname{Var}(Y) = p(1-p) = \frac{37}{38^2}.$$

a. What is $E(X)$?

From the pmf:
$$E(X) = (+35)\left(\tfrac{1}{38}\right) + (-1)\left(\tfrac{37}{38}\right) = -\tfrac{2}{38} = -\tfrac{1}{19} \approx -0.0526 \text{ dollars}.$$
From the transformation:
$$E(X) = 36\,E(Y) - 1 = \tfrac{36}{38} - 1 = -\tfrac{1}{19} \approx -0.0526 \text{ dollars}.$$

b. What is $\operatorname{Var}(X)$?

From the pmf, with $\mu = E(X) = -1/19$:
$$E\big((X-\mu)^2\big) = \left(35 + \tfrac{1}{19}\right)^2 \tfrac{1}{38} + \left(-1 + \tfrac{1}{19}\right)^2 \tfrac{37}{38} = \text{(after much algebra)}\ \frac{37 \cdot 36^2}{38^2} \approx 33.2078 \text{ square dollars}.$$

Better:
$$E(X^2) = 35^2\left(\tfrac{1}{38}\right) + (-1)^2\left(\tfrac{37}{38}\right) = \tfrac{1262}{38},$$
so
$$\operatorname{Var}(X) = E(X^2) - E(X)^2 = \tfrac{1262}{38} - \tfrac{1}{361} = \frac{37 \cdot 36^2}{38^2} \approx 33.2078 \text{ square dollars}.$$

Better still, from the transformation:
$$\operatorname{Var}(X) = 36^2 \operatorname{Var}(Y) = \frac{37 \cdot 36^2}{38^2} \approx 33.2078 \text{ square dollars}.$$

c. What is $\sigma_X$?
$$\sigma_X = \sqrt{\operatorname{Var}(X)} = \frac{36\sqrt{37}}{38} \approx 5.763 \text{ dollars}.$$

2. An opportunity to manage risk.

You can also bet on a combination of $L$ numbers, where $L$ can be 1, 2, 3, 4, 6, 12, or 18. Your probability of winning is now $L/38$. If you bet one dollar on this combination, then according to the rules you stand to win $(36/L) - 1$ dollars or lose one dollar. (That is, they keep your dollar in any event, but if you win they also give you a payoff of $36/L$ dollars.) Let $X$ be your profit from such a bet (so that $X$ is either $(36/L) - 1$ or $-1$). Now
$$X = \frac{36}{L}\,Y - 1,$$
where $Y$ is Bernoulli with parameter $p = L/38$, so
$$E(Y) = \frac{L}{38}, \qquad \operatorname{Var}(Y) = \frac{L(38-L)}{38^2}.$$

a. What is $E(X)$?
$$E(X) = \frac{36}{L}\,E(Y) - 1 = \frac{36}{L}\cdot\frac{L}{38} - 1 = -\frac{1}{19} \approx -0.0526 \text{ dollars},$$
the same for every $L$.

b. What is $\operatorname{Var}(X)$?
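$$\operatorname{Var}(X) = \left(\frac{36}{L}\right)^2 \frac{L(38-L)}{38^2} = \frac{38-L}{L}\left(\frac{36}{38}\right)^2 \text{ square dollars}.$$

c. What is $\sigma_X$?
$$\sigma_X = \sqrt{\frac{38-L}{L}}\cdot\frac{36}{38} \text{ dollars}.$$

| $L$ | 1 | 2 | 3 | 4 | 6 | 12 | 18 |
|---|---|---|---|---|---|---|---|
| $\sigma_X$ (dollars) | 5.763 | 4.019 | 3.236 | 2.762 | 2.188 | 1.394 | 0.998 |

3. Mean and variance for geometric distributions (= discrete “power laws”).

a. Let $a$ be a constant satisfying $0 < a < 1$. Evaluate
$$A = a + 2a^2 + 3a^3 + 4a^4 + \cdots$$
Multiply:
$$aA = a^2 + 2a^3 + 3a^4 + \cdots$$
then subtract:
$$(1-a)A = a + a^2 + a^3 + a^4 + \cdots,$$
which is $a/(1-a)$, either from the formula for a geometric series or by repeating the same trick. So solve:
$$A = \frac{a}{(1-a)^2}.$$

b. With $a$ as before, evaluate
$$B = a + 4a^2 + 9a^3 + 16a^4 + \cdots$$
(the coefficients are $k^2$ for $k = 1, 2, 3, 4, \ldots$). Multiply:
$$aB = a^2 + 4a^3 + 9a^4 + \cdots$$
Subtract:
$$(1-a)B = a + 3a^2 + 5a^3 + 7a^4 + \cdots = (a + a^2 + a^3 + a^4 + \cdots) + 2a\,(a + 2a^2 + 3a^3 + \cdots)$$
$$= \frac{a}{1-a} + 2a\cdot A = \frac{a}{1-a} + \frac{2a^2}{(1-a)^2} = \frac{a + a^2}{(1-a)^2}.$$
Solve:
$$B = \frac{a + a^2}{(1-a)^3}.$$

c. Let $\mu$ be a constant satisfying $\mu > 0$. Evaluate
$$C = \sum_{k=0}^{\infty} k^2\,\frac{1}{\mu+1}\left(\frac{\mu}{\mu+1}\right)^{k}.$$
This is $\frac{1}{\mu+1}$ times [the answer to part b with $\mu/(\mu+1)$ in place of $a$]:
$$C = \frac{1}{\mu+1}\cdot\frac{\frac{\mu}{\mu+1} + \frac{\mu^2}{(\mu+1)^2}}{\left(\frac{1}{\mu+1}\right)^3} = 2\mu^2 + \mu.$$

d. Let $\mu$ be as before. A random variable $X$ has a “geometric distribution with mean $\mu$” if it has the pmf
$$p(k) = \frac{1}{\mu+1}\left(\frac{\mu}{\mu+1}\right)^{k} \quad \text{for } k = 0, 1, 2, \ldots$$
(As usual, when $p(k)$ is given only for some values of $k$, we mean to imply that $p(k) = 0$ for all other values. The same goes for densities $f(y)$.)

Find $E(X)$. This is $\frac{1}{\mu+1}$ times [the answer to part a with $\mu/(\mu+1)$ in place of $a$]:
$$E(X) = \sum_{k=0}^{\infty} k\,p(k) = \frac{1}{\mu+1}\cdot\mu(\mu+1) = \mu \quad \text{(as in class)}.$$

e. Also find $E(X^2)$.
$$E(X^2) = C = 2\mu^2 + \mu \quad \text{(the answer to part c)}.$$

f. Also find $\operatorname{Var}(X)$.
$$\operatorname{Var}(X) = E(X^2) - E(X)^2 = (2\mu^2 + \mu) - \mu^2 = \mu^2 + \mu.$$

g. Also find $\sigma_X$, the standard deviation of $X$.
$$\sigma_X = \sqrt{\mu^2 + \mu}.$$

4. Poisson distributions.

A discrete random variable $X$ has a Poisson distribution with parameter $\mu$, and is called a Poisson random variable with parameter $\mu$, if its pmf is given by
$$p(k) = e^{-\mu}\,\frac{\mu^k}{k!} \quad \text{for } k = 0, 1, 2, 3, \ldots$$

a. What is $E(X)$?

First note that $p(0) + p(1) + p(2) + p(3) + \cdots = 1$, as required, because
$$e^{-\mu}\left(\frac{\mu^0}{0!} + \frac{\mu^1}{1!} + \frac{\mu^2}{2!} + \frac{\mu^3}{3!} + \frac{\mu^4}{4!} + \cdots\right) = e^{-\mu}\,e^{\mu} = 1,$$
since the sum in parentheses is the Taylor series for $e^{\mu}$.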
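As a quick numerical sanity check on this normalization, and on the mean and variance worked out below, the Poisson series can simply be summed. The Python sketch below is an illustration, not part of the original solutions: the function name `poisson_moments`, the 100-term cutoff, and the sample values of $\mu$ are assumptions, and the cutoff is only adequate for moderate $\mu$ (roughly $\mu \le 20$).

```python
import math

def poisson_moments(mu, n_terms=100):
    """Return (total probability, E[X], E[X^2]) for a Poisson(mu) pmf,
    summing p(k) = exp(-mu) * mu**k / k! for k = 0 .. n_terms-1."""
    total = mean = second = 0.0
    p = math.exp(-mu)  # p(0)
    for k in range(n_terms):
        total += p
        mean += k * p
        second += k * k * p
        p *= mu / (k + 1)  # p(k+1) = p(k) * mu / (k+1), avoids huge factorials
    return total, mean, second

for mu in (0.5, 3.0, 10.0):
    total, mean, second = poisson_moments(mu)
    var = second - mean ** 2
    print(f"mu={mu}: sum={total:.6f}  E(X)={mean:.6f}  Var(X)={var:.6f}")
```

For each $\mu$, the printed sum should be very close to 1, and both E(X) and Var(X) should be very close to $\mu$, matching parts a and b.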
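Now,
$$\begin{aligned}
E(X) &= 0\,p(0) + 1\,p(1) + 2\,p(2) + 3\,p(3) + \cdots \\
&= e^{-\mu}\left(0\frac{\mu^0}{0!} + 1\frac{\mu^1}{1!} + 2\frac{\mu^2}{2!} + 3\frac{\mu^3}{3!} + 4\frac{\mu^4}{4!} + \cdots\right) \\
&= e^{-\mu}\left(\frac{\mu^1}{0!} + \frac{\mu^2}{1!} + \frac{\mu^3}{2!} + \frac{\mu^4}{3!} + \cdots\right) \\
&= e^{-\mu}\left(\frac{\mu^0}{0!} + \frac{\mu^1}{1!} + \frac{\mu^2}{2!} + \frac{\mu^3}{3!} + \cdots\right)(\mu) \\
&= \mu
\end{aligned}$$
(as we would hope, given the name of the parameter).

b. What is $\operatorname{Var}(X)$?
$$\begin{aligned}
E(X^2) &= 0\,p(0) + 1\,p(1) + 4\,p(2) + 9\,p(3) + \cdots \\
&= e^{-\mu}\left(0\frac{\mu^0}{0!} + 1\frac{\mu^1}{1!} + 4\frac{\mu^2}{2!} + 9\frac{\mu^3}{3!} + 16\frac{\mu^4}{4!} + \cdots\right) \\
&= e^{-\mu}\left(1\frac{\mu^1}{0!} + 2\frac{\mu^2}{1!} + 3\frac{\mu^3}{2!} + 4\frac{\mu^4}{3!} + \cdots\right) \\
&= e^{-\mu}\left(1\frac{\mu^1}{0!} + 1\frac{\mu^2}{1!} + 1\frac{\mu^3}{2!} + 1\frac{\mu^4}{3!} + \cdots\right) + e^{-\mu}\left(0\frac{\mu^1}{0!} + 1\frac{\mu^2}{1!} + 2\frac{\mu^3}{2!} + 3\frac{\mu^4}{3!} + \cdots\right) \\
&= e^{-\mu}\left(\frac{\mu^0}{0!} + \frac{\mu^1}{1!} + \frac{\mu^2}{2!} + \frac{\mu^3}{3!} + \cdots\right)(\mu) + e^{-\mu}\left(\frac{\mu^0}{0!} + \frac{\mu^1}{1!} + \frac{\mu^2}{2!} + \cdots\right)(\mu^2) \\
&= \mu + \mu^2.
\end{aligned}$$
So $\operatorname{Var}(X) = E(X^2) - E(X)^2 = (\mu + \mu^2) - \mu^2 = \mu$. The variance of a Poisson distribution is the same as its mean. (Recall that the standard deviation of an exponential distribution is the same as its mean. That's not the same thing!)

5. Mean and Variance for a Standard Normal Distribution.

A random variable $Z$ is called “standard normal”, and has the “standard normal distribution”, if it has the density function
$$f(z) = \frac{1}{\sqrt{2\pi}}\,e^{-\frac{1}{2}z^2} \quad \text{for all real } z.$$

a. First we'll try to verify that $\int_{-\infty}^{\infty} f(z)\,dz = 1$. Try integration by parts on the integral $\int_{-\infty}^{\infty} e^{-\frac12 z^2}\,dz$: write the integrand as $(1)\cdot e^{-\frac12 z^2}$, and integrate the “(1)” while you differentiate the second factor. In that way, show that
$$\int_{-\infty}^{\infty} e^{-\frac12 z^2}\,dz = \Big[z\,e^{-\frac12 z^2}\Big]_{-\infty}^{\infty} + \int_{-\infty}^{\infty} z^2 e^{-\frac12 z^2}\,dz = \int_{-\infty}^{\infty} z^2 e^{-\frac12 z^2}\,dz.$$
Well, that's what you get if you integrate by parts, and it didn't get us very far, did it? So let's save that formula for future reference, and accept on faith (for now) that
$$\int_{-\infty}^{\infty} e^{-\frac12 z^2}\,dz = \sqrt{2\pi},$$
so that $\int_{-\infty}^{\infty} f(z)\,dz = 1$ as required.

b. Find $E(Z)$. (Hint: in the integral, make the substitution $u = \frac12 z^2$. Now you know why we like that “1/2” in the exponent.)

We want
$$E(Z) = \int_{-\infty}^{\infty} z\,\frac{1}{\sqrt{2\pi}}\,e^{-\frac12 z^2}\,dz.$$
Actually, this looks easy by symmetry. The factor $z$ makes the integrand an odd function, so its integral over a symmetric interval would have to be zero. Or substitute $v = -z$, with the result that $E(Z) = -E(Z)$, and draw the same conclusion. But this is dangerous.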
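For $E(Z)$ to exist, the positive part and the negative part of the integral must both be finite. (For example, the Cauchy density $\frac{1}{\pi(1+z^2)}$ is symmetric about 0, yet it has no mean, because $\int_0^\infty \frac{z}{\pi(1+z^2)}\,dz = \infty$.) So we have to (at least roughly) integrate the positive part:
$$\int_{0}^{\infty} z\,\frac{1}{\sqrt{2\pi}}\,e^{-\frac12 z^2}\,dz = \frac{1}{\sqrt{2\pi}}\int_{0}^{\infty} e^{-u}\,du = \frac{1}{\sqrt{2\pi}} \qquad (\text{since } z\,dz = du),$$
and this is definitely finite. By symmetry, the negative part is $-\frac{1}{\sqrt{2\pi}}$. That's all we need to conclude that $E(Z) = 0$.

c. Find $E(Z^2)$, $\operatorname{Var}(Z)$, and $\sigma_Z$.

For $E(Z^2)$ we want
$$E(Z^2) = \int_{-\infty}^{\infty} z^2\,\frac{1}{\sqrt{2\pi}}\,e^{-\frac12 z^2}\,dz.$$
This would be very hard, except that we have those wonderful formulas left over from part a, which give us
$$E(Z^2) = \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty} z^2 e^{-\frac12 z^2}\,dz = \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty} e^{-\frac12 z^2}\,dz = 1.$$
It follows that $\operatorname{Var}(Z) = E(Z^2) - E(Z)^2 = 1$ and $\sigma_Z = 1$. The standard normal distribution has mean zero and standard deviation 1.

6. The Gamma Function.

The “gamma function” is defined by
$$\Gamma(x) = \int_{y=0}^{\infty} y^{x-1} e^{-y}\,dy \quad \text{for every } x > 0.$$
(The integral converges only for $x > 0$. The gamma function can be extended by other means to negative non-integer values of $x$ and even to complex values of $x$, but we only care about positive real values.)

(Integration by parts was good for problem 5, but it's even better for anything involving gamma functions.)

a. Show that $\Gamma(1) = 1$.
$$\Gamma(1) = \int_0^\infty y^{1-1} e^{-y}\,dy = \int_0^\infty e^{-y}\,dy = 1.$$

b. Show that $\Gamma(2) = 1$.
$$\Gamma(2) = \int_0^\infty y^{2-1} e^{-y}\,dy = \Big[-y\,e^{-y}\Big]_{y=0}^{\infty} + \int_0^\infty e^{-y}\,dy \ \text{(by parts)} = 0 + 1 = 1.$$

c. Show that $\Gamma(3) = 2$.
$$\Gamma(3) = \int_0^\infty y^{3-1} e^{-y}\,dy = \Big[-y^2 e^{-y}\Big]_{y=0}^{\infty} + \int_0^\infty 2y\,e^{-y}\,dy \ \text{(by parts)} = 0 + 2\int_0^\infty y\,e^{-y}\,dy = 2.$$

d. Graph $\Gamma(x)$ (roughly) for $0 < x \le 3$. (Hint: $\Gamma(x)$ has a unique minimum for $x > 0$.)

Sketch: $\Gamma(x) \to +\infty$ as $x \to 0^+$; it falls through $\Gamma(1) = 1$ to its minimum, about $0.886$ at $x \approx 1.46$, climbs back to $\Gamma(2) = 1$, and reaches $\Gamma(3) = 2$.

e. Show that $\Gamma(x+1) = x\,\Gamma(x)$ for all $x > 0$. (This is consistent with parts b and c, right?)
$$\Gamma(x+1) = \int_0^\infty y^{x} e^{-y}\,dy = \Big[-y^{x} e^{-y}\Big]_{y=0}^{\infty} + \int_0^\infty x\,y^{x-1} e^{-y}\,dy \ \text{(by parts)} = 0 + x\,\Gamma(x),$$
as required.

f. Show that $\Gamma(x) = (x-1)!$ if $x$ is a positive integer.

By induction on the previous result:
$$\Gamma(x) = (x-1)\,\Gamma(x-1) = (x-1)(x-2)\,\Gamma(x-2) = \cdots = (x-1)(x-2)\cdots(1)\,\Gamma(1) = (x-1)!.$$
So the gamma function is a generalization of factorials.

7. The Gamma Distribution.

Let $a > 0$ and $b > 0$. (We need $b > 0$ so that $e^{-by}$ decays and the density can integrate to 1.) A random variable $Y$ has a gamma distribution with parameters $a$ and $b$ if it has the density function
$$f(y) = [\text{constant}]\; y^{a-1} e^{-by} \quad \text{for } y \ge 0 \text{ only!}$$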
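Problem 7 leans on the gamma-function facts from problem 6. As a hedged numerical cross-check of parts a through f (not part of the original solutions), this Python sketch uses the standard library's `math.gamma` and locates the minimum from part d with a golden-section search; the helper `golden_min`, the search bracket $[1, 2]$, and the tolerance are illustrative assumptions.

```python
import math

# Parts a-c and f: Gamma(n) = (n-1)! for positive integers n.
for n in range(1, 8):
    assert math.isclose(math.gamma(n), math.factorial(n - 1))

# Part e: the recurrence Gamma(x+1) = x * Gamma(x) at a few non-integer points.
for x in (0.3, 1.5, 2.7):
    assert math.isclose(math.gamma(x + 1), x * math.gamma(x))

def golden_min(f, lo, hi, tol=1e-10):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    r = (math.sqrt(5) - 1) / 2  # about 0.618
    c, d = hi - r * (hi - lo), lo + r * (hi - lo)
    while hi - lo > tol:
        if f(c) < f(d):
            # Minimum lies in [lo, d]; old c becomes the new d.
            hi, d = d, c
            c = hi - r * (hi - lo)
        else:
            # Minimum lies in [c, hi]; old d becomes the new c.
            lo, c = c, d
            d = lo + r * (hi - lo)
    return (lo + hi) / 2

# Part d: Gamma(1) = Gamma(2) = 1 and Gamma dips below 1 in between,
# so the unique minimum for x > 0 is bracketed by [1, 2].
x_min = golden_min(math.gamma, 1.0, 2.0)
print(f"minimum near x = {x_min:.4f}, Gamma(x) = {math.gamma(x_min):.4f}")
```

The known minimum is at $x \approx 1.4616$, where $\Gamma(x) \approx 0.8856$; because $\Gamma$ is very flat there, floating-point noise limits the located $x$ to roughly eight correct digits.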
(Note that if $a = 1$, this is just an exponential distribution with mean $1/b$.)

a. Show that the constant has to be $b^a/\Gamma(a)$.

Let's integrate the function without the constant. First, substitute $u = by$:
$$\int_{0}^{\infty} y^{a-1} e^{-by}\,dy = \int_{0}^{\infty} \left(\frac{u}{b}\right)^{a-1} e^{-u}\,\frac{1}{b}\,du \quad (\text{since } du = b\,dy)$$
$$= \frac{1}{b^a}\int_{0}^{\infty} u^{a-1} e^{-u}\,du = \frac{\Gamma(a)}{b^a},$$
since that last integral is just $\Gamma(a)$ by definition. So the constant has to be the reciprocal of this last expression, hence $b^a/\Gamma(a)$.

b. What is $E(Y)$?
$$\begin{aligned}
E(Y) &= \int_{0}^{\infty} y\,\frac{b^a}{\Gamma(a)}\,y^{a-1} e^{-by}\,dy = \frac{b^a}{\Gamma(a)}\int_{0}^{\infty} y^{(a+1)-1} e^{-by}\,dy \\
&= \frac{b^a}{\Gamma(a)}\cdot\frac{\Gamma(a+1)}{b^{a+1}} \quad \text{(by part a, with } a+1 \text{ in place of } a) \\
&= \frac{a}{b} \quad \text{since } \Gamma(a+1)/\Gamma(a) = a \text{ by problem 6e.}
\end{aligned}$$

Someday we'll use this result to estimate rates of occurrence for events. The parameter $a$ will represent the number of events we have seen, and the parameter $b$ will be the length of time we have been watching. The mean of this gamma distribution, $a/b$, will turn out to be our estimate of the average number of events per unit time. Of course, that is what we would have guessed without gamma distributions, but we'll get a lot more from the gamma distributions.

(end)
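As a hedged numerical check of parts a and b (not from the original solutions), the Python sketch below integrates the density with the constant $b^a/\Gamma(a)$ using composite Simpson's rule and compares the resulting mean with $a/b$. The helper names `gamma_pdf` and `simpson`, the parameter pairs, and the cutoff `upper` are illustrative assumptions; the sketch restricts itself to $a \ge 1$ so the integrand stays finite at $y = 0$.

```python
import math

def gamma_pdf(y, a, b):
    """Gamma density with shape a and rate b: b**a / Gamma(a) * y**(a-1) * exp(-b*y)."""
    if y < 0:
        return 0.0
    return b ** a / math.gamma(a) * y ** (a - 1) * math.exp(-b * y)

def simpson(f, lo, hi, n=20000):
    """Composite Simpson's rule on [lo, hi] with n (even) subintervals."""
    h = (hi - lo) / n
    s = f(lo) + f(hi)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * f(lo + i * h)
    return s * h / 3

for a, b in [(1.0, 1.0), (2.0, 0.5), (3.5, 2.0)]:
    upper = (a + 40 * math.sqrt(a)) / b  # many standard deviations into the right tail
    total = simpson(lambda y: gamma_pdf(y, a, b), 0.0, upper)
    mean = simpson(lambda y: y * gamma_pdf(y, a, b), 0.0, upper)
    print(f"a={a}, b={b}: total={total:.6f}, mean={mean:.6f}, a/b={a / b:.6f}")
```

Each printed line should show a total close to 1 and a mean close to $a/b$. For shapes $a < 1$ the density blows up at $y = 0$, so a quadrature method designed for endpoint singularities would be a better choice there.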