Significance results aren't the end of an experimenter's work


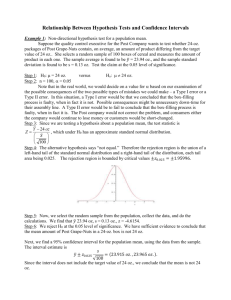

Significance

Sometimes we observe a surprising event or sequence of events. For example, you flip a coin ten times and get ten heads. You ask yourself: “Is the result significant?” That is, does this result indicate that the coin is biased, or did you just observe an unlikely (but not impossible) run? Since the chance of getting 10 consecutive heads with a fair coin is 1 in 2¹⁰ = 1,024, it seems fair to conclude: “Either the coin is unfair, or the coin is fair and I’ve just seen an unusual run of heads.”

Let H = the coin is fair. We call this a “statistical hypothesis” about the coin. Given the result of 10 consecutive heads, could we conclude that the probability of H is very low, say 5%? No. From the frequency perspective, this does not make sense! Either the coin is fair (has a symmetrical structure) or it isn’t. There is no probability about it. We could conclude: either the coin is unfair, or it is fair and an unlikely event occurred. Alternatively: if H is true, then an unlikely event occurred.

Recall the example from last class: we found that the probability of getting between 1079 and 1089 Amazing cars that don’t require a tune-up was 99%. Let’s say the government testers found that only 1050 cars went 100,000 km without requiring a tune-up. What does this mean? If the hypothesis that 99% of Amazing cars are reliable is true, then an event has occurred that has a probability < 1%. We have found a significant result. We say that the government data is significant at the 1% level.

We use frequency-type probabilities to come up with significance tests, i.e. to tell us when observed results are meaningful. How do we do this? Design a test. Example: does this new drug, X, help alleviate allergy symptoms?
1. Select two groups: one gets X, the other doesn’t.
2. H = X has no effect (the “null hypothesis”).
3. Measure the results of the two groups (this calls for some decisions).
4. Divide the results into Big (i.e. X helps a lot) and Little (no real difference).
5. Choose these categories so that there is a low probability (according to your model) of getting Big (often 1% is used).
6. Therefore, if the results fall into Big, they are significant (at the 1% level).

Let’s worry about #5 above for a moment. There is a range of possible results your experiment might yield. We divide the possible data into two groups, S (significant) and N (not significant), such that:
1. Pr(S/H) < Pr(N/H). This is clearly important, for otherwise the result wouldn’t be useful in moving us away from the null hypothesis.
2. Pr(S/H) = p (usually 1% or 5%).
What does all this mean? If you’re a medical experimenter, you need to make some choices about how to design your test for X.

The experimenter records the data for the two groups (with and without X). Assume she decides to measure how often the subjects in each group sneeze per day. She designs her test so that:
1. The probability of getting a certain number of sneezes is 1% if the null hypothesis is true.
How do experimenters do this? Logicians aren’t in the business of test design. However, we can analyze the reasoning behind reported results.

Let’s say that our researcher finds that all subjects who got X sneezed n times per day. She tells us that the significance level (or “p-value”) of this result is 1%. What does this mean?
NOT: “There is a 1% chance that the null hypothesis is true.” Either X has an effect or it doesn’t.
Rather: if H is true, then, using a certain statistic (sneeze frequency) that summarizes data from an experiment like this, the probability of obtaining the data we obtained is 1%. That is: either H is true and something with a probability < 1% happened, or H is false.

A simple example: H = this coin is fair. Experiment: I toss it seven times. Result: seven heads. There are 2⁷ = 128 possible outcomes, all equally likely if H is true. Only one of these outcomes is seven consecutive heads. So, if H is true, I got a result that had a < 1% (1/128 = 0.78125%) probability of happening.
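The coin arithmetic above can be checked exactly. The following is a minimal Python sketch, assuming H (a fair coin, independent tosses) and taking “significant at level 0.01” to mean a probability under H below 0.01; the function names prob_at_least_heads and is_significant are hypothetical, introduced only for illustration. It computes the probability under H of a result at least as extreme as the one observed; for an all-heads run that is just the single all-heads outcome.

```python
from fractions import Fraction
from math import comb


def prob_at_least_heads(n_tosses: int, k_heads: int) -> Fraction:
    """Pr(at least k heads in n tosses | H: the coin is fair), computed exactly."""
    # Count the outcomes with k or more heads; each of the 2**n outcomes is equally likely under H.
    favourable = sum(comb(n_tosses, k) for k in range(k_heads, n_tosses + 1))
    return Fraction(favourable, 2 ** n_tosses)


def is_significant(p_value: Fraction, level: float = 0.01) -> bool:
    """Call the result significant when it is this improbable under H."""
    return p_value < level


for n in (7, 10):
    p = prob_at_least_heads(n, n)  # every one of the n tosses comes up heads
    print(n, p, float(p), is_significant(p))
# prints:
# 7 1/128 0.0078125 True
# 10 1/1024 0.0009765625 True
```

Exact fractions avoid rounding, so the seven-heads case reproduces the 0.0078125 figure in the text. Note that six or more heads out of seven (8/128 = 0.0625) would not be significant at the 1% level.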
Accordingly, I report: “Either H is false, or H is true and something unusual (Pr < 1%) happened by chance.” I say that the “p-value” or “level of significance” is 0.01 (strictly, 0.0078125).

Significance results aren’t the end of an experimenter’s work. They leave a great deal to be done:
Causation vs. correlation: just because two things happen together, it does not follow that one caused the other.
Which causal processes? We can know a result is significant without knowing how it was produced.
Common cause? We need to find out whether one thing caused the other (X and lower sneezing) or whether the two are joint results of something else.
The significance results do give us a common measure for different experiments, but that is only the beginning of the story.

Power

Significance results don’t necessarily change your beliefs. For example, if nothing significant is observed, you may still reject the null hypothesis and keep looking. The Neyman-Pearson framework is a method that leads to a definite decision, one or the other of:
Reject a hypothesis H if the outcome falls in R.
Accept H if the outcome falls in A.
They emphasize two kinds of error:
Rejecting H when it is true (type I).
Accepting H when it is false (type II).
N & P say we should minimize both types of error.

To do this you must design a test that:
1. Keeps Pr(R/H) low while
2. Maximizing Pr(R/~H).
Number two is called the power of the test. N & P want to maximize power. A significance test can help with 1. The power is harder to determine because it is not always clear what it means for a statistical hypothesis to be false: there are very many ways this might be the case, many of which could elude us until science progresses. We just focus on the logical point: N-P tests try to maximize the probability of rejecting a false hypothesis while minimizing the probability of rejecting a true hypothesis.

Confidence & Inductive Behaviour

We often want to draw conclusions about a whole population based on a sample.
What we want to know is how reliable conclusions from samples to wholes are. Interval estimates:
1. The number of males in this room is between 20 and 50.
2. The proportion of female students is between 0.45 and 0.55.
3. Etc.
How reliable are estimates like this? The Bayesian has a quick answer: sample the population and use Bayes’ rule to calculate the belief-type probability that the number of males in this room is between 20 and 50.

But on the frequency perspective, either the number of males is between 20 and 50 or it isn’t. There is no frequency (and, hence, no probability) about it. What can the frequency-type theorist say about the reliability of interval estimates?

Opinion Polls
1. We think of polls as Bernoulli trials: sampling with replacement, with constant probability p.
2. A property G divides the population into the Gs and the ~Gs; the proportion of Gs gives p.
When p is known, Bernoulli’s Theorem tells us that for any small margin of error, there is a high probability that a large sample from the population will have a proportion of Gs close to p.

We can use Normal Fact III (from chapter 17 of the book) as follows:
The probability that the proportion of Gs in our sample is within σ/n of p is 0.68.
The probability that the proportion of Gs in our sample is within 2σ/n of p is 0.95.
The probability that the proportion of Gs in our sample is within 3σ/n of p is 0.99.
But we want to go from our sample to p. How do we do this?

Recall that σ = √(p(1−p)n). In chapter 17 (exercises) we see that the margin of error is largest when p = ½. In that case, σ = √n/2, so σ/n = 1/(2√n). We conclude that:
The probability is at least (worst case) 0.68 that the proportion, s, of Gs in the sample is within 1/(2√n) of p.
The probability is at least 0.95 that the proportion, s, of Gs in the sample is within 1/√n of p.
The probability is at least 0.99 that the proportion, s, of Gs in the sample is within 3/(2√n) of p.
So, if n = 10,000, then there is at least a 95% probability that the proportion s of Gs in the sample is within 1/√10,000 = 1/100 = 1% of p (no matter what p is).

This allows us to go from samples to wholes as follows. Let s be the proportion of Gs in your sample of 10,000. You know that:
There is at least a 95% probability that s is within 1% of p.
But if that is the case, then:
There is at least a 95% probability that p is within 1% of s.
Remember: p is fixed. This is just a fact about the population under study. What can vary is s, the proportion of Gs found in a sample.

So you sample 10,000 people and find that 25% of them are Gs. Do you conclude: “There is a 95% probability that the proportion of Gs lies between 0.24 and 0.26”? No. From the frequency perspective this is nonsense: either the proportion of Gs is between 0.24 and 0.26 or it isn’t. What should we say?
1. The interval 0.24-0.26 is called a confidence interval. It is an interval estimate of the proportion of Gs.
2. The 95% figure is our confidence in the interval estimate. That is, we made the estimate by a method that gives the correct interval 95% of the time.

A confidence statement has the form: on the basis of our data, we estimate that an unknown quantity lies in an interval. This estimate is made according to a procedure that is right with probability at least 0.95.
Example (p. 233): 1026 residents were polled and 38% favoured a complete ban on smoking in restaurants. The result is considered accurate to within three percentage points, 19 times out of 20. What this means:
1. We estimate that the proportion of residents who favour a ban lies in the interval 0.35-0.41.
2. The method used to get this estimate is right 95% of the time.

Why choose plus or minus 3%? Recall that the worst-case 95% margin of error is 1/√n = 1/√1026 ≈ 0.031 ≈ 3%. The pollsters chose their numbers so that the confidence level would be 95% with a 3% margin of error. We can calculate the 99% confidence interval as well.
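The intervals for the smoking-ban poll follow from the worst-case half-widths 1/(2√n), 1/√n and 3/(2√n). Here is a minimal Python sketch, assuming those worst-case formulas and the poll figures from the example (s = 0.38, n = 1026); the names MULTIPLIER and worst_case_interval are hypothetical, chosen only for illustration.

```python
import math

# Worst-case (p = 1/2) half-widths, as multiples of 1/(2*sqrt(n)):
# 1 for the 68% level, 2 for 95%, 3 for 99%.
MULTIPLIER = {"68%": 1, "95%": 2, "99%": 3}


def worst_case_interval(s: float, n: int, level: str) -> tuple[float, float]:
    """Return (s - m, s + m) with m = MULTIPLIER[level] / (2*sqrt(n))."""
    m = MULTIPLIER[level] / (2 * math.sqrt(n))
    return s - m, s + m


for level in ("68%", "95%", "99%"):
    low, high = worst_case_interval(0.38, 1026, level)
    print(level, round(low, 3), round(high, 3))
```

The 95% line should come out at roughly 0.35 to 0.41, the interval quoted by the pollsters; the 99% line is wider and the 68% line narrower, at the same sample size.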
Using the worst-case bound 3/(2√n), the 99% margin of error is 3/(2√1026) ≈ 0.047, giving a 99% confidence interval of 0.38 ± 0.047, roughly 0.33-0.43. The 68% margin of error is 1/(2√1026) ≈ 0.016, giving a 68% confidence interval of roughly 0.36-0.40.

Behaviour or inference?

Neyman denies that we ever make inductive inferences such as: “The probability of x is y.” All we can do, he says, is make frequency-type claims about our methods (confidence intervals): “If I use this procedure repeatedly, I will be right 68/95/99% of the time.” Or: “If I reject H, I am using a method that will only lead me astray 1% of the time.”
BUT: why isn’t this an inference? It seems that you infer that H is false. It is good to get clear on how the inferences work, but we needn’t be dogmatic.

THE PROPENSITY INTERPRETATION

We have spent some time examining the frequency theory of probability. It is an important perspective on probability because it allows us to view probability objectively, as a measure of occurrences in the world instead of a personal assessment. Recall Von Mises:
The essentially new idea which appeared about 1919…was to consider the theory of probability as a science of the same order as geometry or theoretical mechanics…just as the subject matter of geometry is the study of space phenomena, so probability theory deals with mass phenomena and repetitive events.
This perspective is useful in dealing with any area where we need to draw conclusions from statistics: medicine, demographics, genetic studies, etc.

Single cases

Consider an event, E. E might be a flip of a coin or the decay of a radioactive atom. What is the objective probability of E? Frequency theorists typically deny that this question has an answer. We can talk about the probability of heads in a sequence of tosses, or the probability of decay in a group of atoms, but that is all. Here is Von Mises again:
We can say nothing about the probability of death of an individual even if we know his condition of life and health in detail. The phrase ‘probability of death’, when it refers to a single person has no meaning at all for us.
This is one of the most important consequences of our definition of probability.
It has no meaning, of course, because he defines probability as a relative frequency of events.

Dissatisfaction

To many philosophers this seems unsatisfactory. For instance, Popper thought quantum mechanics requires an objective notion of probability that also applies to single cases, e.g. “the probability that this atom will decay at this time is p.” Suppose some new substance is discovered, but there is only a small quantity of it in the universe, say one gram. Suppose a person, S, ingests all of the substance. It seems to make sense to say “there is a probability it will kill him.”

A possible response

Equate the probability of E with the frequency of E in the collective/sequence. Popper’s counterexample: we toss two dice, A and B.
A is ‘loaded’: it is weighted so that 5 comes up ¼ of the time.
The material in B is uniformly distributed.
We toss the two dice as follows: A is thrown many times, B only occasionally. Therefore the frequency of 5s in the sequence will be about ¼. Now we are about to toss B, a single event. What is the probability of 5? If we equate it with the frequency in the collective, the answer is ¼. But B is not loaded, so the probability should be 1/6.

Dispositions

Because of cases such as these, philosophers introduced the propensity interpretation of probability. On this view, the probability of E occurring is a measure of the propensity of a given situation to give rise to E. A propensity is a disposition or tendency for something to occur (as we have seen). Some points to note:
Something can have the disposition p to X even if it never in fact X’s, or X’s only once.
So, if objective probabilities are dispositions, we can meaningfully assign probabilities to single cases.

Properties of situations

Suppose we toss a fair coin. Should the propensity theorist say the coin has a propensity of 0.5 to come up heads? No. Suppose we toss it on a surface that has a number of slots.
Now there is a real probability of the coin landing on its edge. In this situation, the probability of heads is less than 0.5, even though the coin is fair. Propensities are properties of situations or experimental set-ups: a coin might have a propensity in situation S to come up heads 50% of the time. Propensities are relations between objects and set-ups.

Propensities and frequencies

Of course, there is a relation between propensities and frequencies. If a situation, S, has a propensity p to produce event E, then if we repeatedly bring about S, the frequency of Es should be close to p. This is one way in which we can test a propensity claim: by repeating the situation. Question: if we can’t repeat the situation, how could we test a propensity claim?

The nature of dispositions

There is some philosophical controversy over what exactly a disposition is. Some think of dispositions as true counterfactual conditionals: if F were the case, then G would be the case. E.g.: Johnny is disposed to get angry when punched = if Johnny were punched, then he would become angry. Others think of them as a unique property that exists in an object or situation. This is something to think about.

Assigning propensities

Suppose S is a 40-year-old Canadian. We know that z% of 40-year-old Canadians die before reaching 41. So, we say the propensity that S dies within a year is z%. But S is also a smoker. We know that x% of 40-year-old Canadian smokers die within the year. Should the propensity that S dies within a year be z% or x%? Response: use the narrowest reference class available. Since 40-year-old Canadian smokers are a subset of 40-year-old Canadians, the answer is x%.

Problem

A slightly different example: as before, x% of 40-year-old Canadian smokers die within the next year, but y% of 40-year-old Canadian recreational swimmers die within the next year. Now, suppose S both smokes and swims. Which reference class do we use?
Each is equally narrow. There seems to be no way to uniquely assign a propensity.

Objectively based vs. objective

Recall the frequency principle from a few weeks back: it tells you to equate the single-case probability of an event with the frequency of that event in the long run. From a frequency dogmatist’s point of view, the single-case probability has no meaning, but we might use this as a useful guideline. Some people have suggested that propensity assignments are like this. They deny that there is any objective probability to single events, i.e. all such assignments are subjective. But you can base those assignments on frequency-type evidence. Hence, single-case assignments can be objectively based but are not objective.

Reference classes again

Hence, on this view it is no surprise that we come up against the reference class problem. Where there is insufficient frequency-type evidence, there is no objective basis for a single-case probability assignment. But perhaps there is a way around this. Recall S, who is a member of two equally narrow reference classes with probabilities x and y. But suppose we have further information about S in particular, for example: S is from a long line of smokers, none of whom have died of cancer before the age of 80. We can use this information…

Non-statistical information

This gives us reason to favour y over x as the probability of S dying. Since S in particular seems unlikely to die from smoking this year, we seem justified in choosing the recreational swimmers’ class in assigning our probability. While this procedure will be subjective and imprecise, it is reasonable and can help us assign propensities to single cases.

Summing up

Still, in simple set-ups it seems reasonable to suppose that there is an objective probability of an event occurring in a single case, e.g. tossing a die, spinning a roulette wheel, etc. It seems, however, difficult to accept objective propensities in more complex cases.
Still, we might want to assign probabilities to such cases. Clearly there is more work to be done…

Alternate theories

I. State of the universe (Miller): the state of the entire universe at T has a propensity to bring about a single event E. Objection: such a state isn’t repeatable, so how can we test for such properties?
II. Relevant conditions (Fetzer): the set of conditions causally related to E has a propensity to bring about the single event E. Objection: it is not easy to determine all causally relevant conditions.
III. Long-run propensity (Gillies): propensities are properties of repeatable conditions that determine the frequencies of E in the long run. Objection: no assignment for single cases.

Homework

Do the exercises at the end of chapters 18 & 19. Read the sections on inference vs. behaviour again. This will come in handy later.