Demonstrating Your Impact on Campus: Program Evaluation 101

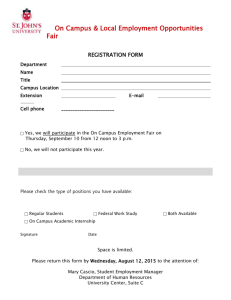

Ariana Bennett, MPH

What Is Program Evaluation?

Program evaluation is the process of determining the value or worth of a program, activity, or event. It differs from many other types of research because its purpose is to inform action: you put your findings into practice to improve what you do.

Why Is Program Evaluation So Important?

Evaluation is how you can tell whether your programs are having the positive effects you intend! Collecting information from your chapter members, event participants, and other partners can tell you how many students you are reaching, how your events have affected their knowledge, attitudes, and beliefs, and how you can improve your efforts. Having evidence that your chapter’s activities are effective can be crucial when you are petitioning for administrative support or applying for funding.

How Do We Start?

1. Identify key stakeholders
- Stakeholders are individuals or groups that affect or are affected by your program. They are the people who influence your work and with whom you have relationships. On your campus, stakeholders might include the student body, chapter members and leaders, school administrators, campus health and mental health services, and the student government.
- These stakeholders care whether and how your programs work. They may be key partners in helping you decide what aspects of your program to evaluate, or they may be the very people you want to gather evaluation data from.

2. Be clear on the goals of the evaluation
- Before you can decide what tactics to use to evaluate your programs, it’s important to figure out what you want to know. For example, do you want to find out about your visibility on campus? Or would you rather know how a conference you hosted increased participants’ knowledge or improved their attitudes about mental health? Answering these questions will help guide the rest of your evaluation.

3. Stay focused on your chapter’s mission, objectives, and charter
- Keeping your chapter’s goals in mind helps you interpret your evaluation results, so you can see where your efforts have been successful and where there is room for improvement.

Basic Types of Evaluation

There are two main types of program evaluation, quantitative and qualitative, each with its own set of methods and measures.

1. Quantitative
- Quantitative evaluation consists of numeric observations that are standardized. This can take the form of a simple count (e.g. number of participants) or a frequency (e.g. percentage of participants who rated the event as “excellent”), or it can mean that a participant’s responses are converted from words into numbers (e.g. on a “yes/no” question, each “yes” gets coded as “1” and each “no” gets coded as “2” so that calculations can be done).
- The most common quantitative method of program evaluation is the survey, which is a series of closed-ended questions that a participant is asked to answer. Closed-ended means that there are limited answers to choose from, and everyone gets the same choices. Surveys are a great way to get information from a large number of people in a relatively short time. They are especially useful for getting information on people’s knowledge, attitudes, behaviors, and socio-demographics.

2. Qualitative
- Qualitative evaluation consists of observations that do not take the form of numbers. This type of evaluation can provide insight into the context, process, and meaning of participants’ experiences. Compared to quantitative evaluation, qualitative evaluation often provides a deeper look into HOW and WHY things happen, as opposed to WHAT happened.
- The two most common qualitative methods of program evaluation are in-depth interviews and focus groups.
Both of these methods use open-ended questions (questions with no preset answer categories) to allow participants to tell their stories about the topic at hand.
  o In-depth interviews are one-on-one interviews conducted with a participant. They allow one person to share a lot.
  o Focus groups are group interviews in which six to ten participants gather to answer questions and talk about a topic. Though each person gets to share less than in an in-depth interview, the interaction between group members is an important part of the data.

How Can We Use Evaluation on Our Campus?

There are lots of ways! You can evaluate many different aspects of your efforts on campus. Some are more process-oriented, such as examining how the planning of an event went. Others are more outcome-oriented, such as giving a pre- and post-test on attitudes about depression before and after a panel discussion to see the effects of the activity. Program evaluation covers a broad spectrum, so no matter how much experience, time, or resources you have, there are ways to work evaluation tactics into what you do.

- Here are some ideas for steps to start with:
  o Keep track of attendance at all your events. This will also give you the information you need to find out how many students come to more than one event!
  o Get attendees’ contact information whenever possible. Even if you don’t have the resources to develop a survey now, if you have their email addresses on hand, you’ll always have the option of sending them an online survey later.
  o Use social media sites like Facebook and Twitter. These provide an easy way to track your visibility by counting how many people “like” or “follow” you. They can also be a tool for disseminating surveys.
  o Set aside time at the end of chapter meetings or events to open the floor for people to share what they liked about the day’s activities and what they would have changed.
  o Design a brief “satisfaction survey” to pass out after events to see how much people liked the activities, or create a survey using www.surveymonkey.com (it’s free!) and email it to attendees after your event.
  o Design a brief questionnaire to give to people both before and after an event to see whether their knowledge and/or attitudes changed based on their participation.

Most importantly, keep up the great work and have fun!
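For chapters that keep their sign-in sheets and survey answers in a spreadsheet, the quantitative ideas in this handout (attendance counts, the share of “excellent” ratings, yes/no coding, and before/after questionnaires) can be tallied with a few lines of Python. The sketch below is only an illustration under stated assumptions: the function names, the data layout, and the sample values are all made up for this example, and the only detail taken from the handout is the coding of “yes” as 1 and “no” as 2.

```python
from statistics import mean

# Coding scheme from the handout's own example: "yes" -> 1, "no" -> 2.
YES_NO_CODES = {"yes": 1, "no": 2}


def repeat_attendees(sign_ins):
    """Return the emails that appear at more than one distinct event.

    sign_ins is a list of (event_name, email) pairs (a hypothetical layout).
    """
    events_by_email = {}
    for event, email in sign_ins:
        key = email.strip().lower()  # treat "A@x.edu " and "a@x.edu" as one person
        events_by_email.setdefault(key, set()).add(event)
    return sorted(e for e, events in events_by_email.items() if len(events) > 1)


def percent_with_rating(ratings, target="excellent"):
    """Return the percentage of ratings equal to target (0.0 if no ratings)."""
    if not ratings:
        return 0.0
    hits = sum(1 for r in ratings if r.strip().lower() == target)
    return 100.0 * hits / len(ratings)


def code_yes_no(answers):
    """Convert "yes"/"no" answers into the numeric codes above."""
    return [YES_NO_CODES[a.strip().lower()] for a in answers]


def mean_change(pre_scores, post_scores):
    """Average (post - pre) score for people who answered both questionnaires.

    Both arguments map a participant id to a numeric score.
    Returns None when nobody completed both.
    """
    matched = pre_scores.keys() & post_scores.keys()
    if not matched:
        return None
    return mean(post_scores[p] - pre_scores[p] for p in matched)


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    sign_ins = [
        ("panel", "a@example.edu"),
        ("panel", "b@example.edu"),
        ("film night", "A@example.edu "),
        ("film night", "c@example.edu"),
    ]
    print("Repeat attendees:", repeat_attendees(sign_ins))
    print("Percent excellent:",
          percent_with_rating(["Excellent", "good", "excellent", "fair"]))
    print("Coded answers:", code_yes_no(["Yes", "no", "yes"]))
    print("Mean change:", mean_change({"p1": 2, "p2": 3}, {"p1": 4, "p2": 3}))
```

Comparing only participants who completed both questionnaires keeps the before and after groups the same. A positive average describes how scores moved; on its own it does not show that the event caused the change.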