Homework assignment #4


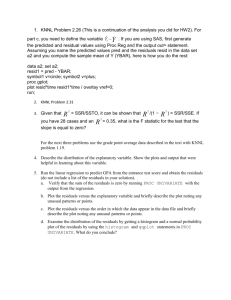

Homework assignment #2 Spring 06.

GOAL: To perform a complete multiple regression analysis, from data screening to model validation. The emphasis of this exercise is on verifying regression assumptions. Use the Spartina.jmp data in the examples web page. These data are explained and used extensively in the RP book.

Regress biomass (bmss) on pH and K. Open spartina.jmp. In JMP select Analyze -> Fit Model. In the Select Columns pane of the Fit Model window select bmss and click on Y. Select pH and click on Add. Select K and click on Add. In the drop-down menu for Emphasis select Minimal Report. Click on Run.

1. Check the ratio of observations to # of X's. Comment. A bare minimum of 6 observations per predictor is necessary for any model to be useful. Some authors recommend 30-50 observations per predictor. [5]

For this analysis there are 45/2 = 22.5 observations per predictor. This number is sufficient and falls between the extremes of the recommendations. At the bare minimum of 6 observations per predictor, 45 observations allow a maximum of 7 predictors in the model (45/6 = 7.5).

2. Save the Hats, Residuals, Predicteds, Cook’s D, and studentized residuals. Request a plot of the residuals vs. the predicted values through the pop-down menu at the red triangle at the top of the output window. Identify outliers, if any, in the Y dimension based on a critical t value for α = 0.005. It is not necessary to use the deleted studentized residuals; use the regular studentized residuals. [20]

After saving the studentized residuals, I created a new column in the data table and added the following formula. This column shows the P-value for each studentized residual. Only one observation is identified as an outlier in Y.
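The P-value column can also be sketched outside JMP. This is a minimal illustration, not the JMP formula used in the answer. It assumes a two-sided test, takes the error degrees of freedom as 45 - 3 = 42 (45 observations, two predictors plus an intercept), and uses made-up studentized residuals. The function names are hypothetical, and the t distribution is integrated numerically so that only the Python standard library is needed.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

def two_sided_p(t, df, steps=2000):
    """Two-sided P-value P(|T| >= |t|), using Simpson's rule on [0, |t|]."""
    a = abs(t)
    if a == 0:
        return 1.0
    h = a / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # integral of the density from 0 to |t|
    return max(0.0, 1.0 - 2.0 * central_half)

N_OBS, N_PARAMS = 45, 3      # n and p as used in this homework
DFE = N_OBS - N_PARAMS       # error degrees of freedom
ALPHA = 0.005

# Hypothetical studentized residuals, NOT the values from the Spartina fit.
studentized = [0.41, -1.27, 2.05, -3.60, 0.88]
p_values = [two_sided_p(r, DFE) for r in studentized]

# Indices of observations whose P-value falls below alpha.
flagged = [i for i, p in enumerate(p_values) if p < ALPHA]
print(flagged)
```

Comparing each P-value with α is equivalent to comparing the absolute studentized residual with the critical t value for the same α and degrees of freedom.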
The observations that have a P-value lower than 0.005 can be pinpointed by selecting Rows -> Row Selection -> Select Where… Remember, the t-test and the critical t values are based on the degrees of freedom of the residual or error. In this exercise we use two predictors, so for a multiple linear regression there are 3 parameters. In the KNN textbook, p = number of parameters. Thus, p = 3 and dfe = 42.

3. Identify outliers in the X dimension, if any. Use the JMP Multivariate platform for this step. Include the two predictors in the analysis. Use the jackknifed Mahalanobis distance (D), and write down the equation JMP uses for the critical value. This is found in the Statistical Details in the Statistics and Graphics Guide pdf that comes with the software. The file can also be downloaded from the JMP website. We use D because the predictors are random variables. [20]

Using the Multivariate platform as indicated in the figure below, I obtained the jackknifed distances. The plot of the jackknifed distance has a horizontal dashed line. Four observations are above the line and thus are identified as outliers in the X dimension. In this case, it would be appropriate to use a Bonferroni correction for the detection of outliers, or to use an alpha level smaller than the default of 5% used by JMP to draw the line. The critical value of D for alpha = 0.005 is 3.47178.

4. If the X’s were not random variables, we would use the Hats to determine if any combination is too far from the rest. The average of the hats is p/n, the number of parameters divided by the number of observations. Thus, if hii > 2p/n, we label the observation as an outlier in the X dimension. Such observations can have too much leverage on the regression. Determine if there are any X-outliers assuming the X’s are fixed (p = m + 1). [10]

p = 3, so the critical value for hii is 6/45 = 0.133. Based on this, the 5 observations with the highest predicted values of biomass are outliers in the X dimension.
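The hat rule can be sketched in a few lines. This is a minimal illustration under stated assumptions: the two predictor columns are synthetic stand-ins for pH and K (not the Spartina data), one deliberately extreme row is appended, and the helper names `solve` and `hat_values` are hypothetical. The hat values are the diagonal of H = X (X'X)^-1 X', computed here with the standard library only.

```python
import random

def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting: bring the largest entry of the column to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * w for v, w in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def hat_values(rows):
    """Diagonal of the hat matrix for a design matrix with an intercept."""
    X = [[1.0] + list(r) for r in rows]
    p = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(p)] for i in range(p)]
    # h_ii = x_i' (X'X)^-1 x_i
    return [sum(xi * vi for xi, vi in zip(x, solve(xtx, x))) for x in X]

rng = random.Random(0)
# 44 ordinary synthetic rows plus one extreme row (index 44).
rows = [(rng.uniform(3, 7), rng.uniform(400, 1200)) for _ in range(44)]
rows.append((9.5, 2500.0))

n, p = len(rows), 3              # p = m + 1 parameters, as in the homework
hats = hat_values(rows)
threshold = 2 * p / n            # 6/45 = 0.133 for n = 45
flagged = [i for i, h in enumerate(hats) if h > threshold]
print(round(threshold, 3), flagged)
```

The hat values always sum to p, which is why their average is p/n and the cutoff is set at twice that average.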
Four of these five are the same observations identified as outliers in the X dimension by the jackknifed Mahalanobis distance.

5. Determine if any of the outliers is influential. You are not asked to do anything about the outliers. Just determine if there are any, which they are, and whether they are influential. [10]

The critical value for Cook’s D is F Quantile(0.50, 3, 42) = 0.8016. None of the observations is influential. Once again, notice the degrees of freedom: p = 3 and dfe = n - p = 42.

6. Explain what it means to say that an observation is influential. [5]

An observation is influential if its exclusion causes major changes in the fitted parameters or in the predictions. Keep in mind that a significant change in the parameters may not lead to significant changes in the predictions if the parameter changes offset one another in their effect on the predicted values. Conversely, even if the parameter changes are not significant when taken individually, the predicted values can change significantly if the effects of those changes on the predictions are in the same direction.

7. Check for normality of the residuals with a Shapiro-Wilk test with α = 0.01. Interpret the result by writing a sentence that indicates what null hypothesis is or is not rejected and why. [10]

I used the Analyze -> Distribution platform, added the residuals to the Y, Columns box, and clicked on Run. In the Distribution window I clicked on the red triangle on the bar for Residual bmss and selected Fit Distribution -> Normal. Then I selected Goodness of Fit from the red triangle next to Fitted Normal. The probability of W being 0.9217 or smaller, given that the residuals are normal, is 0.0048. Because 0.0048 < 0.01, the Ho that the residuals are normally distributed is rejected.

8. Test correlation in errors with the Durbin-Watson test. Assume that the observations were given in the order they were collected. Interpret the result by writing a sentence that indicates what null hypothesis is or is not rejected and why.
[10]

The Durbin-Watson test is displayed in the first figure, at the bottom of the Fit Least Squares window. The probability is greater than 0.05; thus, the Ho that the autocorrelation with lag 1 is zero cannot be rejected. This indicates that we cannot reject the independence of the residuals, and therefore there is no basis to doubt it.

Please keep in mind that the inability to reject the assumption of independence does not mean that the residuals or measurements are not a “time series,” collected before and after some treatment, or over time. First, all data are generated and collected in space and time. Everything happens in space and time. Second, the simple detection of a significant presence or absence of spatio-temporal correlations provides absolutely no basis to indicate why such correlation is absent or present. What happens in reality, as in the spartina data set, is that we usually do not know the location of events in space and time, or the locations are not conducive to testing for correlation. Even if we knew the times when the data were collected, their spatial locations, or the sequence in which they were analyzed in the lab, we might or might not find autocorrelations.

9. Make sure you understand that testing for normality and testing for homogeneity of variance are two different things by creating a fictitious hand-drawn (you can use whatever method is easiest for you to produce the plot) scatter plot of residuals vs. predicted values that are clearly not normal and also clearly have homogeneous variances. [10]

The main point here is that for normality we only need the vertical axis, that is, the frequency of points for each level of e. It does not matter how those points are arranged over different values of predicted Y. Several students emphasized some sort of pattern of e over predicted Y. Although such a pattern can be there, it does not contribute to answering this question.
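One way to produce such a fictitious data set is to simulate it. The sketch below is only an illustration with invented numbers. Residuals are drawn from a uniform distribution (flat, hence clearly not normal) independently of the predicted value, so their spread is the same at every level of predicted Y. Excess kurtosis is used as a crude stand-in for a formal normality test, and the cut at 1000 is arbitrary; both are assumptions of this sketch, not the methods used in the homework.

```python
import random
import statistics

rng = random.Random(1)
n = 4000

# Invented predicted values and residuals; the residuals do not depend on
# the predicted values, so the variance is homogeneous by construction.
predicted = [rng.uniform(0, 2000) for _ in range(n)]
residuals = [rng.uniform(-300, 300) for _ in range(n)]

def excess_kurtosis(values):
    """Fourth standardized moment minus 3 (0 for a normal distribution)."""
    m = statistics.fmean(values)
    m2 = sum((v - m) ** 2 for v in values) / len(values)
    m4 = sum((v - m) ** 4 for v in values) / len(values)
    return m4 / m2 ** 2 - 3.0

# Shape of the residual distribution: only the vertical axis matters.
kurt = excess_kurtosis(residuals)   # theoretical value for a uniform is -1.2

# Spread of the residuals below and above an arbitrary cut in predicted Y.
low = [e for y, e in zip(predicted, residuals) if y < 1000]
high = [e for y, e in zip(predicted, residuals) if y >= 1000]
variance_ratio = statistics.variance(high) / statistics.variance(low)

print(round(kurt, 2), round(variance_ratio, 2))
```

A kurtosis far from 0 together with a variance ratio near 1 corresponds to a plot whose points form a flat, evenly filled horizontal band: not normal, but with homogeneous variance.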
It is possible to create all sorts of patterns and still have homogeneity of variance and normal residuals. Patterns that look like smileys, or that increase or decrease, show lack of fit, not lack of normality or heterogeneity of variance.

10. Plot the residuals and split the data set into two groups based on the predicted Y by creating a grouping variable called GROUP that has a value equal to “below” (that is, a string of text) if predicted Y is below a value you choose by eyeballing the scatter plot of residuals, and “above” if predicted Y is above or equal to the value chosen. This simply means that you split the data into two groups by drawing a vertical line in the plot of residuals vs. predicted values such that two groups of potentially different variance are labeled. Perform a modified Levene’s test or other test of homogeneity of variance. (Refer to Ch. 4 of lecture topics.) Select Analyze -> Fit Y by X. Put the residuals of bmss in the Y box and GROUP in the X box. Click OK. In the output window, click on the famous red triangle at the top and select Unequal Variances. Interpret the output by writing a sentence indicating what null hypothesis is or is not rejected and why. [25]

All tests of the Ho that the variances are equal in both groups yield probabilities above the critical level; thus, the null hypothesis cannot be rejected and we have no basis to doubt the assumption that the variances are homogeneous.

11. Construct a partial regression plot of bmss vs. K by following these steps: first regress bmss and K (one at a time) on pH. Save the residuals of bmss and the residuals of K. Use the Fit Y by X platform to plot and regress Residual bmss on Residual K. Compare the model SS with the type III SS for K in the model with both predictors (first model of the homework). Compare the graph with the Leverage plot for K given by JMP. Interpret the comparison indicating why the values are different or similar.
[25]

The two plots contain the same information, and other than the fact that the plot on the left is centered at 0, the graphs are identical. The model SS on the left (924265.51) is the same as the type III SS for K in the model with both variables on the right. The values are the same because on the left we determined the variance in bmss explained by K after removing all effects of pH. That is essentially the definition of the type III SS. The estimated beta for K is also the same in both models.

The betas are the “partial regression coefficients,” the best estimates of the impact of one predictor on the response when all other predictors are held constant. The partial regression plots are also called “added variable” plots in the textbook. These plots are also used to check the linearity of the response with respect to each predictor, after eliminating the potentially misleading collinear effects of all other predictors.
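The equality between the partial regression and the full model can be checked numerically on any data set. The sketch below uses synthetic data (not the Spartina data), with the hypothetical names `x1` and `x2` standing in for pH and K and `y` for bmss. It regresses `y` and `x2` on `x1`, regresses the first set of residuals on the second, and compares the resulting slope and model SS with the coefficient and type III SS of `x2` in the two-predictor model.

```python
import random

def center(v):
    """Subtract the mean from every element."""
    m = sum(v) / len(v)
    return [a - m for a in v]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def residuals_on(y, x):
    """Residuals from the simple regression (with intercept) of y on x."""
    yc, xc = center(y), center(x)
    slope = dot(xc, yc) / dot(xc, xc)
    return [a - slope * b for a, b in zip(yc, xc)]

rng = random.Random(42)
n = 45
x1 = [rng.uniform(3, 7) for _ in range(n)]
x2 = [200 * a + rng.gauss(0, 150) for a in x1]   # made collinear with x1
y = [300 * a + 0.8 * b + rng.gauss(0, 200) for a, b in zip(x1, x2)]

# Partial regression: residuals of y and of x2, each after removing x1.
e_y = residuals_on(y, x1)
e_x2 = residuals_on(x2, x1)
partial_slope = dot(e_x2, e_y) / dot(e_x2, e_x2)
partial_model_ss = partial_slope ** 2 * dot(e_x2, e_x2)

# Full model y ~ x1 + x2, solved from the normal equations on centered data.
yc, c1, c2 = center(y), center(x1), center(x2)
s11, s22, s12 = dot(c1, c1), dot(c2, c2), dot(c1, c2)
s1y, s2y = dot(c1, yc), dot(c2, yc)
det = s11 * s22 - s12 ** 2
b1 = (s22 * s1y - s12 * s2y) / det
b2 = (s11 * s2y - s12 * s1y) / det
sse_full = sum((a - b1 * u - b2 * v) ** 2 for a, u, v in zip(yc, c1, c2))

# Type III SS for x2: the drop in SSE when x2 is added to a model with x1.
sse_reduced = dot(e_y, e_y)
type3_ss_x2 = sse_reduced - sse_full

print(partial_slope, b2)
print(partial_model_ss, type3_ss_x2)
```

Both printed pairs agree up to rounding error. The result is general and does not depend on the particular numbers simulated here.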
