Cache Memory (Key words: Cache Hit, Cache Miss, Hit Rate , Latency)


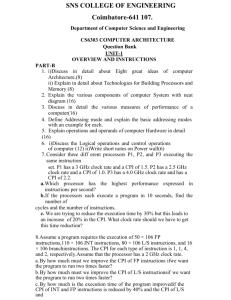

Introduction to Computer Administration

Week-2

Advanced Concepts related to Computer Parts and Types

1. System Clock
2. MIPS / TFLOPS
3. Cache
4. DMA (Direct Memory Access)
5. Pipeline
6. SMP (symmetric multiprocessing)
7. Single-Point-Of-Failure

System Clock (Clock Rate, MHz, GHz)

The clock rate is the fundamental rate, in cycles per second (measured in hertz), of the clock in any synchronous circuit. For example, a crystal oscillator frequency reference is typically a fixed sinusoidal waveform; the clock rate is that frequency reference translated by electronic circuitry into a corresponding square-wave pulse, typically for digital electronics applications. In this context, the word "speed" (which denotes physical movement) should not be confused with frequency or its corresponding clock rate. Thus, the term "clock speed" is a misnomer.

CPU manufacturers typically charge premium prices for CPUs that operate at higher clock rates. For a given CPU, the clock rate is determined at the end of the manufacturing process through actual testing of each CPU. CPUs that are tested as complying with a given set of standards may be labeled with a higher clock rate, e.g., 1.50 GHz, while those that fail the standards of the higher clock rate yet pass the standards of a lesser clock rate may be labeled with the lesser clock rate, e.g., 1.33 GHz, and sold at a relatively lower price.

Limits to clock rate

The clock rate of a CPU is normally determined by the frequency of an oscillator crystal. The first commercial PC, the Altair 8800 (by MITS), used an Intel 8080 CPU with a clock rate of 2 MHz (2 million cycles/second). The original IBM PC (c. 1981) had a clock rate of 4.77 MHz (4,772,727 cycles/second). In 1995, Intel's Pentium chip ran at 100 MHz (100 million cycles/second), and in 2002, an Intel Pentium 4 model was introduced as the first CPU with a clock rate of 3 GHz (three billion cycles/second, corresponding to ~3.3 × 10^-10 seconds per cycle).
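
The relationship between clock rate and time per cycle used in the 3 GHz figure above is just a reciprocal. As a minimal sketch (the function name `cycle_time_seconds` and the dictionary labels are illustrative choices, not part of the course material), the clock rates quoted in the text can be converted to cycle times like this:

```python
def cycle_time_seconds(clock_rate_hz: float) -> float:
    """Return the duration of one clock cycle: the reciprocal of the clock rate."""
    if clock_rate_hz <= 0:
        raise ValueError("clock rate must be positive")
    return 1.0 / clock_rate_hz


# Clock rates quoted in the text, in hertz (labels are informal).
examples = {
    "Altair 8800 (Intel 8080)": 2e6,
    "Original IBM PC": 4_772_727,
    "Pentium (1995)": 100e6,
    "Pentium 4 (2002)": 3e9,
}

for name, hz in examples.items():
    # Print in nanoseconds, which is a convenient unit at these rates.
    print(f"{name}: {cycle_time_seconds(hz) * 1e9:.3f} ns per cycle")
```

For the 3 GHz case this gives about 0.333 ns, which is the ~3.3 × 10^-10 seconds per cycle mentioned above.
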
With any particular CPU, replacing the crystal with another crystal that oscillates at half the frequency ("underclocking") will generally make the CPU run at half the performance. It will also make the CPU produce roughly half as much waste heat.

The clock rate of a computer is only useful for comparing computer chips in the same processor family. An IBM PC with an Intel 80486 CPU running at 50 MHz will be about twice as fast as one with the same CPU, memory and display running at 25 MHz. The same will not be true for a MIPS R4000 running at the same clock rate, because the two are different processors with different functionality. Furthermore, there are many other factors to consider when comparing the performance of entire computers, such as the clock rate of the computer's front-side bus (FSB), the clock rate of the RAM, the width in bits of the CPU's bus, and the amount of Level 1, Level 2 and Level 3 cache. In many cases a computer's performance also depends on factors outside of the CPU, such as the speed of access to storage devices such as hard drives.

Clock rates should not be used when comparing different computers or different processor families. Rather, some software benchmark should be used. Clock rates can be very misleading, since the amount of work different computer chips can do in one cycle varies. For example, RISC CPUs tend to have simpler instructions than CISC CPUs (but higher clock rates), and superscalar processors can execute more than one instruction per cycle on average, yet it is not uncommon for them to do "less" in a clock cycle. In addition, subscalar CPUs or the use of parallelism can also affect the quality of the computer regardless of clock rate.
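
To see why clock rate alone misleads across processor families, it can help to treat throughput as clock rate multiplied by the average number of instructions completed per cycle. The sketch below is a deliberately simplified model: the two chips and their instructions-per-cycle figures are invented for illustration, and effects such as memory stalls, bus speed and cache size are ignored.

```python
def instructions_per_second(clock_rate_hz: float, instructions_per_cycle: float) -> float:
    """Simplified throughput model: clock rate times average instructions per cycle."""
    return clock_rate_hz * instructions_per_cycle


# Hypothetical chips from different families (the numbers are assumptions).
chip_a = instructions_per_second(3.0e9, 1.0)  # higher clock, one instruction per cycle
chip_b = instructions_per_second(2.0e9, 2.0)  # lower clock, two instructions per cycle
print(f"chip A: {chip_a:.2e} instructions/s")
print(f"chip B: {chip_b:.2e} instructions/s")

# Within one family (same instructions per cycle), doubling the clock doubles throughput.
same_family_ratio = instructions_per_second(50e6, 1.0) / instructions_per_second(25e6, 1.0)
print(f"50 MHz vs 25 MHz, same CPU design: {same_family_ratio:.1f}x")
```

In this model the chip with the lower clock rate finishes more instructions per second, while the same-family comparison scales directly with the clock, matching the 50 MHz versus 25 MHz example in the text.
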
Clock rates:

| Unit | Cycles per second |
|---|---|
| 1 Hz | 1 |
| 1 kHz | 10^3 |
| 1 MHz | 10^6 |
| 1 GHz | 10^9 |
| 1 THz | 10^12 |

MIPS (million instructions per second)

| Processor | IPS | IPS/MHz | Year | Source |
|---|---|---|---|---|
| Intel 486DX | 54 MIPS at 66 MHz | 0.818 MIPS/MHz | 1992 | |
| DEC Alpha 21064 EV4 | 300 MIPS at 150 MHz | 2 MIPS/MHz | 1992 | [4] |
| Motorola 68060 | 88 MIPS at 66 MHz | 1.33 MIPS/MHz | 1994 | |
| Intel Pentium Pro | 541 MIPS at 200 MHz | 2.705 MIPS/MHz | 1996 | [5] |
| ARM 7500FE | 35.9 MIPS at 40 MHz | 0.897 MIPS/MHz | 1996 | |
| PowerPC G3 | 525 MIPS at 233 MHz | 2.253 MIPS/MHz | 1997 | |
| Zilog eZ80 | 80 MIPS at 50 MHz | 1.6 MIPS/MHz | 1999 | [6] |
| Intel Pentium III | 1,354 MIPS at 500 MHz | 2.708 MIPS/MHz | 1999 | [7] |
| Freescale MPC8272 | 760 MIPS at 400 MHz | 1.9 MIPS/MHz | 2000 | Integrated Communications Processors |
| AMD Athlon | 3,561 MIPS at 1.2 GHz | 2.967 MIPS/MHz | 2000 | |
| AMD Athlon XP 2400+ | 5,935 MIPS at 2.0 GHz | 2.967 MIPS/MHz | 2002 | |
| Pentium 4 Extreme Edition | 9,726 MIPS at 3.2 GHz | 3.039 MIPS/MHz | 2003 | |
| ARM Cortex A8 | 2,000 MIPS at 1.0 GHz | 2.0 MIPS/MHz | 2005 | [8] |
| AMD Athlon FX-57 | 12,000 MIPS at 2.8 GHz | 4.285 MIPS/MHz | 2005 | |
| AMD Athlon 64 3800+ X2 (Dual Core) | 14,564 MIPS at 2.0 GHz | 7.282 MIPS/MHz | 2005 | |
| Xbox360 IBM "Xenon" Triple Core | 19,200 MIPS at 3.2 GHz | 2.0 MIPS/MHz | 2005 | |
| PS3 Cell BE (PPE only) | 10,240 MIPS at 3.2 GHz | 3.2 MIPS/MHz | 2006 | [9] |
| AMD Athlon FX-60 (Dual Core) | 18,938 MIPS at 2.6 GHz | 7.283 MIPS/MHz | 2006 | [9] |
| Intel Core 2 Extreme X6800 | 27,079 MIPS at 2.93 GHz | 9.242 MIPS/MHz | 2006 | [9] |
| Intel Core 2 Extreme QX6700 | 49,161 MIPS at 2.66 GHz | 18.481 MIPS/MHz | 2006 | [10] |
| P.A. Semi PA6T-1682M | 8,800 MIPS at 2.0 GHz | 4.4 MIPS/MHz | 2007 | [11] |
| Intel Core 2 Extreme QX9770 | 59,455 MIPS at 3.2 GHz | 18.580 MIPS/MHz | 2008 | [12] |
| Intel Core i7 Extreme 965EE | 76,383 MIPS at 3.2 GHz | 23.860 MIPS/MHz | 2008 | [13] |
| AMD Phenom II X4 940 Black Edition | 42,820 MIPS at 3.0 GHz | 14.273 MIPS/MHz | 2009 | [14] |

TFLOPS (10^12 FLoating point Operations Per Second)

In computing, FLOPS (or flops or flop/s) is an acronym meaning FLoating point Operations Per Second.
The FLOPS is a measure of a computer's performance, especially in fields of scientific calculations that make heavy use of floating point calculations, similar to the older, simpler measure of instructions per second.

Computer Performance

| Name | FLOPS |
|---|---|
| yottaFLOPS | 10^24 |
| zettaFLOPS | 10^21 |
| exaFLOPS | 10^18 |
| petaFLOPS | 10^15 |
| teraFLOPS | 10^12 |
| gigaFLOPS | 10^9 |
| megaFLOPS | 10^6 |
| kiloFLOPS | 10^3 |

Cache Memory (Key words: Cache Hit, Cache Miss, Hit Rate, Latency, Cache Types)

A CPU cache is a cache used by the central processing unit of a computer to reduce the average time to access memory. The cache is a smaller, faster memory which stores copies of the data from the most frequently used main memory locations. As long as most memory accesses are to cached memory locations, the average latency of memory accesses will be closer to the cache latency than to the latency of main memory.

When the processor needs to read from or write to a location in main memory, it first checks whether a copy of that data is in the cache. If so, the processor immediately reads from or writes to the cache, which is much faster than reading from or writing to main memory.

The diagram on the right (above) shows two memories. Each location in each memory has a datum (a cache line), which in different designs ranges in size from 8 to 512 bytes. The size of the cache line is usually larger than the size of the usual access requested by a CPU instruction, which ranges from 1 to 16 bytes. Each location in each memory also has an index, which is a unique number used to refer to that location. The index for a location in main memory is called an address. Each location in the cache has a tag that contains the index of the datum in main memory that has been cached. In a CPU's data cache these entries are called cache lines or cache blocks.
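
The tag check and its effect on average latency can be sketched with a toy model. The code below assumes a direct-mapped organisation (each memory block can live in exactly one cache slot), which is only one of several possible designs and is not specified by the text; the class name, the latencies of 1 ns and 100 ns, and the sequential access pattern are all illustrative assumptions. The hit rate here is simply the fraction of accesses found in the cache.

```python
class DirectMappedCache:
    """Toy direct-mapped cache: each memory block maps to exactly one slot."""

    def __init__(self, num_lines: int, line_size: int):
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines  # one tag per cache line; None means empty
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Look up a byte address; return True on a hit, False on a miss."""
        block = address // self.line_size  # which line-sized block of main memory
        index = block % self.num_lines     # the single slot this block may occupy
        tag = block // self.num_lines      # distinguishes blocks sharing that slot
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag             # miss: allocate the line for this block
        self.misses += 1
        return False

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def average_access_time(hit_rate: float, cache_ns: float, memory_ns: float) -> float:
    """Simplified average latency: hits cost cache_ns, misses cost memory_ns."""
    return hit_rate * cache_ns + (1.0 - hit_rate) * memory_ns


cache = DirectMappedCache(num_lines=4, line_size=16)
for address in range(64):  # read 64 consecutive bytes, i.e. four cache lines
    cache.access(address)

print(cache.hits, cache.misses, cache.hit_rate())
print(average_access_time(cache.hit_rate(), cache_ns=1.0, memory_ns=100.0))
```

With 16-byte lines, a sequential read misses once per line and hits on the other 15 bytes, so the average latency in this model stays much closer to the cache latency than to the main memory latency.
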
Most modern desktop and server CPUs have at least three independent caches: an instruction cache to speed up executable instruction fetch, a data cache to speed up data fetch and store, and a translation lookaside buffer used to speed up virtual-to-physical address translation for both executable instructions and data.

When the processor needs to read or write a location in main memory, it first checks whether that memory location is in the cache. This is accomplished by comparing the address of the memory location to all tags in the cache that might contain that address. If the processor finds that the memory location is in the cache, we say that a cache hit has occurred; otherwise, we speak of a cache miss. In the case of a cache hit, the processor immediately reads or writes the data in the cache line. The proportion of accesses that result in a cache hit is known as the hit rate, and is a measure of the effectiveness of the cache.

In the case of a cache miss, most caches allocate a new entry, which comprises the tag just missed and a copy of the data from memory. The reference can then be applied to the new entry just as in the case of a hit. Misses are comparatively slow because they require the data to be transferred from main memory. This transfer incurs a delay since main memory is much slower than cache memory, and also incurs the overhead for recording the new data in the cache before it is delivered to the processor.

DMA (Direct Memory Access)

Direct memory access (DMA) is a feature of modern computers and microprocessors that allows certain hardware subsystems within the computer to access system memory for reading and/or writing independently of the central processing unit. Many hardware systems use DMA, including disk drive controllers, graphics cards, network cards and sound cards.
DMA is also used for intra-chip data transfer in multicore processors, especially in multiprocessor system-on-chips, where each processing element is equipped with a local memory (often called scratchpad memory) and DMA is used for transferring data between the local memory and the main memory. Computers that have DMA channels can transfer data to and from devices with much less CPU overhead than computers without a DMA channel. Similarly, a processing element inside a multi-core processor can transfer data to and from its local memory without occupying its processor time, allowing computation and data transfer to proceed concurrently.

Without DMA, using programmed input/output (PIO) mode for communication with peripheral devices, or load/store instructions in the case of multicore chips, the CPU is typically fully occupied for the entire duration of the read or write operation, and is thus unavailable to perform other work. With DMA, the CPU initiates the transfer, does other operations while the transfer is in progress, and receives an interrupt from the DMA controller once the operation is done. This is especially useful in real-time computing applications where not stalling behind concurrent operations is critical. Another, related application area is various forms of stream processing, where it is essential to have data processing and transfer in parallel in order to achieve sufficient throughput.

A typical usage of DMA is copying a block of memory from system RAM to or from a buffer on the device. Such an operation does not stall the processor, which as a result can be scheduled to perform other tasks.

Pipeline

An instruction pipeline is a technique used in the design of computers and other digital electronic devices to increase their instruction throughput (the number of instructions that can be executed in a unit of time).
The fundamental idea is to split the processing of a computer instruction into a series of independent steps, with storage at the end of each step. This allows the computer's control circuitry to issue instructions at the processing rate of the slowest step, which is much faster than the time needed to perform all steps at once. The term pipeline refers to the fact that each step is carrying data at once (like water), and each step is connected to the next (like the links of a pipe).

Generic pipeline

Figure: Generic 4-stage pipeline; the colored boxes represent instructions independent of each other.

To the right is a generic pipeline with four stages:

1. Fetch
2. Decode
3. Execute
4. Write-back (for lw and sw, memory is accessed after the execute stage)

The top gray box is the list of instructions waiting to be executed; the bottom gray box is the list of instructions that have been completed; and the middle white box is the pipeline. Execution is as follows:

| Clock tick | Execution |
|---|---|
| 0 | Four instructions are waiting to be executed. |
| 1 | The green instruction is fetched from memory. |
| 2 | The green instruction is decoded. The purple instruction is fetched from memory. |
| 3 | The green instruction is executed (the actual operation is performed). The purple instruction is decoded. The blue instruction is fetched. |
| 4 | The green instruction's results are written back to the register file or memory. The purple instruction is executed. The blue instruction is decoded. The red instruction is fetched. |
| 5 | The green instruction is completed. The purple instruction is written back. The blue instruction is executed. The red instruction is decoded. |
| 6 | The purple instruction is completed. The blue instruction is written back. The red instruction is executed. |
| 7 | The blue instruction is completed. The red instruction is written back. |
| 8 | The red instruction is completed. |
| 9 | All instructions are executed. |

SMP (symmetric multiprocessing)

In computing, symmetric multiprocessing or SMP involves a multiprocessor computer architecture where two or more identical processors can connect to a single shared main memory. Most common multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors. SMP systems allow any processor to work on any task no matter where the data for that task are located in memory; with proper operating system support, SMP systems can easily move tasks between processors to balance the workload efficiently.

Single Point of Failure

A Single Point of Failure (SPOF) is a part of a system which, if it fails, will stop the entire system from working [1]. SPOFs are undesirable in any system whose goal is high availability, be it a network, a software application or another industrial system. Assessing potential single points of failure identifies the critical components of a complex system that would provoke a total system failure in case of malfunction. Highly reliable systems must not rely on any such individual component.

The strategies to prevent total system failure are:

Reduced Complexity: Complex systems shall be designed according to principles that decompose complexity to the required level.

Redundancy: Redundant systems include a second instance of every critical component, with an automatic and robust switch or handle to turn control over to the other, well-functioning unit (failover).

Diversity: Diversity design is a special redundancy concept that provides the duplicated functionality through completely different design setups of components, to decrease the probability that redundant components fail at the same time under identical conditions.

Transparency: Whatever the system design delivers, long-term reliability depends on transparent and comprehensive documentation.
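
The benefit of the redundancy strategy can be illustrated with a standard availability calculation. This is a rough sketch under strong assumptions that the text does not state: component failures are independent of each other and failover always works. The function names and the 99% figures are hypothetical.

```python
def series_availability(availabilities: list[float]) -> float:
    """All components are required: every one of them is a single point of failure."""
    result = 1.0
    for a in availabilities:
        result *= a
    return result


def redundant_availability(component_availability: float, copies: int) -> float:
    """The system is up if at least one of `copies` independent instances is up."""
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("at least one copy is required")
    return 1.0 - (1.0 - component_availability) ** copies


# Three required components, each available 99% of the time (assumed figure).
print(f"chain of three SPOFs: {series_availability([0.99, 0.99, 0.99]):.6f}")

# One component versus the same component duplicated with failover.
print(f"single instance:      {redundant_availability(0.99, 1):.6f}")
print(f"two instances:        {redundant_availability(0.99, 2):.6f}")
```

The independence assumption is exactly what the diversity strategy tries to protect: if both instances share a design flaw and fail under the same conditions, the real availability is lower than this calculation suggests.
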