Computer Revolution - ODU Computer Science

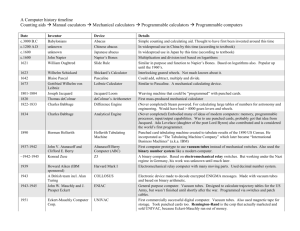

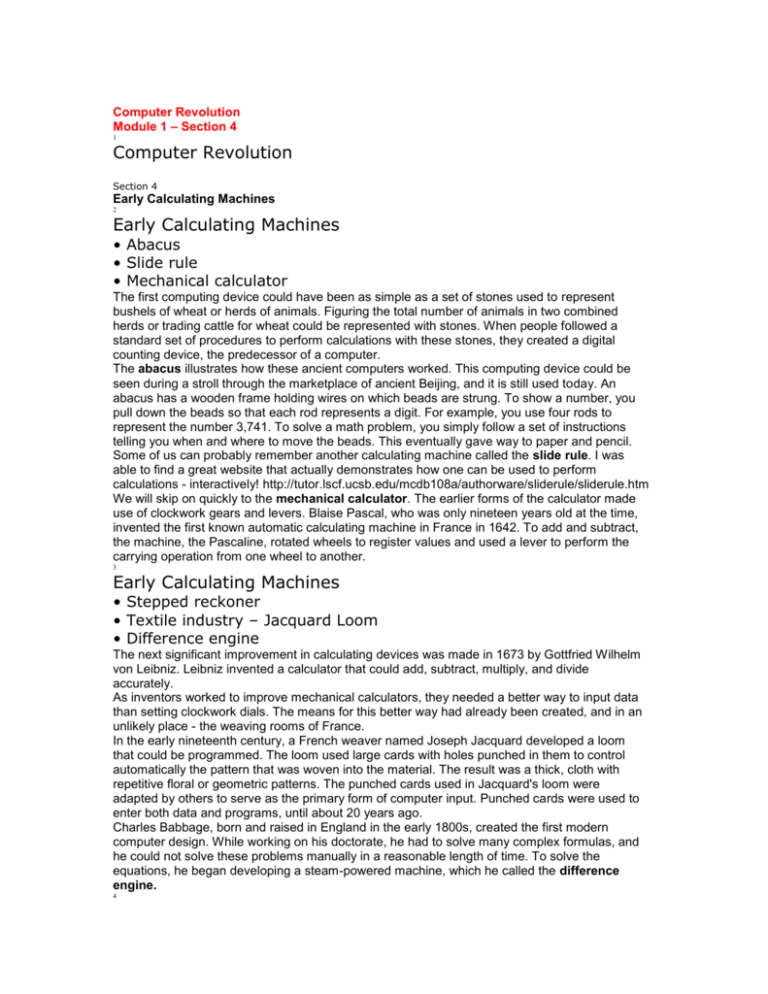

Computer Revolution Module 1 – Section 4

Early Calculating Machines

• Abacus
• Slide rule
• Mechanical calculator

The first computing device could have been as simple as a set of stones used to represent bushels of wheat or herds of animals. Figuring the total number of animals in two combined herds, or trading cattle for wheat, could be represented with stones. When people followed a standard set of procedures to perform calculations with these stones, they created a digital counting device, the predecessor of a computer.

The abacus illustrates how these ancient computers worked. This computing device could be seen during a stroll through the marketplace of ancient Beijing, and it is still used today. An abacus has a wooden frame holding wires (rods) on which beads are strung. To show a number, you move the beads so that each rod represents one digit. For example, you use four rods to represent the number 3,741. To solve a math problem, you simply follow a set of instructions telling you when and where to move the beads. The abacus eventually gave way to paper and pencil.

Some of us can probably remember another calculating machine called the slide rule. I was able to find a great website that demonstrates, interactively, how one can be used to perform calculations: http://tutor.lscf.ucsb.edu/mcdb108a/authorware/sliderule/sliderule.htm

We will skip on quickly to the mechanical calculator. The earlier forms of the calculator made use of clockwork gears and levers. Blaise Pascal, who was only nineteen years old at the time, invented the first known automatic calculating machine in France in 1642. To add and subtract, the machine, called the Pascaline, rotated wheels to register values and used a lever to carry from one wheel to the next.
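The two ideas above (one digit per abacus rod, and a carry passed from one wheel to the next) can be sketched in a few lines of Python. This is only an illustration: the function names `to_rods` and `add_on_wheels` are made up for this example, and the real Pascaline's gear mechanism differs in its details.

```python
def to_rods(number):
    """Split a non-negative integer into decimal digits, one per rod,
    most significant digit first (3741 needs four rods)."""
    return [int(ch) for ch in str(number)]


def add_on_wheels(a, b):
    """Add two non-negative integers digit by digit, carrying from one
    wheel to the next, the way a column-by-column adder would."""
    wheels_a = to_rods(a)[::-1]  # least significant wheel first
    wheels_b = to_rods(b)[::-1]
    width = max(len(wheels_a), len(wheels_b))
    wheels_a += [0] * (width - len(wheels_a))
    wheels_b += [0] * (width - len(wheels_b))

    result = []
    carry = 0
    for digit_a, digit_b in zip(wheels_a, wheels_b):
        total = digit_a + digit_b + carry
        result.append(total % 10)  # value left showing on this wheel
        carry = total // 10        # 1 if this wheel passed 9, else 0
    if carry:
        result.append(carry)       # an extra wheel for the final carry
    return result[::-1]            # most significant digit first


print(to_rods(3741))             # [3, 7, 4, 1]
print(add_on_wheels(3741, 289))  # [4, 0, 3, 0]
```

Each pass through the loop plays the role of one wheel: it keeps the units digit and hands any overflow to its neighbor.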
Early Calculating Machines

• Stepped reckoner
• Textile industry – Jacquard Loom
• Difference engine

The next significant improvement in calculating devices was made in 1673 by Gottfried Wilhelm von Leibniz. Leibniz invented a calculator, the stepped reckoner, that could add, subtract, multiply, and divide accurately.

As inventors worked to improve mechanical calculators, they needed a better way to input data than setting clockwork dials. The means for this better way had already been created, and in an unlikely place: the weaving rooms of France. In the early nineteenth century, a French weaver named Joseph Jacquard developed a loom that could be programmed. The loom used large cards with holes punched in them to automatically control the pattern that was woven into the material. The result was a thick cloth with repetitive floral or geometric patterns. The punched cards used in Jacquard's loom were adapted by others to serve as the primary form of computer input. Punched cards were used to enter both data and programs until about 20 years ago.

Charles Babbage, born in England in 1791, created the first modern computer design. He needed to solve many complex formulas and could not work them out by hand in a reasonable length of time. To solve the equations, he began developing a mechanical calculating machine, which he called the difference engine.

Early Calculating Machines through those of today

• Analytical engine
• The 1890 Census machine
• ENIAC

Later, Babbage turned to planning a far more ambitious device, the analytical engine. The machine was designed to use punched cards, similar to Jacquard's, for data input. This device would have been a full-fledged modern computer with a recognizable input, output, processing, and storage cycle. Unfortunately, the technology of Babbage's time could not produce the parts required to complete the analytical engine.
Ada Lovelace, daughter of the poet Lord Byron and a collaborator of Babbage, wrote the set of instructions the analytical engine would follow to compute Bernoulli numbers. She is often considered the first programmer. The programming language Ada, used by the Department of Defense, was named after her.

The next major figure in the history of computing was Dr. Herman Hollerith, a statistician. The United States Constitution calls for a census of the population every ten years as a means of determining representation in the U.S. House of Representatives. By the late nineteenth century, hand-processing techniques were taking so long that the 1880 census took more than seven years to tabulate. The need to automate the census became apparent. Dr. Hollerith devised a plan to encode the answers to the census questions on punched cards. He also developed a punching device that could process fifty cards in a minute. These innovations enabled the 1890 census to be completed in two and one-half years.

In 1936, the English mathematician Alan Turing wrote a paper describing the capabilities and limitations of a hypothetical general-purpose computing machine that became known as the Turing Machine.

World War II created a need for the American military to calculate trajectories for artillery projectiles quickly. The ENIAC was the result, though it was not completed until two months after the war ended. ENIAC could perform about 5,000 additions in a second. It was difficult to use because every time it was used to solve a new problem, the staff had to rewire it completely to enter the new instructions. This led to the stored program concept, usually associated with John von Neumann, in which the computer's program is stored in internal memory along with the data.

The first electronic computers were complex machines that required large investments to build and use. The computer industry might never have developed without government support and funding.
World War II provided a stimulus for governments to invest enough money in research to create powerful computers. The earliest computers, created during the war, were the exclusive possessions of government and military organizations. Only in the 1950s did businesses become producers and consumers of computers. And only in the 1960s did it become obvious that a huge market existed for these machines.

To describe the computer's technological progress since World War II, computer scientists speak of "computer generations." Each generation is characterized by dramatic improvements over the previous one in the technology used to build computers, the internal organization of computer systems, and programming languages. We're now using fourth-generation computer technology, and some experts say a fifth generation is already upon us. Although not usually associated with computer generations, there has also been a steady improvement in algorithms, including algorithms used in computational science.

Generations of Modern Computers

1st Generation 1945-1959

• Made-to-order operating instructions
• Different binary-coded programs told it how to operate
• Difficult to program; limited versatility and speed
• Vacuum tubes
• Magnetic drum storage

The first generation of computers used vacuum tubes, were large and slow, and produced a lot of heat. The vacuum tubes failed frequently. The instructions were given in machine language, which is composed entirely of 0s and 1s. Very few specialists knew how to program these early computers. All data and instructions came into these computers from punched cards. Magnetic drum storage was primarily used, with magnetic tape emerging in 1957.
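To give a feel for what writing instructions as 0s and 1s involved, here is a small Python sketch. The 8-bit instruction format and the opcodes `LOAD`, `ADD`, and `STORE` are hypothetical, invented for this example; they do not correspond to any real first-generation machine.

```python
# Hypothetical instruction format (an assumption for illustration only):
# 4-bit opcode followed by a 4-bit memory address.
OPCODES = {
    "LOAD": 0b0001,   # copy a memory cell into the accumulator
    "ADD": 0b0010,    # add a memory cell to the accumulator
    "STORE": 0b0011,  # copy the accumulator into a memory cell
}


def encode(mnemonic, address):
    """Return one instruction as a string of eight 0s and 1s."""
    if not 0 <= address <= 15:
        raise ValueError("address must fit in 4 bits")
    word = (OPCODES[mnemonic] << 4) | address
    return format(word, "08b")


# "Add the numbers in cells 5 and 6, and put the sum in cell 7."
program = [("LOAD", 5), ("ADD", 6), ("STORE", 7)]
machine_code = [encode(mnemonic, address) for mnemonic, address in program]
print(machine_code)  # ['00010101', '00100110', '00110111']
```

An early programmer had to produce the bit patterns on the right by hand, which helps explain why so few specialists could program these machines.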
2nd Generation 1959-1963

• Transistors
• Memory – magnetic core
• Assembly language
• Printers and memory
• Programming languages
• Careers

First-generation computers were notoriously unreliable, largely because the vacuum tubes kept burning out. The transistor took computers into the second generation. Transistors are small, require very little power, run cool, and are much more reliable. Memory was composed of small magnetic cores strung on wire within the computer. For secondary storage, magnetic disks were developed, although magnetic tape was still commonly used. Second-generation computers were easier to program because of the development of high-level languages, which are easier to understand and are not machine specific. Second-generation computers could also communicate with each other over telephone lines, transmitting data from one location to another. The remaining problems stemmed from slow input and output devices, which left the machine idle. Parallel programming and multiprogramming were developed in response.

3rd Generation 1964-1971

• Quartz clock
• Integrated circuit
• Operating systems

Integrated circuits took computer technology into the third generation. These circuits incorporate many transistors and electronic circuits on a single wafer or chip of silicon. This allowed for computers that cost the same, yet offered more memory and faster processing. Silicon Valley emerged during this period, and Digital Equipment Corporation (DEC), based in Massachusetts, introduced the minicomputer. Time-sharing became popular, as did a variety of programming languages. The result was a competitive market for language translators and the beginning of the software industry. Another significant development of this generation was the launching of the first telecommunications satellite.
4th Generation 1971-1984

• LSI – Large Scale Integration
• VLSI – Very Large Scale Integration
• Chip
• General consumer usage
• Networks

The microprocessor emerged, a tiny computer on a chip. It has changed the world! The techniques used to build microprocessors, called very large scale integration (VLSI), enable chip companies to mass produce computer chips that contain hundreds of thousands, or even millions, of transistors. Microcomputers emerged, aimed at first at computer hobbyists. Personal computers became popular first with the Apple computer, followed by the IBM compatibles. High-speed computer networking in the form of local area networks (LANs) and wide area networks (WANs) provides connections within a building or across the globe.

5th Generation 1984-1990

• Parallel processing
• Multi-processing
• Chip advancement

The development of the next generation of computer systems is characterized mainly by the acceptance of parallel processing. Until this time, parallelism was limited to pipelining and vector processing, or at most to a few processors sharing jobs. The fifth generation saw the introduction of machines with hundreds of processors that could all be working on different parts of a single program. The scale of integration in semiconductors continued at an incredible pace: by 1990 it was possible to build chips with a million components, and semiconductor memories became standard on all computers.

6th Generation 1990-now

• This is the future
• What new advancements lie ahead?
• What changes will be big enough to create this new generation?

This generation is beginning with many gains in parallel computing, both in the hardware area and in improved understanding of how to develop algorithms to exploit diverse, massively parallel architectures.
Parallel systems now compete with vector processors in terms of total computing power, and most experts expect parallel systems to dominate the future.

One of the most dramatic changes in the sixth generation will be the explosive growth of wide area networking. Network bandwidth has expanded tremendously in the last few years and will continue to improve for the next several years. T1 transmission rates are now standard for regional networks, and the national "backbone" that interconnects regional networks uses T3. Networking technology is spreading beyond its original strong base in universities and government laboratories, and is rapidly finding application in K-12 education, community networks, and private industry.

The federal commitment to high performance computing has been further strengthened with the passage of two particularly significant pieces of legislation: the High Performance Computing Act of 1991, which established the High Performance Computing and Communication Program (HPCCP), and Sen. Gore's Information Infrastructure and Technology Act of 1992, which addresses a broad spectrum of issues ranging from high performance computing to expanded network access and the necessity to make leading edge technologies available to educators from kindergarten through graduate school.

Pioneers of Computing

• Charles Babbage
• Konrad Zuse
• John von Neumann
• Alan Turing

Charles Babbage - One of the first renowned pioneers, referred to as the "Father of Computing" for his contributions to the basic design of the computer through his Analytical Engine. His earlier Difference Engine was a special-purpose device intended for the production of tables.

Konrad Zuse - A German engineer who built the first program-controlled computing machine in the world. He documented his inventions, patent outlines, talks, and lectures on paper between 1936 and 1995.
John von Neumann - One of the twentieth century's preeminent scientists. Along with being a great mathematician and physicist, he was an early pioneer in fields such as game theory, nuclear deterrence, and modern computing. Von Neumann's work on mathematical modeling at Los Alamos, and the computational tools he used there, gave him the experience he needed to push the development of the computer. Also, because of his far-reaching and influential connections (through the IAS, Los Alamos, and a number of universities) and his reputation as a mathematical genius, he was in a position to secure funding and resources to help develop the modern computer. In 1947 this was a very difficult task because computing was not yet a respected science. Most people saw computing only as making a bigger and faster calculator. Von Neumann, on the other hand, saw bigger possibilities.

Alan Turing - Turing was a student and fellow of King's College, Cambridge, and was a graduate student at Princeton University from 1936 to 1938. While at Princeton, Turing published "On Computable Numbers", a paper in which he conceived an abstract machine, now called a Turing Machine. The Turing Machine is still studied by computer scientists. He was a pioneer in developing computer logic as we know it today and was one of the first to approach the topic of artificial intelligence.

Important Machines and Developments

• IBM 650 introduced in 1953
• IBM 7090, the first of the "second-generation" computers built with transistors, introduced in 1958
• Texas Instruments and Fairchild Semiconductor both announce the integrated circuit in 1959
• IBM 360 introduced in April of 1964; it used integrated circuits
• DEC PDP-8, the first minicomputer, sold for $18,000 in 1965
• 1968: Intel is established by Robert Noyce, Gordon Moore, and Andy Grove
• 1970: the floppy disk is introduced
• 1972 -- Intel's 8008 (the 8080 followed in 1974)
• 1972 -- DEC PDP 11/45
• 1976 -- Jobs and Wozniak build the Apple I
• 1978 -- DEC VAX 11/780
• 1979 -- Motorola 68000
• 1981 -- IBM PC
• 1982 -- Compaq IBM-compatible PC
• 1984 -- Sony and Philips CD-ROM
• 1988 -- NeXT computer by Steve Jobs
• 1992 -- DEC 64-bit RISC Alpha
• 1993 -- Intel's Pentium

Each step in this evolution of machines has brought improved reliability, reduced size, increased speed, improved efficiency, and lower cost.

Taxonomy of Computers

• Mainframes
• Supercomputers
• Microprocessors

A mainframe is a very large and expensive computer capable of supporting hundreds, or even thousands, of users simultaneously. In the hierarchy that starts with a simple microprocessor (in watches, for example) at the bottom and moves to supercomputers at the top, mainframes are just below supercomputers. In some ways, mainframes are more powerful than supercomputers because they support more simultaneous programs. But supercomputers can execute a single program faster than a mainframe. The distinction between small mainframes and minicomputers is vague, depending really on how the manufacturer wants to market its machines.
Microprocessors take the critical components of a complete computer and house them on a single tiny chip.

Wirth's Law

• Software gets slower faster than hardware gets faster
• What does this mean?

References:

Pictures and history of computer: http://www.computerhistory.org/timeline/timeline.php?timeline_category=cmptr
Computer Laws: http://www.sysprog.net/quotlaws.html
Obstacles to a Technological Revolution: http://www.yale.edu/ynhti/pubs/A17/merrow.html
Computers used in society: http://www.census.gov/dmd/www/dropin2.htm
Technological revolution: http://mitsloan.mit.edu/50th/techrevpaper.pdf
Internet: http://www.nap.edu/html/techgap/revolution.html
Abacus Applet: http://www.tux.org/~bagleyd/java/AbacusAppJS.html
5th generation computer: http://www.geocities.com/ccchinatrip/skool/page_6.htm
Computer in use: http://www.infoplease.com/ipa/A0873822.html
Wirth's law: http://www.spectrum.ieee.org/WEBONLY/publicfeature/dec03/12035com.html