SREE 2008 Conference Structured Abstract

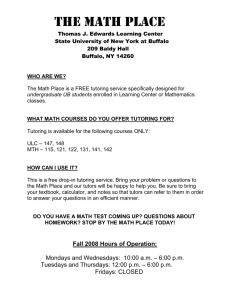

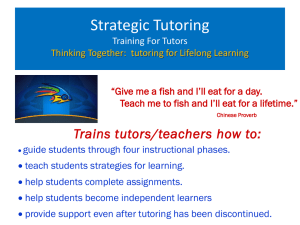

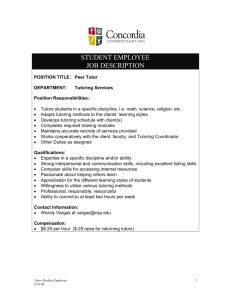

Abstract Title Page
Not included in page count.

Title: Effective Programs for Struggling Readers: A Best-Evidence Synthesis
(Paper submitted as part of the symposium Evidence for Interventions for Struggling Readers, chaired by Robert E. Slavin)

Author(s):
Robert E. Slavin, Johns Hopkins University and University of York
Cynthia Lake, Johns Hopkins University
Nancy A. Madden, Johns Hopkins University and University of York
Susan Davis, Success for All Foundation

2009 SREE Conference Abstract Template

Abstract Body
Limit 5 pages single spaced.

Background/context: Description of prior research and/or its intellectual context and/or its policy context.

Success in beginning reading is a key prerequisite for success in reading in the later years. Longitudinal studies (e.g., Juel, 1988; Snow, Burns, & Griffin, 1998) have shown that children with poor reading skills at the end of first grade are unlikely to catch up later on, and that reading performance in third grade is a key predictor of dropout (Lloyd, 1978). In recognition of this, there has been a substantial focus over the past 20 years on the development and evaluation of preventive and remedial programs for struggling readers in the elementary grades. Perhaps the most influential of these efforts is Reading Recovery (Pinnell, DeFord, & Lyons, 1988), the New Zealand program that provides one-to-one tutoring to struggling first graders, training certified teachers extensively in a well-structured model. However, there are also many other one-to-one tutoring models, including those that use paraprofessionals and volunteers (see Wasik & Slavin, 1993; Wasik, 1997), and there are alternative methods that use small groups, technology, and extended time after school to help young struggling readers get on the road to success.
The emphasis in recent years on response to intervention (RTI), or the 3-tier model, in which children with learning difficulties are given immediate intervention, also illustrates the widespread conviction that effective early intervention is essential for struggling readers (see Vaughn et al., 2007; Swanson, 1999). Government programs are making substantial investments in programs for at-risk and struggling readers. In the US, local districts have widely implemented tutoring and small-group remedial and preventive programs, and Reading First, part of No Child Left Behind, spent $1 billion a year on programs for grades K-3. In the UK, Every Child a Reader is disseminating Reading Recovery broadly.

In this context, it is important to understand the research on the relative impacts of various programs for struggling readers. Reviews of research on programs for struggling readers have focused on some of the strategies available to educators and policy makers. For example, Elbaum, Vaughn, Hughes, & Moody (2000) reviewed tutoring programs, finding positive effects of one-to-one tutoring but no difference between one-to-one and small-group tutoring. Wasik & Slavin (1993) reviewed research on one-to-one tutoring and reported better results for approaches involving certified teachers than for those using paraprofessionals, although Wasik (1997) also found many positive effects of volunteer tutoring. The What Works Clearinghouse (2008) review of beginning reading programs included studies of Reading Recovery and of a few other tutoring models, which generally received positive ratings. Yet there remains a need to review all types of interventions using consistent standards and procedures, and to compare both the effectiveness and the cost-effectiveness of alternative approaches.

Purpose/objective/research question/focus of study: Description of what the research focused on and why.
The purpose of the proposed paper is to synthesize findings on all types of programs and practices designed to improve the reading achievement of children in grades 1-3 who are having difficulties in learning to read. The synthesis is a companion to reviews of core reading programs for beginning reading (Slavin et al., 2009) and for upper-elementary reading (Slavin et al., 2008a). The present review focuses on remedial, preventive, and special education programs provided to low-performing children. Whole-class programs for children who may be at risk but have not individually been found to be in need of additional assistance are reviewed in the core reading syntheses. The focus of the present paper is on “second-chance” programs for poor readers. In an RTI context, these would be Tier 2 or Tier 3 interventions, not Tier 1 initial instruction.

The review considers the following research questions:
1. What are the effects on reading achievement of all types of programs and practices, including alternative approaches to tutoring, small-group interventions, and computer-assisted instruction?
2. What are the relative effects on reading achievement of tutoring by certified teachers vs. paraprofessionals vs. volunteers?
3. What are the relative effects of one-to-one and small-group interventions?
4. What are the relative effects of programs that provide extensive training and monitoring in comparison to those that provide less training and monitoring?
5. What are the relative effects of programs emphasizing phonics and phonemic awareness in comparison to those that provide less focus on phonics and phonemic awareness?
6. How do reading achievement outcomes of various reading interventions differ according to student characteristics, such as degree of reading difficulties, socioeconomic status, race, and age?
7. How do reading achievement outcomes of reading interventions differ according to methodological characteristics, such as use of random assignment, sample size, duration, and use of standardized versus experimenter-made measures?
8. How do reading interventions vary in terms of estimated cost-effectiveness?

Setting: Specific description of where the research took place.
n/a

Population/Participants/Subjects: Description of participants in the study: who (or what) how many, key features (or characteristics).
n/a

Intervention/Program/Practice: Specific description of the intervention, including what it was, how it was administered, and its duration.
n/a

Research Design: Description of research design (e.g., qualitative case study, quasi-experimental design, secondary analysis, analytic essay, randomized field trial).
Systematic review of research

Data Collection and Analysis: Description of plan for collecting and analyzing data, including description of data.

This paper will report a systematic review of research on the reading achievement outcomes of programs for students who are experiencing difficulties in learning to read. The key elements of the review methodology are described in the following sections.

Review Methods

The review methods are an adaptation of a technique called best-evidence synthesis (Slavin, 1986). Best-evidence syntheses seek to apply consistent, well-justified standards to identify unbiased, meaningful information from experimental studies, discussing each study in some detail, and pooling effect sizes across studies in substantively justified categories. The method is very similar to meta-analysis (Cooper, 1998; Lipsey & Wilson, 2001), adding an emphasis on narrative description of each study’s contribution. It is similar to the methods used by the What Works Clearinghouse (2008), with a few important exceptions noted in the following sections.
See Slavin (2008) for an extended discussion and rationale for the procedures used in all of these reviews.

Literature Search Procedures

A broad literature search was carried out in an attempt to locate every study that could possibly meet the inclusion requirements. Electronic searches were made of educational databases (JSTOR, ERIC, EBSCO, PsycINFO, Dissertation Abstracts) using different combinations of key words (for example, “elementary students,” “reading disabilities,” “reading intervention,” “achievement”) and the years 1970-2008. We also conducted searches by program name. Web-based repositories and education publishers’ websites were also examined. We attempted to contact producers and developers of reading programs to check whether they knew of studies that we had missed. Citations were obtained from other reviews of reading programs, including the What Works Clearinghouse (2008) beginning reading topic report, Adams (1990), National Reading Panel (2000), Snow, Burns, & Griffin (1998), Rose (2006), and August & Shanahan (2006), and from reviews of potentially related topics such as instructional technology (E. Chambers, 2003; Kulik, 2003; Murphy et al., 2002). We also searched the tables of contents of key journals from 2000 to 2008: American Educational Research Journal, Reading Research Quarterly, Journal of Educational Research, Journal of Educational Psychology, Reading and Writing Quarterly, Journal of Learning Disabilities, Journal of Special Education, Exceptional Children, British Journal of Educational Psychology, and Learning and Instruction. Citations of studies appearing in the studies found in the first wave were also followed up.

Effect Sizes

In general, effect sizes were computed as the difference between experimental and control individual student posttests after adjustment for pretests and other covariates, divided by the unadjusted posttest control group standard deviation.
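
As a minimal sketch of the computation just described, the Python function below divides the covariate-adjusted experimental-control difference by the unadjusted control-group posttest standard deviation. It also accepts a pooled SD as a fallback, which is the substitute the review reports using when the control-group SD is unavailable. The function name, parameter names, and example numbers are illustrative assumptions, not taken from the review.

```python
from typing import Optional


def effect_size(
    adj_mean_experimental: float,
    adj_mean_control: float,
    sd_control: Optional[float] = None,
    sd_pooled: Optional[float] = None,
) -> float:
    """Adjusted mean difference divided by an unadjusted posttest SD.

    Prefers the control-group SD; falls back to a pooled SD only when
    the control-group SD is not supplied. All names are hypothetical.
    """
    sd = sd_control if sd_control is not None else sd_pooled
    if sd is None:
        raise ValueError("either sd_control or sd_pooled is required")
    if sd <= 0:
        raise ValueError("standard deviation must be positive")
    return (adj_mean_experimental - adj_mean_control) / sd


# Hypothetical study: adjusted posttest means of 52 and 48,
# unadjusted control-group posttest SD of 10.
example_es = effect_size(52.0, 48.0, sd_control=10.0)
```

With these made-up numbers the adjusted difference is 4 points on a 10-point SD. Passing both SDs uses the control-group SD, matching the stated order of preference.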
If the control group SD was not available, a pooled SD was used. Procedures described by Lipsey & Wilson (2001) and Sedlmeier & Gigerenzer (1989) were used to estimate effect sizes when unadjusted standard deviations were not available, as when the only standard deviation presented was already adjusted for covariates or when only gain score SDs were available. If pretest and posttest means and SDs were presented but adjusted means were not, effect sizes for pretests were subtracted from effect sizes for posttests.

Effect sizes were pooled across studies for each program and for various categories of programs. Pooled means were weighted by final sample size. Weighting gives greater importance to large studies, because the previous reviews and many others have found that small studies tend to overstate effect sizes (see Rothstein et al., 2005; Slavin, 2008; Slavin & Smith, 2008).

Criteria for Inclusion

Criteria for inclusion of studies in this review were as follows.
1. The studies evaluated programs for children identified as having difficulty in learning to read in grades 1-5. Studies of core classroom programs for all beginning readers are reviewed in a separate article (Slavin, Lake, Chambers, Cheung, & Davis, 2009).
2. The studies compared children taught in classes using a given reading intervention to those in control classes using no intervention beyond ordinary instruction or an alternative intervention.
3. Studies could have taken place in any country, but the report had to be available in English.
4. Random assignment or matching with appropriate adjustments for any pretest differences (e.g., analyses of covariance) had to be used. Studies without control groups, such as pre-post comparisons and comparisons to “expected” scores, were excluded.
5. Pretest data had to be provided, unless studies used random assignment of at least 30 units (individuals, classes, or schools) and there were no indications of initial inequality. Studies with pretest differences of more than 50% of a standard deviation were excluded because, even with analyses of covariance, large pretest differences cannot be adequately controlled for, as underlying distributions may be fundamentally different (Shadish, Cook, & Campbell, 2002).
6. The dependent measures included quantitative measures of reading performance, such as standardized reading measures. Experimenter-made measures were accepted if they were comprehensive measures of reading, which would be fair to the control groups, but measures of reading objectives inherent to the program (but unlikely to be emphasized in control groups) were excluded. Studies using measures inherent to treatments, usually made by the experimenter or program developer, have been found to be associated with much larger effect sizes than are measures that are independent of treatments (Slavin & Madden, 2008), and for this reason, effect sizes from treatment-inherent measures were excluded. The exclusion of measures inherent to the experimental treatment is a key difference between the procedures used in the present review and those used by the What Works Clearinghouse (2008). Measures of pre-reading skills such as auditory phonemic awareness, as well as related skills such as oral vocabulary, language arts, and spelling, were not included in this review.
7. A minimum study duration of 12 weeks was required. This requirement is intended to focus the review on practical programs intended for use for the whole year, rather than brief investigations. Study duration is measured from the beginning of the treatments to posttest, so, for example, an intensive 8-week intervention in the fall of first grade would be considered a year-long study if the posttest were given in May. The 12-week criterion has been consistently used in all of the systematic reviews done previously by the current authors (i.e., Cheung & Slavin, 2005; Slavin & Lake, 2008; Slavin et al., 2008, in press). This is another difference between the current review and the What Works Clearinghouse (2008) beginning reading topic report, which included very brief studies.
8. Studies had to have at least 15 students and two teachers in each treatment group.

Findings/Results: Description of main findings with specific details.

The review is currently in preparation, but several tentative conclusions are supported by the studies reviewed so far.
1. Both tutoring and small-group interventions have positive effects on reading outcomes for struggling readers. In particular, Reading Recovery has been extensively evaluated and found to be effective on measures not inherent to the program. Computer-assisted instruction has been associated with few positive effects.
2. One-to-one tutoring is associated with more positive effect sizes than small-group tutoring. Tutoring using certified teachers appears to be more effective than tutoring using paraprofessionals or volunteers. Programs that provide more training and follow-up for tutors generally get better outcomes than programs that provide relatively little training.
3. Outcomes of various reading interventions appear to be similar across different student subgroups. In particular, outcomes for disadvantaged and non-disadvantaged students (compared to respective control groups) are similar.
4. Reading achievement outcomes are similar in randomized and matched studies, but smaller studies are associated with larger effect sizes.
5. More expensive programs (one-to-one tutoring with certified teachers) tend to get better effects on achievement measures, but one-to-one tutoring by paraprofessionals and volunteers appears to be more cost-effective.
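
Finding 4 connects to the pooling rule stated under Effect Sizes: means are weighted by final sample size so that large studies count for more. The sketch below shows that weighting in Python. The function name and the two example studies are hypothetical; they only illustrate how a small study with a large effect is pulled toward a larger study's estimate.

```python
from typing import Iterable, Tuple


def weighted_mean_effect_size(studies: Iterable[Tuple[float, int]]) -> float:
    """Sample-size-weighted mean of (effect_size, final_n) pairs."""
    weighted_sum = 0.0
    total_n = 0
    for es, n in studies:
        if n <= 0:
            raise ValueError("each study needs a positive final sample size")
        weighted_sum += es * n
        total_n += n
    if total_n == 0:
        raise ValueError("at least one study is required")
    return weighted_sum / total_n


# Hypothetical inputs: a small study with a large effect size and a
# large study with a modest one.
example_studies = [(0.60, 40), (0.20, 400)]
pooled = weighted_mean_effect_size(example_studies)

# Simple unweighted average of the same two effect sizes, for comparison.
unweighted = sum(es for es, _ in example_studies) / len(example_studies)
```

In this made-up case the weighted mean sits much closer to the large study's estimate than the unweighted average does, which is the behavior the weighting is meant to produce.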

Conclusions:

There is no question that one-to-one tutoring is effective in improving the reading achievement of struggling readers. However, there is too little evidence about the long-term effects of intensive early tutoring. There is also evidence that less expensive options, especially one-to-one tutoring by paraprofessionals, may be nearly as effective; given their lower costs and greater availability, these may be cost-effective alternatives.

If the preliminary findings hold up in the full review, the conclusions will pose a dilemma to practitioners and policy makers. The most effective and well-evaluated interventions for struggling readers appear to be the most expensive, and in areas of teacher shortages (such as disadvantaged inner-city and rural schools) it may be difficult to justify using experienced certified teachers to work with small numbers of children if effects nearly as large can be obtained with well-trained paraprofessionals. However, it may be that the more expensive one-to-one strategies will turn out to be markedly more effective than other solutions in the long term, or that they may reduce retentions or special education placements enough to make them the more cost-effective option. These conclusions will be refined and additional questions addressed as the review is completed.

Appendixes
Not included in page count.

Appendix A. References
References are to be in APA format. (See APA style examples at the end of the document.)

Adams, M. J. (1990). Beginning to read: Thinking and learning about print. Cambridge, MA: MIT Press.
August, D., & Shanahan, T. (2006). Synthesis: Instruction and professional development. In D. August & T. Shanahan (Eds.), Developing literacy in second-language learners (pp. 351-364). Mahwah, NJ: Erlbaum.
Chambers, E. A. (2003). Efficacy of educational technology in elementary and secondary classrooms: A meta-analysis of the research literature from 1992-2002. Unpublished doctoral dissertation, Southern Illinois University at Carbondale.
Cheung, A., & Slavin, R. E. (2005). Effective reading programs for English language learners and other language minority students. Bilingual Research Journal, 29(2), 241-267.
Cooper, H. (1998). Synthesizing research (3rd ed.). Thousand Oaks, CA: Sage.
Juel, C. (1988). Learning to read and write: A longitudinal study of 54 children from first through fourth grades. Journal of Educational Psychology, 80(4), 437-447.
Kulik, J. A. (2003). Effects of using instructional technology in elementary and secondary schools: What controlled evaluation studies say. SRI Project Number P10446.001. Arlington, VA: SRI International.
Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Thousand Oaks, CA: Sage.
Lloyd, D. N. (1978). Prediction of school failure from third-grade data. Educational and Psychological Measurement, 38, 1193-1200.
Murphy, R., Penuel, W., Means, B., Korbak, C., Whaley, A., & Allen, J. (2002). E-DESK: A review of recent evidence on discrete educational software. Menlo Park, CA: SRI International.
National Reading Panel (2000). Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction. Rockville, MD: National Institute of Child Health and Human Development.
Pinnell, G. S., DeFord, D. E., & Lyons, C. A. (1988). Reading Recovery: Early intervention for at-risk first graders. Arlington, VA: Educational Research Service.
Rose, J. (2006). Independent review of the teaching of early reading. London: Department for Education and Skills.
Rothstein, H. R., Sutton, A. J., & Borenstein, M. (Eds.) (2005). Publication bias in meta-analysis: Prevention, assessment, and adjustments. Chichester, UK: John Wiley.
Sedlmeier, P., & Gigerenzer, G. (1989). Do studies of statistical power have an effect on the power of studies? Psychological Bulletin, 105, 309-316.
Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Boston: Houghton-Mifflin.
Slavin, R. E. (2008). What works? Issues in synthesizing education program evaluations. Educational Researcher, 37(1), 5-14.
Slavin, R. E. (1986). Best-evidence synthesis: An alternative to meta-analytic and traditional reviews. Educational Researcher, 15(9), 5-11.
Slavin, R. E., & Lake, C. (2008). Effective programs in elementary math: A best evidence synthesis. Review of Educational Research, 78(3), 427-515.
Slavin, R. E., Lake, C., Madden, N. A., Chambers, B., Cheung, A., & Davis, S. (2008a). Beyond the basics: Effective reading programs for the upper elementary grades. Manuscript submitted for publication.
Slavin, R. E., Cheung, A., Groff, C., & Lake, C. (2008b). Effective programs in middle and high school reading: A best-evidence synthesis. Reading Research Quarterly, 43(3), 290-322.
Slavin, R. E., Lake, C., Chambers, B., Cheung, A., & Davis, S. (2009). Effective beginning reading programs: A best-evidence synthesis. Baltimore, MD: Johns Hopkins University, Center for Research and Reform in Education.
Slavin, R. E., Lake, C., & Groff, C. (in press). Effective programs in middle and high school mathematics: A best-evidence synthesis. Review of Educational Research.
Slavin, R. E., & Madden, N. A. (2008, March). Understanding bias due to measures inherent to treatments in systematic reviews in education. Paper presented at the annual meetings of the Society for Research on Educational Effectiveness, Crystal City, Virginia.
Slavin, R. E., & Smith, D. (2008, March). Effects of sample size on effect size in systematic reviews in education. Paper presented at the annual meetings of the Society for Research on Educational Effectiveness, Crystal City, Virginia.
Snow, C. E., Burns, S. M., & Griffin, P. (Eds.) (1998). Preventing reading difficulties in young children. Washington, DC: National Academy Press.
Vaughn, S., Bos, C. L., & Schumm, J. S. (2007). Teaching students who are exceptional, diverse, and at risk (4th ed.). Boston: Pearson.
Wasik, B. A. (1997). Volunteer tutoring programs: Do we know what works? Phi Delta Kappan, 79(4), 283-287.
Wasik, B. A., & Slavin, R. E. (1993). Preventing early reading failure with one-to-one tutoring. In R. E. Slavin, N. L. Karweit, & B. A. Wasik (Eds.), Preventing early school failure. Boston: Allyn & Bacon.
What Works Clearinghouse (2008). Beginning reading. What Works Clearinghouse Topic Report. Retrieved August 10, 2008, from http://ies.ed.gov/NCEE.wwc/.