
AN EXPERIMENTAL STUDY OF POTENTIAL PARALLELISM

IN AN IMPLEMENTATION OF MIL-STD 188-220

John Graham

Lori L. Pollock

Department of Computer and Information Sciences

University of Delaware

{graham,pollock}@cis.udel.edu

ABSTRACT

Researchers have shown that communication protocols can be parallelized in a number of different ways, often resulting in significantly increased performance of the overall network communication. This paper reports on our continuing efforts to explore the potential for parallelizing the MIL-STD 188-220 communications protocol, the proposed standard for interoperability over Combat Net Radio, and a key to the Army's efforts to digitize the battlefield and standardize protocols for the physical, data link and network layers. We have examined a specific implementation of MIL-STD 188-220A's data link layer, and gathered various forms of information about the operation of the protocol, focusing on the potential for performance enhancement through parallelism. This paper describes our experiments, results, and conclusions.

Keywords: communication protocol, parallelization.

INTRODUCTION

A major challenge to increasing the performance of data delivery across the fast physical networks available today is efficient protocols and protocol implementations. The two commonly used approaches for improving performance of protocols are to implement existing protocols such that the processing time is minimized, or to design new protocols with mechanisms specifically architected for high-bandwidth, low-delay, low-error-rate communications [4]. Parallelism can be exploited in either of these approaches. Multiple, separate experiences in applying parallelism to communication protocols have indicated good performance improvements of network transport systems [14, 11, 7, 9, 5, 6, 8, 1, 2, 3, 12]. As shared memory multiprocessor desktop workstations and server platforms become more commonplace, the use of parallelization at the network nodes is a real option. Parallelization also could be used in conjunction with other approaches to dealing with the communication bottlenecks.

This paper continues our efforts to explore the use of parallelization for communication protocols of interest to the Army. In May 1998, we obtained an implementation of the MIL-STD 188-220A written by a vendor that specializes in communication software. A number of profiling experiments were performed in addition to visual inspection of frequently executed segments of the code. We determined the most appropriate kind of parallelism for this protocol code, and investigated its potential for performance improvements through actual parallelization and performance measurement.

We first describe the major parallelization methods used for parallelizing communication protocols. The MIL-STD 188-220A protocol and our particular targeted implementation are described. We describe our experimental setup and results for potential segments for parallelization. Then, we present our choice of parallelization and our experiences in applying this method to this implementation. Finally, conclusions and future directions are presented.

Prepared through collaborative participation in the Advanced Telecommunications/Information Distribution Research Program (ATIRP) Consortium sponsored by the U.S. Army Research Laboratory under Cooperative Agreement DAAL01-96-20002. The U.S. government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation thereon.

PARALLELIZATION APPROACHES

Whether the solution is implemented in hardware, software, or a combination, the same general techniques for


uses TCP/IP in the transport and network layers and does not address layers 5, 6, or 7. The focus of our study is restricted to the physical and data link layers.

For the physical layer, the standard specifies the use of a frequency hopping packet scheme, output transmission rates ranging in general from 75 to 32000 bits per second (bps), and half-duplex transmission mode. The physical layer may be realized over radio, wireline and/or satellite links.

A significant amount of processing is performed by the data link layer. Due in part to the use of TCP/IP in the upper layers, the functionality of the data link layer was augmented to include operations normally handled in the upper layers and deemed necessary for the protocol. The functionality of the data link layer includes: flow control, Forward Error Correction (FEC) coding (optional), group and global multicast of messages, priority queuing of messages (urgent, priority, routine), scrambling and unscrambling of data (optional), Time Dispersive Coding (TDC) (optional), and zero-bit insertion (bit-stuffing).

protocol parallelism apply. Classifications for protocol parallelism are defined as follows [2, 5, 11].

Connection parallelism: Connection parallelism implies establishment of multiple links, or connections, between end systems comprised of single processors or threads. Speedup is achieved with multiple connections allowing concurrent communication.

Packet parallelism: Packet parallelism assigns each arriving packet to one processor or thread, from a pool of processors or threads, allowing several packets to be processed in parallel. This approach distributes packets across processors, achieving speedup both with multiple and single connections.

Pipelined or layered parallelism: Pipelined or layered parallelism exploits the layering principle common in protocol design, assigning each layer to a separate processor. Performance gains are achieved mainly through pipelining effects.

Functional parallelism: Functional parallelism decomposes functions within a single protocol and assigns them to processing elements. Speedup is achieved when packets require different independent functional processing, which can be performed simultaneously.

Message parallelism: Message parallelism associates a separate process with each incoming or outgoing message. A processor or thread receives a message and escorts it through the parallelized portion of the protocol stack.
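As an illustration of the packet parallelism category in the list above, the following is a minimal sketch in Python rather than in the C of the studied implementation. The function names and the checksum used as per-packet work are hypothetical and serve only to show the structure: each arriving packet is handed to one thread from a fixed pool.

```python
from concurrent.futures import ThreadPoolExecutor


def process_packet(packet: bytes) -> int:
    """Hypothetical per-packet work: a simple 16-bit additive checksum."""
    return sum(packet) & 0xFFFF


def process_in_parallel(packets, workers=4):
    """Hand each packet to one thread from a fixed pool.

    pool.map returns results in the order the packets were submitted,
    so arrival order is preserved even if packets finish out of order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(process_packet, packets))
```

In CPython the global interpreter lock limits the real speedup for CPU-bound work of this kind, so the sketch shows only how packets are distributed across a pool, not the performance that a native threaded implementation might reach.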

EXPERIMENTAL STUDY

Experimental setup. The code as provided from the

vendor was designed to compile and run using Borland

C on PC-based hardware. Since the goal of our work

has not been to analyze the hardware, but rather software implementations of the protocol, we ported the

code to a UNIX environment, where analysis and debugging tools are more readily available. The UNIX

platform that we chose is a Sun Microsystems ULTRA 10 running SunOS 5.6 and the C compiler from

Sun version 4.2. We also ran the tests on two other

Sun workstations, one with 4 processors and one with

14 processors. The processing times (and thus the

throughput) for these platforms were faster; however,

as the upcoming description indicates, the threshold of

when to thread and when not to thread did not change.

Thus, the results reported here are on a single processor workstation.

In the actual runs executed by the vendor, one host (a 1553 bus interface) generates messages to be handled by the protocol and sends those messages to another PC-based host where the protocol stack is implemented. Since our experiments did not test this physical interface, no such hardware setup was used. Note that the experiments did cover the software used by the physical layer of the protocol, but not the physical

Since no single approach is a win in every situation,

the goal of studying an implementation of MIL-STD

188-220A has been to identify not only opportunities

for exploiting parallelism, but also the most promising

approach from this list for this particular protocol.

MIL-STD 188-220A IMPLEMENTATION

The military standard MIL-STD 188-220A protocol [10] specifies interoperability of command, control, communications, computers, and intelligence (C4I) systems via Combat Net Radio (CNR) on the battlefield. The architecture of MIL-STD 188-220A uses the ISO 7-layer reference model. The April 1995 draft of the standard


ing where parallelism might be applied is to investigate

where the code is spending most of its execution cycles.

Running the code as explained in the previous sections and applying Sun's workshop tools resulted in a profile that indicates the percent of execution time spent in each procedure and the number of calls to each procedure.

In total, there are approximately 350 functions called to process a message. The top 60 represent 90% of the execution time. A profile of the execution can be broken into three areas. First, we found about 25% of total execution time to be system time, time spent by the operating system working on behalf of the code, typically as a result of UNIX system calls. Under different systems, or on actual embedded hardware, this may differ. System time is time not available for parallelization.

The second component of execution time is user time spent in libraries, accounting for about 15% of total execution time. Primarily, this is time spent executing code in the standard C library and, to a much lesser extent, the library for the profiler and data collector itself. Again, this code does not represent an opportunity for parallelization.

The third and remaining component is user time spent in the protocol processing code. Our profiling runs indicated that the largest single routine, both in number of calls and in execution cycles, was a routine called fillword_Bitman. Overall, more than 25% of processing time was spent in this routine. Unfortunately, this is a fairly low-level routine that does bit manipulation. There are no loops nor any complex code that could be optimized in an obvious fashion. The next most frequently called routine was another bit manipulation routine called readBits_Mci, which took 11% of the execution time. The same observation about the structure of readBits_Mci can be made as for fillword_Bitman. Overall, these two routines represent about 50% of user time. In summary, these results indicate that functional parallelism is not a good choice for increasing performance of this protocol code.
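The profile percentages above can be checked with a few lines of arithmetic. The sketch below is written in Python for illustration and rests on two assumptions that are not stated in the text: that "user time" means library time plus protocol time, and that Amdahl's law gives a reasonable upper bound when only the protocol code can be parallelized. The constant names are hypothetical.

```python
# Shares of total execution time reported in the profile (percent).
SYSTEM = 25      # operating-system time, not available for parallelization
LIBRARY = 15     # user time in the C library and profiler library
FILLWORD = 25    # reported as "more than 25%"; taken as 25 here
READBITS = 11    # second bit-manipulation routine

protocol = 100 - SYSTEM - LIBRARY     # user time in protocol code
user = LIBRARY + protocol             # all user time (assumed definition)
two_routines_of_user = (FILLWORD + READBITS) / user


def amdahl_bound(parallel_share, processors):
    """Upper bound on speedup when only parallel_share of the work scales."""
    serial = 1.0 - parallel_share
    return 1.0 / (serial + parallel_share / processors)
```

Under these assumptions the protocol code accounts for 60% of execution time, the two bit-manipulation routines account for 36/75 = 48% of user time (consistent with the "about 50%" figure), and `amdahl_bound(protocol / 100, p)` stays below 2.5 for any number of processors `p`.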

Another potential opportunity for parallelism that we investigated is the ability to process one message while receiving the next message. Close examination of the message specification reveals that each message is not encapsulated in a discrete component such that the processing of different parts of the message could be parallelized. The message structure requires that the

bits be examined and then the message processed ac-

hardware such as cables, circuit boards, etc.

A wrapper was constructed to generate messages by reading a file and then sending the messages up the protocol stack (receive) and responding down the protocol stack (transmit). The wrapper consists of two components. The first, pcmain.c, is the entry point for the entire program. The main routine opens the data file, and then the entry point to the receive stack, pctest.c, is called. A message is detected if reading the data file does not result in an error.

Once the message is read, it is passed into the protocol stack, processed, and then transferred to the transmit protocol stack. Eventually, the processed message is returned to the user interface and displayed. In actual tests, the display of the message was not performed since it increased system call overhead significantly.

The last step in the modification of the protocol code was to modify the routines corresponding to higher layers to return immediately with no processing. The effect of this modification is to profile only the implementation of the physical and data link layers.

One method for this setup would be to have two separate processes and use the UNIX pipe to hook the processes together. This was rejected for our experiments

because of the system overhead involved in using the

pipe. Instead, the transmit and receive stacks were implemented in one process, eliminating system overhead

due to the experimentation procedure.

For this experiment, we were unable to use actual data gathered from real communications. In particular, we did not have records available that showed the distribution of the various sizes of messages in a typical session nor the various types of messages that may be used in a typical session. We ran our experiments with three basic distributions of message sizes: exponential, normal (binomial) and uniform. For the exponential distribution, the message profile was heavily weighted towards smaller messages, 75% being smaller than 50 bytes in length. For the binomial distribution, 75% of the messages were larger than 50 bytes, but the majority (95%) were less than 100 bytes in length. For the uniform distribution, message sizes were equally distributed between 1 and 1000 bytes. These message sizes were determined to be reasonable based on examination of the message format specification. A typical large message might be a request for a file transfer, while a small message (less than 10 bytes) could represent a simple command.
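The text does not give the parameters of the three distributions, only the percentile figures quoted above. The following Python sketch shows one way to derive parameters that match those figures. It is an assumption for illustration, not the generator used in the experiments; it uses a continuous normal distribution in place of the binomial, and all names are hypothetical.

```python
import math
import random
from statistics import NormalDist

# Exponential: P(size < 50) = 0.75  =>  1 - exp(-50 / mean) = 0.75
EXP_MEAN = 50 / math.log(4)

# Normal: P(size > 50) = 0.75 and P(size < 100) = 0.95
_z25 = NormalDist().inv_cdf(0.25)   # z-score of the 25th percentile
_z95 = NormalDist().inv_cdf(0.95)   # z-score of the 95th percentile
NORM_SIGMA = (100 - 50) / (_z95 - _z25)
NORM_MU = 50 - _z25 * NORM_SIGMA


def sample_sizes(kind, n, rng):
    """Return n message sizes in bytes (at least 1) for the named distribution."""
    if kind == "exponential":
        draw = lambda: rng.expovariate(1 / EXP_MEAN)
    elif kind == "normal":
        draw = lambda: rng.gauss(NORM_MU, NORM_SIGMA)
    elif kind == "uniform":
        draw = lambda: rng.uniform(1, 1000)
    else:
        raise ValueError(kind)
    return [max(1, round(draw())) for _ in range(n)]
```

With these formulas the exponential mean comes out near 36 bytes and the normal distribution has a mean near 65 bytes with a standard deviation near 22 bytes. A call such as `sample_sizes("uniform", 1000, random.Random(0))` yields a reproducible list of sizes between 1 and 1000 bytes.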

Profiling the execution. The first step in identify

is a message size of 0, which corresponds to the non-threaded version.

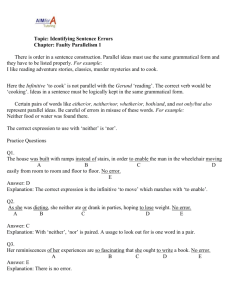

The plotted results agree with the predicted results regarding message size and usefulness of threading. In all three message size distributions, we were able to determine a message size threshold where use of threads would benefit the overall performance. In particular, the threshold for the uniform distribution is a message size of 500 bytes, for normal is 200 bytes, and for exponential is 200 bytes. These numbers correspond to the lowest points in the graphs. The amount less than the non-threaded case (message size 0) indicates the overall benefit. Note that the graph turns upward before returning to the optimal level. This is a reflection of the overhead incurred from using too small a message size. Not every possible message size was tested, but the peak in the graph would reflect an approximation of the worst case for choosing the size of the message.
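The idea of a message size threshold can be sketched as follows. This is a minimal Python illustration, not the pthreads code used in the study. The `handle` function is a hypothetical stand-in for protocol processing, and treating a threshold of 0 as "never thread" is an assumption that follows the baseline convention of Figure 1.

```python
import threading


def handle(msg: bytes) -> int:
    """Hypothetical stand-in for protocol processing: count the set bits."""
    return sum(bin(b).count("1") for b in msg)


def dispatch(messages, threshold):
    """Process short messages inline and long ones in their own thread.

    Returns the per-message results and the number of threads created.
    A threshold of 0 disables threading (assumed baseline convention).
    """
    results = [None] * len(messages)
    threads = []

    def worker(i, msg):
        results[i] = handle(msg)

    for i, msg in enumerate(messages):
        if threshold > 0 and len(msg) > threshold:
            t = threading.Thread(target=worker, args=(i, msg))
            t.start()
            threads.append(t)
        else:
            worker(i, msg)      # below the threshold: sequential path
    for t in threads:
        t.join()                # wait for all threaded messages to finish
    return results, len(threads)
```

For example, with messages of 1, 10, and 300 bytes and a threshold of 200, only the 300-byte message is given its own thread, while the two short messages are processed on the main path.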

cordingly. Thus, the problem with this approach in this

protocol implementation is that the size of the message

is not known until the bits are examined. At that time,

the remainder of the message may be very short (2-3

words) and the processing overhead to thread the message may be excessive.

Based on our profiling results, we concluded that the most promising approach for performance improvement through parallelization would be to thread the code based on messages. Our implementation of this approach to parallelization and our results are described in the next section.

Parallelization of the Code. To implement parallelism within the MIL-STD 188-220A implementation in this study, we used the POSIX pthreads library. We modified the protocol code to create a new thread each time a message arrives. The new thread then runs in parallel with the existing main path of execution of the program and in parallel with any other threads that were started to process a previous message.

Typically, threading code is most effective when processing involves system overhead that results in waiting for a system resource. For this protocol code, overall system overhead is relatively small (around 25% of the total processing time) on average for a stream of messages of any message sizes. However, the overhead is a constant for a given message, that is, the number of cycles of system overhead is the same, regardless of the message size. This means that for a small message, the overhead will be close to 100%, while for a large message, the overhead is less than 5%.
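The effect of a constant per-message overhead can be made concrete with a simple cost model. In the Python sketch below, the overhead share for a message of n bytes is c / (c + k * n), where c is the fixed overhead and k the processing cost per byte. The linear model and the numeric values of c and k are assumptions chosen for illustration, not measurements from the study.

```python
def overhead_fraction(size_bytes, fixed_cost, cost_per_byte):
    """Share of a message's processing time taken by size-independent overhead."""
    return fixed_cost / (fixed_cost + cost_per_byte * size_bytes)


# Hypothetical costs in arbitrary time units.
FIXED_COST = 50.0
COST_PER_BYTE = 1.0

small = overhead_fraction(1, FIXED_COST, COST_PER_BYTE)      # 50 / 51
large = overhead_fraction(1000, FIXED_COST, COST_PER_BYTE)   # 50 / 1050
```

With these illustrative values a 1-byte message spends about 98% of its time in overhead, while a 1000-byte message spends just under 5%, matching the qualitative behavior described above.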

The net effect is that if you create a thread to process a small message, the increase in system overhead due to the thread management overhead results in processing time for that message actually increasing rather than decreasing. At the other end of the scale, large messages should be threaded to improve throughput by allowing many messages to be processed in parallel with the larger messages.

This phenomenon was handled in our implementation

by selecting a threshold based on message size. Below

the threshold, the messages are processed sequentially.

Above the threshold, a new thread is created, and the

message is processed in parallel. The graphs depicted

in Figure 1 show processing times of both the threaded

version and non-threaded version, for various threshold

values. The message size threshold is plotted against

system time, user time and total time. The baseline

SUMMARY AND FUTURE DIRECTIONS

To our knowledge, this is the first effort to study the opportunities for potential performance enhancements due to parallelization in implementations of MIL-STD 188-220A. The results found through experimentation are compared with the predicted results of [13]. These experiments focus on finding parallel opportunities using functional parallelism and packet parallelism as described above. Functional parallelism does not seem to be promising based on the fact that most of the processing time is being spent doing very low-level operations. These operations are very fast, and overhead associated with parallelizing that code would actually lower the throughput. If you consider one message to be a packet that is handled by one level of the protocol stack, then some benefit is realized by packet parallelization. As noted in [13], such benefits are gained only when processing is large enough. This is indicated by using threads on larger messages.

Future directions for continued study will first focus on the use of actual data files that contain real messages, which would potentially alter the profile. Actual measurements of the number of messages and size of messages may influence the resulting throughput and parallelization opportunities.

"The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Army Research Laboratory or the U.S. Government."


References

[1] Torsten Braun and Martina Zitterbart. Parallel transport system design. In High Performance Networking, IV, pages 397-412. Elsevier Science Publishers B.V., 1993.

[2] Toong Shoon Chan and Ian Gorton. A parallel approach to high-speed protocol processing. Technical report, University of New South Wales, March 1994.

[3] Christophe Diot and Michel Ng.X. Dang. A high performance implementation of OSI transport protocol class 4; evaluation and perspectives. In 15th Conference on Local Computer Networks, pages 223-230, Minneapolis, Minnesota, 1990.

[4] Willibald A. Doeringer, Doug Dykeman, Matthias Kaiserswerth, Bernd Werner Meister, Harry Rudin, and Robin Williamson. A survey of light-weight transport protocols for high-speed networks. IEEE Transactions on Communications, 38(11):2025-2039, November 1990.

[5] Murry W. Goldberg, Gerald W. Neufeld, and Mabo R. Ito. A parallel approach to OSI connection-oriented protocols. In IFIP Workshop Protocols for High Speed Networks, pages 219-232. Elsevier Science Publishers B.V., 1993.

[6] Mabo R. Ito, Len Y. Takeuchi, and Gerald W. Neufeld. A multiprocessor approach for meeting the processing requirements for OSI. IEEE Journal on Selected Areas in Communications, 11(2):220-227, February 1993.

[7] Niraj Jain, Mischa Schwartz, and Theodore R. Bashkow. Transport protocol processing at GBPS rates. In ACM SIGCOMM, pages 188-199, Philadelphia, 1990.

[8] O.G. Koufopavlou, A.N. Tantawy, and M. Zitterbart. Analysis of TCP/IP for high performance parallel implementations. In 17th Conference on Local Computer Networks, pages 576-585, Minneapolis, Minnesota, 1992.

[9] Thomas F. La Porta and Mischa Schwartz. The multistream protocol: A highly flexible high-speed transport protocol. IEEE Journal on Selected Areas in Communications, 11(4):519-530, May 1993.

[10] Military Standard. Interoperability standard for digital message transfer device subsystems. (MIL-STD-188-220A), July 1995.

[11] Erich M. Nahum, David J. Yates, James F. Kurose, and Don Towsley. Performance issues in parallelized network protocols. In First Symposium on Operating Systems Design and Implementation, 1994.

[12] Douglas C. Schmidt and Tatsuya Suda. The performance of alternative threading architectures for parallel communication subsystems. Submitted to: Journal of Parallel and Distributed Computing, pages 1-19, 1996.

[13] Thomas P. Way, Cheer-Sun Yang, and Lori L. Pollock. Potential performance improvements of MIL-STD 188-220A through parallelism. ARL-ATIRP Second Annual Technical Conference, January 1998.

[14] Martina Zitterbart. Parallelism in communication subsystems. In High Performance Networks, pages 177-194. Kluwer Academic Publishers, 1994.

Figure 1: Results of Parallelization. [Three panels: Exponential Distribution of Message Size, Binomial Distribution of Message Size, and Random Distribution of Message Size. Each panel plots User Time, System Time, Total Time, and the Non-Threaded Baseline, in Time (seconds), against Message Size (bytes).]