HP FlexFabric Reference Architecture: Data Center Trends

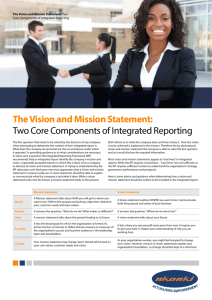
