Detecting Weak Identification by Bootstrap∗

advertisement

Detecting Weak Identification by Bootstrap∗

Zhaoguo Zhan†

March 4, 2014

Abstract

This paper proposes bootstrap resampling as a diagnostic tool to detect weak

instruments in instrumental variable regression. When instruments are not weak,

the bootstrap distribution of the Two-Stage-Least-Squares estimator is close to the

normal distribution. As a result, substantial difference between these two distributions indicates the existence of weak instruments. We use the Kolmogorov-Smirnov

statistic to measure the difference, which is also a proxy for the size distortion of

a one-sided t test associated with Two-Stage-Least-Squares. Monte Carlo experiments and an empirical application are employed for illustration of the proposed

approach.

JEL Classification: C18, C26, C36

Keywords: Weak instruments, weak identification, bootstrap

∗

The author would like to thank the editor and two anonymous referees for their constructive comments, which greatly helped to improve the paper. The author is also very grateful to Frank Kleibergen,

Sophocles Mavroeidis, Blaise Melly and participants in seminar talks at Brown University for their valuable comments. The Monte Carlo study in this paper was supported by Brown University through the

use of the facilities of its Center for Computation and Visualization. All errors remain mine.

†

Mail: School of Economics and Management, Tsinghua University, Beijing, China, 100084. Email:

zhanzhg@sem.tsinghua.edu.cn. Phone: (+86)10-62789422.

1

Introduction

This paper proposes a simple and intuitive method for detecting weak instruments in the

linear Instrumental Variable (IV) regression model. Based on bootstrap resampling, the

underlying idea of the proposed method is straightforward: the bootstrap distribution of

the commonly used Two-Stage-Least-Squares (TSLS) estimator differs substantially from

normality under weak instruments, thus the departure of this bootstrap distribution from

the normal distribution signals the existence of weak instruments.

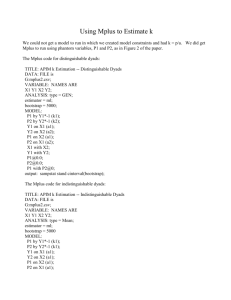

The idea of this paper is graphically illustrated by Figure 1.1 In the left panel, we

present the p.d.f., c.d.f. and Q-Q plot of the bootstrapped TSLS estimator under a weak

instrument. These three plots indicate that when a weak instrument is used, the bootstrap

distribution of the TSLS estimator is substantially different from the normal distribution.

By contrast, a stronger instrument is used to replace the weak instrument to draw the

right panel of Figure 1, which suggests that the bootstrap distribution is closer to the

normal distribution, compared to the left panel. As indicated by Figure 1, this paper

suggests that the strength of instruments can be inferred by comparing the bootstrap

distribution of the standardized TSLS estimator with the standard normal distribution.

There exists a sizeable and growing literature on weak instruments, and more generally,

weak identification, which this paper builds upon. See, e.g., Stock et al. (2002) for an early

survey. It is now well known that when instruments are weak, the TSLS estimator can

be severely biased and its associated t test suffers from size distortion. Staiger and Stock

(1997) and Stock and Yogo (2005) thus suggest the first-stage F test for excluding weak

instruments in the empirically relevant case of one right-hand side endogenous variable,

which is also the focus of this paper.

As TSLS is now a standard toolkit for empirical economists, the F test, together with

the F > 10 rule of thumb in Staiger and Stock (1997) and Stock and Yogo (2005), is

1

Figure 1 results from the empirical application that will be discussed later.

1

Figure 1: p.d.f., c.d.f. and Q-Q plot of two bootstrapped TSLS estimators

1

1

0.9

0.9

0.8

0.8

0.7

0.7

0.6

0.6

0.5

0.5

0.4

0.4

0.3

0.3

0.2

0.2

0.1

0.1

0

−5

0

0

−5

5

0

(a) p.d.f.

(b) p.d.f.

1

1

0.9

0.9

0.8

0.8

0.7

0.7

0.6

0.6

0.5

0.5

0.4

0.4

0.3

0.3

0.2

0.2

0.1

0.1

0

−5

0

0

−5

5

0

(c) c.d.f.

3

2

2

1

1

0

0

−1

−1

−2

−2

−2

−1

0

5

(d) c.d.f.

3

−3

−3

5

1

2

−3

−3

3

(e) Q-Q

−2

−1

0

1

2

3

(f) Q-Q

· · · standardized bootstrap distribution — standard normal

Note: The left panel corresponds to the bootstrap distribution of the standardized TSLS

estimator under a weak instrumental variable. This weak instrument is replaced by a stronger

one, to draw the bootstrap distribution in the right panel. The data is from Card (1995).

2

widely adopted in economic studies to rule out weak instruments. However, it is known

that the F test and the F > 10 rule have its pitfalls. For example, the F statistic itself is

only indirectly related to the TSLS bias or the size distortion of t test, thus this statistic

lacks an intuitive explanation. In addition, F > 10 is only valid under homoscedasticity; to

account for heteroscedasticity, autocorrelation and clustering, Olea and Pflueger (2013)

suggest a modified F test with larger critical values. Furthermore, in Hansen (1982)’s

Generalized Method of Moments (GMM) framework, which nests the linear IV model,

extensions of the F test to detect weak identification are known to be challenging (see,

e.g., Wright (2002)(2003)).

This paper contributes to the weak instruments literature as follows. First, we adopt

the Edgeworth expansion to show that the F test with the F > 10 rule of thumb does not

imply that the size distortion of the t test with critical values from the normal distribution

is controlled under 5% at all pre-specified levels. Following the existing literature, the

strength of instruments is measured by the so-called concentration parameter, and we

show that the concentration parameter appears in the leading term of the Edgeworth

expansion of the TSLS estimator. This leading term indicates the difference between

the distribution of the TSLS estimator and normality; to make this term less than 5%,

the concentration parameter needs to exceed a threshold, which calls for a much larger F

statistic even in the homoscedasticity and just-identified setup. The F > 10 rule of thumb

thus does not imply that the distribution of the TSLS estimator is well approximated by

the normal distribution.

Second, we show that the bootstrap method provides an intuitive and realistic check

for weak instruments, particularly in the empirically relevant case of one right-hand side

endogenous variable, when the number of instruments is limited (e.g., the just-identified

case). We show that the bootstrap distribution of the standardized TSLS estimator under

weak instruments can be substantially different from the normal distribution. The reason

is that, the bootstrap resample preserves the strength of identification, so that if weak in3

struments exist, they are also likely to exist in the bootstrap resample. As a result, the difference between the bootstrap distribution and the normal distribution conveys the information of identification strength. We measure this difference by the Kolmogorov-Smirnov

distance, since it can be viewed as the proxy for size distortion of the t test with critical

values from the normal distribution. By examining this difference, empirical researchers

can thus easily evaluate how strong or weak the instruments are. Unlike the existing

detection methods (see, e.g., Hahn and Hausman (2002), Stock and Yogo (2005), Wright

(2002)(2003), Bravo et al. (2009), Inoue and Rossi (2011), Olea and Pflueger (2013)) that

mainly provide a statistic to measure the strength of identification or instruments, the

proposed bootstrap approach has the unique feature of providing a graphic view, as shown

in Figure 1, and its reported statistic can be directly interpreted.

Finally, although we focus on the linear IV regression model under homoscedasticity,

it is also likely that the idea of this paper can be extended to more generalized models.

For example, under strong identification, the bootstrap distribution of the conventional

GMM estimator is expected to be close to normality (see, e.g., Hahn (1996)); by contrast,

under weak identification, this bootstrap distribution could be far from normality (see,

e.g., Hall and Horowitz (1996)). Hence in general, it could be feasible to use the bootstrap

as a universal tool for detecting weak identification in both IV and GMM applications,

although this requires future investigation.

It is worth emphasizing that other than TSLS and its associated t test, there exist several robust tests (see, e.g., Anderson and Rubin (1949), Stock and Wright (2000),

Kleibergen (2002)(2005), Moreira (2003)), which can be inverted to produce reliable confidence intervals for parameters of interest, regardless of the strength of instruments.

Although these robust methods are naturally appealing, it is still common practice that

empirical economists exclude weak instruments by reporting a large F statistic, before

proceeding to apply TSLS and the t test. The reason is at least partially due to the

understanding that if F > 10, then the outcome of TSLS and t is similar to that of in4

verting robust tests. This paper targets this common practice by arguing that F > 10 is

a relatively low benchmark for excluding weak instruments. For instance, in the empirical

application with F > 10 adopted in this paper, we show that the difference in confidence

intervals by t and robust methods is not negligible, while the proposed bootstrap method

provides a more realistic check than the F test.

This paper is organized as follows. In Section 2, we show that the popular F > 10

rule of thumb is a low benchmark for weak instruments in the linear IV regression model.

In Section 3, we propose the bootstrap-based method for detecting weak instruments.

Monte Carlo evidence and an illustrative application are included in Section 4. Section 5

concludes. The proofs are attached in the Appendix.

2

2.1

Instrumental variable, Edgeworth expansion

Linear IV model

Following Stock and Yogo (2005), we focus on the linear IV model under homoscedasticity,

with n i.i.d. observations:

Y

= Xθ + U

(1)

X = ZΠ + V

(2)

where U = (U1 , ..., Un )′ and V = (V1 , ..., Vn )′ are n × 1 vectors of structural and reduced

form errors respectively, and (Ui , Vi )′ , i =1, ..., n, is assumed to have mean zero with

2

ρσu σv

σu

covariance matrix Σ =

. Z = (Z1 , ..., Zn )′ is the n × k matrix of

2

ρσu σv

σv

instruments with E(Zi Ui ) = 0 and E(Zi Vi ) = 0. Y = (Y1 , ..., Yn )′ and X = (X1 , ..., Xn )′

are n × 1 vectors of endogenous observations. The single structural parameter of interest

is θ, while Π is the k × 1 vector of nuisance parameters.

5

The number of instruments k is assumed to be fixed in the model setup. Hence we do

not adopt the many instruments asymptotics in, e.g., Chao and Swanson (2005), where

the number of instruments grows as the number of observations increases. In addition,

p

′

′

d

we have Z ′ Z/n → Qzz by the law of large numbers, and ( Z√nU , Z√nV ) → (Ψzu , Ψzv ) by the

central limit theorem, provided the moments exist, where Qzz = E(Zi Zi′ ) is invertible,

(Ψ′zu , Ψ′zv )′ ∼ N (0, Σ ⊗ Qzz ).

The commonly used TSLS estimator for θ, denoted by θ̂n , is written as:

−1 ′

θ̂n = X ′ Z(Z ′ Z)−1 Z ′ X

X Z(Z ′ Z)−1 Z ′ Y

(3)

Assumption 1 (Strong Instrument Asymptotics). Π = Π0 6= 0, and Π0 is fixed.

Under Assumption 1 and the setup of the model, the classical result is that θ̂n is

asymptotically normally distributed, according to first-order asymptotics:

√

d

n(θ̂n − θ) → (Π′0 Qzz Π0 )−1 Π′0 Ψzu

(4)

We slightly rewrite the asymptotic result above to standardize θ̂n :

√ θ̂n − θ d

n

→ N(0, 1)

σ

(5)

where σ 2 = (Π′ Qzz Π)−1 σu2 , and N(0, 1) stands for the standard normal variate, whose

c.d.f. and p.d.f. are denoted by Φ(·) and φ(·), respectively.

2.2

Weak instruments

The sizeable literature on weak instruments highlights that in finite samples, the difference

in distribution between the TSLS estimator and the normal variate could be substantial.

See Nelson and Startz (1990), Bound et al. (1995), etc. The substantial difference further

6

implies the TSLS bias as well as the size distortion of the associated t test using critical

values from the normal distribution. This is often referred to as the weak instrument

problem, or more generally, the weak identification problem.

Stock and Yogo (2005) provide some quantitative definitions of weak instruments

based on the TSLS bias and size distortions of the t/Wald test. For example, if the size

distortion of the t test exceeds 5% (e.g., the rejection rate under the null exceeds 10% at

the 5% level), then instruments are deemed weak. Motivated by Stock and Yogo (2005),

we adopt the 5% rule and provide a similar quantitative definition of weak identification.

Definition 1. The identification strength of IV applications is deemed weak if the maximum difference between the distribution function of the standardized IV estimator and

that of the standard normal variate exceeds 5%; otherwise the identification is deemed

strong.

Under this definition, identification is deemed weak iff the Kolmogorov-Smirnov distance below exceeds 5%:

√ θ̂ − θ

n

≤ c) − Φ(c)

KS = sup P ( n

σ

−∞<c<∞

Note that

√

(6)

n θ̂nσ−θ is the non-studentized t statistic, thus Definition 1 can be viewed as

the following: if the size distortion of a one-sided non-studentized t test at any level exceeds

5%, then identification is deemed weak. In this sense, Definition 1 can be considered as

an extension of the definition in Stock and Yogo (2005), since it aims to control the size

distortion at any nominal level, while the definition in Stock and Yogo (2005) relies on a

preset significance level (e.g., the commonly used 5%).

7

2.3

Edgeworth expansion

In order to illustrate the departure of

√

n θ̂nσ−θ from normality, we employ the Edgeworth

expansion. The following result is an application of Theorem 2.2 in Hall (1992).

Theorem 2.1. Under Assumption 1, if the following two conditions for the (2k + k 2 ) × 1

random vector Ri = (Xi Zi′ , Yi Zi′ , vec(Zi Zi′ )′ )′ hold:

i. E(||Ri ||3 ) < ∞,

ii. lim sup||t||→∞|Eexp(it′ Ri )| < 1,

then

√

n θ̂nσ−θ admits the two-term Edgeworth expansion uniformly in c, −∞ < c < ∞:

√ θ̂n − θ

P( n

≤ c) = Φ(c) + n−1/2 p(c)φ(c) + O(n−1 )

σ

(7)

where p(c) is a polynomial of degree 2 with coefficients depending on the moments of Ri

up to order 3.

A similar result is stated in Moreira et al. (2009), where the necessity of the two technical conditions i and ii is explained: the first condition is the imposed minimum moment

assumption, while the second is Cramér’s condition discussed in Bhattacharya and Ghosh

(1978).

Based on the Edgeworth expansion above, the leading term that affects the departure

√

of n θ̂nσ−θ from normality is captured by n−1/2 p(c)φ(c), which shrinks to zero as n goes

to infinity. In the corollary below, we provide the closed form of p(c) in a simplified setup:

the empirically relevant just-identified case.

Corollary 2.2. Under the assumptions of Theorem 2.1, if Zi is independent of Ui , Vi and

the model is just-identified, i.e., k = 1, then:

p(c) = q

ρc2

Π′0 Qzz Π0

σv2

8

(8)

and

√

n θ̂nσ−θ admits the two-term Edgeworth expansion uniformly in c, −∞ < c < ∞:

√ θ̂n − θ

n−1/2 ρc2 φ(c)

ρc2 φ(c)

≤ c) = Φ(c) + q ′

+ O(n−1 ) = Φ(c) + p

+ O(n−1)

P( n

2

Π0 Qzz Π0

σ

µ

2

(9)

σv

Corollary 2.2 indicates that the departure of distribution of the TSLS estimator from

normality in the just-identified model is crucially affected by two parameters: ρ, which

measures the degree of endogeneity; and n

Π′0 Qzz Π0

,

σv2

which is the (population) concentration

parameter when k = 1:

µ2 ≡ E(

Π′ Z ′ ZΠ

Π′0 Qzz Π0

)

=

n

kσv2

σv2

(10)

µ2 is the so-called concentration parameter widely used in the weak instrument literature. Since we divide k in its definition, µ2 can also be viewed as the average strength

of the k instruments. As indicated by Corollary 2.2, for the normal distribution to well

approximate the distribution of the TSLS estimator, µ2 needs to be large.

2.4

Insufficiency of F > 10

The first-stage F test advocated by Staiger and Stock (1997) is commonly used for detecting weak instruments in the TSLS procedure. For the linear IV model described above,

the F statistic is computed by:

F =

Π̂′n Z ′ Z Π̂n

kσ̂v2

(11)

where Π̂n = (Z ′ Z)−1 Z ′ X, σ̂v2 = (X − Z Π̂n )′ (X − Z Π̂n )/(n − k). Thus the F statistic can

be viewed as an estimator of the concentration parameter µ2 .

Staiger and Stock (1997) suggest a rule of thumb for weak instruments, i.e., if F is less

than 10, then instruments are deemed weak. As explained in Stock and Yogo (2005), this

rule of thumb is motivated by the quantitative definition of weak instruments based on

the TSLS bias under k ≥ 3, rather than the size of the t test. Consequently, F > 10 does

9

not necessarily imply that the size distortion of the t test is controlled. In fact, to make

the size distortion of the two-sided t test less than 5% at the 5% level, Stock and Yogo

(2005) suggest F needs to exceed 16.38 in the just-identified case.2

However, as we will show below, Corollary 2.2 suggests that even F > 16.38 could not

exclude the type of weak identification in Definition 1. This implies that in the empirically

relevant case of just-identification, the size distortion of the one-sided t test could still be

severe, even when F > 16.38.

To illustrate the insufficiency of F > 10 as well as F > 16.38, we omit the O(n−1 )

term of the Edgeworth expansion in Corollary 2.2 as if the leading term n−1/2 p(c)φ(c)

solely determines the size distortion, then we approximately have strong identification if

for all −∞ < c < ∞:

−1/2 2

′

n

q ρc φ(c) ≤ 5% =⇒ n Π0 Qzz Π0 ≥ 400ρ2 c4 φ2 (c)

Π′0 Qzz Π0 σv2

2

(12)

σv

√

At the point c = ± 2, 400ρ2 c4 φ2 (c) achieves its maximum. When the degree of

endogeneity is severe, i.e., |ρ| ≈ 1, the maximum is slightly above 34. Consequently,

the concentration parameter roughly needs to exceed 34 to avoid the 5% distortion in

Definition 1, if endogeneity is severe.

The argument in (12) thus suggests that the F > 10 rule of thumb is not sufficient to

rule out weak identification in Definition 1. In the empirically relevant just-identified case,

for example, the first-stage F statistic is an estimator of the concentration parameter. If

F exceeds 10 but is lower than, say 34, then it is still very likely that the concentration

parameter is not large enough to exclude the 5% distortion when it comes to the one-sided

t test. To rephrase, even when F > 10 holds in empirical applications, the chance that

the distribution of the TSLS estimator substantially differs from normality is still large,

which further induces the potentially substantial size distortion of the t test.

2

see Table 5.1, 5.2 in Stock and Yogo (2005).

10

As illustrated above, neither the F > 10 rule of thumb in Staiger and Stock (1997) nor

F > 16.38 in Stock and Yogo (2005) implies that the size distortion of the one-sided t test

can be controlled under 5%. Given this result, a question naturally arises: other than F ,

can we have another method for detecting weak instruments? Ideally, this method should

provide a realistic check for the deviation of the TSLS distribution from normality, and be

easy-to-use. The rest of this paper suggests that the bootstrap could be such a method.

3

Bootstrap

Since its introduction by Efron (1979), the bootstrap has become a practical tool for

conducting statistical inference, and its properties can be explained using the theory of

the Edgeworth expansion in Hall (1992). As an alternative to the limiting distribution,

the bootstrap approximates the distribution of a targeted statistic by resampling the

data. In addition, there is considerable evidence that the bootstrap performs better

than the first-order limiting distribution in finite samples. See, e.g., Horowitz (2001).

However, the bootstrap does not always work well. When IV/GMM models are weakly

identified, for instance, the bootstrap is known to provide a poor approximation to the

exact finite sample distribution of the conventional IV/GMM estimators, as explained in

Hall and Horowitz (1996).

Nevertheless, the fact that the bootstrap fails still conveys useful information for the

purpose of this paper. When instruments are weak, the bootstrap distribution of the TSLS

estimator can be far away from normal; by contrast, when instruments are not weak, the

bootstrap distribution is a good proxy for the exact distribution of the TSLS estimator,

both of which are close to normal. Thus we can examine the departure of the bootstrap

distribution from the normal distribution to evaluate the strength of instruments, as

illustrated by Figure 1 in the Introduction.

11

3.1

Residual Bootstrap

To detect whether there exists weak identification in Definition 1, we are interested in

deriving the Kolmogorov-Smirnov distance. Since the exact distribution of the TSLS

estimator is unknown, we replace it by its bootstrap counterpart.

For this purpose, we employ the residual bootstrap for the linear IV model, as follows.

Step 1: Û, V̂ are the residuals induced by θ̂n , Π̂n in the linear IV model:

Û = Y − X θ̂n

(13)

V̂

(14)

= X − Z Π̂n

Step 2: Recenter Û , V̂ to get Ũ, Ṽ , so that they have mean zero and are orthogonal

to Z.

Step 3: Sampling the rows of (Ũ , Ṽ ) and Z independently n times with replacement,

and let (U ∗ , V ∗ ) and Z ∗ denote the outcome, following the naming convention of bootstrap.

Step 4: The dependent variables (X ∗ , Y ∗ ) are generated by:

X ∗ = Z ∗ Π̂n + V ∗

(15)

Y ∗ = X ∗ θ̂n + U ∗

(16)

As the counterpart of θ̂n , the bootstrapped TSLS estimator θ̂n∗ is computed by the

bootstrap resample (X ∗ , Y ∗ , Z ∗ ):

θ̂n∗

h ′

i−1 ′

′

′

∗

∗

∗′ ∗ −1 ∗′ ∗

= X Z (Z Z ) Z X

X ∗ Z ∗ (Z ∗ Z ∗ )−1 Z ∗ Y ∗

(17)

Under Assumption 1, θ̂n is asymptotically normally distributed. Similarly, the bootstrapped θ̂n∗ has the same asymptotical normal distribution. Also, the bootstrapped TSLS

estimator admits an Edgeworth expansion, as stated below.

12

Theorem 3.1. Under the conditions in Theorem 2.1, the bootstrap version of the nonstudentized t statistic admits the two-term Edgeworth expansion uniformly in c, −∞ <

c < ∞:

√ θ̂∗ − θ̂n

≤ c|Xn ) = Φ(c) + n−1/2 p∗ (c)φ(c) + O(n−1 )

P( n n

σ̂

′

(18)

′

where σ̂ 2 = (Π̂′n ZnZ Π̂n )−1 ŨnŨ , Xn = (X, Y, Z) denotes the sample of observations, and

p∗ (c) is the bootstrap counterpart of p(c).

3.2

Approximation of KS by KS ∗

With the help of the bootstrap, we can now approximate the unknown KolmogorovSmirnov distance KS in (6) by KS ∗ below:

√ θ̂∗ − θ̂

n

KS ∗ = sup P ( n n

≤ c|Xn ) − Φ(c)

σ̂

−∞<c<∞

(19)

√ ∗

To compute KS ∗ , we need P ( n θ̂n −σ̂ θ̂n ≤ c|Xn ), which can be derived by bootstrapping

as follows. Re-do the residual bootstrap procedure B times to compute {θ̂n∗i , i = 1, ..., B},

where θ̂n∗i denotes the ith TSLS estimator by bootstrapping. By the strong law of large

P

√ θ̂n∗i −θ̂n

√ ∗

a.s

numbers, we have B1 B

≤ c|Xn ) → P ( n θ̂n −σ̂ θ̂n ≤ c|Xn ). Since we can

i=1 1( n

σ̂

√ ∗

make B sufficiently large, P ( n θ̂n −σ̂ θ̂n ≤ c|Xn ) is effectively given. Consequently, KS ∗ is

effectively given, once the data is provided.

For KS ∗ to be a good proxy for KS, the bootstrap distribution needs to well approximate the exact distribution of the TSLS estimator. In fact, under strong instruments,

√

both KS ∗ and KS shrink to zero at the speed of n, and KS ∗ approximates KS super

consistently, as stated by the theorem below.

Theorem 3.2. Under the conditions in Theorem 2.1: KS = Op (n−1/2 ), KS ∗ = Op (n−1/2 ),

13

KS ∗ − KS = Op (n−1 ).3

Theorem 3.2 suggests that the bootstrap-based KS ∗ is the ideal proxy for KS under

strong instruments, since their difference is negligible. By the same logic, the sampling

distribution of KS ∗ can also be accounted for by bootstrapping. The argument is straightforward: just as we can use the bootstrap-based KS ∗ as the proxy for KS, we can similarly

apply this principle again to approximate KS ∗ by its bootstrap-based counterpart denoted

by KS ∗∗ . Consequently, a double-bootstrap procedure described below can be adopted

to construct the bootstrap confidence interval for KS (see, e.g., Horowitz (2001)).

1. First bootstrap: compute KS ∗ as explained above.

2. Second bootstrap: construct the bootstrap confidence interval of KS.

(a) Take a bootstrap resample from Xn , call it Xn1 ;

(b) Repeat first bootstrap by replacing Xn with Xn1 , and we get

KS ∗∗1

√ θ̂∗∗ − θ̂∗1

n

n

1

≤ c|Xn ) − Φ(c)

= sup P ( n

∗1

σ̂

−∞<c<∞ (20)

where θ̂n∗∗ is the bootstrapped TSLS estimator computed by the resample taken

from Xn1 ; θ̂n∗1 , σ̂ ∗1 defined in Xn1 are the counterpart of θ̂n , σ̂ defined in Xn .

(c) Repeatedly take Xn2 , ..., XnB from Xn , and compute KS ∗∗2 , ..., KS ∗∗B . As a

result, we can construct a 100(1 − α)% bootstrap confidence interval of KS by,

e.g., taking lower and upper

3.3

α

2

quantiles of KS ∗∗1 , ..., KS ∗∗B .

Weak instrument asymptotics

So far, we have shown that KS ∗ is the ideal proxy for KS under strong instruments,

and both KS and KS ∗ shrink to zero as the sample size increases. In this subsection,

3

The Op (·) orders presented in this paper are sharp rates.

14

we show that under weak instruments, neither KS nor KS ∗ is likely to be close to zero.

The reason is that neither the exact distribution nor the bootstrap distribution of the

standardized TSLS estimator will coincide with the normal distribution under weak instruments. We show this result under Assumption 2 below, using Hahn and Kuersteiner

(2002)’s specification to model weak instruments.

Assumption 2. Π =

Π0

,

nδ

where 0 ≤ δ < ∞.

When δ = 0, Assumption 2 reduces to the classic strong instrument asymptotics in Assumption 1. When δ = 12 , Assumption 2 corresponds to the local-to-zero weak instrument

asymptotics in Staiger and Stock (1997). When δ = ∞, there is no identification. In addition, when 0 < δ < 12 , the identification is considered by Hahn and Kuersteiner (2002)

and Antoine and Renault (2009) as nearly weak ; when

1

2

< δ < ∞, the identification is

treated as near non-identified in Hahn and Kuersteiner (2002). Overall, Assumption 2

corresponds to the drifting data generating process with asymptotically vanishing identification strength, which is weaker than in Assumption 1.

It is known that the truncated Edgeworth expansion provides a poor approximation

under weak instruments, we thus rewrite the standardized TSLS estimator θ̂n in the

manner of Rothenberg (1984):4

√ θ̂n − θ

n

=

σ

where ζu ≡

Π′ Z ′ U

σu σv k 1/2 µ

N(0, 1), Svv ≡

d

→ N(0, 1), Svu ≡

V ′ Z(Z ′ Z)−1 Z ′ V

kσv2

ζu + Svu

Π′ Z ′ ZΠ

kσv2 µ2

p

k

µ2

ζv

+ 2 k1/2

+

µ

V ′ Z(Z ′ Z)−1 Z ′ U

kσu σv

= Op (1), µ =

q

(21)

Svv

µ2

= Op (1),

Π′ Z ′ ZΠ

kσv2 µ2

p

→ 1, ζv ≡

Π′ Z ′ V

k 1/2 σv2 µ

d

→

µ2 .

As illustrated by the rewriting of (21), the concentration parameter µ2 crucially affects

the deviation of the distribution of the standardized TSLS estimator from the normal

4

Our rewriting differs from that in Rothenberg (1984), since we allow for random instruments, and

our definition of the concentration parameter incorporates k.

15

distribution, and is thus widely used as a measure of the identification strength in the

weak instruments literature. For the standardized TSLS estimator to be asymptotically

normally distributed, the concentration parameter needs to accumulate to infinity as the

sample size grows. On the other hand, if µ2 remains small, then the distribution of the

standardized TSLS estimator is far from normal.

For this reason, our discussion below focuses on the concentration parameter. It can

be shown that under weak instruments, this parameter does not diverge to infinity. Under

Assumption 2, µ2 is written as:

2

µ =

′

1−2δ Π0 Qzz Π0

n

kσv2

(22)

Similarly, for the bootstrap counterpart of µ2 , denoted by µ∗2 , we get:

[ Πnδ0 + (Z ′ Z)−1 Z ′ V ]′ Z Z[ Πnδ0 + (Z ′ Z)−1 Z ′ V ]

′

µ

∗2

=

k Ṽ ′ Ṽ /n

(23)

We explore the difference between µ2 and µ∗2 in the theorem below, in order to compare

the identification strength in the sample and the bootstrap resample. In particular, we

emphasize that neither µ2 nor µ∗2 accumulates to infinity under weak instruments, which

implies that neither the standardized TSLS estimator nor its bootstrapped version is

approximately normally distributed, and thus neither KS nor KS ∗ will shrink to zero.

Theorem 3.3. Under the specification in Assumption 2:

1. If 0 ≤ δ < 12 , then µ2 = Op (n1−2δ ) → ∞, µ∗2 = Op (n1−2δ ) → ∞, and:

µ∗2 − µ2 = op (1)

16

(24)

2. If δ = 12 , then µ2 =

Π′0 Qzz Π0

,

kσv2

d

µ∗2 →

′

Π′0 Qzz Π0 +2Π′0 Ψzv +Ψzv Q−1

zz Ψzv

,

kσv2

and:

′

2Π′0 Ψzv + Ψzv Q−1

zz Ψzv

µ −µ →

2

kσv

∗2

3. If

1

2

2 d

(25)

d

< δ < ∞, then µ2 = op (1), µ∗2 → χ2k /k, and:

d

µ∗2 − µ2 → χ2k /k

(26)

Theorem 3.3 conveys the following message. When instruments are not very weak

(0 ≤ δ <

1

),

2

the difference between µ∗2 and µ2 is negligible, hence the identification

strength in the bootstrap resample well preserves the original identification strength in

the sample. By contrast, when instruments are weak or almost useless ( 12 ≤ δ < ∞), the

bootstrap does not well preserve the original identification strength, as µ∗2 asymptotically

differs from µ2 , which helps explain why the bootstrap may not function well under weak

instruments.5

Although the bootstrap does not always preserve the original identification strength

(i.e., the difference between µ∗2 and µ2 is not always negligible), Theorem 3.3 shows that

it does preserve the pattern of identification: under strong instruments, both µ∗2 and µ2

accumulate to infinity, hence the bootstrap distribution asymptotically coincides with the

normal distribution; under weak instruments, neither µ∗2 nor µ2 accumulates to infinity,

hence the bootstrap distribution asymptotically differ from the normal distribution.

Note that the mean of the asymptotic difference between µ∗2 and µ2 is equal to 1 when

1

2

≤ δ < ∞ in Theorem 3.3. Thus on average, the bootstrap identification strength of weak

instruments measured by µ∗2 appears similar to but slightly stronger than its counterpart

µ2 . For our purpose of evaluating instruments, this property is desirable: it implies

5

It is a well known phenomenon that the bootstrap does not work well under weak identification, and

Theorem 3.3 helps provide an explanation.

17

that the use of the bootstrapped identification strength to detect weak instruments is

unlikely to exaggerate the actual weak instrument problem, hence the proposed approach

is conservative.

3.4

Many instruments

Although bootstrapping only increases the strength of each weak instrument by roughly

1 on average, the joint strength of k instruments is increased by around k. This might

induce the following suspicion: when there are many weak or even useless instruments,

the bootstrap distribution of the standardized TSLS estimator must be close to normal,

since the total concentration parameter is increased by a large k. This suspicion thus

further implies the proposed bootstrap approach appears improper for detecting many

weak or even useless instruments.

However, the above suspicion is incorrect. A subtle point is as follows: it is not necessary that the more instruments we have, the closer to normal the distribution of the

standardized TSLS estimator. The reason can be viewed from the so-called many instruments asymptotics in, e.g., Chao and Swanson (2005), which provides a rate condition for

the TSLS estimator to be consistent: the total concentration parameter (the average concentration parameter multiplied by k) must grow at a faster rate than that of the number

of instruments; in other words, the average concentration parameter of all k instruments

must accumulate as k gets large, for the TSLS estimator to be consistent. In our model

setup, the number of instruments k is fixed, while µ2 as well as µ∗2 is the average strength

of k instruments. Under weak instruments, Theorem 3.3 (part 2, 3) shows that neither

µ2 nor µ∗2 accumulates to infinity, thus the rate condition in Chao and Swanson (2005) is

not satisfied when

1

2

≤ δ < ∞. The failure in the rate condition further implies that the

bootstrapped TSLS estimator after standardization still differs from a standard normal

variate, even for large k’s.

18

The same reason can also be viewed from (21). Consider the term Svu

q

k

,

µ2

which

needs to converge to zero, for the standardized TSLS estimator to converge to a standard

q

normal variate. Since Svu converges to ρ, µk2 needs to be around zero in large samples.

This requires µ2 to be much larger than k. For instance, by the Edgeworth expansion in

Section 2, we suggest that when k = 1, µ2 needs to exceed 34, for the normal distribution

to well approximate the exact distribution of the standardized TSLS estimator. If k is

q

large, while µ2 is relatively small, then Svu µk2 is non-negligible, which further implies

that the standardized TSLS distribution is far from normal. In other words, for large

k’s, the average strength µ2 must be even larger, for the standardized TSLS estimator

to be close to the standard normal variate. This requirement, corresponding to the rate

condition in Chao and Swanson (2005), does not hold when

1

2

≤ δ < ∞ in our setup

for weak instruments; by contrast, this condition does hold if 0 ≤ δ < 12 , which implies

both the exact and bootstrap distribution of the standardized TSLS estimator converge

to normal. Note that 0 ≤ δ <

while

1

2

1

2

corresponds to strong and nearly weak instruments,

≤ δ < ∞ corresponds to weak and nearly non-identified instruments. Thus the

proposed bootstrap approach is suitable for detecting many weak and nearly non-identified

instruments.

For the reasons above, although the bootstrap could slightly increase the strength of

each instrument, simply adding many weak or irrelevant instruments does not necessarily

drive the bootstrapped version of the standardized TSLS estimator to the standard normal

variate: this depends on the ratio of the overall concentration parameter and the number

of instruments. The proposed bootstrap approach is thus still applicable for large k’s.

3.5

A diagnostic tool

Based on Theorem 3.2 and 3.3 above, it is proper to use KS ∗ to evaluate the strength

of the instruments. Under strong instruments, the difference between KS ∗ and KS is

19

negligible, as stated in Theorem 3.2, and both KS and KS ∗ are close to zero. Under weak

instruments, KS ∗ remains substantially larger than zero, as stated in Theorem 3.3, which

shows that the identification strength in the bootstrap resample does not accumulate as

the sample size increases, making the bootstrap distribution substantially different from

normal.

Associated with the point estimate KS ∗ , the bootstrap confidence interval can be

constructed by the double-bootstrap procedure described above. Reporting KS ∗ and

its associated bootstrap confidence interval would help researchers evaluate how serious

the weak instrument problem could be. Overall, this paper suggests the following: the

bootstrap distribution of the standardized TSLS estimator, together with KS ∗ and its

bootstrap confidence interval, can serve as a diagnostic tool for evaluating instruments.

4

Monte Carlo and application

Monte Carlo evidence and an illustrative application for the proposed bootstrap approach

are presented in this section.

4.1

4.1.1

Monte Carlo

Just-identification

In the data generating process, we employ the just identified linear IV model: Yi =

Xi · 0 + Ui , Xi = Zi · Π + Vi , Ui and Vi are jointly normally distributed with unit variance

and correlation coefficient equal to ρ. Zi ∼ NID(0, 1), i = 1, ..., 1000. We set ρ = 0.99

√

and ρ = 0.50 for severe and medium endogeneity respectively. Π = µ/ 1000, in order to

control the strength of identification.

In Table 1, corresponding to each concentration parameter µ2 , we report the KS

distance between the standardized TSLS estimator and the standard normal variate. The

20

sample median, mean and standard deviation of the bootstrap-based KS ∗ are presented

in subsequent columns, respectively. The number of Monte Carlo replications is 1000,

while the number of bootstrap replications is B = 100000.

First of all, Table 1 shows that as µ2 gets larger, KS becomes smaller. Also, KS

decreases when endogeneity is less severe. In particular, when ρ = 0.99 and µ2 = 35,

KS ≈ 0.05, which is consistent with the Edgeworth expansion in Corollary 2.2 adopted

to show the insufficiency of F > 10. The first two columns of Table 1 suggest that similar

to µ2 , KS is also a reasonable measure for the identification strength.

Secondly, Table 1 indicates that KS ∗ approximates KS well, especially when µ2 is

large. This is consistent with Theorem 3.2 that under strong instruments, KS ∗ is the

ideal proxy for KS. When µ2 is small, although its standard error is sizable, the mean

and median of KS ∗ remain large and close to KS. This does not contradict Theorem 3.3,

which suggests µ2 and µ∗2 are similarly small under weak instruments.

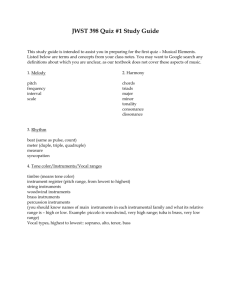

Figure 2 is drawn based on the Monte Carlo results for Table 1, and it further illustrates the idea of this paper: the departure of the bootstrap distribution from normality

conveys the strength of identification. In particular, as identification strength measured

by the concentration parameter µ2 increases, KS ∗ shrinks to zero. When identification

is strong, KS ∗ approximates KS very well, as indicated by the short bounds of KS ∗ .

When identification is weak, similar to KS, KS ∗ also becomes large, although KS ∗ may

not approximate KS well, as indicated by the wide bounds. Overall, KS ∗ conveys the

strength of identification. When endogeneity is severe (ρ = 0.99 in the top panel of Figure

2), the imposed 5% bar implies that the concentration parameter needs to exceed 34; by

contrast, when endogeneity is medium (ρ = 0.50 in the bottom panel of Figure 2), the 5%

bar requires a much lower value for the concentration parameter, which is still well above

10.

21

4.1.2

Over-identification

We also consider over-identification by setting k = 10. The purpose is to check whether

KS ∗ remains as a reasonable proxy for KS, when the number of instruments is large.

The data generating process is similar to the one above for just-identification, with the

√

following modifications: Zi ∼ NID(0, Ik ), Π = ιk · µ/ 1000, where Ik is the k × k identity

matrix, ιk is the k × 1 vector of ones. The Monte Carlo outcome is presented in Table

2. Similar to Table 1, Table 2 also shows that KS ∗ approximates KS well, particularly

under larger µ2 .

Compared to Table 1, Table 2 shows that KS becomes much larger after k is increased.

q

The increase in KS is mainly driven by the term Svu µk2 in (21): for the same µ2 , the

q

increase in k makes Svu µk2 less likely to be negligible, which further induces severer

departure of the standardized TSLS estimator from the standard normal variate. In

addition, since both KS and KS ∗ get larger when k changes from 1 to 10, this implies

the proposed bootstrap tool for evaluating instruments becomes stricter for larger k’s.

In other words, even when the average µ2 remains the same for each instrument, adding

more instruments can increase KS and KS ∗ ; consequently, these instruments are more

likely to be deemed weak by the proposed bootstrap approach.

4.2

Application

In this subsection, we employ an empirical application to illustrate the proposed bootstrap

approach. The same data as in Card (1995) is used. By employing the IV approach, Card

(1995) answers the following question: what is the return to education? Or specifically,

how much more can an individual earn if he/she completes an extra year of schooling?

The data set is ultimately taken from the National Longitudinal Survey of Young Men

between 1966-1981 with 3010 observations, and there are two variables in the data set

that measure college proximity: nearc2 and nearc4, both of which are dummy variables,

22

and are equal to 1 if there is a two-year or four-year college in the local area respectively.

See Card (1995) for detailed description of the data.

To identify the return to education, Card (1995) considers a structural wage equation

as follows:

lwage = α + θ · edu + W ′ β + u

(27)

where lwage is the log of wage; edu is the years of schooling; the covariate vector W

contains the control variables; u is the error term; and edu can be instrumented by nearc2

or nearc4. Among the set of parameters (α, θ, β′ ), θ measuring the return to education

is of interest.

In the basic specification, Card (1995) uses five control variables: experience, the

square of experience, a dummy for race, a dummy for living in the south, and a dummy for

living in the standard metropolitan statistical area (SMSA). To bypass the issue that experience is also endogenous, Davidson and MacKinnon (2010) replace experience and the

square of experience with age and the square of age. Following Davidson and MacKinnon

(2010), we use age, square of age, and the three dummy variables as control variables.

Hence edu is the only endogenous regressor. While Davidson and MacKinnon (2010) simultaneously use more than one instrument, we use the two instruments, nearc2 and

nearc4, one by one as the single instrument for edu to illustrate the proposed bootstrap

approach.

To get started, we examine the strength of instruments by the first stage F test in

Stock and Yogo (2005): if nearc2 is used as the single IV for edu, F = 0.54; if nearc4 is

used as the single IV for edu, F = 10.52. According to the rule of thumb F > 10, these two

F statistics suggest that nearc4 is a strong instrumental variable, while nearc2 is weak.

Table 3 reports the point estimate and 95% confidence interval of return to education

derived by TSLS and t test using nearc2 and nearc4 as the instrument, respectively. In

23

addition, the identification-robust conditional likelihood ratio (CLR)6 test by Moreira

(2003) is also applied to construct a confidence interval as a benchmark. Under the

weaker nearc2, CLR produces a much wider confidence interval than the one produced

by inverting the t test. Under the stronger nearc4, the two confidence intervals by CLR

and t are getting closer, but their difference still appears substantial.

Now we use the bootstrap approach to evaluate the strength of the two instruments,

respectively. To do so, we compute the TSLS estimator of return to education by the

residual bootstrap 2000 times. After standardization, we plot the p.d.f., c.d.f and Q-Q of

the bootstrapped TSLS estimator against the standard normal in Figure 1.

nearc2 as IV (left panel of Figure 1): Just by eyeballing the left panel in Figure 1,

it is obvious that the bootstrap distribution is far from normality, suggesting nearc2 is a

weak instrument. In addition, KS ∗ = 0.2325 further indicates nearc2 is weak, since this

number is well above 0.05. Furthermore, the 90% C.I. for KS constructed by doublebootstrap is found to be (0.0709, 0.4672), which contains no points below 0.05. All of

these results consistently suggest that nearc2 is a weak instrument.

nearc4 as IV (right panel of Figure 1): The bootstrap distribution does not appear

too far from normality by eyeballing the right panel in Figure 1. However, KS ∗ = 0.0774,

which is above 0.05, indicating the strength of nearc4 is not sufficient to exclude weak

identification in Definition 1. The bootstrap confidence interval for KS is (0.0226, 0.1016),

containing points above and below 0.05. Consequently, nearc4 is deemed stronger than

nearc2, but not sufficiently strong to rule out that it is a weak instrument.

As illustrated by this empirical example, the bootstrap provides empirical researchers

a graphic view of the identification strength while the F test does not. By eyeballing the

p.d.f., c.d.f. or Q-Q plot of the bootstrap distribution, empirical researchers can evaluate

how strong or weak the instruments are. Furthermore, compared to the F statistic, the

6

The IV model under consideration is just identified, hence CLR is equivalent to the AR test in

Anderson and Rubin (1949) and K test in Kleibergen (2002).

24

KS ∗ statistic by bootstrap is easier to interpret: it is the maximum difference between

the bootstrap distribution and the normal distribution, which is a proxy for the worst size

distortion when it comes to the one-sided t test. Last but not the least, this empirical

example also suggests that F > 10 does not imply the difference in outcome by t and

robust methods is negligible.

5

Conclusion

This paper suggests using the bootstrap as a tool for detecting weak instruments. The

main message is that the distance between the bootstrap distribution of the standardized

TSLS estimator and the normal distribution conveys information about the strength of

instruments. Empirical researchers can easily detect weak instruments by examining

whether the bootstrap distribution is close to the normal distribution.

The formal discussion of the paper is made under the linear IV model with one endogenous variable and fixed number of instruments. The widely used F test and the

F > 10 rule of thumb in Staiger and Stock (1997) are employed to motivate and compare

with the proposed approach. Monte Carlo evidence and an illustrative application are

also provided. The hope is that the proposed bootstrap-based diagnostic tool can help

empirical researchers decide whether we should be concerned about weak instruments or

not, since this tool is intuitive, graphic and easily applicable.

25

References

Anderson, T. and Rubin, H. (1949). Estimation of the parameters of a single equation

in a complete system of stochastic equations. Annals of Mathematical Statistics, pp.

46–63.

Antoine, B. and Renault, E. (2009). Efficient GMM with nearly-weak instruments.

Econometrics Journal, 12, S135–S171.

Bhattacharya, R. and Ghosh, J. (1978). On the validity of the formal Edgeworth

expansion. Annals of Statistics, 6 (2), 434–451.

Bound, J., Jaeger, D. and Baker, R. (1995). Problems with instrumental variables

estimation when the correlation between the instruments and the endogeneous explanatory variable is weak. Journal of the American Statistical Association, pp. 443–450.

Bravo, F., Escanciano, J. and Otsu, T. (2009). Testing for Identification in GMM

under Conditional Moment Restrictions, working Paper.

Card, D. (1995). Using geographic variation in college proximity to estimate the return to

schooling, Aspects of labour market behaviour: essays in honour of John Vanderkamp.

ed. L.N. Christofides, E.K. Grant, and R. Swidinsky.

Chao, J. and Swanson, N. (2005). Consistent estimation with a large number of weak

instruments. Econometrica, 73 (5), 1673–1692.

Davidson, R. and MacKinnon, J. (2010). Wild bootstrap tests for IV regression.

Journal of Business and Economic Statistics, 28 (1), 128–144.

Efron, B. (1979). Bootstrap methods: another look at the jackknife. Annals of Statistics,

pp. 1–26.

26

Hahn, J. (1996). A note on bootstrapping generalized method of moments estimators.

Econometric Theory, 12 (1), 187–197.

— and Hausman, J. (2002). A new specification test for the validity of instrumental

variables. Econometrica, 70 (1), 163–189.

— and Kuersteiner, G. (2002). Discontinuities of weak instrument limiting distributions. Economics Letters, 75 (3), 325–331.

Hall, P. (1992). The Bootstrap and Edgeworth expansion. Springer Verlag.

— and Horowitz, J. (1996). Bootstrap critical values for tests based on generalized

method of moments estimators. Econometrica, 64 (4), 891–916.

Hansen, L. (1982). Large sample properties of generalized method of moments estimators. Econometrica, pp. 1029–1054.

Horowitz, J. L. (2001). The bootstrap. Handbook of econometrics, 5, 3159–3228.

Inoue, A. and Rossi, B. (2011). Testing for weak identification in possibly nonlinear

models. Journal of Econometrics, 161 (2), 246–261.

Kleibergen, F. (2002). Pivotal statistics for testing structural parameters in instrumental variables regression. Econometrica, pp. 1781–1803.

— (2005). Testing parameters in GMM without assuming that they are identified. Econometrica, pp. 1103–1123.

Moreira, M. (2003). A conditional likelihood ratio test for structural models. Econometrica, pp. 1027–1048.

—, Porter, J. and Suarez, G. (2009). Bootstrap validity for the score test when

instruments may be weak. Journal of Econometrics, 149 (1), 52–64.

27

Nelson, C. and Startz, R. (1990). Some further results on the exact small sample

properties of the instrumental variable estimator. Econometrica, pp. 967–976.

Olea, J. L. M. and Pflueger, C. (2013). A robust test for weak instruments. Journal

of Business & Economic Statistics.

Rothenberg, T. (1984). Approximating the distributions of econometric estimators and

test statistics. Handbook of econometrics, 2, 881–935.

Staiger, D. and Stock, J. (1997). Instrumental variables regression with weak instruments. Econometrica, pp. 557–586.

Stock, J. and Wright, J. (2000). GMM with weak identification. Econometrica, pp.

1055–1096.

—, — and Yogo, M. (2002). A survey of weak instruments and weak identification in

generalized method of moments. Journal of Business and Economic Statistics, 20 (4),

518–529.

— and Yogo, M. (2005). Testing for weak instruments in linear IV regression, Identification and Inference for Econometric Models: Essays in Honor of Thomas Rothenberg.

ed. DW Andrews and JH Stock, pp. 80–108.

Wright, J. (2002). Testing the null of Identification in GMM. International Finance

Discussion Papers, 732.

— (2003). Detecting lack of identification in GMM. Econometric Theory, 19 (02), 322–

330.

28

Appendix

Proof. (Corollary 2.2) Apply Theorem 2.2 in Hall (1992) to compute the following items:

A1 = − r Π′ Qρ zz Π , A2 =

0

0

2

σv

r −6ρ

Π′ Qzz Π0

0

2

σv

, and

ρ

1

p(c) = −A1 − A2 (c2 − 1) = q ′

c2

Π0 Qzz Π0

6

σv2

√ ∗

Proof. (Theorem 3.2) By definition, KS ∗ = sup−∞<c<∞ P ( n θ̂n −σ̂ θ̂n ≤ c|Xn ) − Φ(c),

√

KS = sup−∞<c<∞ P ( n θ̂nσ−θ ≤ c) − Φ(c). Apply the triangle property:

√ θ̂∗ − θ̂

√

θ̂

−

θ

n

n

KS ∗ − KS ≤

sup P ( n n

≤ c|Xn ) − P ( n

≤ c)

σ̂

σ

−∞<c<∞

To complete the proof, we only need to show p∗ (c) = p(c) + Op (n−1/2 ), since:

√ θ̂∗ − θ̂n

P( n n

≤ c|Xn ) = Φ(c) + n−1/2 p∗ (c)φ(c) + O(n−1)

σ̂

√ θ̂n − θ

P( n

≤ c) = Φ(c) + n−1/2 p(c)φ(c) + O(n−1 )

σ

√ θ̂n∗ − θ̂n

√ θ̂n − θ

P( n

≤ c|Xn ) − P ( n

≤ c) = n−1/2 [p∗ (c) − p(c)] φ(c) + O(n−1 )

σ̂

σ

For the random vector Ri , define its population mean µ and its sample mean R̄:

Ri = (Xi Zi′ , Yi Zi′ , vec(Zi Zi′ )′ )′

µ = E(Ri ) = (Π′ Qzz , Π′ Qzz θ, vec(Qzz )′ )′

n

1X

1 ′

R̄ =

Ri =

(X Z, Y ′ Z, vec(Z ′Z)′ )′

n i=1

n

29

2

Define a function A : R2k+k → R that operates on a vector of the type of Ri :

−1

Xi Zi′ (Zi Zi′ )−1 Zi Yi − θ

σ

−1

′

′

−1 ′

[X Z(Z Z) Z X] X ′ Z(Z ′ Z)−1 Z ′ Y − θ

A(R̄) =

σ

A(Ri ) =

[Xi Zi′ (Zi Zi′ )−1 Zi Xi ]

Note that

A(µ) = 0, and

√ θ̂n − θ √

n

= nA(R̄)

σ

For the vector Ri , put:

µi1 ,...,ij = E (Ri − µ)(i1 ) ...(Ri − µ)(ij )

where (Ri −µ)(ij ) denotes the ij -th element in Ri −µ. Thus µi1 ,...,ij is the centered moment

of elements in Ri .

For the function A, put:

ai1 ,...,ij =

∂j

A(x)|x=µ

∂x(i1 ) ...∂x(ij )

where x(ij ) is the ij -th element in x. Thus ai1 ,...,ij is the derivative of A taking values at

µ.

The following results follow Theorem 2.2 in Hall (1992):

1

p(c) = −A1 − A2 (c2 − 1)

6

2 2k+k 2

2k+k

1 X X

A1 =

aij µij

2 i=1 j=1

A2 =

2

2

2

2k+k

X 2k+k

X 2k+k

X

i=1

j=1

ai aj al µijl + 3

2

2

2

2

2k+k

X 2k+k

X 2k+k

X 2k+k

X

i=1

l=1

30

j=1

l=1

m=1

ai aj alm µil µjm

Similarly for the bootstrap counterparts, we have:

1

p∗ (c) = −A∗1 − A∗2 (c2 − 1)

6

2 2k+k 2

2k+k

1 X X ∗ ∗

A∗1 =

a µ

2 i=1 j=1 ij ij

A∗2

=

2

2

2

2k+k

X 2k+k

X 2k+k

X

i=1

j=1

a∗i a∗j a∗l µ∗ijl

+3

2

2

2

2

2k+k

X 2k+k

X

X 2k+k

X 2k+k

i=1

l=1

j=1

a∗i a∗j a∗lm µ∗il µ∗jm

m=1

l=1

where

′

′

′

Ri∗ = (Xi∗ Zi∗ , Yi∗ Zi∗ , vec(Zi∗ Zi∗ )′ )′

Z ′Z ′ Z ′Z

Z ′Z ′ ′

µ∗ = E∗ (Ri∗ ) = (Π̂′n

, Π̂n

θ̂n , vec(

))

n

n

n

µ∗i1 ,...,ij = E∗ (Ri∗ − µ∗ )(i1 ) ...(Ri∗ − µ∗ )(ij )

∂j

A(x)|x=µ∗

∂x(i1 ) ...∂x(ij )

a∗i1 ,...,ij =

p

Note that if we can show that a∗i1 ,...,ij → ai1 ,...,ij and µ∗i1 ,...,ij = µi1 ,...,ij + Op (n−1/2 )

respectively, then it follows that, A∗i = Ai + Op (n−1/2 ), i = 1, 2, and consequently,

p∗ (c) = p(c) + Op (n−1/2 ). For a∗i1 ,...,ij : a∗i1 ,...,ij =

′

′

′

∂j

A(x)|x=µ∗ .

∂x(i1 ) ...∂x(ij )

Under Assump-

p

tion 1, µ∗ = (Π̂′n ZnZ , Π̂′n ZnZ θ̂n , vec( ZnZ )′ )′ → µ = (Π′ Qzz , Π′ Qzz θ, vec(Qzz )′ )′ ; hence by

p

continuous mapping, a∗i1 ,...,ij → ai1 ,...,ij . For µ∗i1 ,...,ij , apply the central limit theorem for

the sample mean, provided the moments exist:

µ∗i1 ,...,ij = E∗ (Ri∗ − µ∗ )(i1 ) ...(Ri∗ − µ∗ )(ij )

n

1 X ∗

=

(Ri − µ∗ )(i1 ) ...(Ri∗ − µ∗ )(ij )

n i=1

= µi1 ,...,ij + Op (n−1/2 )

31

Table 1: Monte Carlo for just-identification

Panel A: ρ = 0.99, k = 1

µ2

KS

1

5

10

20

30

35

40

50

60

100

0.159

0.110

0.089

0.064

0.053

0.049

0.046

0.041

0.038

0.030

KS ∗

median mean

0.140

0.107

0.089

0.067

0.054

0.050

0.047

0.042

0.038

0.030

0.207

0.122

0.092

0.069

0.055

0.051

0.048

0.043

0.039

0.031

s.d.

0.124

0.069

0.032

0.016

0.012

0.011

0.009

0.008

0.007

0.006

Panel B: ρ = 0.50, k = 1

µ2

KS

1

5

10

20

30

35

40

50

60

100

0.099

0.078

0.059

0.040

0.032

0.029

0.027

0.024

0.022

0.017

KS ∗

median mean

0.096

0.073

0.056

0.040

0.030

0.030

0.028

0.025

0.023

0.018

0.163

0.091

0.063

0.044

0.032

0.032

0.029

0.026

0.024

0.019

s.d.

0.125

0.073

0.037

0.019

0.012

0.011

0.010

0.009

0.008

0.007

Note: µ2 is the concentration parameter; KS is the Kolmogorov-Smirnov distance between the

standardized TSLS estimator and the standard normal variate; KS ∗ is the bootstrap

approximation to KS; ρ is the correlation coefficient of structural errors that measures the

degree of endogeneity; k is the number of instruments for the single endogenous regressor. The

detailed description of D.G.P. for this table is provided in the section of Monte Carlo.

32

Figure 2: Illustration of µ2 , KS and KS ∗ for just-identification

Panel A: ρ = 0.99, k = 1

0.5

0.4

KS

0.3

0.2

0.1

0

0

10

20

30

40

50

60

70

80

90

100

60

70

80

90

100

µ2

Panel B: ρ = 0.50, k = 1

0.5

0.4

KS

0.3

0.2

0.1

0

0

10

20

30

40

50

µ2

— KS

· · · median of KS ∗ - - - 90% bounds of KS ∗

Note: KS is the Kolmogorov-Smirnov distance between the standardized TSLS estimator and

the standard normal variate; KS ∗ is the bootstrap approximation to KS; ρ is the correlation

coefficient of structural errors that measures the degree of endogeneity; k is the number of

instruments for the single endogenous regressor. The detailed description of D.G.P. for this

figure is provided in the section of Monte Carlo.

33

Table 2: Monte Carlo for over-identification

Panel A: ρ = 0.99, k = 10

µ

2

1

5

10

20

30

35

40

50

60

100

KS ∗

median mean

KS

0.732

0.459

0.340

0.246

0.203

0.189

0.176

0.158

0.145

0.112

0.615

0.424

0.325

0.240

0.198

0.184

0.173

0.156

0.143

0.111

0.608

0.426

0.326

0.240

0.199

0.185

0.173

0.156

0.143

0.111

s.d.

0.073

0.048

0.032

0.022

0.018

0.017

0.017

0.016

0.015

0.015

Panel B: ρ = 0.50, k = 10

µ2

KS

1

5

10

20

30

35

40

50

60

100

0.379

0.231

0.171

0.125

0.102

0.095

0.089

0.080

0.074

0.056

KS ∗

median mean

0.185

0.183

0.152

0.116

0.098

0.091

0.086

0.077

0.071

0.056

0.201

0.187

0.153

0.117

0.098

0.091

0.086

0.078

0.071

0.056

s.d.

0.110

0.057

0.035

0.022

0.018

0.017

0.016

0.015

0.014

0.013

Note: µ2 is the concentration parameter; KS is the Kolmogorov-Smirnov distance between the

standardized TSLS estimator and the standard normal variate; KS ∗ is the bootstrap

approximation to KS; ρ is the correlation coefficient of structural errors that measures the

degree of endogeneity; k is the number of instruments for the single endogenous regressor. The

detailed description of D.G.P. for this table is provided in the section of Monte Carlo.

34

Table 3: Return to education in Card (1995)

IV: nearc2

IV: nearc4

0.54

10.52

0.5079

0.0936

(-0.813, 1.828)

(-0.004, 0.191)

(−∞, −0.1750] ∪ [0.0867, +∞)

[0.0009, 0.2550]

0.2325

0.0774

first-stage F statistic

TSLS estimate θ̂n

95% C.I. by t/Wald

95% C.I. by AR/CLR/K

KS ∗

Note: This table presents the estimate θ̂n and confidence intervals for return to education

using the data in Card (1995). The first stage F statistic is reported for the two instrumental

variables, nearc2, nearc4, which are used one by one for the endogenous years of schooling.

The included control variables are age, square of age, and dummy variables for race, living in

the south and living in SMSA. KS ∗ is the Kolmogorov-Smirnov distance between the bootstrap

distribution of the standardized TSLS estimator and the standard normal distribution.

35