Orthogonality

advertisement

Jim Lambers

MAT 610

Summer Session 2009-10

Lecture 3 Notes

These notes correspond to Sections 2.5-2.7 in the text.

Orthogonality

A set of vectors {x1 , x2 , . . . , x𝑘 } in ℝ𝑛 is said to be orthogonal if x𝑇𝑖 x𝑗 = 0 whenever 𝑖 ∕= 𝑗. If, in

addition, x𝑇𝑖 x𝑖 = 1 for 𝑖 = 1, 2, . . . , 𝑘, then we say that the set is orthonormal. In this case, we can

also write x𝑇𝑖 x𝑗 = 𝛿𝑖𝑗 , where

{

1 𝑖=𝑗

𝛿𝑖𝑗 =

0 𝑖 ∕= 𝑗

is called the Kronecker delta.

[

]𝑇

[

]𝑇

are orthogonal, as x𝑇 y = 1(4) −

and y = 4 2 1

Example The vectors x = 1 −3 2

√

3(2) + 2(1) = 4 − 6 + 2 = 0. Using the fact that ∥x∥2 = x𝑇 x, we can normalize these vectors to

obtain orthonormal vectors

⎡

⎤

⎡ ⎤

1

4

x

1

y

1

x̃ =

= √ ⎣ −3 ⎦ , ỹ =

= √ ⎣ 2 ⎦,

∥x∥2

∥y∥2

14

21 1

2

which satisfy x̃𝑇 x̃ = ỹ𝑇 ỹ = 1, x̃𝑇 ỹ = 0. □

The concept of orthogonality extends to subspaces. If 𝑆1 , 𝑆2 , . . . , 𝑆𝑘 is a set of subspaces of ℝ𝑚 ,

we say that these subspaces are mutually orthogonal if x𝑇 y = 0 whenever x ∈ 𝑆𝑖 , y ∈ 𝑆𝑗 , for 𝑖 ∕= 𝑗.

Furthermore, we define the orthogonal complement of a subspace 𝑆 ⊆ ℝ𝑛 to be the set 𝑆 ⊥ defined

by

𝑆 ⊥ = {y ∈ ℝ𝑚 ∣ x𝑇 y = 0, x ∈ 𝑆}.

Example Let 𝑆 be the subspace of ℝ3 defined by 𝑆 = span{v1 , v2 }, where

⎡ ⎤

⎡

⎤

1

1

v1 = ⎣ 1 ⎦ , v2 = ⎣ −1 ⎦ .

1

1

{[

]𝑇 }

1 0 −1

Then, 𝑆 has the orthogonal complement 𝑆 ⊥ = span

.

It can be verified directly, by computing inner products, that the single basis vector of 𝑆 ⊥ ,

[

]𝑇

v3 = 1 0 −1 , is orthogonal to either basis vector of 𝑆, v1 or v2 . It follows that any

1

multiple of this vector, which describes any vector in the span of v3 , is orthogonal to any linear

combination of these basis vectors, which accounts for any vector in 𝑆, due to the linearity of the

inner product:

(𝑐3 v3 )𝑇 (𝑐1 v1 + 𝑐2 v2 ) = 𝑐3 𝑐1 v3𝑇 v1 + 𝑐3 𝑐2 v3𝑇 v2 = 𝑐3 𝑐3 (0) + 𝑐3 𝑐2 (0) = 0.

Therefore, any vector in 𝑆 ⊥ is in fact orthogonal to any vector in 𝑆. □

It is helpful to note that given a basis {v1 , v2 } for any two-dimensional subspace 𝑆 ⊆ ℝ3 , a basis

{v3 } for 𝑆 ⊥ can be obtained by computing the cross-product of the basis vectors of 𝑆,

⎡

⎤

(v1 )2 (v2 )3 − (v1 )3 (v2 )2

v3 = v1 × v2 = ⎣ (v1 )3 (v2 )1 − (v1 )1 (v2 )3 ⎦ .

(v1 )1 (v2 )2 − (v1 )2 (v2 )1

A similar process can be used to compute the orthogonal complement of any (𝑛 − 1)-dimensional

subspace of ℝ𝑛 . This orthogonal complement will always have dimension 1, because the sum of the

dimension of any subspace of a finite-dimensional vector space, and the dimension of its orthogonal

complement, is equal to the dimension of the vector space. That is, if dim(𝑉 ) = 𝑛, and 𝑆 ⊆ 𝑉 is a

subspace of 𝑉 , then

dim(𝑆) + dim(𝑆 ⊥ ) = 𝑛.

Let 𝑆 = range(𝐴) for an 𝑚 × 𝑛 matrix 𝐴. By the definition of the range of a matrix, if y ∈ 𝑆,

then y = 𝐴x for some vector x ∈ ℝ𝑛 . If z ∈ ℝ𝑚 is such that z𝑇 y = 0, then we have

z𝑇 𝐴x = z𝑇 (𝐴𝑇 )𝑇 x = (𝐴𝑇 z)𝑇 x = 0.

If this is true for all y ∈ 𝑆, that is, if z ∈ 𝑆 ⊥ , then we must have 𝐴𝑇 z = 0. Therefore, z ∈ null(𝐴𝑇 ),

and we conclude that 𝑆 ⊥ = null(𝐴𝑇 ). That is, the orthogonal complement of the range is the null

space of the transpose.

Example Let

⎡

⎤

1

1

𝐴 = ⎣ 2 −1 ⎦ .

3

2

If 𝑆 = range(𝐴) = span{a1 , a2 }, where a1 and a2 are the columns of 𝐴, then 𝑆 ⊥ = span{b}, where

[

]𝑇

b = a1 × a2 = 7 1 −3 . We then note that

𝐴𝑇 b =

[

1

2 3

1 −1 2

]

2

⎡

⎤

[ ]

7

⎣ 1 ⎦= 0 .

0

−3

That is, b is in the null space of 𝐴𝑇 . In fact, because of the relationships

rank(𝐴𝑇 ) + dim(null(𝐴𝑇 )) = 3,

rank(𝐴) = rank(𝐴𝑇 ) = 2,

we have dim(null(𝐴𝑇 )) = 1, so null(𝐴) = span{b}. □

A basis for a subspace 𝑆 ⊆ ℝ𝑚 is an orthonormal basis if the vectors in the basis form an

orthonormal set. For example, let 𝑄1 be an 𝑚 × 𝑟 matrix with orthonormal columns. Then these

columns form an orthonormal basis for range(𝑄1 ). If 𝑟 < 𝑚, then this basis can be extended to a

basis of all of ℝ𝑚 by obtaining an orthonormal basis of range(𝑄1 )⊥ = null(𝑄𝑇1 ).

⊥

If we define 𝑄2 to be a matrix whose

[ columns] form an orthonormal basis of range(𝑄1 )𝑇 , then

the columns of the 𝑚 × 𝑚 matrix 𝑄 = 𝑄1 𝑄2 are also orthonormal. It follows that 𝑄 𝑄 = 𝐼.

That is, 𝑄𝑇 = 𝑄−1 . Furthermore, because the inverse of a matrix is unique, 𝑄𝑄𝑇 = 𝐼 as well. We

say that 𝑄 is an orthogonal matrix.

One interesting property of orthogonal matrices is that they preserve certain norms. For example, if 𝑄 is an orthogonal 𝑛 × 𝑛 matrix, then ∥𝑄x∥22 = x𝑇 𝑄𝑇 𝑄x = x𝑇 x = ∥x∥22 . That is, orthogonal

matrices preserve ℓ2 -norms. Geometrically, multiplication by an 𝑛 × 𝑛 orthogonal matrix preserves

the length of a vector, and performs a rotation in 𝑛-dimensional space.

Example The matrix

⎤

cos 𝜃 0 sin 𝜃

0

1

0 ⎦

𝑄=⎣

− sin 𝜃 0 cos 𝜃

⎡

is an orthogonal matrix, for any angle 𝜃. For any vector x ∈ ℝ3 , the vector 𝑄x can be obtained by

rotating x clockwise by the angle 𝜃 in the 𝑥𝑧-plane, while 𝑄𝑇 = 𝑄−1 performs a counterclockwise

rotation by 𝜃 in the 𝑥𝑧-plane. □

In addition, orthogonal matrices preserve the matrix ℓ2 and Frobenius norms. That is, if 𝐴 is

an 𝑚 × 𝑛 matrix, and 𝑄 and 𝑍 are 𝑚 × 𝑚 and 𝑛 × 𝑛 orthogonal matrices, respectively, then

∥𝑄𝐴𝑍∥𝐹 = ∥𝐴∥𝐹 ,

∥𝑄𝐴𝑍∥2 = ∥𝐴∥2 .

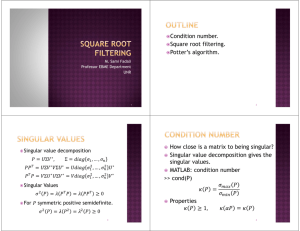

The Singular Value Decomposition

The matrix ℓ2 -norm can be used to obtain a highly useful decomposition of any 𝑚 × 𝑛 matrix 𝐴.

Let x be a unit ℓ2 -norm vector (that is, ∥x∥2 = 1 such that ∥𝐴x∥2 = ∥𝐴∥2 . If z = 𝐴x, and we

define y = z/∥z∥2 , then we have 𝐴x = 𝜎y, with 𝜎 = ∥𝐴∥2 , and both x and y are unit vectors.

3

𝑛 and ℝ𝑚 , respectively, to obtain

We can extend x and y,

to orthonormal [bases of ℝ

]

]

[ separately,

orthogonal matrices 𝑉1 = x 𝑉2 ∈ ℝ𝑛×𝑛 and 𝑈1 = y 𝑈2 ∈ ℝ𝑚×𝑚 . We then have

[ 𝑇 ]

]

[

y

𝑇

𝑈1 𝐴𝑉1 =

𝐴 x 𝑉2

𝑇

𝑈2

[ 𝑇

]

y 𝐴x y𝑇 𝐴𝑉2

=

𝑈2𝑇 𝐴x 𝑈2𝑇 𝐴𝑉2

[

]

𝜎y𝑇 y y𝑇 𝐴𝑉2

=

𝜎𝑈2𝑇 y 𝑈2𝑇 𝐴𝑉2

[

]

𝜎 w𝑇

=

0 𝐵

≡ 𝐴1 ,

where 𝐵 = 𝑈2𝑇 𝐴𝑉2 and w = 𝑉2𝑇 𝐴𝑇 y. Now, let

[

s=

𝜎

w

]

.

Then ∥s∥22 = 𝜎 2 + ∥w∥22 . Furthermore, the first component of 𝐴1 s is 𝜎 2 + ∥w∥22 .

It follows from the invariance of the matrix ℓ2 -norm under orthogonal transformations that

𝜎 2 = ∥𝐴∥22 = ∥𝐴1 ∥22 ≥

∥𝐴1 s∥22

(𝜎 2 + ∥w∥22 )2

≥

= 𝜎 2 + ∥w∥22 ,

2

∥s∥2

𝜎 2 + ∥w∥22

and therefore we must have w = 0. We then have

𝑈1𝑇 𝐴𝑉1

[

= 𝐴1 =

𝜎 0

0 𝐵

]

.

Continuing this process on 𝐵, and keeping in mind that ∥𝐵∥2 ≤ ∥𝐴∥2 , we obtain the decomposition

𝑈 𝑇 𝐴𝑉 = Σ

where

𝑈=

[

u1 ⋅ ⋅ ⋅ u𝑚

]

∈ ℝ𝑚×𝑚 ,

𝑉 =

[

v1 ⋅ ⋅ ⋅ v𝑛

]

∈ ℝ𝑛×𝑛

are both orthogonal matrices, and

Σ = diag(𝜎1 , . . . , 𝜎𝑝 ) ∈ ℝ𝑚×𝑛 ,

𝑝 = min{𝑚, 𝑛}

is a diagonal matrix, in which Σ𝑖𝑖 = 𝜎𝑖 for 𝑖 = 1, 2, . . . , 𝑝, and Σ𝑖𝑗 = 0 for 𝑖 ∕= 𝑗. Furthermore, we

have

∥𝐴∥2 = 𝜎1 ≥ 𝜎2 ≥ ⋅ ⋅ ⋅ ≥ 𝜎𝑝 ≥ 0.

4

This decomposition of 𝐴 is called the singular value decomposition, or SVD. It is more commonly

written as a factorization of 𝐴,

𝐴 = 𝑈 Σ𝑉 𝑇 .

The diagonal entries of Σ are the singular values of 𝐴. The columns of 𝑈 are the left singular

vectors, and the columns of 𝑉 are the right singluar vectors. It follows from the SVD itself that

the singular values and vectors satisfy the relations

𝐴𝑇 u𝑖 = 𝜎𝑖 v𝑖 ,

𝐴v𝑖 = 𝜎𝑖 u𝑖 ,

𝑖 = 1, 2, . . . , min{𝑚, 𝑛}.

For convenience, we denote the 𝑖th largest singular value of 𝐴 by 𝜎𝑖 (𝐴), and the largest and smallest

singular values are commonly denoted by 𝜎𝑚𝑎𝑥 (𝐴) and 𝜎𝑚𝑖𝑛 (𝐴), respectively.

The SVD conveys much useful information about the structure of a matrix, particularly with

regard to systems of linear equations involving the matrix. Let 𝑟 be the number of nonzero singular

values. Then 𝑟 is the rank of 𝐴, and

range(𝐴) = span{u1 , . . . , u𝑟 },

null(𝐴) = span{v𝑟+1 , . . . , v𝑛 }.

That is, the SVD yields orthonormal bases of the range and null space of 𝐴.

It follows that we can write

𝑟

∑

𝐴=

𝜎𝑖 u𝑖 v𝑖𝑇 .

𝑖=1

This is called the SVD expansion of 𝐴. If 𝑚 ≥ 𝑛, then this expansion yields the “economy-size”

SVD

𝐴 = 𝑈1 Σ 1 𝑉 𝑇 ,

where

𝑈1 =

[

u1 ⋅ ⋅ ⋅ u𝑛

]

∈ ℝ𝑚×𝑛 ,

Σ1 = diag(𝜎1 , . . . , 𝜎𝑛 ) ∈ ℝ𝑛×𝑛 .

Example The matrix

⎡

⎤

11 19 11

𝐴 = ⎣ 9 21 9 ⎦

10 20 10

has the SVD 𝐴 = 𝑈 Σ𝑉 𝑇 where

√

√ ⎤

⎡ √

1/√3 −1/√2

1/√6

𝑈 = ⎣ 1/√3

1/ 2

1/ 6 ⎦ ,

√

1/ 3

0 − 2/3

and

√

√

√ ⎤

1/ 6 −1/√3

1/ 2

√

⎦

𝑉 = ⎣ 2/3

1/√3

√

√0 ,

1/ 6 −1/ 3 −1/ 2

⎡

⎡

⎤

42.4264

0 0

0 2.4495 0 ⎦ .

𝑆=⎣

0

0 0

5

]

]

[

[

Let 𝑈 = u1 u2 u3 and 𝑉 = v1 v2 v3 be column partitions of 𝑈 and 𝑉 , respectively.

Because there are only two nonzero singular values, we have rank(𝐴) = 2, Furthermore, range(𝐴) =

span{u1 , u2 }, and null(𝐴) = span{v3 }. We also have

𝐴 = 42.4264u1 v1𝑇 + 2.4495u2 v2𝑇 .

□

The SVD is also closely related to the ℓ2 -norm and Frobenius norm. We have

∥𝐴∥2 = 𝜎1 ,

and

min

x∕=0

∥𝐴∥2𝐹 = 𝜎12 + 𝜎22 + ⋅ ⋅ ⋅ + 𝜎𝑟2 ,

∥𝐴x∥2

= 𝜎𝑝 ,

∥x∥2

𝑝 = min{𝑚, 𝑛}.

These relationships follow directly from the invariance of these norms under orthogonal transformations.

The SVD has many useful applications, but one of particular interest is that the truncated SVD

expansion

𝑘

∑

𝐴𝑘 =

𝜎𝑖 u𝑖 v𝑖𝑇 ,

𝑖=1

where 𝑘 < 𝑟 = rank(𝐴), is the best approximation of 𝐴 by a rank-𝑘 matrix. It follows that the

distance between 𝐴 and the set of matrices of rank 𝑘 is

min ∥𝐴 − 𝐵∥2 = ∥𝐴 − 𝐴𝑘 ∥2 =

rank(𝐵)=𝑘

𝑟

∑

𝜎𝑖 u𝑖 v𝑖𝑇 = 𝜎𝑘+1 ,

𝑖=𝑘+1

because the ℓ2 -norm of a matrix is its largest singular value, and 𝜎𝑘+1 is the largest singular value

of the matrix obtained by removing the first 𝑘 terms of the SVD expansion. Therefore, 𝜎𝑝 , where

𝑝 = min{𝑚, 𝑛}, is the distance between 𝐴 and the set of all rank-deficient matrices (which is zero

when 𝐴 is already rank-deficient). Because a matrix of full rank can have arbitarily small, but still

positive, singular values, it follows that the set of all full-rank matrices in ℝ𝑚×𝑛 is both open and

dense.

Example The best approximation of the matrix 𝐴 from the previous example, which has rank

two, by a matrix of rank one is obtained by taking only the first term of its SVD expansion,

⎡

⎤

10 20 10

𝐴1 = 42.4264u1 v1𝑇 = ⎣ 10 20 10 ⎦ .

10 20 10

6

The absolute error in this approximation is

∥𝐴 − 𝐴1 ∥2 = 𝜎2 ∥u2 v2𝑇 ∥2 = 𝜎2 = 2.4495,

while the relative error is

∥𝐴 − 𝐴1 ∥2

2.4495

=

= 0.0577.

∥𝐴∥2

42.4264

That is, the rank-one approximation of 𝐴 is off by a little less than six percent. □

Suppose that the entries of a matrix 𝐴 have been determined experimentally, for example by

measurements, and the error in the entries is determined to be bounded by some value 𝜖. Then, if

𝜎𝑘 > 𝜖 ≥ 𝜎𝑘+1 for some 𝑘 < min{𝑚, 𝑛}, then 𝜖 is at least as large as the distance between 𝐴 and

the set of all matrices of rank 𝑘. Therefore, due to the uncertainty of the measurement, 𝐴 could

very well be a matrix of rank 𝑘, but it cannot be of lower rank, because 𝜎𝑘 > 𝜖. We say that the

𝜖-rank of 𝐴, defined by

rank(𝐴, 𝜖) = min rank(𝐵),

∥𝐴−𝐵∥2 ≤𝜖

is equal to 𝑘. If 𝑘 < min{𝑚, 𝑛}, then we say that 𝐴 is numerically rank-deficient.

Projections

Given a vector z ∈ ℝ𝑛 , and a subspace 𝑆 ⊆ ℝ𝑛 , it is often useful to obtain the vector w ∈ 𝑆 that

best approximates z, in the sense that z − w ∈ 𝑆 ⊥ . In other words, the error in w is orthogonal to

the subspace in which w is sought. To that end, we define the orthogonal projection onto 𝑆 to be

an 𝑛 × 𝑛 matrix 𝑃 such that if w = 𝑃 z, then w ∈ 𝑆, and w𝑇 (z − w) = 0.

Substituting w = 𝑃 z into w𝑇 (z − w) = 0, and noting the commutativity of the inner product,

we see that 𝑃 must satisfy the relations

z𝑇 (𝑃 𝑇 − 𝑃 𝑇 𝑃 )z = 0,

z𝑇 (𝑃 − 𝑃 𝑇 𝑃 )z = 0,

for any vector z ∈ ℝ𝑛 . It follows from these relations that 𝑃 must have the following properties:

range(𝑃 ) = 𝑆, and 𝑃 𝑇 𝑃 = 𝑃 = 𝑃 𝑇 , which yields 𝑃 2 = 𝑃 . Using these properties, it can be shown

that the orthogonal projection 𝑃 onto a given subspace 𝑆 is unique. Furthermore, it can be shown

that 𝑃 = 𝑉 𝑉 𝑇 , where 𝑉 is a matrix whose columns are an orthonormal basis for 𝑆.

Projections and the SVD

Let 𝐴 ∈ ℝ𝑚×𝑛 have the SVD 𝐴 = 𝑈 Σ𝑉 𝑇 , where

[

]

[

]

˜𝑟 , 𝑉 = 𝑉𝑟 𝑉˜𝑟 ,

𝑈 = 𝑈𝑟 𝑈

7

where 𝑟 = rank(𝐴) and

𝑈𝑟 =

[

u1 ⋅ ⋅ ⋅ u𝑟

]

,

𝑉𝑟 =

[

v1 ⋅ ⋅ ⋅ v𝑟

]

.

Then, we obtain the following projections related to the SVD:

∙ 𝑈𝑟 𝑈𝑟𝑇 is the projection onto the range of 𝐴

∙ 𝑉˜𝑟 𝑉˜𝑟𝑇 is the projection onto the null space of 𝐴

˜𝑟 𝑈

˜𝑟𝑇 is the projection onto the orthogonal complement of the range of 𝐴, which is the null

∙ 𝑈

space of 𝐴𝑇

∙ 𝑉𝑟 𝑉𝑟𝑇 is the projection onto the orthogonal complement of the null space of 𝐴, which is the

range of 𝐴𝑇 .

These projections can also be determined by noting that the SVD of 𝐴𝑇 is 𝐴𝑇 = 𝑉 Σ𝑈 𝑇 .

Example For the matrix

⎡

⎤

11 19 11

𝐴 = ⎣ 9 21 9 ⎦ ,

10 20 10

that has rank two, the orthogonal projection onto range(𝐴) is

]

[

[

] u𝑇1

= u1 u𝑇1 + u2 u𝑇2 ,

𝑈2 𝑈2𝑇 = u1 u2

u𝑇2

[

]

where 𝑈 = u1 u2 u3 is the previously defined column partition of the matrix 𝑈 from the

SVD of 𝐴. □

Sensitivity of Linear Systems

Let 𝐴 ∈ ℝ𝑚×𝑛 be nonsingular, and let 𝐴 = 𝑈 Σ𝑉 𝑇 be the SVD of 𝐴. Then the solution x of the

system of linear equations 𝐴x = b can be expressed as

x = 𝐴−1 b = 𝑉 Σ−1 𝑈 𝑇 b =

𝑛

∑

u𝑇 b

𝑖

𝑖=1

𝜎𝑖

v𝑖 .

This formula for x suggests that if 𝜎𝑛 is small relative to the other singular values, then the system

𝐴x = b can be sensitive to perturbations in 𝐴 or b. This makes sense, considering that 𝜎𝑛 is the

distance between 𝐴 and the set of all singular 𝑛 × 𝑛 matrices.

8

In an attempt to measure the sensitivity of this system, we consider the parameterized system

(𝐴 + 𝜖𝐸)x(𝜖) = b + 𝜖e,

where E ∈ ℝ𝑛×𝑛 and e ∈ ℝ𝑛 are perturbations of 𝐴 and b, respectively. Taking the Taylor

expansion of x(𝜖) around 𝜖 = 0 yields

x(𝜖) = x + 𝜖x′ (0) + 𝑂(𝜖2 ),

where

x′ (𝜖) = (𝐴 + 𝜖𝐸)−1 (e − 𝐸x),

which yields x′ (0) = 𝐴−1 (e − 𝐸x).

Using norms to measure the relative error in x, we obtain

∥x(𝜖) − x∥

∥𝐴−1 (e − 𝐸x)∥

= ∣𝜖∣

+ 𝑂(𝜖2 ) ≤ ∣𝜖∣∥𝐴−1 ∥

∥x∥

∥x∥

{

}

∥e∥

+ ∥𝐸∥ + 𝑂(𝜖2 ).

∥x∥

Multiplying and dividing by ∥𝐴∥, and using 𝐴x = b to obtain ∥b∥ ≤ ∥𝐴∥∥x∥, yields

(

)

∥e∥ ∥𝐸∥

∥x(𝜖) − x∥

= 𝜅(𝐴)∣𝜖∣

+

,

∥x∥

∥b∥ ∥𝐴∥

where

𝜅(𝐴) = ∥𝐴∥∥𝐴−1 ∥

is called the condition number of 𝐴. We conclude that the relative errors in 𝐴 and b can be

amplified by 𝜅(𝐴) in the solution. Therefore, if 𝜅(𝐴) is large, the problem 𝐴x = b can be quite

sensitive to perturbations in 𝐴 and b. In this case, we say that 𝐴 is ill-conditioned; otherwise, we

say that 𝐴 is well-conditioned.

The definition of the condition number depends on the matrix norm that is used. Using the

ℓ2 -norm, we obtain

𝜎1 (𝐴)

𝜅2 (𝐴) = ∥𝐴∥2 ∥𝐴−1 ∥2 =

.

𝜎𝑛 (𝐴)

It can readily be seen from this formula that 𝜅2 (𝐴) is large if 𝜎𝑛 is small relative to 𝜎1 . We

also note that because the singular values are the lengths of the semi-axes of the hyperellipsoid

{𝐴x ∣ ∥x∥2 = 1}, the condition number in the ℓ2 -norm measures the elongation of this hyperellipsoid.

Example The matrices

[

𝐴1 =

0.7674 0.0477

0.6247 0.1691

]

[

,

𝐴2 =

0.7581 0.1113

0.6358 0.0933

]

do not appear to be very different from one another, but 𝜅2 (𝐴1 ) = 10 while 𝜅2 (𝐴2 ) = 1010 . That

is, 𝐴1 is well-conditioned while 𝐴2 is ill-conditioned.

9

To illustrate the ill-conditioned nature of 𝐴2 , we solve the systems 𝐴2 x1 = b1 and 𝐴2 x2 = b2

for the unknown vectors x1 and x2 , where

[

]

[

]

0.7662

0.7019

b1 =

, b2 =

.

0.6426

0.7192

These vectors differ from one another by roughly 10%, but the solutions

[

]

[

]

0.9894

−1.4522 × 108

x1 =

, x2 =

0.1452

9.8940 × 108

differ by several orders of magnitude, because of the sensitivity of 𝐴2 to perturbations. □

Just as the largest singular value of 𝐴 is the ℓ2 -norm of 𝐴, and the smallest singular value is

the distance from 𝐴 to the nearest singular matrix in the ℓ2 -norm, we have, for any ℓ𝑝 -norm,

∥Δ𝐴∥𝑝

1

=

min

.

𝜅𝑝 (𝐴) 𝐴+Δ𝐴 singular ∥𝐴∥𝑝

That is, in any ℓ𝑝 -norm, 𝜅𝑝 (𝐴) measures the relative distance in that norm from 𝐴 to the set of

singular matrices.

Because det(𝐴) = 0 if and only if 𝐴 is singular, it would appear that the determinant could be

used to measure the distance from 𝐴 to the nearest singular matrix. However, this is generally not

the case. It is possible for a matrix to have a relatively large determinant, but be very close to a

singular matrix, or for a matrix to have a relatively small determinant, but not be nearly singular.

In other words, there is very little correlation between det(𝐴) and the condition number of 𝐴.

Example Let

⎡

⎢

⎢

⎢

⎢

⎢

⎢

⎢

𝐴=⎢

⎢

⎢

⎢

⎢

⎢

⎢

⎣

1 −1 −1 −1 −1 −1 −1 −1 −1 −1

0

1 −1 −1 −1 −1 −1 −1 −1 −1

0

0

1 −1 −1 −1 −1 −1 −1 −1

0

0

0

1 −1 −1 −1 −1 −1 −1

0

0

0

0

1 −1 −1 −1 −1 −1

0

0

0

0

0

1 −1 −1 −1 −1

0

0

0

0

0

0

1 −1 −1 −1

0

0

0

0

0

0

0

1 −1 −1

0

0

0

0

0

0

0

0

1 −1

0

0

0

0

0

0

0

0

0

1

⎤

⎥

⎥

⎥

⎥

⎥

⎥

⎥

⎥.

⎥

⎥

⎥

⎥

⎥

⎥

⎦

Then det(𝐴) = 1, but 𝜅2 (𝐴) ≈ 1, 918, and 𝜎10 ≈ 0.0029. That is, 𝐴 is quite close to a singular

𝑇 ,

matrix, even though det(𝐴) is not near zero. For example, the nearby matrix 𝐴˜ = 𝐴 − 𝜎10 u10 v10

10

which has entries

⎡

⎢

⎢

⎢

⎢

⎢

⎢

⎢

˜

𝐴≈⎢

⎢

⎢

⎢

⎢

⎢

⎢

⎣

1

−0

−0

−0

−0.0001

−0.0001

−0.0003

−0.0005

−0.0011

−0.0022

−1

1

−0

−0

−0

−0.0001

−0.0001

−0.0003

−0.0005

−0.0011

−1

−1

−1

−1

−1

−1

−1

−1

1

−1

−1

−1

−0

1

−1

−1

−0

−0

1

−1

−0

−0

−0

1

−0.0001

−0

−0

−0

−0.0001 −0.0001

−0

−0

−0.0003 −0.0001 −0.0001

−0

−0.0005 −0.0003 −0.0001 −0.0001

is singular. □

11

−1

−1

−1

−1

−1

−1

1

−0

−0

−0

−1

−1

−1

−1

−1

−1

−1

1

−0

−0

−1

−1

−1

−1

−1

−1

−1

−1

1

−0

−1

−1

−1

−1

−1

−1

−1

−1

−1

1

⎤

⎥

⎥

⎥

⎥

⎥

⎥

⎥

⎥,

⎥

⎥

⎥

⎥

⎥

⎥

⎦