A Technical Note Accompanying the Excel Spreadsheet “creating_scatterplot_with_desired_r.xls”

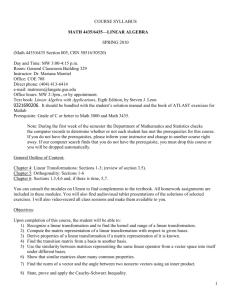

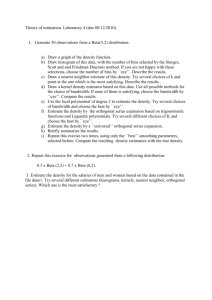

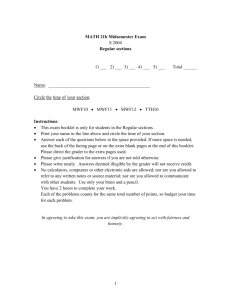

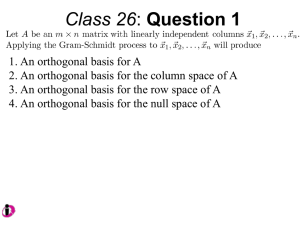

Hungwen Chang, Department of Mathematics, Laney College

May 7, 2011

Revision history:

[v.1.01, April 1, 2012] In the paragraph following the first displayed formula, an erroneous statement on cosine, not used elsewhere, was removed from one sentence.

[v.1.02, April 1, 2012] A typo was fixed.

This note describes the strategy used by the spreadsheet “creating_scatterplot_with_desired_r.xls” (starting with version 2.0 of March 2011) to create a bivariate data set with prescribed values for $\bar{x}$, $\bar{y}$, $s_x$, $s_y$, and $r$. The strategy relies on a formulation of linear regression in terms of linear algebra, which we first review below.

Given a bivariate data set $(x_1, y_1), \ldots, (x_n, y_n)$, the regression line $y_p = b + ax$ is the least squares line, i.e. the line that minimizes the sum of squares of the residuals. Denote by $\mathbf{1}$ the vector $\langle 1, 1, \ldots, 1 \rangle \in \mathbb{R}^n$, by $X$ the vector $\langle x_1, x_2, \ldots, x_n \rangle \in \mathbb{R}^n$, and by $Y$ the vector $\langle y_1, y_2, \ldots, y_n \rangle \in \mathbb{R}^n$. Assume that the $x_i$'s are not all identical, so that $\mathbf{1}$ and $X$ are linearly independent. Endow $\mathbb{R}^n$ with the usual Euclidean inner product. Then finding the least squares line $y_p = b + ax$ is the same as finding $b$ and $a$ to minimize $\|Y - (b\mathbf{1} + aX)\|$. This $b\mathbf{1} + aX$ is therefore the orthogonal projection of $Y$ onto the 2-dimensional linear subspace of $\mathbb{R}^n$ spanned by $\mathbf{1}$ and $X$.

For any $V = \langle v_1, v_2, \ldots, v_n \rangle \in \mathbb{R}^n$, let $\bar{V}$ stand for the mean of the components, i.e. $\bar{V} = (v_1 + v_2 + \cdots + v_n)/n$. Since $V \cdot \mathbf{1} / \|\mathbf{1}\|^2 = \bar{V}$, the orthogonal projection of $V$ onto the linear span of $\mathbf{1}$ is $\bar{V}\mathbf{1}$. Shifting all components of $V$ together to get $V - \bar{V}\mathbf{1}$, in order to force the mean to 0, is therefore nothing other than extracting the part of $V$ orthogonal to $\mathbf{1} \in \mathbb{R}^n$. The 2-dimensional linear span of $\mathbf{1}$ and $X$ has, as an orthogonal basis, $\mathbf{1}$ and $X - \bar{X}\mathbf{1}$. So the orthogonal projection of $Y$ onto this 2-dimensional linear subspace is

$$\bar{Y}\mathbf{1} + \frac{(X - \bar{X}\mathbf{1}) \cdot (Y - \bar{Y}\mathbf{1})}{\|X - \bar{X}\mathbf{1}\|^2}\,(X - \bar{X}\mathbf{1}).$$

Comparing this with $b\mathbf{1} + aX$, we see that

$$a = \frac{(X - \bar{X}\mathbf{1}) \cdot (Y - \bar{Y}\mathbf{1})}{\|X - \bar{X}\mathbf{1}\|^2} \quad \text{and} \quad b = \bar{Y} - a\bar{X}.$$
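
The projection formulas for $a$ and $b$ can be tried out numerically. The following is a minimal Python sketch, not the spreadsheet's own formulas; the helper names `dot`, `center`, and `least_squares_line` and the sample data are assumptions made for illustration. It computes $a$ and $b$ from the inner products and then checks that the residual vector is orthogonal to both $\mathbf{1}$ and $X$, as an orthogonal projection requires.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))


def center(v):
    """Return V - mean(V)*1, i.e. the part of V orthogonal to the all-ones vector."""
    mean = sum(v) / len(v)
    return [vi - mean for vi in v]


def least_squares_line(xs, ys):
    """Return (a, b) for the line y_p = b + a*x using the projection formulas."""
    xc, yc = center(xs), center(ys)
    a = dot(xc, yc) / dot(xc, xc)          # slope: projection coefficient on X - Xbar*1
    b = sum(ys) / len(ys) - a * sum(xs) / len(xs)  # intercept: Ybar - a*Xbar
    return a, b


# Hypothetical sample data, chosen only for illustration.
xs = [1.0, 2.0, 4.0, 7.0]
ys = [2.0, 3.0, 3.5, 8.0]
a, b = least_squares_line(xs, ys)

# Residual vector Y - (b*1 + a*X); it should be orthogonal to 1 and to X.
residuals = [y - (b + a * x) for x, y in zip(xs, ys)]
print("residuals . 1 =", sum(residuals))
print("residuals . X =", dot(residuals, xs))
```

Both printed inner products should be zero up to floating-point rounding.
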
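Moreover, $b\mathbf{1} + aX$ can be written as $\bar{Y}\mathbf{1} + a(X - \bar{X}\mathbf{1})$. The vector $Y$ is thus decomposed as

$$Y = \bar{Y}\mathbf{1} + a(X - \bar{X}\mathbf{1}) + \tilde{Y},$$

with the three vectors on the right-hand side mutually orthogonal. The first two pieces, $\bar{Y}\mathbf{1} + a(X - \bar{X}\mathbf{1})$, encode the regression line, whereas $\tilde{Y}$ encodes the $n$ residuals.

The correlation coefficient $r$, written as

$$r = \frac{(X - \bar{X}\mathbf{1}) \cdot (Y - \bar{Y}\mathbf{1})}{\|X - \bar{X}\mathbf{1}\|\,\|Y - \bar{Y}\mathbf{1}\|},$$

is nothing other than the cosine of the angle between $Y - \bar{Y}\mathbf{1}$ and $X - \bar{X}\mathbf{1}$. Since $Y - \bar{Y}\mathbf{1}$ is orthogonally decomposed as $Y - \bar{Y}\mathbf{1} = a(X - \bar{X}\mathbf{1}) + \tilde{Y}$, it follows that the square of the cosine of this angle is

$$r^2 = \frac{\|a(X - \bar{X}\mathbf{1})\|^2}{\|a(X - \bar{X}\mathbf{1}) + \tilde{Y}\|^2}, \quad \text{and that} \quad 1 - r^2 = \frac{\|\tilde{Y}\|^2}{\|a(X - \bar{X}\mathbf{1}) + \tilde{Y}\|^2}.$$

So, using $s_y = \|Y - \bar{Y}\mathbf{1}\| / \sqrt{n-1}$,

$$\|\tilde{Y}\| = \sqrt{1 - r^2}\,\|a(X - \bar{X}\mathbf{1}) + \tilde{Y}\| = \sqrt{1 - r^2}\,\|Y - \bar{Y}\mathbf{1}\| = s_y \sqrt{1 - r^2}\,\sqrt{n-1}.$$

Also observe that

$$a = \frac{(X - \bar{X}\mathbf{1}) \cdot (Y - \bar{Y}\mathbf{1})}{\|X - \bar{X}\mathbf{1}\|^2} = r\,\frac{\|Y - \bar{Y}\mathbf{1}\|}{\|X - \bar{X}\mathbf{1}\|} = \frac{r s_y}{s_x}.$$

This geometric picture makes it easier to see how to generate a data set with prescribed values for $\bar{x}$, $\bar{y}$, $s_x$, $s_y$, and $r$. First, calculate $a = r s_y / s_x$. Then choose $X = \langle x_1, x_2, \ldots, x_n \rangle$ with the desired mean $\bar{X} = \bar{x}$ and the desired standard deviation $s_x$. Then let

$$Y = \bar{y}\mathbf{1} + a(X - \bar{x}\mathbf{1}) + \tilde{Y} = \bar{y}\mathbf{1} + (r s_y / s_x)(X - \bar{x}\mathbf{1}) + \tilde{Y},$$

where $\tilde{Y}$ can be any vector orthogonal to $\mathbf{1}$ and $X - \bar{x}\mathbf{1}$, with length $\|\tilde{Y}\| = s_y \sqrt{1 - r^2}\,\sqrt{n-1}$. The $n$ components of $Y$ will be our $y_1, y_2, \ldots, y_n$.

Suppose, in addition, we would like the data set $(x_1, y_1), \ldots, (x_n, y_n)$ to mimic the result of repeating $n$ times a random procedure in which two random variables $x$ and $y$ jointly follow the bivariate normal distribution with parameters $\mu_x$, $\mu_y$, $\sigma_x$, $\sigma_y$, and $\rho$. Then the variable $x$ follows $N(\mu_x, \sigma_x^2)$, and the variable $y$, when conditioned on $x = x_0$, follows $N(\mu_y + \beta(x_0 - \mu_x),\ (1 - \rho^2)\sigma_y^2)$, where $\beta = \rho\sigma_y / \sigma_x$. To create $X = \langle x_1, x_2, \ldots, x_n \rangle$, we first generate $n$ numbers one by one using a random generator modeled on $N(0,1)$. Denote the result by $Z = \langle z_1, z_2, \ldots, z_n \rangle$. Then extract the part of $Z$ orthogonal to $\mathbf{1}$, arriving at $Z_0 = Z - \bar{Z}\mathbf{1}$, with $Z_0 \perp \mathbf{1}$.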
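
The identities derived earlier for $r$, $a$, and the residual vector $\tilde{Y}$ (the cosine formula, $a = r s_y / s_x$, and $\|\tilde{Y}\| = s_y\sqrt{1-r^2}\sqrt{n-1}$) can be checked on any small data set. The sketch below is an illustration in Python under assumed helper names (`dot`, `center`, `norm`) and made-up data; it is not taken from the spreadsheet.

```python
import math
import statistics


def dot(u, v):
    """Euclidean inner product."""
    return sum(ui * vi for ui, vi in zip(u, v))


def center(v):
    """Return V - mean(V)*1, the part of V orthogonal to the all-ones vector."""
    mean = sum(v) / len(v)
    return [vi - mean for vi in v]


def norm(v):
    """Euclidean length."""
    return math.sqrt(dot(v, v))


# Hypothetical sample data, chosen only for illustration.
xs = [1.0, 2.0, 4.0, 7.0]
ys = [2.0, 3.0, 3.5, 8.0]
n = len(xs)

xc, yc = center(xs), center(ys)
r = dot(xc, yc) / (norm(xc) * norm(yc))   # cosine of the angle between xc and yc
a = dot(xc, yc) / dot(xc, xc)             # regression slope
y_tilde = [yi - a * xi for xi, yi in zip(xc, yc)]  # residual vector

s_x = statistics.stdev(xs)  # usual sample standard deviation (divisor n - 1)
s_y = statistics.stdev(ys)

checks = {
    "a = r*s_y/s_x": math.isclose(a, r * s_y / s_x),
    "|Y~| = s_y*sqrt(1-r^2)*sqrt(n-1)": math.isclose(
        norm(y_tilde), s_y * math.sqrt(1 - r * r) * math.sqrt(n - 1)
    ),
    "Y~ orthogonal to X - Xbar*1": abs(dot(y_tilde, xc)) < 1e-9,
    "Y~ orthogonal to 1": abs(sum(y_tilde)) < 1e-9,
}
for name, ok in checks.items():
    print(name, ok)
```
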
Scaling $Z_0$ properly (to ensure the desired standard deviation $s_x$) and then adding $\bar{x}\mathbf{1}$ (to ensure the desired mean $\bar{x}$) gives

$$X = \bar{x}\mathbf{1} + \frac{s_x\sqrt{n-1}}{\|Z_0\|}\,Z_0.$$

Conditioned on $x_1, x_2, \ldots, x_n$, the vector $\langle y_1, y_2, \ldots, y_n \rangle$ follows

$$\mu_y\mathbf{1} + \beta(X - \mu_x\mathbf{1}) + \sqrt{1 - \rho^2}\,\sigma_y\,\langle z'_1, z'_2, \ldots, z'_n \rangle,$$

where $\beta = \rho\sigma_y/\sigma_x$ and $z'_1, z'_2, \ldots, z'_n$ are independent, each being $N(0,1)$. We therefore generate another $n$ numbers one by one using a random generator modeled on $N(0,1)$. This gives $Z' = \langle z'_1, z'_2, \ldots, z'_n \rangle$. Then $Z'$ can be decomposed into three mutually orthogonal pieces as

$$Z' = \bar{Z'}\mathbf{1} + \frac{Z_0 \cdot (Z' - \bar{Z'}\mathbf{1})}{\|Z_0\|^2}\,Z_0 + \tilde{Z}',$$

with the first piece parallel to $\mathbf{1}$ and the second parallel to $Z_0$ (and thus to $X - \bar{x}\mathbf{1}$). Now we keep the direction of each of the three pieces while adjusting their lengths to the desired values. This leads to

$$Y = \bar{y}\mathbf{1} + (r s_y / s_x)(X - \bar{x}\mathbf{1}) + \tilde{Y}, \quad \text{where} \quad \tilde{Y} = \frac{s_y\sqrt{1 - r^2}\,\sqrt{n-1}}{\|\tilde{Z}'\|}\,\tilde{Z}',$$

which has length $\|\tilde{Y}\| = s_y\sqrt{1 - r^2}\,\sqrt{n-1}$. The $n$ components of $Y$ are taken to be $y_1, y_2, \ldots, y_n$. We now have the desired $(x_1, y_1), \ldots, (x_n, y_n)$.

The implementation of the strategy laid out here appears mainly in the sheet with tab “Norm Gen” in version 2.0 or newer of the Excel spreadsheet “creating_scatterplot_with_desired_r.xls”, which is part of the collection of spreadsheets for use in an introductory course in Statistics, a collection originally created by Bill Lepowsky of the Math Department of Laney College in 2010.
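
For readers who want to experiment outside Excel, the generation strategy described in this note can be sketched in a few lines of Python. This is only an illustration of the steps above and does not reproduce the spreadsheet's formulas; the function name `generate_xy`, its parameters, and the example values are assumptions. It needs $n \ge 3$ so that a nonzero vector orthogonal to both $\mathbf{1}$ and $Z_0$ exists.

```python
import math
import random
import statistics


def dot(u, v):
    """Euclidean inner product."""
    return sum(ui * vi for ui, vi in zip(u, v))


def center(v):
    """Return V - mean(V)*1, the part of V orthogonal to the all-ones vector."""
    mean = sum(v) / len(v)
    return [vi - mean for vi in v]


def norm(v):
    """Euclidean length."""
    return math.sqrt(dot(v, v))


def generate_xy(n, x_bar, y_bar, s_x, s_y, r, rng=random):
    """Return (xs, ys) with the prescribed sample means, SDs, and correlation r."""
    if n < 3 or s_x <= 0 or s_y < 0 or abs(r) > 1:
        raise ValueError("need n >= 3, s_x > 0, s_y >= 0 and |r| <= 1")
    root = math.sqrt(n - 1)

    # Z0: a standard-normal sample with its component along 1 removed.
    z0 = center([rng.gauss(0.0, 1.0) for _ in range(n)])
    # X - x_bar*1: Z0 rescaled to length s_x*sqrt(n-1).
    xc = [s_x * root / norm(z0) * z for z in z0]

    # Second sample: remove its components along 1 and along Z0.
    w = center([rng.gauss(0.0, 1.0) for _ in range(n)])
    coef = dot(z0, w) / dot(z0, z0)
    w_tilde = [wi - coef * zi for wi, zi in zip(w, z0)]
    # Residual vector: same direction, length s_y*sqrt(1-r^2)*sqrt(n-1).
    scale = s_y * math.sqrt(1.0 - r * r) * root / norm(w_tilde)

    a = r * s_y / s_x  # regression slope
    xs = [x_bar + xi for xi in xc]
    ys = [y_bar + a * xi + scale * wi for xi, wi in zip(xc, w_tilde)]
    return xs, ys


# Example with hypothetical target values and a fixed seed for repeatability.
xs, ys = generate_xy(20, x_bar=50.0, y_bar=100.0, s_x=10.0, s_y=15.0, r=0.6,
                     rng=random.Random(0))
xc, yc = center(xs), center(ys)
print("means:", statistics.mean(xs), statistics.mean(ys))
print("SDs:", statistics.stdev(xs), statistics.stdev(ys))
print("r:", dot(xc, yc) / (norm(xc) * norm(yc)))
```

The printed statistics should match the requested values up to floating-point rounding, whatever seed is used, because only the directions of the random vectors are kept while their lengths are set exactly.
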