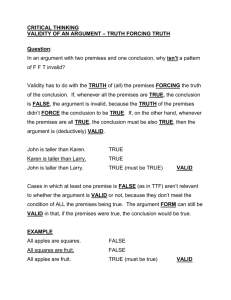

Chapter 1: An Introduction to Philosophy of Science
