Ch. 9 Scalar Quantization Uniform Quantizers


Ch. 9 Scalar Quantization

Uniform Quantizers


Characteristics of Uniform Quantizers

Constraining to UQ makes the design easier but performance usually suffers…

For a uniform quantizer the following two constraints are imposed:

• DBs (decision boundaries) are equally spaced (Step Size = Δ)

• RLs (reconstruction levels) are equally spaced & centered between the DBs

For a given Rate… Only One Choice to make in Design: the step size Δ

[Figure: input-output characteristics of a Mid-Riser SQ and a Mid-Step SQ. The input axis x is marked at multiples of 0.5Δ from –3.5Δ to 3.5Δ; the Output axis is marked from –3.0 to 3.0. Note: this Fig in the book has an error.]

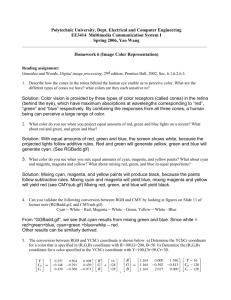

PDF-Optimized Uniform Quantizers

The idea here is: Assuming that you know the PDF of the samples to be quantized… design the quantizer’s step so that it is optimal for that PDF.

For Uniform PDF

[Figure: f_X(x) is constant at 1/(2Xmax) for –Xmax ≤ x ≤ Xmax]

Want to uniformly quantize an RV X ~ U(–Xmax, Xmax)

Assume that we desire M RLs for R = ⎡log2(M)⎤

⇒ M equally-sized intervals having Δ = 2Xmax/M

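Distortion is:

$$\sigma_q^2 = \int_{-X_{max}}^{X_{max}} [x - Q(x)]^2 f_X(x)\,dx = 2\sum_{i=1}^{M/2} \int_{(i-1)\Delta}^{i\Delta} \left(x - (i - \tfrac{1}{2})\Delta\right)^2 \left[\frac{1}{2X_{max}}\right] dx$$

Here the (i – 1/2)Δ are the RLs, and the integration limits (i – 1)Δ and iΔ are the DLs.

Now by exploiting the structure… Each of these integrals is identical!

[Figure: f_X(x) = 1/(2Xmax) on –Xmax ≤ x ≤ Xmax, with the x axis divided at …, –2Δ, –Δ, Δ, 2Δ, …]

Using Δ = 2Xmax/M:

$$\sigma_q^2 = 2\,\frac{M}{2} \int_{-\Delta/2}^{\Delta/2} q^2 \left[\frac{1}{2X_{max}}\right] dq = \frac{1}{\Delta}\int_{-\Delta/2}^{\Delta/2} q^2\,dq$$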

So… the result is:

$$\sigma_q^2 = \frac{1}{\Delta}\int_{-\Delta/2}^{\Delta/2} q^2\,dq = \frac{\Delta^2}{12}$$

To get SQR, we need the variance (power) of the signal… Since the signal is uniformly dist. we know from Prob. Theory that

$$\sigma_X^2 = \frac{(2X_{max})^2}{12} = \frac{\Delta^2 M^2}{12}$$

$$SQR(dB) = 10\log_{10}\left[\frac{\sigma_X^2}{\sigma_q^2}\right] = 10\log_{10}\left[M^2\right]$$

For n-bit Quantizer: M = 2^n

$$SQR(dB) = 20\log_{10}\left[2^n\right] = 6.02n \text{ dB}$$

6 dB per bit
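The 6 dB per bit rule can be checked empirically. The following is a minimal sketch and not part of the slides: the names `uniform_quantize` and `measured_sqr_db`, the sample count, and the seed are illustrative assumptions. It quantizes samples of X ~ U(–Xmax, Xmax) with M = 2^n equal cells and measures the SQR.

```python
import math
import random

def uniform_quantize(x, x_max, M):
    """Map x in [-x_max, x_max] to the midpoint (RL) of its cell; M cells of width delta."""
    delta = 2.0 * x_max / M
    i = min(int((x + x_max) / delta), M - 1)  # clamp so x == x_max stays in the last cell
    return -x_max + (i + 0.5) * delta

def measured_sqr_db(n_bits, x_max=1.0, n_samples=100_000, seed=0):
    """Monte Carlo estimate of SQR in dB for an n-bit UQ on a uniform source."""
    rng = random.Random(seed)
    M = 2 ** n_bits
    signal_power = 0.0
    noise_power = 0.0
    for _ in range(n_samples):
        x = rng.uniform(-x_max, x_max)
        q = x - uniform_quantize(x, x_max, M)  # quantization error
        signal_power += x * x
        noise_power += q * q
    return 10.0 * math.log10(signal_power / noise_power)

if __name__ == "__main__":
    for n in (2, 4, 6, 8):
        print(n, "bits: measured", round(measured_sqr_db(n), 2), "dB, rule", round(6.02 * n, 2), "dB")
```

The measured values should track 6.02n dB closely, since the error within each cell is uniform on (–Δ/2, Δ/2) for this source.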

Rate Distortion Curve for UQ of Uniform RV

$$\sigma_q^2 = \frac{\Delta^2}{12} = \frac{4X_{max}^2}{12M^2} = \frac{X_{max}^2}{3}\,2^{-2n}$$

[Figure: Distortion σ_q² vs. Rate n (bits/sample); the curve is exponential in n, with σ_x² marked on the Distortion axis]

PDF-Optimized Uniform Quantizers

For Non-Uniform PDF

• We are mostly interested in Non-Uniform PDFs whose domain is not bounded.

• For this case, the PDF must decay asymptotically to zero…

• So… we can’t cover the whole infinite domain with a finite number of Δ-intervals!

• We have to choose a Δ & M to achieve a desired MSQE

• Need to balance two types of errors:

– Granular (or Bounded) Error

– Overload (or Unbounded) Error

[Figure: a fixed version of Fig. 9.10, showing f_X(x) with M = 8. The points –4Δ and 4Δ are marked "Not a DB": the left-most DB is –∞ and the right-most DB is ∞.]

Typically, M & Δ are such that OL Prob. is less than the Granular Prob.

Goal: Given M (i.e., given the Rate n… typically M = 2^n) Find Δ to minimize MSQE (i.e., Distortion)

[Figure: M = 8. DBs: b_0 = –∞, b_M = +∞. RLs: ±Δ/2, ±3Δ/2, ±5Δ/2, ±7Δ/2]

Write distortion as a function of Δ and then minimize w.r.t. Δ:

$$\sigma_q^2 = \sum_{i=1}^{M} \int_{b_{i-1}}^{b_i} (x - y_i)^2 f_X(x)\,dx$$

By Assumed Symmetry on PDF:

$$\sigma_q^2 = \underbrace{2\sum_{i=1}^{M/2} \int_{(i-1)\Delta}^{i\Delta} \left(x - (i - \tfrac{1}{2})\Delta\right)^2 f_X(x)\,dx}_{\text{Granular}} + \underbrace{2\int_{\frac{M}{2}\Delta}^{\infty} \left(x - \left(\tfrac{M}{2} - \tfrac{1}{2}\right)\Delta\right)^2 f_X(x)\,dx}_{\text{Overload}}$$

Now to minimize… take derivative & set to zero:

$$\frac{d\sigma_q^2}{d\Delta} = 0$$

Gets complicated & messy to do analytically… solve numerically!

This balances the granular & overload effects to minimize distortion…

↑Δ ⇒ ↓Overload… but… ↑Granular

[Figure: σ_q² as the sum of two terms; at the minimum the slopes are negatives of each other]

$$\min\{f_1(x) + f_2(x)\} \;\Rightarrow\; \frac{df_1(x)}{dx} + \frac{df_2(x)}{dx} = 0 \;\Rightarrow\; \frac{df_1(x)}{dx} = -\frac{df_2(x)}{dx}$$
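One way to "solve numerically" is sketched below. This is an illustration under stated assumptions, not the method used in the slides or the book: the source is taken to be zero-mean, unit-variance Gaussian, the MSQE integral is approximated with a midpoint rule truncated at `x_max`, and the minimizing Δ is found with a golden-section search (which assumes the MSQE has a single minimum on the search interval). All function names are hypothetical.

```python
import math

def gaussian_pdf(x):
    """Zero-mean, unit-variance Gaussian PDF (the assumed designed-for PDF)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def uq_output(x, delta, M):
    """M-level uniform quantizer with RLs at odd multiples of delta/2; outer cells are unbounded."""
    i = min(int(abs(x) / delta), M // 2 - 1)  # cell index 0 .. M/2 - 1
    y = (i + 0.5) * delta
    return y if x >= 0 else -y

def msqe(delta, M, pdf=gaussian_pdf, x_max=8.0, n_grid=4000):
    """Midpoint-rule estimate of 2 * integral_0^x_max (x - Q(x))^2 f(x) dx (symmetric PDF)."""
    h = x_max / n_grid
    total = 0.0
    for k in range(n_grid):
        x = (k + 0.5) * h
        err = x - uq_output(x, delta, M)
        total += err * err * pdf(x)
    return 2.0 * total * h

def best_step(M, lo=0.05, hi=3.0, iters=40):
    """Golden-section search for the delta that minimizes msqe(delta, M)."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = msqe(c, M), msqe(d, M)
    for _ in range(iters):
        if fc < fd:          # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = msqe(c, M)
        else:                # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = msqe(d, M)
    return 0.5 * (a + b)

if __name__ == "__main__":
    for M in (2, 4, 8):
        step = best_step(M)
        print(M, "levels: delta =", round(step, 3), " MSQE =", round(msqe(step, M), 4))
```

For M = 8 the search should settle at a step of roughly 0.59 for this unit-variance Gaussian. Swapping in a heavier-tailed `pdf` (for example a Laplacian) is a quick way to see the step size grow.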

Distributions that have heavier tails tend to have larger step sizes…

How practical are these quantizers?

• Useful when source really does adhere to designed-for-pdf

• Otherwise have degradation due to mismatch

– Right PDF, Wrong Variance

– Wrong PDF Type



Adaptive Uniform Quantizers

We use adaptation to make UQ robust to Variance Mismatch

Forward-Adaptive Quantization

• Collect a Block of N Samples: x_{B,0}, x_{B,1}, …, x_{B,N-1}

• Estimate Signal Variance in the Bth Block (Error in Book):

$$\hat{\sigma}_x^2(B) = \frac{1}{N}\sum_{i=0}^{N-1} x_{B,i}^2$$

• Normalize the Samples in the Bth Block (divide by the estimated standard deviation so the block has unit variance):

$$\hat{x}_{B,i} = x_{B,i} / \sqrt{\hat{\sigma}_x^2(B)}$$

• Quantize Normalized Samples

– Always Use Same Quantizer: Designed for Unit Variance

• Quantize Estimated Variance

– Send as “Side Info”

Design Issues

• Block Size

– Short ⇒ captures changes, ↑SI

– Long ⇒ misses changes, ↓SI

• # of bits for SI… 8 bits is “typical”
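The forward-adaptive steps can be sketched as follows. This is a minimal illustration, not the slides' implementation: the function names are hypothetical, the fixed quantizer is an assumed 8-level uniform quantizer with step 0.586 (a value suited to a unit-variance Gaussian design), and the side info is passed along unquantized rather than coded with 8 bits.

```python
import math

def quantize_unit(x, delta=0.586, M=8):
    """Fixed M-level uniform quantizer, assumed designed for unit-variance input."""
    i = min(int(abs(x) / delta), M // 2 - 1)
    y = (i + 0.5) * delta
    return y if x >= 0 else -y

def forward_adaptive(samples, N=64):
    """Quantize block by block; returns (reconstruction, per-block side info)."""
    recon = []
    side_info = []
    for start in range(0, len(samples), N):
        block = samples[start:start + N]
        variance = sum(x * x for x in block) / len(block)  # zero-mean variance estimate
        sigma = math.sqrt(variance) or 1.0                 # guard against an all-zero block
        side_info.append(sigma)                            # would be quantized in practice
        # Normalize, quantize with the fixed quantizer, then rescale as the decoder would.
        recon.extend(sigma * quantize_unit(x / sigma) for x in block)
    return recon, side_info

if __name__ == "__main__":
    import random
    random.seed(1)
    signal = [random.gauss(0.0, s) for s in (0.05, 1.0, 20.0, 0.5) for _ in range(64)]
    adaptive, sigmas = forward_adaptive(signal, N=64)
    fixed = [quantize_unit(x) for x in signal]
    mse = lambda a, b: sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)
    print("fixed MSE:", mse(signal, fixed), " adaptive MSE:", mse(signal, adaptive))
```

Because every block is rescaled to unit variance before quantization, the same fixed quantizer serves blocks whose power differs by orders of magnitude, at the cost of one side-info value per block.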

Quantization of 16-bit Speech

[Figure: 16-Bit Original vs. 3-bit Fixed Quantizer]

[Figure: 16-Bit Original vs. 3-bit Forward-Adapt Quant.]

Another Application: Synthetic Aperture Radar (SAR) often uses “Block Adaptive Quantizer (BAQ)”

Alternative Method for Forward-Adaptive Quant.

“Block-Shifted Adaptive Quantization (BSAQ)”

• Given digital samples w/ large # of bits (B bits)

• In each block, shift all samples up by S bits

– S is chosen so that the MSB of largest sample in block is “filled”

• Truncate shifted samples to b < B bits

• Side Info = S (use ⎡log2(B)⎤ bits)

Example (each column is one sample, with the MSB in the top row):

Original 8 bit samples

000000000000
000000000000
000000100000
100100001001
111111111111
101011001110
010001001010
010110010010

Shifted Up by 2 Bits (Coded S = 010)

000000100000
100100001001
111111111111
101011001110
010001001010
010110010010

Truncated to 3 Bits

000000100000
100100001001
111111111111

To Decode: Shift down, fill zeros above & below

000000000000
000000000000
000000100000
100100001001
111111111111
000000000000
000000000000
000000000000
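The shift, truncate, and decode steps of BSAQ can be sketched with integer bit operations. This is a minimal illustration assuming unsigned B-bit samples; the function names `bsaq_encode` and `bsaq_decode` are hypothetical.

```python
def bsaq_encode(block, B=8, b=3):
    """Return (S, codes): the shift S and the top b bits of each shifted sample."""
    peak = max(block)
    # Shift so that the MSB of the largest sample is filled; no shift for an all-zero block.
    S = B - peak.bit_length() if peak else 0
    codes = [(x << S) >> (B - b) for x in block]  # shift up, then keep only the top b bits
    return S, codes

def bsaq_decode(S, codes, B=8, b=3):
    """Shift each code back down; the bits above and below it are filled with zeros."""
    return [(c << (B - b)) >> S for c in codes]

if __name__ == "__main__":
    block = [0b00100101, 0b00010000, 0b00000011]
    S, codes = bsaq_encode(block)
    print("S =", format(S, "03b"), "codes =", [format(c, "03b") for c in codes])
    print("decoded =", [format(y, "08b") for y in bsaq_decode(S, codes)])
```

For a nonzero block, S lies between 0 and B – 1, which is why ⎡log2(B)⎤ bits suffice for the side info.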

Backward-Adaptive Quantization

There are some downsides to Forward AQ:

• Have to send Side Information… reduces the compression ratio

• Block-Size Trade-Offs… Short ⇒ captures changes, ↑SI

• Coding Delay… can’t quantize any samples in block until see whole block

Backward-Adaptation Addresses These Drawbacks as Follows:

• Monitor which quantization cells the past samples fall in

– Increase Step Size if outer cells are too common

– Decrease Step Size if inner cells are too common

Because it is based on past quantized values,

• no side info needed for the decoder to synchronize to the encoder

– At least when no transmission errors occur

• no delay because current sample is quantized based on past samples

• So in principle block size can be set based on rate of signal’s change

How many past samples to use? How are the decisions made?

Jayant provided simple answers!!


Jayant Quantizer (Backward-Adaptive)

• Use single most recent output

• If it…

– is in outer levels, increase Δ … or… is in inner levels, decrease Δ

• Assign each interval a multiplier: M_k for the kth interval

• Update Δ according to:

$$\Delta_n = M_{l(n-1)}\,\Delta_{n-1}$$

where Δ_n is the New Δ, Δ_{n-1} is the Old Δ, and l(n–1) is the last sample’s level index

• Multipliers for outer levels are > 1 (Multipliers are symmetric)

• Multipliers for inner levels are < 1

• Specify Δmin & Δmax to avoid “going too far”
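The update rule can be sketched as an encoder/decoder pair that track Δ identically from the transmitted level indices alone. This is a minimal illustration: the function names, the 8-level symmetric layout, the initial step, the Δmin/Δmax values, and the multiplier values in the usage lines are all assumptions chosen for the example.

```python
def _update(delta, multiplier, d_min, d_max):
    """Apply delta_n = M * delta_(n-1), clipped to [d_min, d_max]."""
    return min(max(delta * multiplier, d_min), d_max)

def jayant_encode(samples, multipliers, delta0=0.5, d_min=1e-3, d_max=10.0):
    """multipliers[k] is for the kth magnitude cell (inner to outer), same for + and -."""
    half = len(multipliers)
    delta = delta0
    codes = []
    for x in samples:
        k = min(int(abs(x) / delta), half - 1)  # outermost cell is unbounded
        codes.append((x < 0, k))
        delta = _update(delta, multipliers[k], d_min, d_max)
    return codes

def jayant_decode(codes, multipliers, delta0=0.5, d_min=1e-3, d_max=10.0):
    """Rebuild outputs using only the codes; no side info is needed."""
    delta = delta0
    out = []
    for negative, k in codes:
        y = (k + 0.5) * delta
        out.append(-y if negative else y)
        delta = _update(delta, multipliers[k], d_min, d_max)
    return out

if __name__ == "__main__":
    mult = [0.8, 0.9, 1.0, 1.2]   # illustrative: inner cells < 1, outer cell > 1
    x = [5.0] * 12                # input far outside the initial range
    y = jayant_decode(jayant_encode(x, mult), mult)
    print([round(v, 3) for v in y])
```

With a constant input well beyond the initial range, the step expands by the outer multiplier on each sample until the input falls in a cell whose multiplier is 1, after which the output stops changing.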

Example of Jayant Multipliers


How Do We Pick the Jayant Multipliers?

IF we knew the PDF & designed for the correct Δ…

Then we want the multipliers to have no effect after, say, N samples:

$$M_3 M_6 M_4 M_1 \cdots M_4 M_5 \approx 1 \qquad (\star)$$

The left side is the sequence of multipliers for some observed N samples… Use “=” for design.

Let n_k = # of times M_k is used (with M = # of Levels)… Then (⋆) becomes

$$\prod_{k=0}^{M} M_k^{n_k} = 1$$

Now taking the Nth root gives…

$$\prod_{k=0}^{M} M_k^{n_k/N} = 1 \quad\Rightarrow\quad \prod_{k=0}^{M} M_k^{P_k} = 1$$

where we have used the frequency of occurrence view: P_k ≈ n_k/N

For a given “designed-for” PDF it is possible to find the correct Δ and then find the resulting P_k probabilities… Then this is a requirement on the multipliers M_k values

But… there are infinitely many solutions!!!!

One way to further restrict to get a unique solution is to require a specific form on the M_k:

$$M_k = \gamma^{l_k}$$

where γ is a Real # > 1 and the l_k are Integers (+/-). Then

$$\prod_{k=0}^{M} \gamma^{l_k P_k} = 1 \;\Rightarrow\; \gamma^{\left[\sum_{k=0}^{M} l_k P_k\right]} = 1 \;\Rightarrow\; \sum_{k=0}^{M} l_k P_k = 0 \qquad (\star\star)$$

So… if we’ve got an idea of the P_k… (⋆⋆) ⇒ values for the l_k

Then use creativity to select γ:

Large γ gives fast adaptation

Small γ gives slow adaptation

In general we want faster expansion than contraction:

• Samples in outer levels indicate possible overload (potential big overload error)… so we need to expand fast to eliminate this potential

• Samples in inner levels indicate possible underload (granular error is likely too big… but granular is not as dire as overload)… so we only need to contract slowly
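The constraint Σ l_k P_k = 0 and the form M_k = γ^(l_k) can be checked mechanically. The sketch below is illustrative only: the function name is hypothetical, and the probabilities and integer exponents in the usage lines are made-up values, listed per magnitude cell (inner to outer) under the assumption of symmetric multipliers.

```python
import math

def jayant_multipliers(gamma, exponents, probs, tol=1e-9):
    """Return M_k = gamma ** l_k after checking that sum(l_k * P_k) = 0."""
    if gamma <= 1.0:
        raise ValueError("gamma must be a real number > 1")
    balance = sum(l * p for l, p in zip(exponents, probs))
    if abs(balance) > tol:
        raise ValueError("exponents do not satisfy sum(l_k * P_k) = 0")
    return [gamma ** l for l in exponents]

if __name__ == "__main__":
    probs = [0.4, 0.3, 0.2, 0.1]   # hypothetical cell probabilities, inner to outer
    exponents = [-1, 0, 1, 2]      # -0.4 + 0 + 0.2 + 0.2 = 0
    mult = jayant_multipliers(1.1, exponents, probs)
    print([round(m, 3) for m in mult])
    # The product of M_k ** P_k should be 1, i.e. no net drift in the step size.
    print(math.prod(m ** p for m, p in zip(mult, probs)))
```

In this made-up example the outermost cell expands the step by γ² while the innermost contracts it by only 1/γ, which matches the preference for faster expansion than contraction.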

Robustness of Jayant Quantizer

[Figure: combining Figs. 9.11 & 9.18]

Optimal Non-Adapt is slightly better when perfectly matched

Jayant is significantly better when mismatched