From: AAAI Technical Report WS-93-02. Compilation copyright © 1993, AAAI (www.aaai.org). All rights reserved.

Toward Parallel and Distributed Learning by Meta-Learning

Philip K. Chan and Salvatore J. Stolfo

Department of Computer Science

Columbia University

New York, NY 10027

pkc@cs.columbia.edu and sal@cs.columbia.edu

Abstract

Much of the research in inductive learning concentrates on problems with relatively small amounts of data. With the coming age of very large network computing, it is likely that orders of magnitude more data in databases will be available for various learning problems of real world importance. Learning techniques are central to knowledge discovery and the approach proposed in this paper may substantially increase the amount of data a knowledge discovery system can handle effectively. Meta-learning is proposed as a general technique for integrating a number of distinct learning processes. This paper details several meta-learning strategies for integrating independently learned classifiers by the same learner in a parallel and distributed computing environment. Our strategies are particularly suited for massive amounts of data that main-memory-based learning algorithms cannot efficiently handle. The strategies are also independent of the particular learning algorithm used and the underlying parallel and distributed platform. Preliminary experiments using different data sets and algorithms demonstrate encouraging results: parallel learning by meta-learning can achieve comparable prediction accuracy in less space and time than purely serial learning.

Keywords: machine learning, inductive learning, meta-learning, parallel and distributed processing, and large databases.

AAAI-93 Knowledge Discovery in Databases Workshop 1993

1 Introduction

Much of the research in inductive learning concentrates on problems with relatively small amounts of data. With the coming age of very large network computing, it is likely that orders of magnitude more data in databases will be available for various learning problems of real world importance. The Grand Challenges of HPCC [20] are perhaps the best examples. Learning techniques are central to knowledge discovery [11] and the approach proposed here may substantially increase the amount of data a Knowledge Discovery system can handle effectively.

Quinlan [14] approached the problem of efficiently applying learning systems to data that are substantially larger than available main memory with a windowing technique. A learning algorithm is applied to a small subset of training data, called a window, and the learned concept is tested on the remaining training data. This is repeated on a new window of the same size, with some of the incorrectly classified data replacing some of the data in the old window, until all the data are correctly classified. Wirth and Catlett [21] show that the windowing technique does not significantly improve speed on reliable data. On the contrary, for noisy data, windowing considerably slows down the computation. Catlett [3] demonstrates that larger amounts of data improve accuracy, but he projects that ID3 [15] on modern machines will take several months to learn from a million records in the flight data set obtained from NASA. He proposes some improvements to the ID3 algorithm, particularly for handling attributes with real numbers, but the processing time is still prohibitive due to the algorithm's complexity. In addition, typical learning systems like ID3 are not designed to handle data that exceed the size of a monolithic memory on a single processor. Although most modern operating systems support virtual memory, the application of complex algorithms like ID3 to the large amount of disk-resident data we realistically assume can result in an intolerable amount of I/O or even thrashing of external disk storage. Clearly, parallel and distributed processing provides the best hope of dealing with such large amounts of data.

One approach to this problem is to parallelize the learning algorithms and apply the parallelized algorithm to the entire data set (presumably utilizing multiple I/O channels to handle the I/O bottleneck). Zhang et al.'s work [23] on parallelizing the backpropagation algorithm on a Connection Machine is one example. This approach requires optimizing the code for a particular algorithm on a specific architecture. Another approach, which we propose in this paper, is to run the serial code on a number of data subsets in parallel and combine the results in an intelligent fashion, thus reducing and limiting the amount of data inspected by any one learning process. This approach has the advantage of using the same serial code without the time-consuming process of parallelizing it. Since the framework for combining the results of learned concepts is independent of the learning algorithms, it can be used with different learners. In addition, this approach is independent of the computing platform used. However, this approach cannot guarantee the accuracy of the learned concepts to be the same as the serial version, since clearly a considerable amount of information is not accessible to each of the learning processes. Furthermore, because of the proliferation of networks of workstations and distributed databases, our approach of not relying on a specific parallel and distributed environment is particularly attractive.

In this paper we introduce the concept of meta-learning and its use in combining results from a set of parallel or distributed learning processes. Section 2 discusses meta-learning and how it facilitates parallel and distributed learning. Section 3 details our strategies for parallel learning by meta-learning. Section 4 discusses our preliminary experiments and Section 5 presents the results. Section 6 discusses our findings and work in progress. Section 7 concludes with a summary of this study.

2 Meta-learning

Meta-learning can be loosely defined as learning from information generated by a learner(s). It can also be viewed as the learning of meta-knowledge on the learned information. In our work we concentrate on learning from the output of inductive learning (or learning-from-examples) systems. Meta-learning, in this case, means learning from the classifiers produced by the learners and the predictions of these classifiers on training data. A classifier (or concept) is the output of an inductive learning system and a prediction (or classification) is the predicted class generated by a classifier when an instance is supplied. That is, we are interested in the output of the learners, not the learners themselves. Moreover, the training data presented to the learners initially are also available to the meta-learner if warranted.

Meta-learning is a general technique to coalesce the results of multiple learners. In this paper we concentrate on using meta-learning to combine parallel learning processes for higher speed and to maintain the prediction accuracy that would be achieved by the sequential version. This involves applying the same algorithm on different subsets of the data in parallel and the use of meta-learning to combine the partial results. We are not aware of any work in the literature on this approach beyond what was first reported in [18] in the domain of speech recognition. Work on using meta-learning for combining different learning systems is reported elsewhere [4, 6] and is further discussed at the end of this paper. In the next section we discuss how to use meta-learning for parallel learning using only one learning algorithm.

3 Parallel Learning

The objective here is to speed up the learning process by divide-and-conquer. The data set is partitioned into subsets and the same learning algorithm is applied on each of these subsets. Several issues arise.

First, how many subsets should be generated? This largely depends on the number of processors available and the size of the training set. The number of processors puts an upper bound on the number of subsets. Another consideration is the desired accuracy we wish to achieve. As we will see in our experiments, there may be a tradeoff between the number of subsets and the final accuracy. Moreover, the size of each subset cannot be too small because sufficient data must be available for each learning process to produce an effective classifier. We varied the number of subsets from 2 to 64 in our experiments.

Second, what is the distribution of training examples in the subsets? The subsets can be disjoint or overlap. The class distribution can be random, or follow some deterministic scheme. We experimented with disjoint equal-size subsets with random distributions of classes. Disjoint subsets imply that no data is shared between learning processes and thus no communication overhead is paid during training in a parallel execution environment.

Third, what is the strategy to coalesce the partial results generated by the learning processes? This is the more important question. The simplest approach is to allow the separate learners to vote and use the prediction with the most votes as the classification.

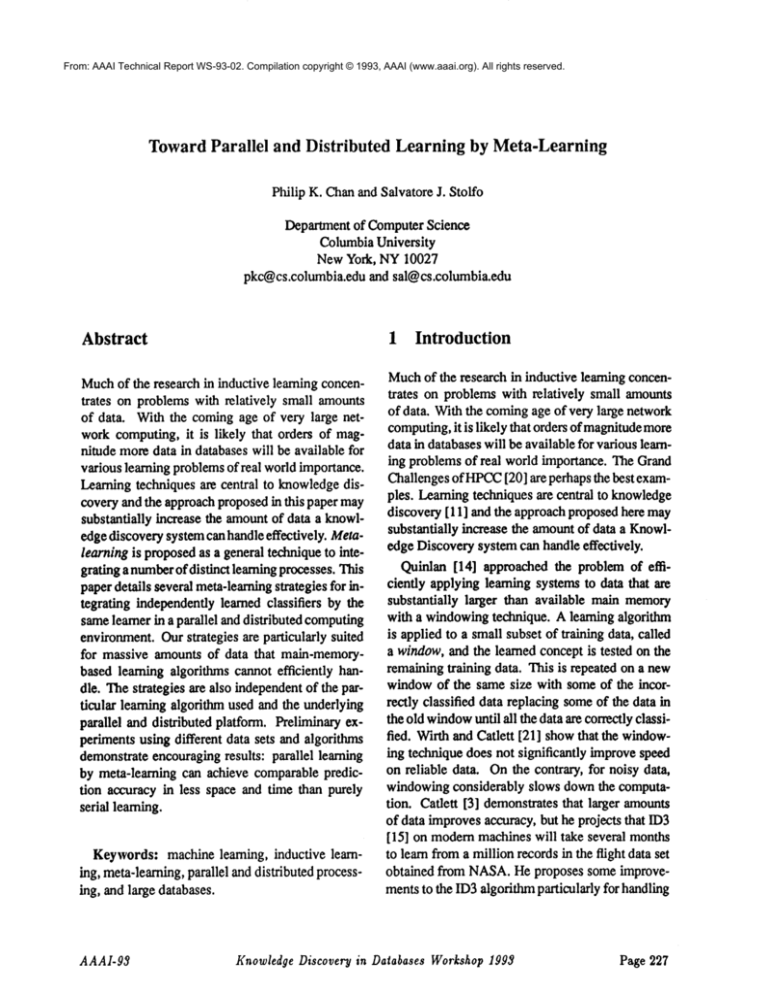

Our approach is meta-learning arbiters in a bottom-up binary-tree fashion. (The choice of a binary tree is discussed later.)

An arbiter, together with an arbitration rule, decides a final classification outcome based upon a number of candidate predictions. An arbiter is learned from the output of a pair of learning processes and, recursively, an arbiter is learned from the output of two arbiters. A binary tree of arbiters (called an arbiter tree) is generated with the initially learned classifiers at the leaves. (The arbiters themselves are essentially classifiers.) For $s$ subsets and $s$ classifiers, there are $\log_2(s)$ levels in the generated arbiter tree. The manner in which arbiters are computed and used is the subject of the following sections.
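As a rough illustration of the setup described in this section, the following Python sketch partitions a training set into disjoint, equal-size random subsets, implements the simple voting baseline, and computes the number of arbiter levels. The function names (`partition`, `vote`, `arbiter_tree_levels`) are hypothetical, and dropping leftover examples when the data do not divide evenly is an assumption of the sketch, not something stated in the paper.

```python
import math
import random
from collections import Counter


def partition(examples, s, seed=0):
    """Shuffle the examples and split them into s disjoint, equal-size subsets."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    t = len(shuffled) // s  # subset size; leftover examples are dropped (assumption)
    return [shuffled[i * t:(i + 1) * t] for i in range(s)]


def vote(predictions):
    """Return the prediction with the most votes (ties go to the earliest seen)."""
    return Counter(predictions).most_common(1)[0][0]


def arbiter_tree_levels(s):
    """Number of arbiter levels above s leaf classifiers (s a power of two)."""
    return int(math.log2(s))


subsets = partition(range(64), s=8)
print([len(subset) for subset in subsets])
print(vote(["a", "b", "b"]))
print(arbiter_tree_levels(8))
```

Because the subsets are disjoint, each one could be handed to a separate learning process with no data shared during training.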

3.1 Classifying using an arbiter tree

When an instance is classified by the arbiter tree, predictions flow from the leaves to the root. First, each of the leaf classifiers produces an initial prediction; i.e., a classification of the test instance. From a pair of predictions and the parent arbiter's prediction, a combined prediction is produced by some arbitration rule. These arbitration rules are dependent upon the manner in which the arbiter is learned, as detailed below. This process is applied at each level until a final prediction is produced at the root of the tree. Since at each level the leaf classifiers and arbiters are independent, predictions are generated in parallel. Before we discuss the arbitration process in detail, we first describe how arbiters are learned.
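The bottom-up flow of predictions can be sketched as a short recursive function. This is a minimal illustration, assuming classifiers and arbiters are plain Python callables and using the first arbitration rule given in Section 3.3 (majority vote with preference to the arbiter); `ArbiterNode`, `arbitrate`, and `classify` are hypothetical names, and the stub classifiers are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Union

Classifier = Callable[[object], str]  # maps an instance to a predicted class


@dataclass
class ArbiterNode:
    arbiter: Classifier
    left: "Tree"
    right: "Tree"


Tree = Union[ArbiterNode, Classifier]


def arbitrate(p1, p2, arbiter_prediction):
    """Majority vote of three predictions, with preference to the arbiter."""
    return arbiter_prediction if p1 != p2 else p1


def classify(tree, instance):
    """Propagate predictions from the leaves up to the root."""
    if not isinstance(tree, ArbiterNode):
        return tree(instance)  # leaf classifier
    # The two subtrees are independent, so they could be evaluated in parallel.
    p1 = classify(tree.left, instance)
    p2 = classify(tree.right, instance)
    return arbitrate(p1, p2, tree.arbiter(instance))


# Four stub leaf classifiers and three stub arbiters (all invented for the example).
c1, c2, c3, c4 = (lambda x: "a"), (lambda x: "b"), (lambda x: "b"), (lambda x: "b")
a12, a34, a14 = (lambda x: "b"), (lambda x: "a"), (lambda x: "a")
tree = ArbiterNode(a14, ArbiterNode(a12, c1, c2), ArbiterNode(a34, c3, c4))
print(classify(tree, instance=None))
```

In this example `a34` and `a14` are never decisive, because the predictions below them agree; an arbiter only matters when its two children disagree.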

3.2 Meta-learning an arbiter tree

We experimented with several schemes to meta-learn a binary tree of arbiters. The training examples for an arbiter are selected from the original training examples used in its two subtrees.

In all these schemes the leaf classifiers are first learned from randomly chosen disjoint data subsets and the classifiers are grouped in pairs. (The strategy for pairing classifiers is discussed later.) For each pair of classifiers, the union of the data subsets on which the classifiers are trained is generated. This union set is then classified by the two classifiers. A selection rule compares the predictions from the two classifiers and selects instances from the union set to form the training set for the arbiter of the pair of classifiers. Thus, the rule acts as a data filter to produce a training set with a particular distribution of the examples. The arbiter is learned from this set with the same learning algorithm. In essence, we seek to compute a training set of data for the arbiter that the classifiers together do a poor job of classifying. The process of forming the union of data subsets, classifying it using a pair of arbiter trees, comparing the predictions, forming a training set, and training the arbiter is recursively performed until the root arbiter is formed.
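The recursive construction can be sketched as a level-by-level loop. The sketch below is only illustrative: `majority_learner` is a toy stand-in for a real learner such as ID3, the selection rule keeps only the examples on which the two subtrees disagree, neighbouring subtrees are paired, the number of subsets is assumed to be a power of two, and no limit is placed on the arbiter training set size. All names are hypothetical.

```python
from collections import Counter


def majority_learner(examples):
    """Toy stand-in for a real learner: always predicts the most frequent class."""
    labels = [label for _, label in examples]
    default = Counter(labels).most_common(1)[0][0] if labels else None
    return lambda x: default


def select_different(examples, predict1, predict2):
    """Selection rule: keep the examples on which the two predictors disagree."""
    return [(x, y) for x, y in examples if predict1(x) != predict2(x)]


def build_arbiter_tree(subsets, learner):
    """Build an arbiter tree bottom-up; return (predict, training_data) for the root."""
    # Leaves: one classifier per disjoint subset (these could be trained in parallel).
    level = [(learner(subset), list(subset)) for subset in subsets]
    while len(level) > 1:
        next_level = []
        for (pred1, data1), (pred2, data2) in zip(level[0::2], level[1::2]):
            union = data1 + data2
            arbiter = learner(select_different(union, pred1, pred2))

            def predict(x, p1=pred1, p2=pred2, arb=arbiter):
                a, b = p1(x), p2(x)
                return arb(x) if a != b else a  # arbiter decides on disagreement

            next_level.append((predict, union))
        level = next_level
    return level[0]


subsets = [
    [(0, "a"), (1, "a")],
    [(2, "b"), (3, "b")],
    [(4, "b"), (5, "a")],
    [(6, "b"), (7, "b")],
]
root_predict, root_data = build_arbiter_tree(subsets, majority_learner)
print(root_predict(0), len(root_data))
```

Any learner with the same interface (a function from a list of labelled examples to a predictor) could replace `majority_learner`, which reflects the point that the framework does not depend on the learning algorithm.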

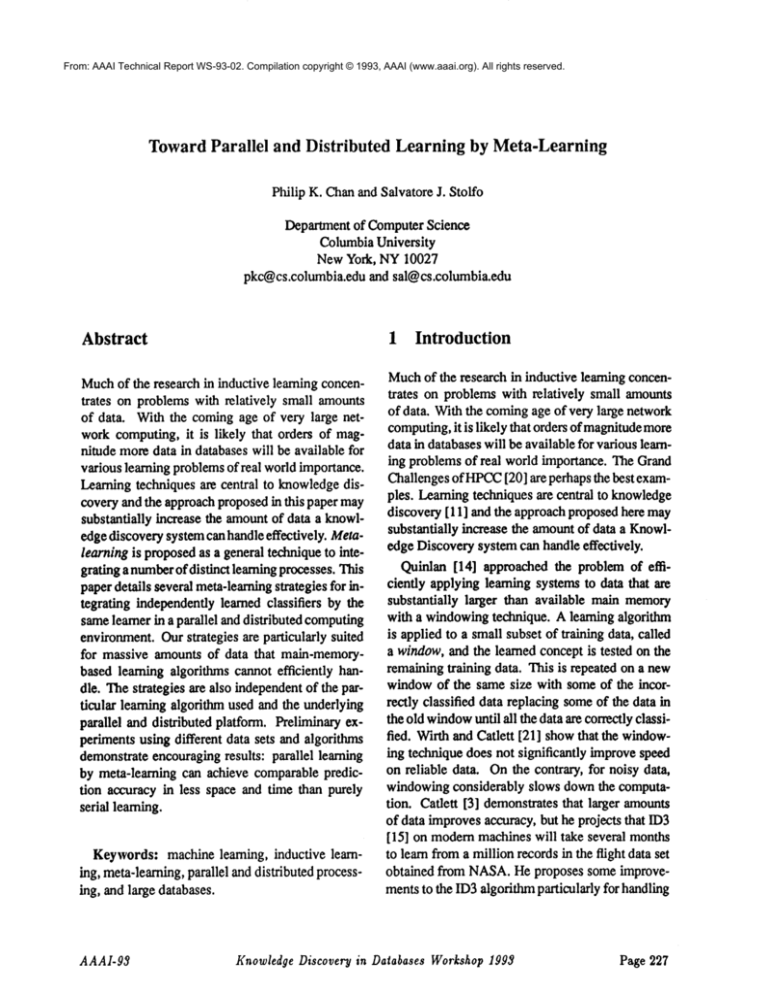

For example, suppose there are initially four training data subsets ($T_1$ - $T_4$). First, four classifiers ($C_1$ - $C_4$) are generated in parallel from $T_1$ - $T_4$. The union of subsets $T_1$ and $T_2$, $U_{12}$, is then classified by $C_1$ and $C_2$, which generates two sets of predictions ($P_1$ and $P_2$). Based on predictions $P_1$ and $P_2$, and the subset $U_{12}$, a selection rule generates a training set ($T_{12}$) for the arbiter. The arbiter ($A_{12}$) is then trained from the set $T_{12}$ using the same learning algorithm used to learn the initial classifiers. Similarly, arbiter $A_{34}$ is generated in the same fashion starting from $T_3$ and $T_4$, in parallel with $A_{12}$, and hence all the first-level arbiters are produced. Then $U_{14}$ is formed by the union of subsets $T_1$ through $T_4$ and is classified by the arbiter trees rooted with $A_{12}$ and $A_{34}$. Similarly, $T_{14}$ and $A_{14}$ (root arbiter) are generated and the arbiter tree is completed (see Figure 1).

Figure 1: Sample arbiter tree [levels shown: arbiters, classifiers, training data subsets]

3.3 Detailed strategies

We experimented with three strategies for the selection rule, which generates training examples for the arbiters. Based on the predictions from two arbiter subtrees $AT_1$ and $AT_2$ (or two leaf classifiers) rooted at two sibling arbiters, and a set of training examples, $E$, the strategy generates a set of arbiter training examples, $T$. $AT_i(x)$ denotes the prediction of training example $x$ by arbiter subtree $AT_i$. $class(x)$ denotes the given classification of example $x$. The three versions of this selection rule implemented and reported here are as follows:

1. Return instances with predictions that disagree, i.e., $T = T_d = \{x \in E \mid AT_1(x) \neq AT_2(x)\}$. Thus, the arbiter will be used to decide between conflicting classifications. Note, however, that it cannot distinguish classifications that agree but are incorrect. (For further reference, this scheme is denoted as meta-different.)

2. Return instances with predictions that disagree, $T_d$, as in the first case, but also predictions that agree but are incorrect; i.e., $T = T_d \cup T_i$, where $T_i = \{x \in E \mid (AT_1(x) = AT_2(x)) \wedge (class(x) \neq AT_1(x))\}$. Note that we lump together both cases of data that are incorrectly classified or are in disagreement. (Henceforth, denoted as meta-different-incorrect.)

3. Return a set of three training sets: $T_d$ and $T_i$, as defined above, and $T_c$ with examples that have the same correct predictions; i.e., $T = \{T_d, T_i, T_c\}$, where $T_c = \{x \in E \mid (AT_1(x) = AT_2(x)) \wedge (class(x) = AT_1(x))\}$. Here we attempt to separate the data into three cases and distinguish each case by learning a separate "subarbiter." $T_d$, $T_i$, and $T_c$ generate $A_d$, $A_i$, and $A_c$ respectively. The first arbiter is like the one computed in the first case to arbitrate disagreements. The second and third arbiters attempt to distinguish the cases when the two predictions agree but are either incorrect or correct. (Henceforth, denoted as meta-different-incorrect-correct.)

Sample training sets generated by the three schemes are depicted in Figure 2.

The learned arbiters are trained on the particular distinguished distributions of training data and are used in generating predictions. (Note that the arbiters are trained by the same learner used to train the leaf classifiers.) Recall, however, that at each arbiter we have two predictions, $p_1$ and $p_2$, from two lower level arbiter subtrees (or leaf classifiers) and the arbiter's, $A$, own prediction to arbitrate between. $A_i(x)$ denotes the prediction of training example $x$ by arbiter $A_i$. Two versions of the arbitration rule have been implemented. The first version corresponds to the first two selection strategies, while the second version corresponds to the third strategy. We denote by $instance$ the test instance to be classified.

1 & 2. Return the majority vote of $p_1$, $p_2$, and $A(instance)$, with preference given to the arbiter's choice; i.e., if $p_1 \neq p_2$ return $A(instance)$ else return $p_1$.

| Instance | $class(x)$ | $attrvec(x)$ | $AT_1(x)$ | $AT_2(x)$ |
|---|---|---|---|---|
| $x_1$ | a | attrvec1 | a | a |
| $x_2$ | b | attrvec2 | a | b |
| $x_3$ | c | attrvec3 | b | b |
| $x_4$ | b | attrvec4 | b | b |

Training set from the meta-different arbiter scheme

| Instance | Class | Attribute vector |
|---|---|---|
| 1 | b | attrvec2 |

Training set from the meta-different-incorrect arbiter scheme

| Instance | Class | Attribute vector |
|---|---|---|
| 1 | b | attrvec2 |
| 2 | c | attrvec3 |

Training set from the meta-different-incorrect-correct arbiter scheme

| Set | Instance | Class | Attribute vector |
|---|---|---|---|
| Different ($T_d$) | 1 | b | attrvec2 |
| Incorrect ($T_i$) | 1 | c | attrvec3 |
| Correct ($T_c$) | 1 | a | attrvec1 |
| Correct ($T_c$) | 2 | b | attrvec4 |

Figure 2: Sample training sets generated by the three arbiter strategies
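The selection rules of Section 3.3 amount to a three-way split of the examples, which the following Python sketch makes explicit using the sample data of Figure 2. The function names are hypothetical, examples are assumed to be `(attribute_vector, class)` pairs, and `arbitrate_three` follows our reading of the second arbitration rule (used with the third strategy), where the "correct" subarbiter is consulted first when the two predictions agree.

```python
def select_training_sets(examples, predict1, predict2):
    """Split examples into (T_d, T_i, T_c) by comparing two predictors."""
    t_d, t_i, t_c = [], [], []
    for x, label in examples:
        p1, p2 = predict1(x), predict2(x)
        if p1 != p2:
            t_d.append((x, label))  # predictions disagree
        elif p1 != label:
            t_i.append((x, label))  # predictions agree but are incorrect
        else:
            t_c.append((x, label))  # predictions agree and are correct
    return t_d, t_i, t_c


def meta_different(examples, predict1, predict2):
    return select_training_sets(examples, predict1, predict2)[0]


def meta_different_incorrect(examples, predict1, predict2):
    t_d, t_i, _ = select_training_sets(examples, predict1, predict2)
    return t_d + t_i


def arbitrate_three(p1, p2, a_d, a_i, a_c, instance):
    """Arbitration with three subarbiters (our reading of the second rule)."""
    if p1 != p2:
        return a_d(instance)
    if p1 == a_c(instance):
        return a_c(instance)
    return a_i(instance)


# Sample data from Figure 2: four examples and two predictors.
examples = [("attrvec1", "a"), ("attrvec2", "b"), ("attrvec3", "c"), ("attrvec4", "b")]
at1 = {"attrvec1": "a", "attrvec2": "a", "attrvec3": "b", "attrvec4": "b"}.get
at2 = {"attrvec1": "a", "attrvec2": "b", "attrvec3": "b", "attrvec4": "b"}.get

t_d, t_i, t_c = select_training_sets(examples, at1, at2)
print(t_d, t_i, t_c)
print(meta_different_incorrect(examples, at1, at2))
```

The third strategy, meta-different-incorrect-correct, simply returns all three sets from `select_training_sets`, one per subarbiter.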

3. if $p_1 \neq p_2$ return $A_d(instance)$ else if $p_1 = A_c(instance)$ return $A_c(instance)$ else return $A_i(instance)$, where $A = \{A_d, A_i, A_c\}$.

To achieve significant speed-up, the training set size for an arbiter, in these three schemes, is restricted to be no larger than the training set size for a classifier. That is, the amount of computation in training an arbiter is bounded by the time to train a leaf classifier. In a parallel computation model each level of arbiters can be learned as efficiently as the leaf classifiers. (In the third scheme the three subarbiters are produced in parallel.) With this restriction, substantial speed-up of the learning phase can be predicted. Assume the number of data subsets of the initial distribution is $s$. Let $t = N/s$ be the size of each data subset, where $N$ is the total number of training examples. Furthermore, assume the learning algorithm takes $O(n^2)$ time in the sequential case. In the parallel case, if we have $s$ processors, there are $\log(s)$ iterations in building the arbiter tree and each takes $O(t^2)$ time. The total time is therefore $O(t^2 \log(s))$, which implies a potential $O(s^2/\log(s))$ fold speed-up. Similarly, $O(n\sqrt{n})$ algorithms yield $O(s\sqrt{s}/\log(s))$ fold speed-up and $O(n)$ algorithms yield $O(s/\log(s))$. This rough analysis also assumes that each data subset fits in the main memory. In addition, the estimates do not take into account the burden of communication overhead and speed gained by multiple I/O channels in the parallel case (which will be addressed in future papers). Furthermore, we assume that the processors have relatively the same speed; load balancing and other issues in a heterogeneous environment are beyond the scope of this paper. We also note that once an arbiter tree is computed, its application in a parallel environment can be done efficiently according to the scheme proposed in [18].
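The speed-up estimates follow from dividing the serial cost $N^k$ by the parallel cost $(N/s)^k \log_2(s)$, which leaves $s^k / \log_2(s)$. The short Python sketch below evaluates this ratio for the three complexity classes discussed; `estimated_speedup` is a hypothetical helper, and the figures it prints are idealized (no communication or I/O costs, arbiter training sets capped at the subset size).

```python
import math


def estimated_speedup(s, exponent):
    """Idealized speed-up for a learner costing n**exponent on n examples.

    Serial cost is N**exponent. With s processors there are log2(s)
    iterations, each costing (N/s)**exponent, so N cancels out.
    """
    if s < 2:
        raise ValueError("need at least two subsets")
    return s ** exponent / math.log2(s)


for s in (2, 8, 64):
    print(
        s,
        round(estimated_speedup(s, 2), 2),    # O(n^2) learner
        round(estimated_speedup(s, 1.5), 2),  # O(n sqrt(n)) learner
        round(estimated_speedup(s, 1), 2),    # O(n) learner
    )
```

The estimate grows with the complexity exponent, which is why quadratic learners stand to gain the most from partitioning.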


4 Experiments

Four inductive learning algorithms were used in our experiments. ID3 [15] and CART [1] were obtained from NASA Ames Research Center in the IND package [2]. They are both decision tree learning algorithms. WPEBLS is the weighted version of PEBLS [8], which is a memory-based learning algorithm. BAYES is a simple Bayesian learner based on conditional probabilities, which is described in [7]. The latter two algorithms were reimplemented in C.

Two data sets, obtained from the UCI Machine Learning Database, were used in our studies. The secondary protein structure data set (SS) [13], courtesy of Qian and Sejnowski, contains sequences of amino acids and the secondary structures at the corresponding positions. There are three structures (three classes) and 20 amino acids (21 attributes because of a spacer) in the data. The amino acid sequences were split into shorter sequences of length 13 according to a windowing technique used in [13]. The sequences were then divided into a training and test set, which are disjoint, according to the distribution described in [13]. The training set has 18105 instances and the test set has 3520. The DNA splice junction data set (SJ) [19], courtesy of Towell, Shavlik and Noordewier, contains sequences of nucleotides and the type of splice junction, if any, (three classes) at the center of each sequence. Each sequence has 60 nucleotides with 8 different values each (four base ones plus four combinations). Some 2552 sequences were randomly picked as the training set and the rest, 638 sequences, became the test set. Although these are not very large data sets, they give us an idea of how our strategies perform.
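One plausible way to produce fixed-length windows from a labelled sequence is sketched below. It assumes, as an interpretation rather than a detail given here, that one window is centered on each position and that a spacer symbol pads the ends; the function name `windows`, the `-` spacer character, and the toy sequence are all hypothetical.

```python
def windows(sequence, labels, width=13, spacer="-"):
    """Yield (window, label) pairs, one window centered on each position."""
    half = width // 2
    padded = spacer * half + sequence + spacer * half
    for i, label in enumerate(labels):
        yield padded[i:i + width], label


# Toy sequence with one structure label per position (values are made up).
for window, label in windows("ABCDE", "hhecc", width=3):
    print(window, label)
```

With `width=13`, each instance would carry 13 attributes, each taking one of the amino acid symbols or the spacer.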

As mentioned above, we varied the number of subsets from 2 to 64 and the equal-size subsets are disjoint with random distribution of classes. The prediction accuracy on the test set is our primary comparison measure. The three meta-learning strategies for arbiters were run on the two data sets with the four learning algorithms. In addition, we applied a simple voting scheme on the leaf classifiers. The results are plotted in Figure 3. The accuracy for the serial case is plotted as "one subset."

If we relax the restriction on the size of the data set for training an arbiter, we might expect an improvement in accuracy, but a decline in execution speed. To test this hypothesis, a number of experiments were performed varying the maximum training set size for the arbiters. The different sizes are constant multiples of the size of a data subset. The results plotted in Figure 4 were obtained from using the meta-different-incorrect strategy on the SJ data.

5 Results

In Figure 3, for the three arbiter strategies, we observe that the accuracy stayed roughly the same for the SS data and slightly decreased for the SJ data when the number of subsets increased. With 64 subsets, most of the learners exhibited approximately a 10% drop in accuracy, with the exception of BAYES and one case in CART. The sudden drop in accuracy in those cases was likely due to the lack of information in the training data subsets. In the SJ data there are only about 40 training examples in each of the 64 subsets. If we look at the case with 32 subsets (about 80 examples each), all the learners sustained a drop in accuracy of at most 10%. As we observe, the big drops did not happen in the SS data. This shows that the data subset size cannot be too small. The voting scheme performed poorly for the SJ data, but was better than most schemes in the SS data. Basically, the accuracy was roughly the same as the percentage of the most frequent class in the data and, in the SS data case, simple voting performed relatively better. The behavior on the training set was similar to the test set and those results are not presented here due to space limitations. Furthermore, the three strategies had comparable performance and since the first strategy produces fewer examples in the arbiter training sets, it is the preferred strategy.

As we expected, by increasing the maximum arbiter training set size, higher accuracy can be obtained (see Figure 4, only the results on the SJ data are presented). When the maximum size was just two times the size of the original subsets, the largest accuracy drop was less than 5%, cutting in half the 10% drop that occurred when the maximum size was the same as the subset size, as mentioned above. Furthermore, when the maximum size was unlimited (i.e., at most the size of the entire training set), the accuracy was roughly the same as in the serial case.

Figure 3: Results on different strategies [plots of accuracy against number of subsets for ID3, CART, WPEBLS, and BAYES on the SS and SJ data; curves: different, different-incorrect, different-incorrect-correct, voting]

Figure 4: Results on different maximum arbiter training set sizes [plots against number of subsets, including ID3 (SJ), WPEBLS (SJ), and BAYES (SJ); curves: Max x1, Max x2, Max x3, Unlimited]

Since our experiments are still preliminary, the system is not implemented on a parallel/distributed platform and we do not have relevant timing results. However, according to the theoretical analysis, significant speed-up can be obtained when the maximum arbiter training set size is fixed to the subset size (see Section 3.3; ID3 and CART are $O(nl)$ [15], where $l$ is the number of leaves, and assuming $l$ is proportional to $\sqrt{n}$, the complexity becomes $O(n\sqrt{n})$; WPEBLS is $O(n^2)$; BAYES is $O(n)$).

When the maximum arbiter training set size is unlimited, we calculate the speed-ups based on the largest training set size at each level (which takes the longest to finish). In this case, since we assume we do not have a parallelized version of the learners available, the computing resource being utilized is reduced in half at each level. That is, at the root level, only one processor will be in use. The following discussion is based on theoretical calculations using recorded arbiter training set sizes obtained from the SJ data. Again, the effects of communication and multiple I/O channels on speed are not taken into account. For WPEBLS ($O(n^2)$), speed-up steadily increased as the number of subsets increased and leveled off after eight subsets to a factor of six (see Figure 5). (This case is closest to a linear speed-up.) For ID3 and CART ($O(n\sqrt{n})$), the speed-up slowly increased and leveled off after eight subsets to a factor of three. BAYES did not exhibit parallel speed-up due to its linear complexity. The leveling-off was mainly due to the bottleneck at the root level, which had the largest training set.

Next, we investigate the size and location of the largest training set in the entire arbiter tree. This gives us a notion of the memory requirement at any processing site and the location of the main bottleneck. Our empirical results indicate that the largest training set size was always around 30% of the total training set and always happened at the root level, independent of the number of subsets that was larger than two. (Note that when the number of subsets was two, the training set size was 50% of the original set at the leaves and became the largest in the tree.) This implies that the bottleneck was in processing around 30% of the entire training data set at the root level. This also implies that this parallel meta-learning strategy required only around 30% of the memory used by the serial case at any single processing site. Strategies for reducing the largest training set size are discussed in the next section. Recall that the accuracy level of this parallel strategy is roughly the same as the serial case. Thus, the parallel meta-learning strategy (with no limit on the arbiter training set size) can perform the same job as the serial case with less time and memory without parallelizing the learning algorithms.

Figure 5: Results on unlimited maximum arbiter training set size [plots against number of subsets for ID3 (SJ), CART (SJ), WPEBLS (SJ), and BAYES (SJ); curves: accuracy (x10%), largest training set size (x10%), speed-up]
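A calculation of this kind can be sketched as follows, assuming that the time spent at each level of the tree is determined by the largest training set at that level and that levels run one after another. The helper name `levelwise_speedup` and the sizes in the example are hypothetical; they are not the recorded sizes from the SJ experiments, and the cost of classifying the union sets is ignored.

```python
def levelwise_speedup(total_size, level_sizes, exponent):
    """Speed-up when each level waits for its largest training set.

    level_sizes[0] is the leaf subset size; the remaining entries are the
    largest arbiter training set sizes at each higher level, root last.
    The learner is assumed to cost n**exponent on n examples.
    """
    serial = total_size ** exponent
    parallel = sum(size ** exponent for size in level_sizes)
    return serial / parallel


# Made-up sizes: 1000 examples, 4 subsets, root arbiter set at 30% of the data.
sizes = [250, 200, 300]
for name, exponent in (("O(n^2)", 2), ("O(n sqrt n)", 1.5), ("O(n)", 1)):
    print(name, round(levelwise_speedup(1000, sizes, exponent), 2))
```

Under this model the root level dominates once its training set is the largest, which is consistent with the leveling-off described above.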

6 Discussion

The two data sets chosen in our experiments represent two different kinds of data sets: one is difficult to learn (SS with 50+% accuracy) and the other is easy to learn (SJ with 90+% accuracy). Our arbiter schemes maintained the low accuracy in the first case and mildly degraded the high accuracy in the second case with a restriction on the arbiter training set size. When the restriction on the size of the training set for an arbiter was lifted, the same level of accuracy could be achieved with less time and memory for the second case. Since we assert that this approach is scalable due to the independence of each learning process, this indicates the robustness of our strategies and hence their effectiveness on massive amounts of data.

Largest arbiter training set size. As mentioned in the previous section, we discovered that our scheme required at most 30% of the entire training set at any moment to maintain the same prediction accuracy as in the serial case for the SJ data. However, the percentage is dependent on several factors: the prediction accuracy of the algorithm on the given data set, the distribution of the data in the subsets, and the pairing of learned classifiers and arbiters at each level.

If the prediction accuracy is high, the arbiter training sets will be small because the predictions will usually be correct and few disagreements will occur. In our experiments, the distribution of data in the subsets was random and later we discovered that half of the final arbiter tree was trained on examples with only two of the three classes. That is, half of the tree was not aware of the third class appearing in the entire training data. We postulate that if the class distribution in the subsets is uniform, the leaf classifiers and arbiters in the arbiter tree will be more accurate and hence the training sets for the arbiter will be smaller. Indeed, results from our additional experiments on different class distributions (using the meta-different-incorrect strategy on the SJ data set), shown in Figure 6, indicate that uniform class distributions can achieve higher accuracy than random distributions.

Figure 6: Accuracy with different class distributions [plots against number of subsets, including CART (SJ), WPEBLS (SJ), and BAYES (SJ); curves: random dist., uniform dist.]
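One way to obtain subsets in which every class is represented in roughly the same proportion is to deal the examples of each class round-robin into the subsets. The sketch below illustrates this reading of a "uniform" class distribution; it is an assumption about what the term means here, and `uniform_class_partition` is a hypothetical name.

```python
from collections import Counter, defaultdict


def uniform_class_partition(examples, s):
    """Deal the examples of each class round-robin into s disjoint subsets."""
    by_class = defaultdict(list)
    for x, label in examples:
        by_class[label].append((x, label))
    subsets = [[] for _ in range(s)]
    position = 0  # carried across classes so subset sizes stay balanced
    for label in sorted(by_class):
        for example in by_class[label]:
            subsets[position % s].append(example)
            position += 1
    return subsets


# Toy data: six examples of class a, four of b, two of c.
examples = [(i, label) for i, label in enumerate("aaaaaabbbbcc")]
for subset in uniform_class_partition(examples, s=2):
    print(Counter(label for _, label in subset))
```

Each subset then sees every class, provided a class has at least as many examples as there are subsets.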

Lastly, the "neighboring" leaf classifiers and arbiters were paired in our experiments. One might use more sophisticated schemes for pairing to reduce the size of the arbiter training sets. One scheme is to pair classifiers and arbiters that agree most often with each other and produce smaller training sets (called min-size). Another scheme is to pair those that disagree the most and produce larger training sets (called max-size). At first glance the first scheme would seem to be more attractive. However, since disagreements are present, if they do not get resolved at the bottom of the tree, they will all surface near the root of the tree, which is also where the choice of pairings is limited or nonexistent (there are only two arbiters one level below the root). Hence, it might be more beneficial to resolve conflicts near the leaves, leaving fewer disagreements near the root.
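
The two pairing schemes can be sketched in Python as follows. The text does not say how pairs are actually selected, so the greedy matching below is an assumption, as are the names `disagreement_count` and `pair_classifiers` and the idea of comparing predictions on a common set of examples.

```python
from itertools import combinations


def disagreement_count(preds_a, preds_b):
    """Number of examples on which two prediction lists differ."""
    return sum(a != b for a, b in zip(preds_a, preds_b))


def pair_classifiers(predictions, scheme="max-size"):
    """Greedily pair classifiers by how often they disagree.

    predictions: dict mapping a classifier name to its predictions
                 on a common set of examples.
    scheme: "max-size" pairs those that disagree most first,
            "min-size" pairs those that disagree least first.
    The greedy matching is an assumption made for illustration.
    """
    if scheme not in ("max-size", "min-size"):
        raise ValueError("scheme must be 'max-size' or 'min-size'")
    scored = [
        (disagreement_count(predictions[a], predictions[b]), a, b)
        for a, b in combinations(sorted(predictions), 2)
    ]
    scored.sort(key=lambda item: item[0], reverse=(scheme == "max-size"))
    used, pairs = set(), []
    for _, a, b in scored:
        if a not in used and b not in used:
            pairs.append((a, b))
            used.update((a, b))
    return pairs
```

Computing all pairwise disagreement counts requires every site to share its predictions, which is one source of the communication overhead that such pairing introduces.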

These sophisticated pairing schemes might decrease the arbiter training set size, but they might also increase the communication overhead. When pairing is performed at every level, the overhead is incurred at every level. The schemes also create synchronization points at each level, instead of at each node when no special pairings are performed. A compromise strategy might be to perform pairing only at the leaf level. This indirectly affects the subsequent training sets at each level, but synchronization occurs only at each node and not at each level.

Some experiments were performed on the two pairing strategies applied only at the leaf level, and the results are shown in Figure 7. All these experiments were conducted on the SJ data set and used the meta-different-incorrect strategy for meta-learning arbiters. In addition to the initial random class distribution, a uniform class distribution and a second random class distribution (Random dist. 2) were used. The second random distribution does not have the property that half of the learned arbiter tree was not aware of one of the classes, as in the initial random distribution. Different pairing strategies were used on the uniform distribution and the second random distribution. As shown in Figure 7, the uniform distribution achieved smaller training sets than the two random distributions. The largest training set size was around 10% of the original data when the number of subsets was larger than eight, except for BAYES with 64 subsets (BAYES seemed unable to gather enough statistics on small subsets, which can also be observed from results presented earlier). (Note that when the number of subsets is eight or fewer, the training sets for the leaf classifiers are larger than 10% of the original data set and become the largest in the arbiter tree.) The two pairing strategies did not affect the sizes for the uniform distribution and are not shown in the figure. One possible explanation is that the uniform distribution produced the smallest training sets possible and the pairing strategies did not matter. However, the max-size pairing strategy did generally reduce the sizes for the second random distribution. The min-size pairing strategy, on the other hand, did not affect, or sometimes even increased, the sizes. In summary, a uniform class distribution tends to produce the smallest training sets, and the max-size pairing strategy can reduce the set sizes in random class distributions.

Figure 7: Arbiter training set size with different class distributions and pairing strategies. (Plots not reproduced; recoverable panels: ID3 (SJ), CART (SJ), BAYES (SJ); x-axis: number of subsets; curves: random dist., random dist. 2, random dist. 2 with max-size pairing, random dist. 2 with min-size pairing, uniform dist.)

In our discussion so far, we have assumed that the arbiter training set is unbounded, in order to determine how the pairing strategies may behave in the case where the training set size is bounded. The max-size strategy aims at resolving conflicts near the leaves, where the maximum possible arbiter training set size is small (the union of the two subtrees), leaving fewer conflicts near the root. If the training set size is bounded at each node, a random sample (with the bounded size) of a relatively small set near the root would be representative of the set chosen when the size is unbounded.
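
Bounding the training set at a node could be done along the following lines. This is only a sketch under the assumption that the bound is enforced by uniform sampling without replacement; the function name `bounded_arbiter_set` and its parameters are hypothetical.

```python
import random


def bounded_arbiter_set(candidates, bound, seed=0):
    """Return at most `bound` examples drawn at random, without
    replacement, from the candidate arbiter training set."""
    if bound < 0:
        raise ValueError("bound must be non-negative")
    candidates = list(candidates)
    if len(candidates) <= bound:
        # The unbounded set already fits, so nothing is discarded.
        return candidates
    return random.Random(seed).sample(candidates, bound)
```

The smaller the candidate set is relative to the bound, the less the sample differs from the unbounded set, which is the argument for resolving conflicts near the leaves.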

Order of the arbiter tree. A binary arbiter tree configuration was chosen for experimental purposes. There is no apparent reason why the arbiter tree cannot be n-ary. However, the different strategies proposed above are designed for n equal to two. When n is greater than two, a majority classification from the n predictions might be sufficient as an arbitration rule. The examples that do not receive a majority classification constitute the training set for an arbiter. It might be worthwhile to have a large value of n since the final tree will be shallow, and thus training may be faster. However, more disagreements and higher communication overhead will appear at each level in the tree due to the arbitration of many more predictions at a single arbitration site.
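
For the n-ary case, the majority rule and the resulting arbiter training set might look as follows in Python. The sketch assumes that "majority" means a strict majority (more than half of the n predictions); the names `majority_or_none` and `arbiter_training_set` are hypothetical.

```python
from collections import Counter


def majority_or_none(predictions):
    """Return the label predicted by more than half of the
    classifiers, or None when no label has a strict majority."""
    label, votes = Counter(predictions).most_common(1)[0]
    return label if votes > len(predictions) / 2 else None


def arbiter_training_set(examples, predictions_per_classifier):
    """Select the examples on which the n classifiers reach no majority.

    predictions_per_classifier: list of n prediction lists, each
    aligned with `examples`.
    """
    selected = []
    for i, example in enumerate(examples):
        votes = [preds[i] for preds in predictions_per_classifier]
        if majority_or_none(votes) is None:
            selected.append(example)
    return selected
```

As n grows, a strict majority becomes harder to reach when the classifiers spread over several labels, which is one way to see why more disagreements may reach the arbiter at each site.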

Alternate approach. An anonymous reviewer of another paper proposed an "optimal" formula based on Bayes' Theorem to combine the results of classifiers, namely, $P(x) = \sum_c P(c)\,P(x \mid c)$, where $x$ is a prediction and $c$ is a classifier. $P(c)$ is the prior, which represents how likely classifier $c$ is to be the true model, and $P(x \mid c)$ represents the probability that classifier $c$ guesses $x$. Therefore, $P(x)$ represents the combined probability that prediction $x$ is the correct answer. Unfortunately, to be optimal, Bayes' Theorem requires the priors $P(c)$ to be known, which they usually are not, and it also requires the summation to be over all possible classifiers, which is almost impossible to achieve. However, an approximate $P(x)$ can still be calculated by approximating the priors using various established techniques on the training data and using only the classifiers available. This technique is essentially a "weighted voting scheme" and can be used as an alternative to generating arbiters. This and the aforementioned strategies and issues are the subject matter of ongoing experimentation.
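
A weighted voting scheme of this kind could be sketched in Python as below. The text leaves the technique for approximating the priors open; normalising each classifier's accuracy on the training data is only one possible choice and is an assumption here, as are the function names.

```python
from collections import defaultdict


def estimate_priors(accuracies):
    """Approximate P(c) by normalising each classifier's accuracy
    (an assumption; other estimates of the priors are possible)."""
    total = sum(accuracies.values())
    if total <= 0:
        raise ValueError("at least one accuracy must be positive")
    return {name: acc / total for name, acc in accuracies.items()}


def combined_probabilities(priors, class_probs):
    """Compute P(x) = sum_c P(c) * P(x | c) over the available classifiers.

    class_probs: dict mapping a classifier name to {label: P(label | c)}.
    """
    combined = defaultdict(float)
    for name, prior in priors.items():
        for label, prob in class_probs[name].items():
            combined[label] += prior * prob
    return dict(combined)


def weighted_vote(priors, class_probs):
    """Return the label with the largest combined probability."""
    combined = combined_probabilities(priors, class_probs)
    return max(combined, key=combined.get)
```

A classifier that outputs a single label rather than a distribution can be treated as assigning probability 1 to that label, in which case the sum reduces to adding up the priors of the classifiers that voted for each label.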

Schapire's hypothesis boosting. Our ideas are related to using meta-learning to improve accuracy. The most notable work in this area is due to Schapire [16], which he refers to as hypothesis boosting. Based on an initial learned hypothesis for some concept derived from a random distribution of training data, Schapire's scheme iteratively generates two additional distributions of examples. The first newly derived distribution includes randomly chosen training examples that are equally likely to be correctly or incorrectly classified by the first learned classifier. A new classifier is formed from this distribution. Finally, a third distribution is formed from the training examples on which the first two classifiers disagree. A third classifier (in effect, an arbiter) is computed for this distribution. The predictions of the three learned classifiers are combined using a simple arbitration rule similar to one of the rules we presented above. Schapire rigorously proves that the overall accuracy is higher than the one achieved by simply applying the learning algorithm to the initial distribution under the PAC learning model. In fact, he shows that arbitrarily high accuracy can be achieved by recursively applying the same procedure. However, his approach is limited to the PAC model of learning, and furthermore, the manner in which the distributions are generated does not lend itself to parallelism. Since the second distribution depends on the first and the third depends on the second, the distributions are not available at the same time and their respective learning processes cannot be run concurrently. We use three distributions as well, but the first two are independent and are available simultaneously. The third distribution, for the arbiter, however, depends on the first two. Freund [9] has a similar approach, but with potentially many more distributions. Again, the distributions can only be generated iteratively.
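
The dependency structure of our three distributions can be made concrete with a small Python sketch. Everything in it is illustrative: `train_table` is a hypothetical toy learner standing in for any learning algorithm, `train_pair_and_arbiter` is a hypothetical name, and selecting only the disputed examples for the arbiter is a simplification of the selection strategies described earlier. Threads are used only to show which steps are independent; a real deployment would use separate processes or machines.

```python
from collections import Counter, defaultdict
from concurrent.futures import ThreadPoolExecutor


def train_table(examples):
    """Toy learner (hypothetical stand-in for ID3, CART, etc.):
    map each attribute value to its most frequent class."""
    counts = defaultdict(Counter)
    for value, label in examples:
        counts[value][label] += 1
    default = Counter(label for _, label in examples).most_common(1)[0][0]
    table = {value: c.most_common(1)[0][0] for value, c in counts.items()}
    return lambda value: table.get(value, default)


def train_pair_and_arbiter(subset_a, subset_b):
    """Train two classifiers concurrently, then an arbiter on the
    examples where their predictions differ."""
    # The first two training sets are independent, so both learning
    # processes can be submitted at the same time.
    with ThreadPoolExecutor(max_workers=2) as pool:
        future_a = pool.submit(train_table, subset_a)
        future_b = pool.submit(train_table, subset_b)
        clf_a, clf_b = future_a.result(), future_b.result()
    # The third training set depends on both classifiers and can only
    # be formed after they are available.
    union = subset_a + subset_b
    disputed = [(v, y) for v, y in union if clf_a(v) != clf_b(v)]
    arbiter = train_table(disputed) if disputed else None
    return clf_a, clf_b, arbiter, disputed
```

In the iterative schemes discussed above, by contrast, the second call to the learner could not be submitted until the first had returned, so the two `submit` calls would have to be sequential.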

Work in progress. In addition to applying meta-learning to combining results from a set of parallel or distributed learning processes, meta-learning can also be used to coalesce the results from multiple different inductive learning algorithms applied to the same set of data to improve accuracy [5]. The premise is that different algorithms have different representations and search heuristics, so different search spaces are being explored and hence potentially diverse results can be obtained from different algorithms. Mitchell [12] refers to this phenomenon as inductive bias. We postulate that by combining the different results intelligently through meta-learning, higher accuracy can be obtained. We call this approach multistrategy hypothesis boosting. Preliminary results reported in [4] are encouraging. Zhang et al.'s [24] and Wolpert's [22] work is in this direction. Silver et al.'s [17] and Holder's [10] work also employs multiple learners, but no learning is involved at the meta level. Since the ultimate goal of this work is to improve both the accuracy and efficiency of machine learning, we have been working on combining ideas in parallel learning, described in this paper, with those in multistrategy hypothesis boosting. We call this approach multistrategy parallel learning. Preliminary results reported in [6] are encouraging. To our knowledge, not much work in this direction has been attempted by others.

7 Concluding Remarks

Several meta-learning schemes for parallel learning are presented in this paper. In particular, schemes for building arbiter trees are detailed. Preliminary empirical results from bounded arbiter training sets indicate that the presented strategies are viable in speeding up learning algorithms with small degradation in prediction accuracy. When the arbiter training sets are unbounded, the strategies can preserve prediction accuracy with less training time and required memory than the serial version.

The schemes presented here are a step toward the multistrategy parallel learning approach, and the preliminary results obtained are encouraging. More experiments are being performed to ensure that the results we have achieved to date are indeed statistically significant, and to study how meta-learning scales with much larger data sets. We intend to further explore the diversity and possible "symbiotic" effects of multiple learners to improve our meta-learning schemes in a parallel environment.

Acknowledgements

This work has been partially supported by grants from New York State Science and Technology Foundation, Citicorp, and NSF CISE. We thank David Wolpert for many useful and insightful discussions that substantially improved the ideas presented in this paper.

References

[1] L. Breiman, J. H. Friedman, R. A. Olshen, and C. J. Stone. Classification and Regression Trees. Wadsworth, Belmont, CA, 1984.
[2] W. Buntine and R. Caruana. Introduction to IND and Recursive Partitioning. NASA Ames Research Center, 1991.
[3] J. Catlett. Megainduction: A test flight. In Proc. Eighth Intl. Work. Machine Learning, pages 596-599, 1991.
[4] P. Chan and S. Stolfo. Experiments on multistrategy learning by meta-learning. Submitted to CIKM93, 1993.
[5] P. Chan and S. Stolfo. Meta-learning for multistrategy and parallel learning. In Proc. Second Intl. Work. on Multistrategy Learning, 1993. To appear.
[6] P. Chan and S. Stolfo. Toward multistrategy parallel and distributed learning in sequence analysis. In Proc. First Intl. Conf. Intel. Sys. Mol. Biol., 1993. To appear.
[7] P. Clark and T. Niblett. The CN2 induction algorithm. Machine Learning, 3:261-285, 1987.
[8] S. Cost and S. Salzberg. A weighted nearest neighbor algorithm for learning with symbolic features. Machine Learning, 10:57-78, 1993.
[9] Y. Freund. Boosting a weak learning algorithm by majority. In Proc. 3rd Work. Comp. Learning Theory, pages 202-216, 1990.
[10] L. Holder. Selection of learning methods using an adaptive model of knowledge utility. In Proc. MSL-91, pages 247-254, 1991.
[11] C. Matheus, P. Chan, and G. Piatetsky-Shapiro. Systems for knowledge discovery in databases. IEEE Trans. Know. Data. Eng., 1993. To appear.
[12] T. M. Mitchell. The need for biases in learning generalizations. Technical Report CBM-TR-117, Dept. Comp. Sci., Rutgers Univ., 1980.
[13] N. Qian and T. Sejnowski. Predicting the secondary structure of globular proteins using neural network models. J. Mol. Biol., 202:865-884, 1988.
[14] J. R. Quinlan. Induction over large data bases. Technical Report STAN-CS-79-739, Comp. Sci. Dept., Stanford Univ., 1979.
[15] J. R. Quinlan. Induction of decision trees. Machine Learning, 1:81-106, 1986.
[16] R. Schapire. The strength of weak learnability. Machine Learning, 5:197-226, 1990.
[17] B. Silver, W. Frawley, G. Iba, J. Vittal, and K. Bradford. ILS: A framework for multi-paradigmatic learning. In Proc. Seventh Intl. Conf. Machine Learning, pages 348-356, 1990.
[18] S. Stolfo, Z. Galil, K. McKeown, and R. Mills. Speech recognition in parallel. In Proc. Speech Nat. Lang. Work., pages 353-373. DARPA, 1989.
[19] G. Towell, J. Shavlik, and M. Noordewier. Refinement of approximate domain theories by knowledge-based neural networks. In Proc. AAAI-90, pages 861-866, 1990.
[20] B. Wah et al. High performance computing and communications for grand challenge applications: Computer vision, speech and natural language processing, and artificial intelligence. IEEE Trans. Know. Data. Eng., 5(1):138-154, 1993.
[21] J. Wirth and J. Catlett. Experiments on the costs and benefits of windowing in ID3. In Proc. Fifth Intl. Conf. Machine Learning, pages 87-99, 1988.
[22] D. Wolpert. Stacked generalization. Neural Networks, 5:241-259, 1992.
[23] X. Zhang, M. Mckenna, J. Mesirov, and D. Waltz. An efficient implementation of the backpropagation algorithm on the connection machine CM-2. Technical Report RL89-1, Thinking Machines Corp., 1989.
[24] X. Zhang, J. Mesirov, and D. Waltz. A hybrid system for protein secondary structure prediction. J. Mol. Biol., 225:1049-1063, 1992.