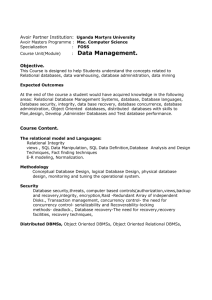

Andrews University School of Education DATA WAREHOUSING
