A Framework for Analyzing Levels of Analysis Issues in Studies of E

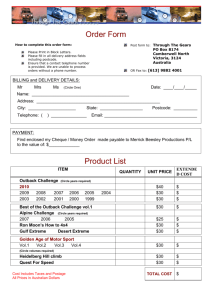
