- Department of Geography

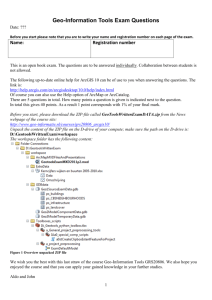

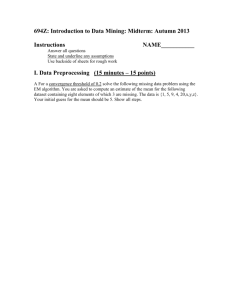
