10.2478/v10048-009-0004-8

MEASUREMENT SCIENCE REVIEW, Volume 9, Section 1, No. 1, 2009

Critical Values for Testing Location-Scale Hypothesis

František Rublík

Institute of Measurement Science, Slovak Academy of Sciences, Dúbravská cesta 9, 84104 Bratislava, Slovakia

E-mail: umerrubl@savba.sk

The exact critical points for selected sample sizes and significance levels are tabulated for the two-sample test statistic which is a combination of the Wilcoxon and the Mood test statistics. This statistic serves for testing the null hypothesis that two sampled populations have the same location and scale parameters.

Keywords: distribution-free rank test; combination of rank statistics; small sample sizes; location-scale hypothesis.

1. Introduction

Suppose that the random variables $X$, $Y$ have the distribution functions

$$P(X \le t) = F\!\left(\frac{t - \mu_X}{\sigma_X}\right), \qquad P(Y \le t) = F\!\left(\frac{t - \mu_Y}{\sigma_Y}\right), \tag{1}$$

where the real numbers $\mu_X$, $\mu_Y$ denote the location parameters, $\sigma_X > 0$, $\sigma_Y > 0$ are scale parameters and $F$ is a continuous distribution function defined on the real line. Assume that $X_1, \ldots, X_m$ is a random sample from the distribution of $X$, $Y_1, \ldots, Y_n$ is a random sample from the distribution of $Y$ and these random samples are independent. Let $(R_1, \ldots, R_N)$, $N = m + n$, denote the ranks of the pooled sample $(X_1, \ldots, X_m, Y_1, \ldots, Y_n)$. The hypothesis

$$H_0:\ \mu_X = \mu_Y, \quad \sigma_X = \sigma_Y \tag{2}$$

is sometimes also called the null location-scale hypothesis.

If the populations $X$ and $Y$ differ in their location parameters only, then the tests designed for detecting this change (e.g. the Wilcoxon-Mann-Whitney or the van der Waerden test) can be used to detect this difference. Similarly, if $X$ and $Y$ differ in the scale parameter only, then the tests designed for detecting this change (e.g. the Mood or the Ansari-Bradley test) can be used. However, the tests designed for detecting a difference of location usually do not perform well when the change occurs in the scale parameter only. Similarly, the tests designed for detecting a difference of scale usually do not perform well when the change occurs in the location parameter only. But in a real situation it is not known which type of change will occur, and if the experimenter is interested both in the difference of location and in the difference of scale, the use of the test statistics mentioned before will not be advantageous. This is due to the fact that such a difference cannot simply be detected in one step, and a two-step use of the mentioned tests would inflate the significance level (the probability of the type I error) above its nominal value. What is even more important is the fact that in practice a change in location is very often accompanied by a change in scale, and in such a case the statistics constructed especially for testing the location-scale null hypothesis (2) usually yield better results than the statistics constructed especially for a change of location only or especially for a change of scale only. Readers interested in further discussion of the need for testing the hypothesis (2) can find further arguments in Section 1 of [6] or in [3].

We shall deal with a test based on the ranks of the observations. As is well known, the advantage of such a test is that in the setting (1) with $F$ continuous, the use of this rank test guarantees that the significance level will have the chosen value $\alpha$. The null hypothesis (2) is usually tested against the alternative $H_1$ that at least one of the equalities in (2) does not hold by means of the Lepage test from [4]. Critical constants of this test for small sample sizes can be found in [5]; the Lepage test is also included in the monograph [2]. The Lepage test statistic is given by the formula

$$T = T_K + T_B, \qquad T_K = \frac{\left(S_W - E(S_W \mid H_0)\right)^2}{\mathrm{Var}(S_W \mid H_0)}, \qquad T_B = \frac{\left(S_B - E(S_B \mid H_0)\right)^2}{\mathrm{Var}(S_B \mid H_0)}, \tag{3}$$

where $S_W = \sum_{i=1}^{m} R_i$ is the Wilcoxon rank test statistic and $S_B = \sum_{i=1}^{m} a_N(R_i)$ is the Ansari-Bradley rank test statistic (i.e., the vector of scores is $a_N = (1, 2, \ldots, k, k, \ldots, 2, 1)$ if $N = 2k$ and $a_N = (1, 2, \ldots, k, k + 1, k, \ldots, 2, 1)$ if $N = 2k + 1$). An analogous statistic has been formulated in the multisample setting in [7]; other test statistics for this problem have been studied in [8], where their non-centrality parameters for testing the null location-scale hypothesis are also computed for some situations. As shown on p. 283 of [8], the bounds for the asymptotic efficiency for the sampled distributions and quadratic rank statistics considered there do not depend on the number of sampled populations. As concluded ibidem, taking into account the computed values of the asymptotic efficiencies, one sees that a combination of the multisample Kruskal-Wallis statistic (in the two-sample case the Wilcoxon test statistic) with the Mood test statistic appears to be a good choice when one considers symmetric distributions whose type of tail weight is unknown. The statistics in [8] are defined in the general multisample setting, but they can also be used in the two-sample setting for small sample sizes, when the tables of the critical constants can be computed. However, the only available tables of critical constants for testing the location-scale hypothesis are those published in [5]. The mentioned table is computed for m=2(1)30, n=2; m=3(1)26, n=3; m=4(1)18, n=4; m=5(1)13, n=5; m=6(1)10, n=6; m=7,8, n=7 (where a(1)b denotes the values a, a+1, ..., b) and the significance levels α = 0.01, 0.02, 0.05, 0.10 and 0.2. In this paper the significance level α = 0.005 is also included; extremely unbalanced sample sizes such as m = 2, n = 30 are not considered, and in their place critical constants for some other small sample sizes with m = 7(1)10 are computed.
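As an illustration of the Lepage statistic (3), the following Python sketch computes $T$ for two small samples without ties. It is not taken from the paper: the function names are hypothetical, and the null expectation and variance are obtained from the standard formulas for a linear rank statistic with scores $a(1), \ldots, a(N)$, namely $E(S \mid H_0) = m\bar a$ and $\mathrm{Var}(S \mid H_0) = \frac{mn}{N(N-1)} \sum_i (a(i) - \bar a)^2$, which is an assumption added here rather than a formula stated in the text.

```python
from fractions import Fraction


def pooled_ranks(x, y):
    """Ranks 1..N of the pooled sample (x first, then y); assumes no ties."""
    pooled = list(x) + list(y)
    if len(set(pooled)) != len(pooled):
        raise ValueError("ties present: this sketch assumes distinct observations")
    order = sorted(range(len(pooled)), key=pooled.__getitem__)
    ranks = [0] * len(pooled)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return ranks


def standardized_square(scores, x_ranks, n):
    """(S - E(S|H0))^2 / Var(S|H0) for S = sum of scores over the ranks of the X sample."""
    N = len(scores)
    m = len(x_ranks)
    s = sum(scores[r - 1] for r in x_ranks)
    mean = Fraction(sum(scores), N)
    expectation = m * mean
    variance = Fraction(m * n, N * (N - 1)) * sum((a - mean) ** 2 for a in scores)
    return (s - expectation) ** 2 / variance


def lepage_statistic(x, y):
    """Lepage statistic T = T_K + T_B of formula (3), returned as an exact Fraction."""
    m, n = len(x), len(y)
    N = m + n
    x_ranks = pooled_ranks(x, y)[:m]
    wilcoxon_scores = list(range(1, N + 1))
    # Ansari-Bradley scores (1, 2, ..., 2, 1): distance to the nearer end of the ranking
    ansari_bradley_scores = [min(i, N + 1 - i) for i in range(1, N + 1)]
    t_k = standardized_square(wilcoxon_scores, x_ranks, n)
    t_b = standardized_square(ansari_bradley_scores, x_ranks, n)
    return t_k + t_b
```

For instance, `lepage_statistic([1, 2, 3], [4, 5, 6, 7])` combines a Wilcoxon part of 9/2 with an Ansari-Bradley part of 9/26. Exact rational arithmetic is used only to keep the illustration free of rounding effects.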

2. Basic formulas

P

k2 ≤ (r + s)2 , then sj=1 i2j = k2 if and only if this equality

holds and (r + s) < {i1 , . . . , i s }.

(II) This assertion follows from the fact, that D(s, r, k1 , k2 )

is a union of the disjoint sets D(s, r − 1, k1 , k2 ) and

P

P

{(i1 , . . . , i s ) ∈ J(r, s); i s = s + r, sj=1 i j = k1 , sj=1 i2j = k2 }. If R = (R1 , . . . , RN ) is a random vector which is uniformly distributed over the set of all permutations of the set

{1, . . . , N}, then according to Theorem 1 on p. 167 of [1] for

any set A ⊂ {1, . . . , N} consisting of m distinct integers

!

N

P({R1 , . . . , Rm } = A) = 1

.

m

The topic of this paper is the computation of the critical values

for the two-sample test statistic (4). In accordance with [8] la- Combining this equality with Lemma 1 one can construct a

bel the combination of the Wilcoxon and the Mood statistic program for computation of critical values of the statistic (4).

by T S Q . Thus in the notation from the previous section

3. Tables of critical values

T S Q = T K + Q,

12

m(N + 1)

SW −

mn(N + 1)

2

!2

In this section we present the exact critical values of the statistic T S Q from (4), given in Table 2. First we describe the output

!2

of

the table.

2

(4)

m(N − 1)

180

Since the set V = V(m, n) of possible values of the statis,

S̃

−

Q=

12

mn(N + 1)(N 2 − 4)

tic

T

S Q is finite for all sample sizes m, n, generally one cannot

!2

m

X

find exact critical values for arbitrary prescribed probability α

N+1

S̃ =

Ri −

.

of the type I error. In the following table the number on the

2

i=1

intersection of the column for w with the row for significance

Since according to the assumptions the distribution function level α denotes the quantity

F in (1) is continuous, the statistic T S Q is distribution-free

w = min{t ∈ V; P(T S Q ≥ t) ≤ α},

whenever the null hypothesis (2) holds. The null hypothesis

(2) is rejected whenever T S Q ≥ wα . Values of wα = wα (m, n)

the entry corresponding to α and w is the quantity

can be found in the table presented in the next section, for the

sample sizes not included into the table instead of wα use the

w = max{t ∈ V; P(T S Q ≥ t) > α}.

(1 − α)th quantile of the chi-square distribution with 2 degrees

of freedom.

Further, for given α,

In the computation of the tables of this paper the following

p = P(T S Q ≥ w), p = P(T S Q ≥ w)

lemma is useful.

TK =

,

denote the corresponding probabilities of the type I error.

Thus p is the largest available significance level not exceeding α and p is the smallest available significance level greater

than α. The value of critical constant yielding the significance

level closer to the nominal level α is printed in boldface letter. If the difference in computed values exceeds the number

of decimal places used to describe the result of computation,

then the boldface symbol is used for the value corresponding

to p.

Lemma 1. Let J(m, n) denote the set of all m-tuples

(i1 , . . . , im ) consisting of integers such that 1 ≤ i1 < . . . <

im ≤ m + n. Suppose that

n

D(m, n, k1 , k2 ) = (i1 , . . . , im )

∈ J(m, n);

m

X

j=1

i j = k1 ,

m

X

j=1

o

i2j = k2 ,

and B(m, n, k1 , k2 ) denotes the number of elements of

D(m, n, k1 , k2 ).

4. Some simulation results

(I) Let s > 1. If at least one of the inequalities k1 ≤ (r + s),

k2 ≤ (r + s)2 holds, then B(s, r, k1 , k2 ) = B(s, r − 1, k1 , k2 ).

The aim of the simulation is to obtain a picture of the power

(II) If k1 > (s + r), k2 > (s + r)2 , then B(s, r, k1 , k2 ) = of tests based on the statistics (3) and (4) for small sample

B(s, r − 1, k1 , k2 ) + B(s − 1, r, k1 − (r + s), k2 − (r + s)2 ).

sizes covered by Table 2. To consider power of the concerned

tests in the case of distributions with various tail behaviour,

P

Proof. (I) If k1 ≤ r + s, then sj=1 i j = k1 if and only if the sampling from normal, logistic and Cauchy distribution is

this equality holds and (r + s) < {i1 , . . . , i s }. Similarly, if employed, in each case µX = 0, σX = 1. The simulations are

10

MEASUREMENT SCIENCE REVIEW, Volume 9, Section 1, No. 1, 2009

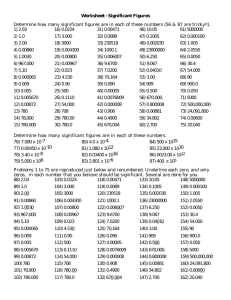

Table 1: Simulation estimates of the power.

m = 3, n = 7

µY = 1.5, σY = 3

P(T S Q ≥ wα |Normal)

P(T ≥ tα|Normal)

P(T S Q ≥ wα |Logistic)

P(T ≥ tα|Logistic)

P(T S Q ≥ wα |Cauchy)

P(T ≥ tα|Cauchy)

α = 0.05

0.057

0.032

0.032

0.017

0.041

0.025

µY = 3.5, σY = 4

α = 0.10

0.073

0.141

0.041

0.131

0.058

0.114

α = 0.05

0.202

0.136

0.073

0.047

0.084

0.060

α = 0.10

0.224

0.251

0.086

0.170

0.104

0.165

µY = 7, σY = 5

α = 0.05

0.533

0.435

0.191

0.137

0.174

0.138

α = 0.10

0.547

0.539

0.208

0.247

0.197

0.233

m = 7, n = 8

µY = 1.5, σY = 3

P(T S Q ≥ wα |Normal)

P(T ≥ tα|Normal)

P(T S Q ≥ wα |Logistic)

P(T ≥ tα|Logistic)

P(T S Q ≥ wα |Cauchy)

P(T ≥ tα|Cauchy)

α = 0.05

0.448

0.415

0.363

0.326

0.228

0.219

µY = 3.5, σY = 4

α = 0.10

0.640

0.605

0.540

0.510

0.323

0.356

α = 0.05

0.745

0.730

0.586

0.547

0.431

0.419

α = 0.10

0.886

0.868

0.765

0.732

0.566

0.568

µ2 = 7, σ2 = 5

α = 0.05

0.950

0.952

0.796

0.774

0.659

0.643

α = 0.10

0.987

0.984

0.915

0.900

0.759

0.757

based in each case on 10000 trials. The critical constants wα Lepage test.

of the statistic T S Q defined in (4) are taken from Table 2, the

critical constants tα of the statistic (3) are those computed in

Acknowledgments

[5]. The results of simulations are in Table 1 .The power better of the two considered cases is emphasized by the boldface

The research was supported by the grants VEGA 1/0077/09,

type.

APVV-SK-AT-0003-09, and APVV-RPEU-0008-06.

5. Discussion and conclusions

References

As shown on p. 283 of [8], the bounds for the asymptotic efficiency of the test statistics (3) and (4) in the case of there

considered sampled distributions do not depend on the number of sampled populations, i.e., they are the same in the

two-sample case and in the multisample case. As concluded

in [8], taking into account computed values of the asymptotic efficiencies, one sees that a combination of the multisample Kruskal-Wallis statistic (in the two-sample case the

Wilcoxon test statistic) with the Mood test statistic appears to

be a good choice when one considers symmetric distributions

whose type of tail weight is unknown. However, these considerations are related to the asymptotic case, when both m and n

tend to infinity. The simulation results, given in Table 1 do not

contradict the mentioned asymptotic results. After inspecting

Table 1 it can be said that for small sample sizes and α = 0.05

testing based on (4) is preferable to (3), but for α = 0.1 it is

advisable to use the Lepage test. This suggests that the test

based on (4) can be considerered as a useful competitor to the

11

[1] Hájek, J., Šidák, Z. & Sen, P. K. (1999). Theory of Rank Tests. Academic Press, San Diego.

[2] Hollander, M. & Wolfe, D. A. (1999). Nonparametric Statistical Methods. Wiley, New York.

[3] Hutchison, T. P. (2002). Should we routinely test for simultaneous location and scale changes? Ergonomics 45, 248-251.

[4] Lepage, Y. (1971). A combination of Wilcoxon's and Ansari-Bradley's statistics. Biometrika 58, 213-217.

[5] Lepage, Y. (1973). A table for a combined Wilcoxon Ansari-Bradley statistic. Biometrika 60, 113-116.

[6] Podgor, M. J. & Gastwirth, J. L. (1994). On non-parametric and generalized tests for the two-sample problem with location and scale change alternatives. Statistics in Medicine 13, 747-758.

[7] Rublík, F. (2005). The multisample version of the Lepage test. Kybernetika 41, 713-733.

[8] Rublík, F. (2007). On the asymptotic efficiency of the multisample location-scale rank tests and their adjustment for ties. Kybernetika 43, 279-306.
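For very small sample sizes, entries of the kind tabulated in Table 2 can be cross-checked by brute force. The following Python sketch is an illustration under stated assumptions, not the author's program: it enumerates all $\binom{N}{m}$ equally likely rank subsets instead of using Lemma 1, evaluates the statistic (4) in exact rational arithmetic, and returns, for a given $\alpha$, the smallest attainable value whose tail probability does not exceed $\alpha$ and the largest attainable value whose tail probability exceeds $\alpha$. All function names are hypothetical, and $N \ge 3$ is assumed so that the denominator $N^2 - 4$ in (4) is nonzero.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations
from math import comb


def tsq(x_ranks, m, n):
    """Statistic (4) for the ranks of the first sample, as an exact Fraction."""
    N = m + n
    s_w = sum(x_ranks)
    s_tilde = sum((r - Fraction(N + 1, 2)) ** 2 for r in x_ranks)
    t_k = Fraction(12, m * n * (N + 1)) * (s_w - Fraction(m * (N + 1), 2)) ** 2
    q = Fraction(180, m * n * (N + 1) * (N * N - 4)) * (
        s_tilde - Fraction(m * (N * N - 1), 12)
    ) ** 2
    return t_k + q


def null_distribution(m, n):
    """Map each attainable value t to P(T_SQ = t) under H0."""
    N = m + n
    counts = Counter(tsq(subset, m, n) for subset in combinations(range(1, N + 1), m))
    return {t: Fraction(c, comb(N, m)) for t, c in counts.items()}


def critical_values(m, n, alpha):
    """Return ((w, p) with p <= alpha, (w, p) with p > alpha); an entry is None if absent."""
    dist = null_distribution(m, n)
    alpha = Fraction(str(alpha))
    conservative = liberal = None
    tail = Fraction(0)
    for t in sorted(dist, reverse=True):
        tail += dist[t]  # tail = P(T_SQ >= t)
        if tail <= alpha:
            conservative = (t, tail)  # moves downward, ends at min{t : P <= alpha}
        else:
            liberal = (t, tail)  # first value with P > alpha, i.e. max{t : P > alpha}
            break
    return conservative, liberal
```

For m = 3, n = 3 and α = 0.1 this sketch gives the pair 27/7 ≈ 3.8571 with probability 0.1 and the pair 51/14 ≈ 3.6428 with probability 0.3. Enumeration grows as $\binom{N}{m}$, so it is only practical for the smallest sample sizes.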

Table 2: Critical values of the test statistic $T_{SQ}$ from (4).

α

w

p

m=3

0.200

0.100

0.050

0.020

0.010

0.005

3.8571

3.8571

.100

.100

m=3

0.200

0.100

0.050

0.020

0.010

0.005

3.5520

4.2077

6.3272

.179

.083

.048

m=3

0.200

0.100

0.050

0.020

0.010

0.005

3.2649

4.1147

5.5213

8.1098

8.9011

.191

.082

.045

.018

.009

m=3

0.200

0.100

0.050

0.020

0.010

0.005

3.0754

4.1233

5.3559

7.3751

9.3529

10.8733

.185

.099

.048

.018

.009

.0044

m=3

0.200

0.100

0.050

0.020

0.010

0.005

2.9745

4.1324

5.5500

7.7052

8.9403

10.4904

.196

.098

.049

.020

.010

.0049

m=3

0.200

0.100

0.050

0.020

0.010

0.005

2.9361

3.9796

5.3942

7.5072

9.0206

10.9565

.200

.100

.050

.020

.009

.0045

w

p

w

n=3

3.6428

3.6428

3.8571

3.8571

3.8571

3.8571

m=3

.300

.300

.100

.100

.100

.100

4.1666

n=6

3.2796

3.6121

4.7342

6.4519

6.4519

6.4519

.202

.107

.071

.024

.024

.024

3.1298

4.2532

5.5000

7.3181

.200

.100

.055

.027

.018

.009

3.0649

4.1558

5.7662

8.5714

9.6103

.207

.103

.052

.022

.013

.0088

3.0101

4.0495

5.4958

7.5350

9.6862

11.4342

.196

.100

.046

.018

.007

.0036

m=3

.201

.103

.051

.022

.012

.0074

2.9600

4.0403

5.3113

7.7315

9.4113

10.9618

n=18

2.9346

3.9680

5.3563

7.2937

9.0135

10.2827

.199

.094

.049

.014

.007

m=3

n=15

2.9535

4.0342

5.3508

7.2552

8.5929

10.2632

.2

.100

.0500

.017

m=3

n=12

3.0509

4.0607

5.3110

6.9951

8.8842

9.3529

.143

m=3

n=9

3.1184

4.0659

5.4434

6.9572

8.1098

8.9011

p

.198

.098

.050

.019

.008

.0041

m=3

.201

.102

.054

.021

.011

.0060

2.9725

4.0114

5.5537

7.5125

9.2757

11.1522

.197

.099

.049

.019

.009

.0039

w

p

w

n=4

3.1666

4.6666

4.6666

4.6666

4.6666

4.6666

m=3

.257

.114

.114

.114

.114

.114

3.9555

5.5555

n=7

3.0519

4.0454

4.2980

7.0000

7.3181

7.3181

.217

.117

.067

.033

.017

.017

3.3803

4.1880

6.2435

8.1367

.203

.108

.056

.021

.014

.007

3.0363

4.0621

5.1757

8.2787

10.2667

.204

.104

.050

.021

.011

.0071

2.9507

4.0935

5.5371

7.7827

9.9883

11.9532

.200

.099

.047

.018

.009

.0029

m=3

.200

.100

.052

.021

.010

.0062

2.94899

3.9981

5.5137

7.5665

9.5494

11.4046

n=19

2.9416

4.0034

5.5308

7.4759

9.1235

10.6854

.198

.099

.049

.016

.006

m=3

n=16

2.9512

3.9953

5.2542

7.3407

9.0836

10.5143

.176

.091

.036

.012

m=3

n=13

2.9532

4.0478

5.3165

7.4057

9.4535

9.6862

.143

.071

m=3

n=10

3.0026

3.8961

5.1532

7.6363

8.5714

9.6103

p

.198

.098

.049

.019

.009

.0035

m=3

.200

.100

.051

.022

.010

.0052

2.9438

4.0038

5.4752

7.7419

9.4466

11.0676

.199

.100

.050

.019

.009

.0045

w

p

2.8888

3.9555

5.5555

5.5555

5.5555

5.5555

.214

.143

.071

.071

.071

.071

n=5

n=8

3.2435

4.0213

4.9359

7.5897

8.1367

8.1367

.212

.103

.061

.024

.012

.012

n=11

2.9454

4.0606

5.0303

6.8015

8.9833

10.2667

.203

.104

.055

.022

.011

.0055

n=14

2.9239

3.9398

5.4970

7.6424

8.4444

9.9883

.206

.101

.050

.021

.012

.0059

n=17

2.94899

3.9879

5.4526

7.2454

8.7461

10.7446

.200

.100

.051

.021

.011

.0053

n=20

2.9419

3.9342

5.4142

7.7142

9.3609

10.8086

.202

.101

.051

.020

.010

.0056

Table 2 (cont.)

α

w

p

m=3

0.200

0.100

0.050

0.020

0.010

0.005

2.9728

4.0028

5.4801

7.6875

9.5134

11.1861

.199

.100

.049

.020

.010

.0049

m=3

0.200

0.100

0.050

0.020

0.010

0.005

2.9346

3.9901

5.5686

7.6349

9.4075

10.8813

.198

.099

.050

.019

.010

.0048

m=4

0.200

0.100

0.050

0.020

0.010

0.005

4.0833

5.3333

.143

.057

m=4

0.200

0.100

0.050

0.020

0.010

0.005

3.3150

4.3809

5.4065

7.3589

7.8974

8.0769

.197

.094

.045

.015

.009

.0030

m=4

0.200

0.100

0.050

0.020

0.010

0.005

3.2362

4.2450

5.3450

7.0050

7.8862

9.6562

.199

.099

.049

.019

.009

.0050

m=4

0.200

0.100

0.050

0.020

0.010

0.005

3.1821

4.1970

5.3630

7.1794

8.2294

9.6545

.200

.100

.049

.019

.010

.0046

w

p

w

n=21

2.9421

3.9893

5.4174

7.4701

9.0867

10.1608

m=3

.201

.101

.050

.021

.011

.0059

2.9334

4.0059

5.4788

7.6777

9.4325

11.5446

n=24

2.9305

3.9696

5.5463

7.5029

9.1548

10.6064

.200

.100

.051

.021

.010

.0055

2.9230

3.9645

5.5424

7.6441

9.1838

10.9392

.257

.114

.057

.057

.057

.057

3.4088

4.0353

5.4285

6.3636

6.3636

.203

.100

.052

.021

.015

.0091

3.3681

4.2706

5.1442

6.3804

8.7012

.198

.099

.048

.018

.006

m=4

.201

.101

.051

.021

.013

.0070

3.2417

4.2284

5.2468

6.8384

8.2702

10.2907

n=13

3.1713

4.1781

5.3576

7.0958

8.1727

9.5330

.198

.087

.040

.008

.008

m=4

n=10

3.2050

4.2262

5.3362

6.5362

7.6762

9.0262

.199

.100

.049

.020

.010

.0049

m=4

n=7

3.2637

4.3260

5.3809

6.5897

7.3589

7.8974

.200

.100

.050

.0200

.010

.0043

m=3

n=4

3.9833

4.3333

5.3333

5.3333

5.3333

5.3333

p

.200

.099

.050

.020

.010

.0037

m=4

.200

.102

.050

.020

.011

.0055

3.1729

4.1513

5.2953

7.2302

8.4144

9.9476

.200

.100

.050

.020

.009

.0049

w

p

w

n=22

2.9296

3.9927

5.4576

7.5263

9.0514

10.5105

m=3

.201

.100

.050

.021

.010

.0052

2.9329

4.0080

5.5554

7.6948

9.2269

10.845

n=25

2.9196

3.9645

5.4941

7.6152

9.0769

10.4910

.200

.101

.050

.020

.010

.0055

2.9518

3.9828

5.6001

7.7392

9.1764

10.6898

.214

.103

.056

.024

.024

.008

3.5681

4.5284

4.8465

7.0000

7.2727

7.2727

.202

.103

.053

0.022

.010

.0061

3.2467

4.2337

5.5064

6.9610

8.2337

9.4285

.194

.099

.046

.018

.010

.0042

m=4

.201

.100

.051

.021

.011

.0051

3.2324

4.2535

5.3403

7.0168

8.7682

9.3060

n=14

3.1701

4.1393

5.2615

7.1506

8.3009

9.6938

.190

.095

.048

.014

.005

.0048

m=4

n=11

3.2056

4.1673

5.2347

6.7829

8.2625

9.7909

.200

.100

.050

.020

.010

.0049

m=4

n=8

3.3269

4.2417

5.0824

6.3804

8.1978

8.7912

.200

.099

.049

.019

.010

.0046

m=3

n=5

3.4088

3.9885

5.2322

6.1298

6.1298

6.3636

p

.200

.100

.050

.019

.009

.0049

m=4

.200

.100

.051

.020

.010

.0056

3.1781

4.1361

5.3610

7.3428

8.5902

10.1045

.200

.099

.050

.020

.010

.0049

w

p

2.9314

3.9974

5.5378

7.5875

9.0567

10.5965

.201

.100

.050

.020

.011

.0054

n=23

n=26

2.9288

3.9775

5.5736

7.6155

9.0829

10.6528

.200

.100

.050

.020

.010

.0055

n=6

3.4375

4.1363

4.8181

6.4375

7.0000

7.0000

.200

.105

.057

.024

.014

.014

n=9

3.2467

4.1991

5.3766

6.9350

7.1428

8.9610

.200

.105

.052

.021

.013

0. 070

n=12

3.1799

4.2156

5.2913

7.0035

8.3375

8.9698

.201

.101

.052

.020

.010

.0060

n=15

3.1734

4.1280

5.3381

7.3045

8.5122

9.7811

.201

.100

.050

.020

.010

.0054

Table 2 (cont.)

α

w

p

m=4

0.200

0.100

0.050

0.020

0.010

0.005

3.1769

4.1680

5.3877

7.3336

8.5665

10.0103

0.199

.100

.050

.020

.010

.0047

m=4

0.200

0.100

0.050

0.020

0.010

0.005

3.1586

4.1541

5.3977

7.3112

8.7481

10.2226

.200

.100

.049

.020

.010

.0049

m=5

0.200

0.100

0.050

0.020

0.010

0.005

3.5290

4.4618

5.1818

6.8181

6.8181

.198

.095

.048

.008

.008

m=5

0.200

0.100

0.050

0.020

0.010

0.005

3.2961

4.4026

5.4935

6.7558

7.4415

8.3532

.197

.096

.046

.018

.009

.0047

m=5

0.200

0.100

0.050

0.020

0.010

0.005

3.2895

4.3676

5.4004

6.7731

8.0235

9.4096

.200

.100

.050

.020

.010

.0046

m=5

0.200

0.100

0.050

0.020

0.010

0.005

3.2444

4.3277

5.4038

7.0441

8.2403

9.5495

.200

.100

.050

.020

.010

.0050

w

p

w

n=16

3.1669

4.1661

5.3779

7.2881

8.5638

9.8625

m=4

.200

.101

.051

.020

.010

.0052

3.1482

4.2149

5.4040

7.3345

8.6124

9.9360

n=19

3.1398

4.1436

5.3751

7.2699

8.7225

10.1015

.200

.100

.050

.020

.010

.0051

3.1397

4.1493

5.3985

7.3358

8.7746

10.2820

.214

.111

.063

.024

.024

.008

3.4017

4.5094

5.0735

6.2940

7.4837

7.6068

.202

.106

.051

.021

.011

.0062

3.2900

4.4666

5.4444

6.6944

7.7777

8.2711

.200

.095

.050

.020

.010

.0050

m=5

.200

.100

.051

.020

.010

.0050

3.2467

4.3321

5.4362

6.9356

8.2432

9.1812

n=14

3.2438

4.3228

5.3989

7.0403

8.1971

9.4789

.197

.097

.050

.019

.009

.0043

m=5

n=11

3.2565

4.3590

5.3943

6.7034

7.9123

9.1162

.200

.100

.050

.020

.010

.0050

m=5

n=8

3.2961

4.3246

5.3532

6.4285

7.3168

8.2987

.200

.100

.050

.020

.010

.0048

m=4

n=5

3.2618

4.1836

4.8381

6.4090

6.4090

6.8181

p

.200

.100

.050

.020

.010

.0048

m=5

.200

.100

.050

.020

.010

.0052

3.2299

4.3187

5.4186

7.0269

8.4011

9.5897

.200

.100

.050

.020

.010

.0049

w

p

w

n=17

3.1464

4.2096

5.4038

7.2867

8.5756

9.7931

m=4

.201

.100

.050

.020

.010

.0052

3.1530

4.1820

5.3659

7.2789

8.7934

10.1821

n=20

3.1373

4.1479

5.3844

7.3185

8.7486

10.1472

.200

.100

.050

.020

.010

.0052

3.1371

4.1464

5.4134

7.4488

8.9051

10.2684

.201

.102

.058

.024

.011

.0087

3.3827

4.5054

5.4474

6.5852

7.4442

.201

.102

.051

.021

.011

.0060

3.3292

4.3595

5.3404

6.8762

7.6948

8.9092

.200

.100

.050

.020

.010

.0047

m=5

.200

.101

.050

.020

.010

.0052

3.2576

4.3295

5.4002

6.9724

8.2860

9.3724

n=15

3.2294

4.3173

5.4154

7.0255

8.3831

9.5786

.199

.098

.048

.018

.008

m=5

n=12

3.2444

4.3251

5.4339

6.8584

8.1988

8.9286

.200

.100

.050

.020

.010

.0049

m=5

n=9

3.2622

4.4100

5.4233

6.6400

7.5600

8.2400

.200

.099

.050

.0202

.010

.0048

m=4

n=6

3.3333

4.4273

5.0427

6.0683

6.8376

7.4837

p

.200

.100

.050

.020

.010

.0049

m=6

.200

.100

.050

.020

.010

.0050

3.4981

4.4981

5.4212

6.3553

7.4102

8.0109

.199

.100

.048

.019

.006

.0043

w

p

3.1413

4.1775

5.3559

7.2681

8.7173

10.1168

.200

.100

.050

.020

.010

.0051

n=18

n=21

3.1313

4.1330

5.4077

7.4122

8.8223

10.2359

.200

.100

.050

.020

.010

.0051

n=7

3.3450

4.4376

5.3871

6.4270

7.3538

8.4615

.202

.101

.051

.020

.010

.0051

n=10

3.3143

4.3405

5.3241

6.7239

7.6066

8.8310

.200

.101

.050

.020

.011

.0053

n=13

3.2576

4.3216

5.4002

6.9305

8.1773

9.2534

.200

.100

.050

.020

.010

.0051

n=6

3.2674

4.4835

5.4212

6.1648

7.2527

7.4102

.203

.104

.052

.024

.011

.0065

Table 2 (cont.)

α

w

p

m=6

w

p

w

n=7

p

w

m=6

p

n=8

3.3543

4.4749

5.4545

6.5677

7.2727

8.2300

.200

.100

.048

.019

.010

.0041

3.3321

4.4452

5.4545

6.3747

6.9758

8.0222

.203

.101

.050

.020

.011

.0051

3.3500

4.4468

5.4333

6.5302

7.3375

8.3218

.197

.100

.050

.020

.010

.0047

3.3375

4.4385

5.4218

6.5218

7.2708

8.1968

.201

.101

.051

.020

.011

.0053

0.200

0.100

0.050

0.020

0.010

0.005

3.3170

4.4683

5.4700

6.8313

7.7563

8.5019

m=6

.200

.100

.049

.020

.010

.0049

n=10

3.3086

4.4487

5.4683

6.8016

7.6605

8.4733

.201

.101

.050

.020

.010

.0054

3.2812

4.4572

5.4694

6.8474

7.8872

8.9718

m=6

.200

.100

.050

.020

.010

.0048

n=11

3.2748

4.4550

5.4630

6.8410

7.8745

8.8718

.202

.100

.051

.020

.010

.0050

0.200

0.100

0.050

0.020

0.010

0.005

3.3020

4.4653

5.5602

6.7183

7.2500

8.0500

.200

.100

.049

.020

.010

.0047

m=7

0.200

0.100

0.050

0.020

0.010

0.005

3.3223

4.5132

5.5739

6.8912

7.7453

8.5894

.199

.100

.050

.020

.010

.0050

m=8

0.200

0.100

0.050

0.020

0.010

0.005

3.3161

4.5215

5.5567

6.8413

7.6685

8.2352

.199

.099

.050

.020

.010

.0050

m=9

0.200

0.100

0.050

0.020

0.010

0.005

3.3001

4.5414

5.6218

6.8923

7.7602

8.5224

.200

.100

.050

.020

.010

.0050

n=7

3.2857

4.4500

5.5551

6.6163

7.0499

7.8622

m=7

.203

.101

.050

.021

.011

.0058

3.3223

4.4931

5.5029

6.7162

7.4921

8.0855

n=10

3.2982

4.4972

5.5719

6.8711

7.7052

8.5714

m=7

.201

.100

.050

.020

.010

.0051

3.2836

4.5140

5.5428

6.8373

7.7821

8.7792

n=8

3.3114

4.5063

5.5509

6.7904

7.5215

8.1060

.200

.100

.050

.020

.010

.0050

m=8

.200

.101

.050

.020

.010

.0053

3.3294

4.5126

5.6062

6.8323

7.6510

8.4580

n=9

3.2938

4.5321

5.6174

6.8849

7.7500

8.4931

.200

.100

.050

.020

.010

.0048

.199

.100

.050

.020

.010

.0050

m=9

.200

.100

.050

.020

.010

.0051

3.2831

4.5346

5.6481

6.9801

7.8680

8.7150

.200

.100

.050

.020

.010

.0050

m=6

.200

.100

.0050

.020

.010

.0054

n=12

3.2998

4.4561

5.5087

6.8837

7.9539

9.0235

.200

.100

.050

.020

.010

.0050

3.2998

4.4473

5.5065

6.8771

7.9100

8.9183

.200

.100

.050

.020

.010

.0051

3.3445

4.5012

5.5694

6.7635

7.5478

8.4740

3.2905

4.4804

5.6012

6.9289

7.9493

8.9306

n=9

3.3138

4.4990

5.6042

6.8245

7.6413

8.4541

.200

.100

.050

.020

.010

.0050

m=8

.200

.100

.050

.020

.010

.0051

3.2947

4.5401

5.6291

6.9243

7.7567

8.6375

n=10

3.2815

4.5308

5.6468

6.9779

7.8563

8.7101

.198

.100

.050

.020

.010

.0047

m=7

.200

.100

.050

.020

.010

.0050

.200

.100

.050

.020

.010

.0050

m=10

.200

.100

.100

.020

.010

.0050

n=9

3.2843

4.5020

5.4296

6.7569

7.4612

8.2782

n=11

3.2831

4.5140

5.5428

6.8373

7.7805

8.7648

p

.199

.100

.050

.020

.010

.0050

m=7

.200

.100

.050

.020

.010

.0051

w

3.2953

4.5037

5.4449

6.7825

7.5588

8.2953

n=8

3.3189

4.4921

5.5029

6.6980

7.4686

7.9873

p

m=6

0.200

0.100

0.050

0.020

0.010

0.005

m=7

w

3.2938

4.5354

5.6922

7.0358

7.9567

8.7702

.200

.100

.050

.020

.010

.0050

n=9

3.3328

4.4756

5.5480

6.7581

7.5424

8.4441

.200

.100

.050

.020

.010

.0051

n=12

3.2871

4.4789

5.6000

6.9159

7.9373

8.9087

.201

.101

.050

.020

.010

.0050

n=10

3.2922

4.5401

5.6236

6.9159

7.7552

8.6138

.200

.100

.050

.020

.010

.0050

n=10

3.2922

4.5224

5.6862

7.0311

7.9475

8.7657

.200

.100

.050

.020

.010

.0050
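The counting recursion of Lemma 1 can be turned into a tail-probability routine along the following lines. This Python sketch is only one possible reading of how the lemma might be combined with the uniform distribution of rank subsets; it is not the program used for Table 2. The function names are hypothetical, the boundary cases $s = 1$ and $r = 0$ are added here because Lemma 1 itself does not cover them, and the identity $\tilde S = \sum_i R_i^2 - (N+1) S_W + m(N+1)^2/4$ is used to express the statistic (4) through the sum $k_1$ and the sum of squares $k_2$ of the ranks.

```python
from fractions import Fraction
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def count_tuples(s, r, k1, k2):
    """B(s, r, k1, k2): tuples 1 <= i_1 < ... < i_s <= s + r with sum k1, sum of squares k2."""
    top = s + r
    if s == 1:  # boundary case (not part of Lemma 1): the single index must equal k1
        return 1 if 1 <= k1 <= top and k2 == k1 * k1 else 0
    if r == 0:  # boundary case (not part of Lemma 1): only the tuple (1, ..., s) exists
        return 1 if (k1 == s * (s + 1) // 2 and k2 == s * (s + 1) * (2 * s + 1) // 6) else 0
    if k1 <= top or k2 <= top * top:  # Lemma 1 (I): the index s + r cannot occur
        return count_tuples(s, r - 1, k1, k2)
    # Lemma 1 (II): split according to whether the largest index equals s + r
    return count_tuples(s, r - 1, k1, k2) + count_tuples(s - 1, r, k1 - top, k2 - top * top)


def tsq_from_sums(m, n, k1, k2):
    """Statistic (4) expressed through k1 = sum of ranks and k2 = sum of squared ranks."""
    N = m + n
    s_tilde = k2 - (N + 1) * k1 + Fraction(m * (N + 1) ** 2, 4)
    t_k = Fraction(12, m * n * (N + 1)) * (k1 - Fraction(m * (N + 1), 2)) ** 2
    q = Fraction(180, m * n * (N + 1) * (N * N - 4)) * (
        s_tilde - Fraction(m * (N * N - 1), 12)
    ) ** 2
    return t_k + q


def tail_probability(m, n, t):
    """P(T_SQ >= t) under H0, obtained by summing the counts B(m, n, k1, k2)."""
    N = m + n
    k1_values = range(m * (m + 1) // 2, m * (2 * N - m + 1) // 2 + 1)
    k2_min = sum(i * i for i in range(1, m + 1))
    k2_max = sum(i * i for i in range(n + 1, N + 1))
    favourable = sum(
        count_tuples(m, n, k1, k2)
        for k1 in k1_values
        for k2 in range(k2_min, k2_max + 1)
        if tsq_from_sums(m, n, k1, k2) >= t
    )
    return Fraction(favourable, comb(N, m))
```

With m = 3, n = 3 the call `tail_probability(3, 3, Fraction(27, 7))` reproduces the probability 0.1 attached to the value 3.8571. The memoised recursion avoids listing the subsets themselves, which is the point of counting by $(k_1, k_2)$ rather than enumerating.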