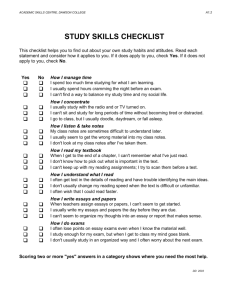

Automated Essay Scoring in the Classroom

Paper Presented at the American Educational Research Association
April 7-11, 2006
San Francisco, California

Douglas Grimes
Dept. of Informatics
University of California, Irvine
grimesd@ics.uci.edu

Mark Warschauer
Dept. of Education
University of California, Irvine
markw@uci.edu

(please check with authors before citing or quoting)

AERA 2006, Symposium on Technology and Literacy

Abstract

Automated essay scoring (AES) software uses natural language processing techniques to evaluate essays and generate feedback to help students revise their work. We used interviews, surveys, and classroom observations to study teachers and students using AES software in five elementary and secondary schools. In spite of generally positive attitudes toward the software, levels of use were lower than expected. Although teachers and students valued the automated feedback as an aid for revision, teachers scheduled little time for revising, and students made little use of the feedback except to correct spelling errors. There was no evidence of AES favoring or disfavoring particular groups of students.

Introduction

The global information economy places increasing demands on literacy skills (Marshall et al., 1992; Zuboff, 1989), yet literacy has been lagging in the U.S. for decades (National Commission on Excellence in Education, 1983). Increased writing practice is a cornerstone for improving literacy levels (National Commission on Writing in America's Schools and Colleges, 2003). However, writing teachers are challenged to find the time to evaluate their students’ essays. If a teacher wants to spend five minutes per student per week correcting papers for 180 students, she must somehow find 15 hours per week, generally after hours.
Automated Essay Scoring (AES) software has been applied for two primary purposes: fast, cost-effective grading of high-stakes writing tests, and classroom use, where it enables students to write more while easing the grading burden on teachers. On a 6-point rating scale, machine scoring is about as reliable as human scoring: agreement between a human grader and a machine has been as high as agreement between two human graders for at least three major AES programs. Although much has been published on the reliability of automated scoring and its application to high-stakes tests such as the GMAT and TOEFL exams, less has been published on AES as a tool for teaching writing (exceptions: Attali, 2004; Elliot et al., 2004; Shermis et al., 2004). Our research on AES in grades 5 through 12 explores how students and teachers use it and how their attitudes and usage patterns vary by social context.

AES uses artificial intelligence (AI) to evaluate essays. It compares lexical and syntactic features of an essay with several hundred baseline essays scored by human graders. Thus, scores reflect the judgments of the humans who graded the baseline essays. In addition to scoring essays, the programs flag potential errors in spelling, grammar and word usage, and suggest ways to improve organization and development.

We studied two popular AES programs, MY Access, from Vantage Learning, and Criterion, from ETS Technologies, a for-profit subsidiary of the Educational Testing Service. Both have two engines, one that scores essays, and another that provides writing guidance and feedback for revising.

Prior Research: Context and Controversy

Most prior AES research falls into two categories: the technical features of natural language processing (NLP), and reliability studies based on comparing human graders with a machine grader (AES program).
Computers are notoriously fallible in emulating the critical thinking required to assess writing (e.g., Dreyfus, 1991). People become wary when computers make judgments and acquire an aura of expertise (Bandura, 1985). Hence it is no surprise that automated writing assessment has been sharply criticized. Among the concerns are: deskilling of teachers and decontextualization of writing (Hamp-Lyons, 2005); manipulation of essays to trick the software, biases against innovation, and separation of teachers from their students (Cohen et al., 2003); and violation of the essentially social nature of writing (CCCC Executive Committee, 1996). Most of the criticisms focus on the use of automated assessment in large-scale, high-stakes tests.

Classroom use of AES presents a very different scenario. The major vendors market their software to supplement but not replace human teachers, and recommend a low-stakes approach to classroom use of AES, encouraging teachers to assign the lion’s share of grades.

Criterion and MY Access are marketed mostly to upper elementary and secondary education schools, especially junior high and middle schools. We selected study sites accordingly – one K-8 school, three junior high schools, and one high school in Orange County, California. The student bodies varied widely in academic achievement, socioeconomic status, ethnic makeup, and access to computers.

Automated Writing Evaluation and Feedback

The dual-engine architecture of both Criterion and MY Access represents the merger of two streams of development in natural language processing (NLP) technology – statistical tools to emulate or surpass human graders in evaluating essays, and feedback tools for automated feedback on organization, style, grammar and spelling.
Ellis Page pioneered automated writing evaluation in the 1960s with Project Essay Grade (PEG), which used multiple regression analysis of measurable features of text, such as essay length and average sentence length, to build a scoring model based on a corpus of human-graded essays (Page, 1967; Shermis et al., 2001). In the 1990s ETS and Vantage Learning developed competing AES programs called e-rater and Intellimetric, respectively (Burstein, 2003; Cohen et al., 2003; Elliot & Mikulas, 2004). Like PEG, both employed regression models based on a corpus of human-graded essays. Since 1999 e-rater has been used in conjunction with a human grader to score the analytical writing assessment in GMAT, the standard entrance examination used by most business schools. Intellimetric has also been tested on college entrance examinations, although published details of the results are much sketchier (e.g., Elliot, 2001).

Automated feedback tools on writing mechanics (spelling, grammar, and word usage), style, and organization comprise a parallel stream of evolution. Feedback tools for mechanics and style developed in the 1980s (Kohut et al., 1995; MacDonald et al., 1982). Organization, syntax and style checking continue to present formidable technological challenges today (Burstein et al., 2003; Stanford NLP Group, 2005). ETS and Vantage Learning both addressed the market for their scoring engines by coupling each with a complementary feedback engine, and Criterion and MY Access emerged in the late 1990s.

Most of the research on AES adheres to an established model for evaluating large-scale tests which require human judgment. The goal is to maximize inter-rater reliability, i.e., minimize variability in scores among raters.
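As an illustration of what a human-versus-machine reliability comparison can look like, the sketch below computes two agreement rates that are often reported for essay scores on a 6-point scale: exact agreement (identical scores) and adjacent agreement (scores within one point). The paper does not say which statistic the cited studies used, so the choice of measures, the function name `agreement_rates`, and the sample scores are all assumptions made for illustration.

```python
from typing import Sequence, Tuple


def agreement_rates(scores_a: Sequence[int], scores_b: Sequence[int]) -> Tuple[float, float]:
    """Return (exact, adjacent) agreement between two raters.

    exact    = share of essays given identical scores
    adjacent = share of essays whose scores differ by at most one point
    """
    if len(scores_a) != len(scores_b) or len(scores_a) == 0:
        raise ValueError("both raters must score the same non-empty set of essays")
    n = len(scores_a)
    pairs = list(zip(scores_a, scores_b))
    exact = sum(a == b for a, b in pairs) / n
    adjacent = sum(abs(a - b) <= 1 for a, b in pairs) / n
    return exact, adjacent


# Hypothetical scores on a 6-point scale (not data from the study).
human = [4, 3, 5, 2, 6, 4, 3, 1]
machine = [4, 4, 5, 2, 4, 4, 3, 2]

exact, adjacent = agreement_rates(human, machine)
print(f"exact agreement: {exact:.3f}, adjacent agreement: {adjacent:.3f}")
```

Running the same function on two human raters' scores for the same essays would give the baseline against which a machine rater is judged.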
At least four automated evaluation programs – PEG, e-rater, Intellimetric, and Intelligent Essay Assessor (IEA, from Pearson Knowledge Technologies) – have scored essays roughly on a par with expert human raters (Burstein, 2003; Cohen et al., 2003; Elliot & Mikulas, 2004; Landauer et al., 2003).

Methodology

This is a mixed-methods exploratory case study to learn how AES is used in classrooms.

Research Sites and Participants: We studied AES use in four junior high schools and one high school in Southern California. The two junior high schools we studied most intensively were part of a larger 1:1 laptop study, where all students in certain grades had personal laptops that they used in most of their classes. One of the 1:1 schools was a new, high-SES K-8 school with mostly Caucasian and Asian students. The other was an older, low-SES junior high school with two-thirds Latino students. The other three schools (two junior high schools and one high school) used either laptop carts or computer labs.

Three schools used My Access; two used Criterion. Teachers using My Access were selected by availability, and included most of the language arts teachers in the 1:1 schools, plus a few teachers of other subjects in those schools, and three language arts or English teachers in non-1:1 schools. Teachers using Criterion were recommended by their principals.

Data collection: We recorded and transcribed semi-structured audio interviews with three principals, three technical administrators, and nine language arts teachers. We also observed approximately twenty language arts classes using MY Access and Criterion, conducted two focus groups with students, and examined student essays and reports of over 2,400 essays written with MY Access. Finally, we conducted surveys of teachers and students in the 1:1 laptop schools. Nine teachers and 564 students responded to the section on MY Access.
Findings

Our findings can be summarized in terms of usage patterns, attitudes, and social context:

1. How do teachers make use of automated essay scoring?
2. How do students make use of automated essay scoring?
3. How does usage vary by school and social context?

1. Teachers

Paradox #1: High opinions and low utilization

All of the teachers and administrators we interviewed expressed favorable views of their AES programs overall. Several talked glowingly about students’ increased motivation to write. The teacher survey gave quantitative support for interview data (Table 1): Six of the seven teachers said that My Editor, the MY Access function that highlights likely errors in mechanics (spelling, punctuation, and grammar), was helpful. Five of seven agreed that My Tutor, the function that gives generic advice on writing, was helpful. More important, all seven said they would recommend the program to other teachers, and six of seven said they thought the program helps students develop insightful, creative writing.

Table 1: Teacher Views of My Access

Please indicate how much you agree or disagree with each of the following statements

| Statement | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree | Ave. |
|---|---|---|---|---|---|---|
| The errors pointed out by My Editor are helpful to my students. | 0% (0) | 14% (1) | 0% (0) | 71% (5) | 14% (1) | 3.86 |
| The advice given in My Tutor is helpful to my students. | 0% (0) | 29% (2) | 0% (0) | 57% (4) | 14% (1) | 3.57 |
| I would recommend the program to other teachers. | 0% (0) | 0% (0) | 0% (0) | 86% (6) | 14% (1) | 4.14 |
| The program helps students develop insightful, creative writing. | 0% (0) | 0% (0) | 14% (1) | 86% (6) | 0% (0) | 3.86 |
| Average | | | | | | 3.86 |

Average scores are the weighted averages for the row: 1 = Strongly disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly agree.

Nine teachers answered the section of the survey on MY Access. Two of them said they used MY Access less than 15 minutes a week.
They answered “N/A” to the above questions and were eliminated from the responses reported here. Two of the remaining seven taught general education in fifth or sixth grade. The other five all taught 7th or 8th grade: four taught English Language Arts or English Development, and one taught special education.

AES makes it easier to manage writing portfolios. Warschauer notes in the context of 1:1 laptop programs:

A major advantage of automated writing evaluation was that it engaged students in autonomous activity while freeing up teacher time. Teachers still graded essays, but they could be more selective about which essays, and which versions, they chose to grade. In many cases, teachers allowed students to submit early drafts for automated computer scoring and a final draft for teacher evaluation and feedback. (Warschauer, in press, p. 108)

One teacher compared My Access to “a second pair of eyes” to watch over her students, making it easier to manage a classroom full of squirrelly students.

Given their positive attitudes toward My Access, we were surprised how infrequently teachers used it. 7th-grade students in two 1:1 laptop schools averaged only 2.38 essays each in the 2004-2005 school year (Table 2). Students in lower grades in the 1:1 laptop program, and 7th-grade students in non-1:1 schools, used My Access even less.

Table 2: My Access Use by 7th-Graders in Two 1:1 Laptop Schools, 2004-2005 School Year

| Revisions | Low-SES School: Essays | Low-SES School: % | High-SES School: Essays | High-SES School: % | Total: Essays | Total: % |
|---|---|---|---|---|---|---|
| 0 | 695 | 73% | 153 | 69% | 848 | 72% |
| 1 | 136 | 14% | 52 | 23% | 188 | 16% |
| 2 | 51 | 5% | 16 | 7% | 67 | 6% |
| >2 | 74 | 8% | 2 | 1% | 76 | 6% |
| Essays | 956 | 100% | 223 | 100% | 1,179 | 100% |
| Students | 403 | | 93 | | 496 | |
| Essays/Stu. | 2.37 | | 2.40 | | 2.38 | |

Explanation: In the low-SES school, 403 students produced 695 essays with no revisions, 136 with one revision, 51 with two, and 74 with more than two, a total of 956 essays, or 2.37 essays per student.
Similarly, in the high-SES school, 93 students produced 153 essays with no revisions, 52 with one revision, 16 with two, and 2 with more than two, a total of 223 essays, or 2.40 essays per student.

In spite of limited use, teachers said that MY Access allowed them to assign more writing. The most common explanation for low use was lack of time. Several teachers said they would like to have their students use the program more, but they needed precious class time for grammar drills and other preparation for state tests.

Paradox #2: Flawed scores are useful.

Although teachers often disagreed with the automated scores, they found them helpful for teaching. The only question in the My Access teacher survey that received a negative overall response related to the fairness of scoring. On a scale of 1 to 5, where 3 was neutral, the average response to “The numerical scores are usually fair and accurate” was 2.71. Nevertheless, six of the seven teachers said that the numerical scores helped their students improve their writing.

Several observations may help resolve this seeming paradox. First, students tended to be less skeptical of the scores than teachers. The average teacher response to “the numerical scores are usually fair and accurate” was 2.71 (Table 3). In contrast, the average student response to “The essay scores My Access gives are fair” was 3.44 (Table 3).

Second, speed of response is a strong motivator, no matter what the score. Interviews with teachers and students and student responses to open-ended survey questions all confirmed our observation that students were excited by scores that came back within a few seconds, whether or not they felt the grades were fair. Teachers discovered that the scores turned the frequently tedious process of essay assessment into a speedy cousin of computer games and TV game shows.
Students sometimes shouted with joy on receiving a high score, or moaned in mock agony over a low one.

Teachers may also have felt relieved to offload the blame for grades onto a machine. Thus teachers could play the role of students’ friend, coaching them to improve their scores, rather than the traditional adversarial role of teacher-as-examiner, ever vigilant of errors. Whatever the reasons, teachers actively encouraged students to score higher, often emphasizing scores per se instead of mastery of writing skills that may have been only loosely connected to scores.

Most teachers continued to grade essays manually, although perhaps more quickly than before. Several said they wanted to make sure the grades were fair. One said that My Editor overwhelmed students by flagging too many errors; she just highlighted a few of the more significant ones.

Discussion: The teachers using MY Access in our study were all in their first year of using any AES program. It remains to be seen if they gain confidence in the automated scoring with more experience, and rely more on the automated grades. A teacher who had three years’ experience with Criterion only spot-checked student essays, and emphasized that ETS encouraged low-stakes use of the program.

Paradox #3: Teachers valued AES for revision, but scheduled little time for it.

As Table 2 indicates, 72% of the seventh-grade essays in My Access were not revised at all – only 28% were revised after receiving a preliminary score and feedback. However, these figures are not reliable indicators of how much students would revise if given ample time to polish an essay and sufficient experience with the program. On one hand, the 28% figure may actually overstate the number of actual revisions for several reasons.
For example, teachers sometimes assigned an essay over portions of two or three class periods, so each sitting after the first will show up as a revision unless students save without submitting. Students can submit at any time, and can submit repeatedly in a single sitting, just to see if their score has gone up when they fix one spelling error. Sometimes they resubmit without making any changes at all, in which case the number of revisions overstates the actual editing.

On the other hand, the 28% figure might understate the amount of revising of routine essay assignments because teachers sometimes disallowed revisions, either for internal testing purposes (e.g. to compare writing skills at the beginning and end of the school year), or to simulate the timed writing conditions of standardized tests. One teacher wrote, “I feel that My Access puts the emphasis on revision. It is so wonderful to be able to have students revise and immediately find out if they improved.”

2. Students

We used several data collection methods for student attitudes. Our student survey contained both multiple-choice and open-ended questions. We asked students’ opinions in focus groups and informal conversations. We asked teachers and administrators about student attitudes, and we observed students using the programs.

No surprise: Students liked fast scores and feedback, even when they didn’t trust the scores and ignored the feedback.

Table 3 summarizes the responses of over 400 seventh-grade students to part of our survey on My Access. On the same scale, where 1 = “strongly disagree” and 5 = “strongly agree,” answers to seven of the eight questions averaged slightly above 3, or “neutral.” The average response to all eight questions indicated positive attitudes toward My Access. However, individual opinions varied widely.
A narrow majority of students regarded the automated scores as fair, but confidence in My Access scores varied widely. 51% of students agreed or strongly agreed with “The essay scores My Access gives are fair” (Table 3). A fifth grade teacher wrote, “I have had students who have turned in papers for their grade level, and they were incomplete, and they still received a 6! I think it lost some credibility with my students. If I use the middle school prompts it is better, but then I feel I am stepping on the toes of the sixth, seventh, and eighth grade teachers.”

Table 3: Student Views of My Access

Please indicate how much you agree or disagree with each of the following statements:

| Statement | Strongly disagree | Disagree | Neutral | Agree | Strongly agree | I don't know | Ave. |
|---|---|---|---|---|---|---|---|
| I find My Access easy to use. | 3% (11) | 7% (28) | 25% (108) | 47% (199) | 13% (57) | 5% (23) | 3.65 |
| I sometimes have trouble knowing how to use My Access. | 12% (51) | 42% (177) | 23% (98) | 18% (77) | 2% (8) | 3% (11) | 2.55 |
| I like writing with My Access. | 9% (38) | 15% (62) | 33% (140) | 29% (124) | 10% (43) | 4% (17) | 3.18 |
| I revise my writing more when I use My Access. | 5% (22) | 16% (67) | 26% (109) | 33% (139) | 14% (61) | 6% (27) | 3.38 |
| Writing with My Access has increased my confidence in my writing. | 6% (25) | 18% (77) | 30% (127) | 28% (120) | 12% (50) | 7% (28) | 3.23 |
| My Tutor has good suggestions for improving my writing. | 8% (34) | 11% (47) | 25% (107) | 26% (109) | 10% (41) | 20% (87) | 3.22 |
| The essay scores My Access gives are fair. | 5% (22) | 12% (52) | 26% (109) | 39% (167) | 12% (53) | 6% (24) | 3.44 |
| My Access helps improve my writing. | 6% (24) | 10% (41) | 22% (92) | 38% (160) | 18% (75) | 8% (32) | 3.56 |

Responses of 428 seventh-grade students to the My Access portion of a survey on a 1:1 laptop program. The two schools are the same as in Table 2, and most of the students are the same.
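The row averages in Tables 1 and 3 can be checked from the response counts. The sketch below assumes the weighting stated under Table 1 (1 = strongly disagree through 5 = strongly agree) and assumes that “I don't know” responses are left out of the average; the paper does not state the second point, but it is the treatment under which the two rows used here match the printed averages. The function name `likert_mean` is introduced only for this illustration.

```python
from typing import Sequence


def likert_mean(counts: Sequence[int]) -> float:
    """Weighted average of a 5-point Likert row.

    counts holds the number of responses for scores 1..5, in order from
    "strongly disagree" to "strongly agree". "I don't know" responses
    are assumed to be excluded before calling this function.
    """
    total = sum(counts)
    if total == 0:
        raise ValueError("no scored responses in this row")
    return sum(score * n for score, n in enumerate(counts, start=1)) / total


# Counts taken from Table 3, omitting the "I don't know" column.
easy_to_use = [11, 28, 108, 199, 57]   # "I find My Access easy to use."
fair_scores = [22, 52, 109, 167, 53]   # "The essay scores My Access gives are fair."

print(round(likert_mean(easy_to_use), 2))  # 3.65, as printed in Table 3
print(round(likert_mean(fair_scores), 2))  # 3.44, as printed in Table 3
```

The same function applied to a Table 1 row, for example `[0, 1, 0, 5, 1]` for the My Editor statement, gives 3.86.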
Our survey asked students to complete two sentences: “The best thing about My Access is ....” and “The worst thing about My Access is ....” Approximately equal numbers of students mentioned the scores in a positive light as in a negative one. The most common unprompted answer to “the worst thing ...” was unfair scores (15.3% of responses); the second most common answer to “the best thing ...” was the scores (16.2% of responses). Discussions with students corroborated this antinomy.

In general, the speed of scoring and feedback appeared to be more important than the accuracy. Many students were not bothered by anomalies in the scoring. Some compared AES with video games; speed of response seemed to matter more than fairness, at least when the stakes were low. Unlike on a high-stakes test, they could ask the teacher to override a low score they considered unfair.

The two AES programs we studied were least capable of scoring short or highly innovative essays. Novice writers who struggled to produce a few lines sometimes received zeroes. Their essays were ungradable because they were too short, failed to use periods between sentences, or did not conform to the expected formulaic structure.

Student surveys, interviews, and observations confirmed the motivating power of quick numeric scores. Given the low-stakes nature of the automated scores, and the teachers’ willingness to override them, students had little to lose from an unfairly low grade. We asked a focus group of 7th graders what they would do if they wrote an essay they thought was worth a 6 and it received a 2, and they unanimously chimed back that they would tell the teacher, and she would fix it.
Unsurprisingly, their strategy was not symmetrical for scores that were too high; when we asked them what they would do if they wrote an essay they thought only deserved a 2 and it got a 6, they all said they would keep the score, but wouldn’t reveal the grading aberration to the teacher.

Discussion: Diverse schools of psychology are in substantial agreement with the common sense notion that clear, proximal goals are ceteris paribus stronger motivators than ambiguous, distal ones (summarized in Bandura, 1985). Several teachers compared AES to video games in terms of students’ motivation to improve their scores.

No surprise: Students’ revisions were superficial.

As Table 2 indicates, 72% of the My Access essays were not revised at all after the first submission. As indicated above, the seemingly low level of revision may be due to lack of time. It is consistent with findings, such as Janet Emig’s classic study of the writing habits of twelfth-graders, that students are generally disinclined to revise school-sponsored writing (Emig, 1971).

The average student response to “I revise my writing more when I use My Access” was 3.38 (Table 3). This is in line with our finding that students generally revise more when using laptops (Warschauer, in press; Warschauer et al., 2005).

However, it was clear that most students in our study conceived of revising as merely correcting errors in mechanics, especially spelling. Examination of ten essays that were revised revealed none that had been revised for content or organization. Except for one essay in which a sentence was added (repeating what had already been said), all of the revisions maintained the previous content and sentence structure. Changes were limited to single words and simple phrases, and the original meaning remained intact. Most changes appeared to be in response to the automated error feedback.
Discussion: The above observations about superficial editing apply to seventh grade and below in our study. It is well recognized in the composition community that such young writers are less able than older ones to view their own work critically, a prerequisite for in-depth revision (Bereiter et al., 1987).

In general, students revise more when writing on computers than on paper (Warschauer, in press, p. 98). Hence AES revision frequency should be compared with writing on a word processor, rather than on paper.

Frequency of revision also varies with audience (Butler-Nalin, 1984). Writing for the teacher-as-examiner (as in the stereotypical classroom essay) elicits the fewest revisions; writing for authentic audiences, especially those outside of school, elicits the most. Hence the artificial audience of AES, even when supplemented by a teacher in the role of examiner, would not be expected to inspire students to revise deeply.

Paradox: Students treat machine assessment as almost human.

Two teachers noted that students treated AES programs like a human reviewer in that they do not like to turn in less than their best effort. We observed a high school literature class that lent confirmation to their observation. An unexpected interruption forced the teacher to stop the students in mid-essay. She asked them to submit their incomplete essays so that a group of visiting teachers could see the Criterion feedback. The students objected that they would get low scores because they were not finished; they wanted to save their essays and exit instead of submitting them for a score, even though the scores wouldn’t count toward their class grade. Seventh-graders in two focus groups also said they did not want to submit an incomplete essay, even though it did not count toward a class grade.
Thus students often appear to treat numeric scores as personal evaluations, even when their essays are incomplete. They cheered high scores and bemoaned low ones, but treated them with more levity than grades from their teacher.

A high school principal who had approximately six years’ experience supervising teachers with Criterion said that students viewed their work more critically as they wrote, editing before they submitted. She suggested that mere anticipation of automated feedback or scores may affect the writing process. If this hypothesis is valid, the number of revisions reported in Table 2 will understate the amount of revision. Tracking the evolution of a text reveals only the visible product of revision, not mental revisions that take place before the writer puts them on paper or computer (Witte, 1987). "It makes little psychological sense to treat changing a sentence after it is written down as a different process from changing it before it is written" (Scardamalia et al., 1986, p. 783).

No surprise: AES did not noticeably affect scores on standardized writing tests

Scores on the Language Arts portion of the California State Tests for 2005 for seventh-graders at our two 1:1 schools using AES were not significantly different from their peers at other schools in the same district (Warschauer & Grimes, 2005). Given the limited use of AES in the two focus schools, it would be unrealistic to expect improvement in standardized tests so soon.

3. Social context

SES did not appear to affect revision patterns or attitudes toward AES.

The two schools we studied most intensively had recently introduced 1:1 laptop programs, where all 7th-graders had personal laptops they used in most of their classes. One school was high-SES, dominated by students who spoke English or Korean at home.
The other school was low-SES; two-thirds of the students were Latinos, and native Spanish speakers slightly outnumbered native English speakers.

Language arts teachers in the high-SES school assigned more writing in My Access than in the low-SES school, but revision patterns were substantially the same (Table 2).

Table 4 breaks down the attitude averages in Table 3 by school. The two schools are essentially indistinguishable in terms of average responses. The differences are a tenth of a point or less in all cases except one (“My Tutor has good suggestions for improving my writing”), which differed by about two-tenths. The similarity in survey responses was surprising, given the substantial difference in demographics, disciplinary problems, standardized test scores, and general enthusiasm for learning in the two student groups.

Table 4: Student Attitudes toward My Access in a High-SES and a Low-SES School

Please indicate how much you agree or disagree with each of the following statements:

| Statement | Low-SES (N = 347) | High-SES (N = 163) |
|---|---|---|
| I find My Access easy to use. | 3.65 | 3.69 |
| I sometimes have trouble knowing how to use My Access. | 2.54 | 2.59 |
| I like writing with My Access. | 3.17 | 3.21 |
| I revise my writing more when I use My Access. | 3.37 | 3.42 |
| Writing with My Access has increased my confidence in my writing. | 3.26 | 3.22 |
| My Tutor has good suggestions for improving my writing. | 3.20 | 3.42 |
| The essay scores My Access gives are fair. | 3.45 | 3.35 |
| My Access helps improve my writing. | 3.57 | 3.50 |

The students are mostly the same seventh-graders as in Table 3, plus some upper elementary students.

Teachers in both schools said that MY Access allowed them to assign more writing. Teachers in the high-SES school were more comfortable assigning homework in My Access because they were confident students had Internet access at home. The number of teachers is not large enough to estimate statistical significance in any of the quantitative comparisons between schools.

Two teachers using Criterion in other schools said students occasionally requested permission to write extra essays, in addition to regular homework – a rare and pleasant surprise for teachers accustomed to students complaining about homework.

AES does not appear to favor particular categories of middle-school learners.

Teachers also said that it assisted writing development for diverse categories of students -- English Language Learners, gifted, special education, at-risk, and students with no special needs (Table 5).

Table 5: Teachers’ View of My Access for Diverse Groups of Learners

For each category of students, please indicate how much My Access assists their writing development:

| Category of students | Neutral | Slightly Positive | Very Positive -- it really helps writing | N/A | Response Average |
|---|---|---|---|---|---|
| English Language Learners | 0% (0) | 67% (4) | 17% (1) | 17% (1) | 4.20 |
| Special Education | 0% (0) | 33% (2) | 50% (3) | 17% (1) | 4.60 |
| Gifted | 0% (0) | 43% (3) | 29% (2) | 29% (2) | 4.40 |
| At-risk | 17% (1) | 33% (2) | 33% (2) | 17% (1) | 4.20 |
| General students -- no special needs | 0% (0) | 33% (2) | 50% (3) | 17% (1) | 4.60 |

The same seven teachers responded as in Tables 1 and 3 above. Two more columns, “Very negative – it really hinders learning” and “Slightly negative” were full of zeroes and have been suppressed to save space.

A high school teacher and principal said that Criterion was useful for average and below-average high school students, especially in ninth and tenth grades. However, older students, especially the skilled writers in Advanced Placement classes, quickly learned to spoof the scoring system.
They needed feedback on style, and Criterion’s error feedback, oriented toward correcting the mechanics of Standard American English, was of less use to them than to students still struggling with conventions of grammar, punctuation, and spelling.

Discussion: The larger social context of high-stakes testing and the prior practices of individual teachers appeared to shape classroom use of AES more than the racial, ethnic, or economic status of the students.

It appears that AES is most useful for students who are neither too young and unskilled as writers, nor too skilled. Fourth-graders in California are expected to understand four simple genres and basic punctuation, two skills which happen to be needed for AES programs to score an essay (California Department of Education Standards and Assessment Division, 2002). Students with these skills and the ability to type roughly as fast as they write by hand could be expected to use AES. Older writers may become sufficiently skilled to outgrow AES, perhaps in high school, when they master the basic genres and mechanics of writing and develop their own writing styles, or if they write best when not conforming to the formulaic organization expected by the programs. Skilled writers, it seems, are more likely to be critical of the scores and the feedback.

A study of contrasts: two teachers, two schools, two AES programs, and two styles of teaching

In order to give a sense of the diverse ways in which we saw AES being used, we offer case studies of two seventh-grade teachers in two schools with highly contrasting demographics. The first school is two-thirds Latino, with slightly below-average API (Academic Performance Index) scores compared to similar schools and almost 60% of the students in a free or reduced-price lunch program; the second is a California blue-ribbon school with mostly white and Asian students in a high-income neighborhood.
The first is the low-SES school in Table 2; however, the second is not the high-SES school in that table.

All of the seventh-graders in the first school participated in a 1:1 laptop program, funded by an Enhancing Education Through Technology (EETT) grant. The teacher in our case study taught courses principally composed of Latino English language learners. She said that some of her students had never written a whole paragraph before they entered seventh grade. Their first attempts to write essays on My Access resulted in low scores (mostly 1’s and 2’s on a 6-point scale), but they quickly improved. Having laptops with a wireless Internet connection allowed them to do research related to the AES prompts. For example, before writing to a prompt about a poem by Emily Dickinson, they researched and answered questions on the author’s life and times.

Her students struggled with keyboarding skills when they first received their laptops. Most wrote faster by hand than on computer, at least in their first few months with laptops. Deficient keyboarding skills only compounded these novice writers’ reticence. To facilitate the flow of words, the teacher asked them to write first by hand and then copy their text to the computer.

In contrast, a teacher in the second school had the enviable position of more attentive students with better training in typing and computer use. For many years she had given her students one thirty-minute timed essay per week. Before introducing Criterion three years ago, she spent all day either Saturday or Sunday each weekend grading the essays of over 150 students. She still schedules one thirty-minute timed essay per week, with ten minutes of pre-writing beforehand, alternating weekly between Criterion and a word processor. Criterion saves her a few hours compared with the word-processed essays. She said:

I have 157 students and I need a break.... I cannot get through these essays and give the kids feedback. One of the things I’ve learned over the years is it doesn’t matter if they get a lot of feedback from me, they just need to write. The more they write, the better they do.

She added that students are excited about Criterion. “They groan when they have to write an essay by hand. They’d much rather write for Criterion.”

These two cases present many contrasts aside from demographics:

• Many students in the first school have little typing experience prior to entering seventh grade. The second school is in a school district that attempts to train all students to type at least thirty words a minute by the time they finish sixth grade. Consequently, most seventh graders have the keyboarding skills needed for fluid computer use.

• The narrative feedback from My Access, used in the first school, was extremely verbose and totally generic (not adapted to each essay). In contrast, the narrative feedback from Criterion, used in the second school, was concise and specific to each essay. (My Access did allow teachers to select a more basic level of narrative feedback, but we did not see any teachers use it. My Tutor, the narrative feedback function in My Access, is now much simpler than at the time of our study.)

• The teacher in the second school writes many of her own prompts for Criterion, whereas the teacher in the first school uses only prompts provided by My Access. (Both programs allow teachers to create their own prompts, but the programs can only score essays written to prompts that have a corpus of several hundred human-graded essays in the database.)

• Teachers in the first school count themselves lucky if half their students do any homework; in the second school the teacher can count on most students doing about three hours of homework a week.
In both cases, most of the writing is done at school, but for very different reasons. In the low-SES school, parents are less involved, and many students would not write at all if their homework were their only writing practice. In the high-SES school, the teacher complained that she used to assign writing homework, and the parents became too involved: “I got tired of grading the parents’ homework!” She stopped assigning written homework.

In other respects the two teachers and their schools are similar. In both cases, the teachers teach the five-paragraph formula. Both teachers take a disciplined and structured approach to instruction, but the seventh graders in the high-SES school write far more copiously and coherently than do their ELL peers in the low-SES school.

Both teachers are accustomed to technical problems, such as cranky hardware, students who have trouble logging in or saving their work, or students who don’t understand how word-wrapping works. (Teachers have to instruct students not to insert manual line feeds, because both My Access and Criterion interpret manual line feeds as paragraph delimiters, which can make an essay unscorable.) Both teachers responded to the technical problems gracefully, calling on spare hardware or technical support as needed.

Both felt strongly that computer experience is an essential part of twenty-first century education, and that AES helps students gain both writing experience and computer experience. In both cases, AES relieved the teacher of some of the burden of corrections, comments, and grades.

Conclusions

In summary, the chief benefits of AES in the classroom include increased motivation for students and easier classroom management for teachers. There is also some evidence of increased writing practice and increased revision.
The chief drawbacks are that students who are already challenged by writing, typing, and computers may be further stymied, and that computer hardware and technical support, unless already available, require substantial investment. It is also possible that AES may be misused to reinforce artificial, mechanistic, and formulaic writing disconnected from genuine communication.

More research is merited on the amount of writing and revision, especially as teachers grow in familiarity with the programs and students become more accustomed to writing on computers. However, we do not expect teachers to substantively change their instructional practice, such as relating writing to students’ daily lives, encouraging deeper revision, or addressing authentic audiences, without professional training and major changes in the educational environment.

We expect that AES will continue to grow in popularity. We hope there is parallel growth in the quality of feedback, especially feedback on syntax, and in the ability of these programs to assess diverse writing styles and structures. Perhaps more important, we hope to see professional development of teachers and new forms of assessment, so that writing instruction can be freed from reductionist formulae and become a tool for authentic communication, not just a tool for acing tests. The effects of any tool depend on its use:

The main point to bear in mind is that such automated systems do not replace good teaching but should instead be used to support it. This is particularly so with the instruction of at-risk learners, who may lack the requisite language and literacy skills to make effective use of automated feedback. Students must learn to write for a variety of audiences, including not only computer scoring engines, but peers, teachers, and interlocutors outside the classroom, and must also learn to accept and respond to feedback from diverse readers.
(Warschauer, in press, n.p.)

References

Attali, Y. (2004, April 12-16). Exploring the feedback and revision features of Criterion. Paper presented at the National Council on Measurement in Education (NCME), San Diego, CA.

Bandura, A. (1985). Social foundations of thought and action: A social cognitive theory. Prentice Hall.

Bereiter, C., & Scardamalia, M. (1987). The psychology of written composition. Hillsdale, NJ: Lawrence Erlbaum Associates.

Burstein, J. (2003). The e-rater scoring engine: Automated essay scoring with natural language processing. In M. D. Shermis & J. Burstein (Eds.), Automated essay scoring: A cross-disciplinary perspective (chap. 7, pp. 113-121). Mahwah, NJ: Lawrence Erlbaum Associates.

Burstein, J., & Marcu, D. (2003). Automated evaluation of discourse structure in student essays. In M. D. Shermis & J. Burstein (Eds.), Automated essay scoring: A cross-disciplinary perspective (chap. 13). Mahwah, NJ: Lawrence Erlbaum Associates.

Butler-Nalin, K. (1984). Revising patterns in students' writing. In A. N. Applebee (Ed.), Contexts for learning to write (pp. 121-133). Ablex Publishing Co.

California Department of Education Standards and Assessment Division. (2002). Teacher guide for the California writing standards tests at grades 4 and 7. California Department of Education.

CCCC Executive Committee. (1996). Writing assessment: A position statement. NCTE.

Cohen, Y., Ben-Simon, A., & Hovav, M. (2003, October). The effect of specific language features on the complexity of systems for automated essay scoring. Paper presented at the 29th Annual Conference of the International Association for Educational Assessment, Manchester, UK.

Dreyfus, H. L. (1991). What computers still can't do: A critique of artificial reason. Cambridge, MA: MIT Press.

Elliot, S. M., & Mikulas, C. (2004, April 12-16). The impact of MY Access!™ use on student writing performance: A technology overview and four studies. Paper presented at the Annual Meeting of the American Educational Research Association, San Diego, CA.

Emig, J. (1971). The composing process of twelfth graders. Urbana, IL: National Council of Teachers of English.

Hamp-Lyons, L. (2005). Book review: Mark D. Shermis, Jill C. Burstein (Eds.), Automated essay scoring. Assessing Writing, 9, 262-265.

Kohut, G. F., & Gorman, K. J. (1995). The effectiveness of leading grammar/style software packages in analyzing business students' writing. Journal of Business and Technical Communication, 9(3), 341-361.

Landauer, T. K., Laham, D., & Foltz, P. W. (2003). Automated scoring and annotation of essays with the Intelligent Essay Assessor. In M. D. Shermis & J. Burstein (Eds.), Automated essay scoring: A cross-disciplinary perspective (pp. 87-112). Mahwah, NJ: Lawrence Erlbaum Associates.

MacDonald, N. H., Frase, L. T., Gingrich, P. S., & Keenan, S. A. (1982). The Writer's Workbench: Computer aids for text analysis. IEEE Transactions on Communications, 30, 105-110.

Marshall, R., & Tucker, M. (1992). Thinking for a living: Work, skills, and the future of the American economy. New York: Basic Books.

National Commission on Excellence in Education. (1983). A nation at risk. Retrieved from www.ed.gov/pubs/NatAtRisk/risk.html

National Commission on Writing in America's Schools and Colleges. (2003). The neglected "R": The need for a writing revolution.

Page, E. (1967). Statistical and linguistic strategies in the computer grading of essays. Paper presented at the 1967 International Conference on Computational Linguistics.

Scardamalia, M., & Bereiter, C. (1986). Research on written composition. In C. Wittrock (Ed.), Handbook of research on teaching (3rd ed., pp. 778-803). New York, NY: Macmillan.

Shermis, M. D., Burstein, J. C., & Bliss, L. (2004, April). The impact of automated essay scoring on high stakes writing assessments. Paper presented at the Annual Meetings of the National Council on Measurement in Education, San Diego, CA.

Shermis, M. D., Mzumara, H. R., Olson, J., & Harrington, S. (2001). On-line grading of student essays: PEG goes on the World Wide Web. Assessment & Evaluation in Higher Education, 26(3), 247-259.

Stanford NLP Group. (2005). Stanford parser. Retrieved 12/1/2005, from http://nlp.stanford.edu/software/lex-parser.shtml

Warschauer, M. (in press). Laptops & literacy. Teachers College Press.

Warschauer, M., & Grimes, D. (2005). First year evaluation report: Fullerton School District laptop program.

Zuboff, S. (1989). In the age of the smart machine: The future of work and power. Basic Books.