Contingency Contracting Officer Proficiency Assessment Test

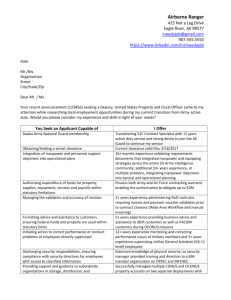
