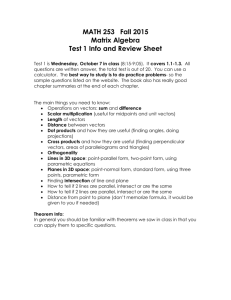

Linear Algebra Review and Matlab Tutorial


Background Material

A computer vision "encyclopedia": CVonline.

http://homepages.inf.ed.ac.uk/rbf/CVonline/

Linear Algebra Review and Matlab Tutorial

Linear Algebra:

• Eero Simoncelli, “A Geometric View of Linear Algebra”

http://www.cns.nyu.edu/~eero/NOTES/geomLinAlg.pdf

• Jim Hefferon, online introductory linear algebra book

http://joshua.smcvt.edu/linearalgebra/

• Michael Jordan, a slightly more in-depth linear algebra review

http://www.cs.brown.edu/courses/cs143/Materials/linalg_jordan_86.pdf

Assigned Reading:

Eero Simoncelli, “A Geometric View of Linear Algebra”

http://www.cns.nyu.edu/~eero/NOTES/geomLinAlg.pdf

Overview

• Vectors in R^2
• Scalar product
• Outer product
• Bases and transformations
• Inverse transformations
• Eigendecomposition
• Singular Value Decomposition

Notation

Standard math textbook notation:

• Scalars are italic Times Roman: $n$, $N$
• Vectors are bold lowercase: $\mathbf{x}$
• Row vectors are denoted with a transpose: $\mathbf{x}^T$
• Matrices are bold uppercase: $\mathbf{M}$
• Tensors are calligraphic letters: $\mathcal{T}$

Warm-up: Vectors in R^n

We can think of vectors in two ways:

• as points in a multidimensional space with respect to some coordinate system
• as a translation of a point in a multidimensional space, e.g., a translation of the origin (0,0)

Vectors in R^n

Notation:

$$\mathbf{x} = [x_1, x_2, \ldots, x_n]^T$$

Length of a vector:

$$\|\mathbf{x}\| = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2} = \sqrt{\sum_{i=1}^{n} x_i^2}$$
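As a small illustration of the length formula, here is a sketch in plain Python (standard library only). The function name `norm` and the sample vector are assumptions made for this example; they do not come from the slides.

```python
import math

def norm(x):
    """Euclidean length of a vector given as a list of numbers."""
    return math.sqrt(sum(xi * xi for xi in x))

x = [3.0, 4.0]
length = norm(x)
print(length)  # 5.0
```

The same function works for any dimension, since it only sums the squared components.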

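Dot product or scalar product

The dot product takes two vectors of the same dimension and returns a scalar.

Example:

$$\mathbf{x} \cdot \mathbf{y} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \cdot \begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = x_1 y_1 + x_2 y_2 = s$$

Geometrically, it is the projection of one vector onto the other, scaled by the length of the vector projected onto:

$$\mathbf{x} \cdot \mathbf{y} = \|\mathbf{x}\| \, \|\mathbf{y}\| \cos\theta$$

[Figure: two vectors separated by the angle θ, with the projection x·y marked.]

Scalar Product

Notation:

$$\langle \mathbf{x}, \mathbf{y} \rangle = \mathbf{x} \cdot \mathbf{y} = \mathbf{x}^T \mathbf{y} = \begin{bmatrix} x_1 & \cdots & x_n \end{bmatrix} \begin{bmatrix} y_1 \\ \vdots \\ y_n \end{bmatrix}$$

We will use the last two notations, $\mathbf{x} \cdot \mathbf{y}$ and $\mathbf{x}^T \mathbf{y}$, to denote the dot product.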
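The two views of the dot product (sum of component products, and lengths times the cosine of the angle) can be checked against each other. The sketch below is plain Python; the helper names `dot` and `angle` and the sample vectors are assumptions made for illustration.

```python
import math

def dot(x, y):
    """Scalar product: sum of products of corresponding components."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same dimension")
    return sum(xi * yi for xi, yi in zip(x, y))

def angle(x, y):
    """Angle between x and y, solved from x . y = |x| |y| cos(theta)."""
    cos_theta = dot(x, y) / (math.sqrt(dot(x, x)) * math.sqrt(dot(y, y)))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))

x = [1.0, 0.0]
y = [1.0, 1.0]
s = dot(x, y)        # 1*1 + 0*1
theta = angle(x, y)  # should be close to pi/4 (45 degrees)
```

Note that `angle` is undefined for a zero vector, because the formula divides by both lengths.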

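Scalar Product

Commutative:

$$\mathbf{x} \cdot \mathbf{y} = \mathbf{y} \cdot \mathbf{x}$$

Distributive:

$$(\mathbf{x} + \mathbf{y}) \cdot \mathbf{z} = \mathbf{x} \cdot \mathbf{z} + \mathbf{y} \cdot \mathbf{z}$$

Linearity:

$$(c\mathbf{x}) \cdot \mathbf{y} = c(\mathbf{x} \cdot \mathbf{y})$$

$$\mathbf{x} \cdot (c\mathbf{y}) = c(\mathbf{x} \cdot \mathbf{y})$$

$$(c_1 \mathbf{x}) \cdot (c_2 \mathbf{y}) = (c_1 c_2)(\mathbf{x} \cdot \mathbf{y})$$

Non-negativity:

$$\mathbf{x} \cdot \mathbf{x} \ge 0, \qquad \mathbf{x} \cdot \mathbf{x} = 0 \Leftrightarrow \mathbf{x} = \mathbf{0}$$

Orthogonality:

$$\forall \mathbf{x} \ne \mathbf{0},\ \mathbf{y} \ne \mathbf{0}: \quad \mathbf{x} \cdot \mathbf{y} = 0 \Leftrightarrow \mathbf{x} \perp \mathbf{y}$$

Norms in R^n

Euclidean norm (sometimes called 2-norm):

$$\|\mathbf{x}\| = \|\mathbf{x}\|_2 = \sqrt{\mathbf{x} \cdot \mathbf{x}} = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2} = \sqrt{\sum_{i=1}^{n} x_i^2}$$

The length of a vector is defined to be its (Euclidean) norm.

A unit vector is of length 1.

Non-negativity properties also hold for the norm:

$$\|\mathbf{x}\| \ge 0, \qquad \|\mathbf{x}\| = 0 \Leftrightarrow \mathbf{x} = \mathbf{0}$$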
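A short plain-Python sketch of the norm and orthogonality ideas: scaling a vector to unit length and testing whether two vectors are orthogonal. The helper names and the tolerance `tol` are assumptions chosen for this example, not part of the slides.

```python
import math

def dot(x, y):
    """Scalar product of two equal-length vectors."""
    return sum(xi * yi for xi, yi in zip(x, y))

def norm(x):
    """Euclidean norm, defined through the dot product."""
    return math.sqrt(dot(x, x))

def normalize(x):
    """Scale a nonzero vector so that its length becomes 1."""
    n = norm(x)
    if n == 0:
        raise ValueError("the zero vector cannot be normalized")
    return [xi / n for xi in x]

def is_orthogonal(x, y, tol=1e-12):
    """True when the dot product vanishes up to a small tolerance."""
    return abs(dot(x, y)) <= tol

u = normalize([3.0, 4.0])             # approximately [0.6, 0.8]
perp = is_orthogonal([1, 2], [-2, 1])  # 1*(-2) + 2*1 = 0
```

The tolerance matters only for floating-point inputs; with integer components the dot product of orthogonal vectors is exactly zero.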

Bases and Transformations

We will look at:

• Linear Independence
• Bases
• Orthogonality
• Change of basis (Linear Transformation)
• Matrices and Matrix Operations

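Linear Dependence

A linear combination of vectors $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_n$:

$$c_1 \mathbf{x}_1 + c_2 \mathbf{x}_2 + \cdots + c_n \mathbf{x}_n$$

A set of vectors $X = \{\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_n\}$ is linearly dependent if there exists a vector $\mathbf{x}_i \in X$ that is a linear combination of the rest of the vectors.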


Bases (Examples in R2)

Linear Dependence

In R^n:

• sets of n+1 vectors are always dependent
• there can be at most n linearly independent vectors
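One way to test a set of vectors for linear dependence is to count how many of them are independent and compare that with the size of the set. The sketch below does this with Gaussian elimination in plain Python, using exact fractions to avoid rounding issues. The function names are assumptions for this example, and elimination is a technique chosen here for illustration; the slides do not prescribe a method.

```python
from fractions import Fraction

def rank(vectors):
    """Number of linearly independent vectors, via Gaussian elimination."""
    rows = [[Fraction(v) for v in vec] for vec in vectors]
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivot rows found so far
    for col in range(n_cols):
        # Look for a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from every row below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def is_linearly_dependent(vectors):
    """A set is dependent when it has fewer independent vectors than members."""
    return rank(vectors) < len(vectors)

two_in_r2 = is_linearly_dependent([[1, 0], [0, 1]])            # independent pair
three_in_r2 = is_linearly_dependent([[1, 0], [0, 1], [2, 3]])  # n+1 vectors in R^2
```

Since the rank can never exceed the number of components n, any set of n+1 vectors in R^n is reported as dependent, matching the statement above.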

Bases

A basis is a linearly independent set of vectors that spans the “whole space”, i.e., we can write every vector in our space as a linear combination of vectors in that set.

Every set of n linearly independent vectors in R^n is a basis of R^n.

A basis is called

• orthogonal, if every basis vector is orthogonal to all other basis vectors
• orthonormal, if additionally all basis vectors have length 1.

The standard basis in R^n is made up of a set of unit vectors:

$$\hat{\mathbf{e}}_1, \hat{\mathbf{e}}_2, \ldots, \hat{\mathbf{e}}_n$$

We can write a vector in terms of its standard basis:

$$\mathbf{x} = x_1 \hat{\mathbf{e}}_1 + x_2 \hat{\mathbf{e}}_2 + \cdots + x_n \hat{\mathbf{e}}_n$$

Observation: to find the coefficient for a particular basis vector, we project our vector onto it.

$$x_i = \hat{\mathbf{e}}_i \cdot \mathbf{x}$$
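The projection rule for coefficients can be tried out directly. In this plain-Python sketch, `standard_basis` and the sample vector are assumptions made for illustration: each coefficient is recovered by a dot product with a basis vector, and the vector is then rebuilt as the weighted sum of basis vectors.

```python
def dot(x, y):
    """Scalar product of two equal-length vectors."""
    return sum(xi * yi for xi, yi in zip(x, y))

def standard_basis(n):
    """The n standard basis vectors of R^n, each with a single 1."""
    return [[1.0 if j == i else 0.0 for j in range(n)] for i in range(n)]

x = [2.0, -1.0, 5.0]
basis = standard_basis(len(x))

# Coefficient for basis vector i is the projection of x onto it.
coeffs = [dot(e, x) for e in basis]

# Rebuild x as the sum of basis vectors weighted by their coefficients.
rebuilt = [sum(c * e[j] for c, e in zip(coeffs, basis)) for j in range(len(x))]
```

For the standard basis the coefficients are just the components of the vector, which is why `coeffs` and `rebuilt` both reproduce `x`.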

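Change of basis

Suppose we have a new basis $B = [\mathbf{b}_1 \cdots \mathbf{b}_n]$, $\mathbf{b}_i \in R^m$, and a vector $\mathbf{x} \in R^m$ that we would like to represent in terms of B.

[Figure: a vector x shown with its components $\tilde{x}_1$ and $\tilde{x}_2$ along the new basis vectors b1 and b2.]

Compute the new components:

$$\tilde{\mathbf{x}} = B^{-1} \mathbf{x}$$

When B is orthonormal:

$$\tilde{\mathbf{x}} = \begin{bmatrix} \mathbf{b}_1^T \mathbf{x} \\ \vdots \\ \mathbf{b}_n^T \mathbf{x} \end{bmatrix}$$

• $\tilde{x}_i$ is a projection of $\mathbf{x}$ onto $\mathbf{b}_i$
• Note the use of a dot product

Outer Product

$$\mathbf{x} \circ \mathbf{y} = \mathbf{x} \mathbf{y}^T = \begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix} \begin{bmatrix} y_1 & \cdots & y_m \end{bmatrix} = M$$

A matrix M that is the outer product of two vectors is a matrix of rank 1.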
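Both ideas from this part, the change of basis for an orthonormal B and the outer product, fit in a few lines of plain Python. The basis used here (the standard basis of R^2 rotated by 45 degrees) and all names are assumptions picked for the example.

```python
import math

def dot(x, y):
    """Scalar product of two equal-length vectors."""
    return sum(xi * yi for xi, yi in zip(x, y))

def outer(x, y):
    """Outer product x y^T, returned as a list of rows."""
    return [[xi * yj for yj in y] for xi in x]

# Assumed orthonormal basis: the standard basis of R^2 rotated by 45 degrees.
c = math.sqrt(0.5)
b1 = [c, c]
b2 = [-c, c]

x = [2.0, 0.0]

# With an orthonormal basis, each new component is a single dot product.
x_tilde = [dot(b1, x), dot(b2, x)]

# Going back: x is the sum of basis vectors weighted by the new components.
x_back = [x_tilde[0] * b1[j] + x_tilde[1] * b2[j] for j in range(2)]

# Outer product of a 3-vector and a 2-vector gives a 3 x 2 matrix.
M = outer([1.0, 2.0, 3.0], [4.0, 5.0])
print(M)  # [[4.0, 5.0], [8.0, 10.0], [12.0, 15.0]]
```

Every row of `M` is a multiple of the second vector, which is what rank 1 means in practice. For a basis that is not orthonormal, the dot-product shortcut no longer applies and the inverse of B is needed instead.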

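Matrix Multiplication – dot product

Matrix multiplication can be expressed using dot products. Here $\mathbf{b}_i^T$ is the i-th row of B and $\mathbf{a}_j$ is the j-th column of A:

$$BA = \begin{bmatrix} \mathbf{b}_1^T \\ \vdots \\ \mathbf{b}_m^T \end{bmatrix} \begin{bmatrix} \mathbf{a}_1 & \cdots & \mathbf{a}_n \end{bmatrix} = \begin{bmatrix} \mathbf{b}_1 \cdot \mathbf{a}_1 & \cdots & \mathbf{b}_1 \cdot \mathbf{a}_n \\ \vdots & \ddots & \vdots \\ \mathbf{b}_m \cdot \mathbf{a}_1 & \cdots & \mathbf{b}_m \cdot \mathbf{a}_n \end{bmatrix}$$

Matrix Multiplication – outer product

Matrix multiplication can be expressed using a sum of outer products. Here $\mathbf{b}_i$ is the i-th column of B and $\mathbf{a}_i^T$ is the i-th row of A:

$$BA = \begin{bmatrix} \mathbf{b}_1 & \cdots & \mathbf{b}_n \end{bmatrix} \begin{bmatrix} \mathbf{a}_1^T \\ \vdots \\ \mathbf{a}_n^T \end{bmatrix} = \mathbf{b}_1 \mathbf{a}_1^T + \mathbf{b}_2 \mathbf{a}_2^T + \cdots + \mathbf{b}_n \mathbf{a}_n^T = \sum_{i=1}^{n} \mathbf{b}_i \circ \mathbf{a}_i$$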
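To see that the two views of matrix multiplication agree, the following plain-Python sketch computes the product BA both ways. Matrices are stored as lists of rows; the function names and the sample matrices are assumptions for this example.

```python
def dot(x, y):
    """Scalar product of two equal-length vectors."""
    return sum(xi * yi for xi, yi in zip(x, y))

def outer(x, y):
    """Outer product x y^T, returned as a list of rows."""
    return [[xi * yj for yj in y] for xi in x]

def matmul_dot(B, A):
    """Entry (i, j) is the dot product of row i of B with column j of A."""
    cols_A = list(zip(*A))
    return [[dot(row, col) for col in cols_A] for row in B]

def matmul_outer(B, A):
    """Sum over k of (column k of B) outer (row k of A)."""
    cols_B = list(zip(*B))
    result = [[0] * len(A[0]) for _ in B]
    for b_k, a_k in zip(cols_B, A):
        term = outer(b_k, a_k)
        result = [[r + t for r, t in zip(r_row, t_row)]
                  for r_row, t_row in zip(result, term)]
    return result

B = [[1, 2], [3, 4], [5, 6]]   # 3 x 2
A = [[7, 8, 9], [10, 11, 12]]  # 2 x 3

by_dot = matmul_dot(B, A)
by_outer = matmul_outer(B, A)
print(by_dot)  # [[27, 30, 33], [61, 68, 75], [95, 106, 117]]
```

Each term in `matmul_outer` is a rank-1 matrix, so the product of a 3 x 2 and a 2 x 3 matrix is built here from exactly two rank-1 pieces.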

Rank of a Matrix

Matrix Inverse

Singular Value Decomposition:

$$D = U S V^T$$

[Figure: block diagram of D = U S V^T.]

A matrix $D \in R^{I_1 \times I_2}$ has a column space and a row space.

SVD orthogonalizes these spaces and decomposes D:

$$D = U S V^T$$

(U contains the left singular vectors/eigenvectors)

(V contains the right singular vectors/eigenvectors)

Rewrite as a sum of a minimum number of rank-1 matrices:

$$D = \sum_{r=1}^{R} \sigma_r \, \mathbf{u}_r \circ \mathbf{v}_r$$

Matrix SVD Properties:

$$D = U S V^T$$

Rank Decomposition: sum of min. number of rank-1 matrices

$$D = \sum_{r=1}^{R} \sigma_r \, \mathbf{u}_r \circ \mathbf{v}_r = \sigma_1 \mathbf{u}_1 \mathbf{v}_1^T + \sigma_2 \mathbf{u}_2 \mathbf{v}_2^T + \cdots + \sigma_R \mathbf{u}_R \mathbf{v}_R^T$$

Multilinear Rank Decomposition:

$$D = \sum_{r_1=1}^{R_1} \sum_{r_2=1}^{R_2} \sigma_{r_1 r_2} \, \mathbf{u}_{r_1} \circ \mathbf{v}_{r_2}$$
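The rank decomposition can be checked numerically on a tiny case. The plain-Python sketch below does not compute an SVD; it starts from hand-picked factors U, S and V (assumed values, chosen so that the columns of U and V are orthonormal) and confirms that the product U S V^T equals the sum of the rank-1 terms.

```python
def outer(x, y):
    """Outer product x y^T, returned as a list of rows."""
    return [[xi * yj for yj in y] for xi in x]

def matmul(P, Q):
    """Product of two matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]

def transpose(P):
    """Swap rows and columns."""
    return [list(col) for col in zip(*P)]

# Assumed factors, picked by hand for illustration (not computed from data).
U = [[0.6, -0.8], [0.8, 0.6]]   # columns are u_1, u_2
V = [[1.0, 0.0], [0.0, 1.0]]    # columns are v_1, v_2
sigma = [2.0, 1.0]              # singular values
S = [[sigma[0], 0.0], [0.0, sigma[1]]]

# The matrix product form: D = U S V^T.
D = matmul(matmul(U, S), transpose(V))

# The rank-1 form: D = sum over r of sigma_r * (u_r outer v_r).
D_sum = [[0.0, 0.0], [0.0, 0.0]]
for r, s in enumerate(sigma):
    u_r = [row[r] for row in U]
    v_r = [row[r] for row in V]
    term = outer(u_r, v_r)
    D_sum = [[d + s * t for d, t in zip(d_row, t_row)]
             for d_row, t_row in zip(D_sum, term)]
```

Both `D` and `D_sum` come out close to [[1.2, -0.8], [1.6, 0.6]], up to floating-point rounding. Going the other way, from a given D to its factors, requires an actual SVD routine, which this sketch leaves out.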

Some matrix properties

Matlab Tutorial
