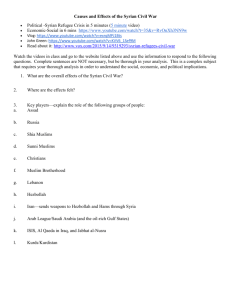



The Machine Learning Salon Starter Kit

Jacqueline Isabelle Forien

1st Edition - Summer 2015

ABOUT

The Machine Learning Salon Starter Kit

Founder of The Machine Learning Salon

MOOC, Opencourseware in English

COURSERA: Machine Learning Stanford Course

COURSERA: Practical Machine Learning

COURSERA: Neural Networks for Machine Learning

COURSERA: Data Science Specialization

COURSERA: Reasoning, Data Analysis and Writing Specialization

COURSERA: Data Mining Specialization

COURSERA: Cloud Computing Specialization

COURSERA: Miscellaneous

STANFORD University: Stanford Engineering Everywhere

STANFORD University: 2015 Stanford HPC Conference Video Gallery

STANFORD University: Awni Hannun of Baidu Research

STANFORD University: Steve Cousins of Savioke

STANFORD University: Ron Fagin of IBM Research

STANFORD University: CS224d: Deep Learning for Natural Language Processing by Richard Socher, 2015

EdX: Artificial Intelligence (BerkeleyX)

EdX: Big Data and Social Physics (Ethics)

EdX: Introduction to Computational Thinking and Data Science

MIT OpenCourseWare (OCW)

VLAB MIT Enterprise Forum Bay Area, Machine Learning Videos

Foundations of Machine Learning by Mehryar Mohri - 10 years of Homeworks with Solutions and Lecture Slides

Carnegie Mellon University (CMU) Video resources

CMU: Convex Optimisation, Fall 2013, by Barnabas Poczos and Ryan Tibshirani

CMU: Machine Learning, Spring 2011, by Tom Mitchell

CMU: 10-601 Machine Learning Spring 2015 - Lecture 18 by Maria-Florina Balcan

CMU: 10-601 Machine Learning Spring 2015, Homeworks & Solutions & Code (Matlab)

CMU: 10-601 Machine Learning Spring 2015 - Recitation 10 by Kirstin Early

CMU: Abulhair Saparov's YouTube Channel

CMU: Machine Learning Course by Roni Rosenfeld, Spring 2015

CMU: Language and Statistics by Roni Rosenfeld, Spring 2015

Metacademy Concept list and roadmap list

HARVARD University: Advanced Machine Learning, Fall 2013

HARVARD University: Data Science Course, Fall 2013

OXFORD University: Nando de Freitas Video Lectures

OXFORD University: Deep learning - Introduction by Nando de Freitas, 2015

OXFORD University: Deep learning - Linear Models by Nando de Freitas, 2015

OXFORD University: Yee Whye Teh Home Page, Department of Statistics, University College

CAMBRIDGE University: Machine Learning Slides, Spring 2014

CALTECH University: Learning from Data

UNIVERSITY COLLEGE LONDON (UCL): Discovery

UCL: Supervised Learning by Mark Herbster

Yann LeCun's Publications

École Normale Supérieure: Francis Bach, Courses and Exercises with solutions (English-French)

Technion, Israel Institute of Technology, Machine Learning Videos

E0 370: Statistical Learning Theory by Prof. Shivani Agarwal, Indian Institute of Science

NPTEL, National Programme on Technology Enhanced Learning, India

Pattern Recognition Class, Universität Heidelberg, 2012 (Videos in English)

Videolectures.net

MLSS Machine Learning Summer Schools Videos

GoogleTechTalks

Udacity Opencourseware

Udacity's Videos

Mathematicalmonk Machine Learning

Judea Pearl Symposium

SIGDATA, Indian Institute of Technology Kanpur

Hakka Labs

Open Yale Courses

COLUMBIA University: Machine Learning resources

COLUMBIA University: Applied Data Science by Ian Langmore and Daniel Krasner

Deep Learning

BigDataWeek Videos

Neural Information Processing Systems Foundation (NIPS) Video resources

NIPS 2014 Workshop Videos

NIPS 2014 Workshop - (Bengio) OPT2014 Optimization for Machine Learning

Hong Kong Open Source Conference 2013 (English & Chinese)

ICLR 2014 Videos

ICLR 2013 Videos

Machine Learning Conference Videos

Internet Archive

University of California, Berkeley

AMP Camps, Big Data Bootcamp, UC Berkeley

AI on the Web, AIMA (Artificial Intelligence: A Modern Approach) by Stuart Russell and Peter Norvig

Resources and Tools of Noah's ARK Research Group

ESAC DATA ANALYSIS AND STATISTICS WORKSHOP 2014

The Royal Society

Statistical and causal approaches to machine learning by Professor Bernhard Schölkopf

Deep Learning RNNaissance with Dr. Juergen Schmidhuber

Introduction to Deep Learning with Python by Alec Radford

A Statistical Learning/Pattern Recognition Glossary by Thomas Minka

The Kalman Filter Website by Greg Welch and Gary Bishop

Lisbon Machine Learning School (LXMLS)

LXMLS Slides, 2014

INTRODUCTORY APPLIED MACHINE LEARNING by Victor Lavrenko and Nigel Goddard, University of Edinburgh, 2011

Data Mining and Machine Learning Course Material by Bamshad Mobasher, DePaul University, Fall 2014

Intelligent Information Retrieval by Bamshad Mobasher, DePaul University, Winter 2015

Student Dave YouTube Channel

Current Courses of Justin E. Esarey, Rice University

From Bytes to Bites: How Data Science Might Help Feed the World by David Lobell, Stanford University

Conference on Empirical Methods in Natural Language Processing (and forerunners) (EMNLP)

Columbia University's Laboratory for Intelligent Imaging and Neural Computing (LIINC)

Enabling Brain-Computer Interfaces for Labeling Our Environment by Paul Sajda

The Unreasonable Effectiveness of Deep Learning by Yann LeCun, Sept 2014

Machine Learning by Prof. Shai Ben-David, University of Waterloo, Lectures 1-3, Jan 2015

Computer Vision by Richard E. Turner, Slides, Exercises & Solutions, University of Cambridge

Probability and Statistics by Carl Edward Rasmussen, Slides, University of Cambridge

Machine Learning by Carl Edward Rasmussen, Slides, University of Cambridge

Seth Grimes's videos

Introduction to Reinforcement Learning by Shane Conway, Nov 2014

Machine Learning and Data Mining by Prof. Dr. Volker Tresp, 2014, LMU

Applied Machine Learning by Joelle Pineau, Fall 2014, McGill University

Analyzing data from the city of Montreal

Artificial Intelligence by Joelle Pineau, Winter 2014-2015, McGill University

Talking Machines: The History of Machine Learning from the Inside Out

The Simons Institute for the Theory of Computing

DIKU - Datalogisk Institut, Københavns Universitet (Department of Computer Science, University of Copenhagen) YouTube Channel

Hashing in machine learning by John Langford, Microsoft Research

Dimensionality reductions by Alexander Andoni, Microsoft Research

RE.WORK Deep Learning Summit Videos, San Francisco 2015

Machine Learning Tutorial, UNSW Australia

Oxford's Podcast

Natural Language Processing by Mohamed Alaa El-Dien Aly, 2014, KAUST

QUT - Queensland University of Technology, Brisbane, Australia

Data & Society

Open Book for people with autism

NUMDAM: search and download of digitized mathematics journal archives

Project Euclid, mathematics and statistics online

Statistical Modeling: The Two Cultures by Leo Breiman, 2001

mini-DML

MISCELLANEOUS

The Automatic Statistician project

A selection of YouTube's featured channels

Introduction To Modern Brain-Computer Interface Design by Swartz Center for Computational Neuroscience

Distributed Computing Courses (lectures, exercises with solutions) by ETH Zurich, Group of Prof. Roger Wattenhofer

The wonderful and terrifying implications of computers that can learn | Jeremy Howard | TEDxBrussels

Partially Derivative, A podcast about data, data science, and awesomeness!

Class Central

Beginning to Advanced University CS Courses

WIRED UK YouTube Channel

Davos 2015 - A Brave New World - How will advances in artificial intelligence, smart sensors and social technology change our lives?

World Economic Forum

The Global Gender Gap Report

The LINCS project

Australian Academy of Science

Artificial intelligence: Machines on the rise

Bill Gates Q&A on Reddit

Second Prize went to Yarin Gal for his extrapolated art image, Cambridge University Engineering Photo Competition

Draw from a Deep Gaussian Process by David Duvenaud, Cambridge University Engineering Photo Competition

MOOC, Opencourseware in Spanish

MOOC, Opencourseware in German

MOOC, Opencourseware in Italian

MOOC, Opencourseware in French

France Université Numérique (FUN)

FUN: MinesTelecom: 04006 Fondamentaux pour le Big Data (Big Data Fundamentals)

University of Laval (French Canadian)

Théorie algorithmique des graphes (Algorithmic Graph Theory)

Hugo Larochelle, Apprentissage automatique (Machine Learning), French Canadian

Francis Bach, École Normale Supérieure - Courses and Exercises with solutions (English-French)

Collège de France, Mathematics and Digital Science, French

Le Laboratoire de Recherche en Informatique (LRI)

MOOC, Opencourseware in Russian

Russian Machine Learning Resources

The Yandex School of Data Analysis

Alexander D'yakonov Resources

MOOC, Opencourseware in Japanese

MOOC, Opencourseware in Chinese

Yeeyan Coursera Chinese Classroom

Hong Kong Open Source Conference 2013

Guokr.com

MOOC, Opencourseware in Portuguese

Aprendizado de Máquina (Machine Learning) by Bianca Zadrozni, Instituto de Computação, UFF, 2010

Algoritmo de Aprendizado de Máquina (Machine Learning Algorithm) by Aurora Trinidad Ramirez Pozo, Universidade Federal do Paraná, UFPR

Digital Library, Universidade de São Paulo

MOOC, Opencourseware in Hebrew

Open University of Israel

Homeworks, Assignments & Solutions

CS229 Stanford Machine Learning List of projects (free access to abstracts), 2013 and previous years

CS229 Stanford Machine Learning by Andrew Ng, Autumn 2014

CS 445/545 Machine Learning by Melanie Mitchell, Winter Quarter 2014

Introduction to Machine Learning, Machine Learning Lab, University of Freiburg, Germany

Unsupervised Feature Learning and Deep Learning by Andrew Ng, 2011 (?)

Machine Learning by Andrew Ng, 2011

Pattern Recognition and Machine Learning, Solutions to Exercises, by Markus Svensen and Christopher Bishop, 2009

Machine Learning Course by Aude Billard, Exercises & Solutions, EPFL, Switzerland

T-61.3025 Principles of Pattern Recognition Weekly Exercises with Solutions (in English), Aalto University, Finland, 2015

T-61.3050 Machine Learning: Basic Principles Weekly Exercises with Solutions (in English), Aalto University, Finland, Fall 2014

CSE-E5430 Scalable Cloud Computing Weekly Exercises with Solutions (in English), Aalto University, Finland, Fall 2014

Weekly Exercises with Solutions (in English) from Aalto University, Finland

SurfStat Australia: an online text in introductory Statistics

Learning from Data by Amos Storkey, Tutorial & Worksheets (with solutions), University of Edinburgh, Fall 2014

Web Search and Mining by Christopher Manning and Prabhakar Raghavan, Winter 2005

Statistical Learning Theory by Peter Bartlett, Berkeley, Homework & solutions, Spring 2014

Introduction to Time Series by Peter Bartlett, Berkeley, Homework & solutions, Fall 2010

Introduction to Machine Learning by Stuart Russell, CS 194-10, Fall 2011, Assignments & Solutions

Statistical Learning Theory by Peter Bartlett, Berkeley, Homework & solutions, Fall 2009

Advanced Topics in Machine Learning by Arthur Gretton, 2015, University College London (exercises with solutions)

Reinforcement Learning by David Silver, 2015, University College London (exercises with solutions)

Emmanuel Candes Lectures, Homeworks & Solutions, Stanford University (great resources, not to be missed!)

Advanced Topics in Convex Optimization by Emmanuel Candes, Handouts, Homeworks & Solutions, Winter 2015, Stanford University

MSM 4M13 Multicriteria Decision Making by Sándor Zoltán Németh, School of Mathematics, University of Birmingham

10-601 Machine Learning Spring 2015, Homeworks & Solutions & Code (Matlab)

Introduction to Machine Learning by Alex Smola, CMU, Homeworks & Solutions

Applications

MIT Media Lab

TEDx San Francisco, Connected Reality

Emotion&Pain Project

IBM Research

EPFL École Polytechnique Fédérale de Lausanne

Visualizing MBTA Data: An interactive exploration of Boston's subway system

Commercial Applications

Google Glass

Google self-driving car

SenseFly

HOW MICROSOFT'S MACHINE LEARNING IS BREAKING THE GLOBAL LANGUAGE BARRIER

RESEARCH PAPERS, in English

Cambridge University Publications page

arXiv.org by Cornell University Library

Google Scholar

Google Research

Yahoo Research

Microsoft Research

Journal from MIT Press

DROPS, Dagstuhl Research Online Publication Server

OPEN SOURCE SOFTWARE, in English

Weka 3: Data Mining Software in Java

A deep-learning library for Java

List of Java ML Software by Machine Learning Mastery

List of Java ML Software by MLOSS

MathFinder: Math API Discovery and Migration, Software Engineering and Analysis Lab (SEAL), IISc Bangalore

Google Java Style

JSAT: java-statistical-analysis-tool by Edward Raff

Theano Library for Deep Learning, Python

Theano and LSTM for Sentiment Analysis by Frederic Bastien, Université de Montréal

Introduction to Deep Learning with Python

COURSERA: An Introduction to Interactive Programming in Python (Part 1)

COURSERA: An Introduction to Interactive Programming in Python (Part 2)

COURSERA: Programming for Everybody (Python)

Udacity - Programming foundations with Python

Scikit-learn, Machine Learning in Python

PyData

PyData NYC 2014 Videos

PyData, The Complete Works by Rohit Sivaprasad

Anaconda

IPython Interactive Computing

SciPy

NumPy

matplotlib

pandas

SymPy

Orange

Pythonic Perambulations: How to be a Bayesian in Python

emcee

PyMC

Pylearn2

PyCon US 2014

PyCon India 2012

PyCon India 2013

Montreal Python

SciPy 2014

PyLadies London Meetup resources

Python Tools for Machine Learning by CB Insights

Python Tutorials by Jessica McKellar

INTRODUCTION TO PYTHON FOR DATA MINING

Notebook Gallery: Links to the best IPython and Jupyter Notebooks by ?

Google Python Style Guide

Natural Language Processing with Python by Steven Bird, Ewan Klein, and Edward Loper

PyBrain Library

Classifying MNIST dataset with PyBrain

OCTAVE

PMTK Toolbox by Matt Dunham, Kevin Murphy

Octave Tutorial by Paul Nissenson

JULIA

Julia by example by Samuel Colvin

The R PROJECT for Statistical Computing

Coursera: R Programming

R Graph Gallery

Code School - R Course

Coursera R programming

Open Intro R Labs

R Tutorial

DataCamp R Course

R Bloggers

R-Project Package: caret: Classification and Regression Training

A Short Introduction to the caret Package by Max Kuhn

R packages by Hadley Wickham

Google's R Style Guide

STAN Software

List of Machine Learning Open Source Software

Google Prediction API

Reddit ........................................................................................................149

SCHOGUN toolbox..................................................................................149

Comparison between ML toolbox.............................................................149

Infer.NET, Microsoft Research...................................................................149

F# Software Foundation.............................................................................150

BigML........................................................................................................150

BRML Toolbox in Matlab/Julia David Barber Toolbox, University College

London.......................................................................................................150

SCILAB......................................................................................................150

OverFeat and Torch7, CILVR Lab @ NYU.............................................150

FAIR open sources deep-learning modules for Torch................................151

IPython kernel for Torch with visualization and plotting...........................151

Deep Learning Lecture 9: Neural networks and modular design in Torch by Nando de Freitas, Oxford University.........................................................151

Deep Learning Lecture 8: Modular back-propagation, logistic regression and Torch..........................................................................................151

Machine Learning with Torch7: Defining your own Neural Net Module...........152

Lua Tutorial in 15 Minutes by Tyler Neylon.............................................152

Google: Punctuation, symbols & operators in search.................................152

WolframAlpha............................................................................................152

Computation and the Future of Mathematics by Stephen Wolfram, Oxford's Podcast........................................................................................153

Mloss.org....................................................................................................153

Sourceforge.................................................................................................153

AForge.NET Framework............................................................................153

cuda-convnet..............................................................................................153

word2vec.....................................................................................................154

Open Machine Learning Workshop organized by Alekh Agarwal, Alina Beygelzimer, and John Langford, August 2014..........................................154

Maxim Milakov Software...........................................................................154

Alfonso Nieto-Castanon Software..............................................................154

libSkylark..................................................................................................155

Mutual Information Text Explorer............................................................155

Data Science Resources by Jonathan Bower on GitHub...........................155

Joseph Misiti Blog.......................................................................................156

Michael Waskom GitHub repositories.......................................................156

Visualizing distributions of data.................................................................156

Exploring Seaborn and Pandas based plot types in HoloViews by Philipp John Frederic Rudiger.........................................................................................157

"Machine Learning: An Algorithmic Perspective" Code by Stephen Marsland........157

Sebastian Raschka GitHub Repository & Blog (Great Resources, everything you need is there!)..............................................................................................157

Open Source Hong Kong..........................................................................158

Lamda Group, Nanjing University............................................................158

GATE, General Architecture for Text Engineering...................................158

CLARIN, Common Language Resources and Technology Infrastructure..........159

FLaReNet, Fostering Language Resources Network..................................159

My Data Science Resources by Viktor Shaumann.....................................159

MISCELLANEOUS..................................................................................160

Overleaf (formerly WriteLaTeX).................................................................160

Interview of Dr John Lees-Miller by Imperial College London ACM Student Chapter.......................................................................................................160

LISA Lab GitHub repository, Université de Montréal..............................160

MILA, Institut des algorithmes d'apprentissage de Montréal, Montreal Institute for Learning Algorithms.............................................................................161

Vowpal Wabbit GitHub repository by John Langford...............................161

Google-styleguide: Style guides for Google-originated open-source projects....161

BIG DATA/CLOUD COMPUTING, in English.............162

Apache Spark Machine Learning Library.................................................162

Ampcamp, Big Data Boot Camp...............................................................162

Spark Summit 2013 Videos .......................................................................162

Spark Summit 2014 Videos .......................................................................162

Spark Summit 2015 Videos & Slides..........................................................163

Spark Summit Training & Videos..............................................................163

Databricks Videos.......................................................................................163

SF Scala & SF Bay Area Machine Learning, Joseph Bradley: Decision Trees on Spark...........................................................................................................163

Apache Mahout ML library.......................................................................163

Apache Mahout on Javaworld....................................................................164

MapReduce programming with Apache Hadoop, 2008............................164

Hadoop Users Group UK..........................................................................164

Deeplearning4j...........................................................................................164

Udacity opencourseware "Intro to Hadoop and MapReduce" ................165

Apache Storm............................................................................................166

Scaling Apache Storm by Taylor Goetz.....................................................166

Michael Vogiatzis Blog .............................................................................166

PredictionIO..............................................................................................166

PredictionIO tutorial - Thomas Stone - PAPIs.io '14.................................166

Container Cluster Manager.......................................................................167

Domino Data Labs.....................................................................................167

Data Science Central..................................................................................168

Amazon Web Services Videos....................................................................168

Google Cloud Computing Videos..............................................................168

VLAB: Deep Learning: Intelligence from Big Data, Stanford Graduate School of Business..................................................................................................168

Machine Learning and Big Data in Cyber Security, Eyal Kolman, Technion Lecture .......................................................................................................168

Chaire Machine Learning Big Data, Telecom Paris Tech (Videos in French)....168

An Architecture for Fast and General Data Processing on Large Clusters by Matei Zaharia, 2014...................................................................................169

Big Data Requires Big Visions For Big Change | Martin Hilbert | TEDxUCL......170

Ethical Quandary in the Age of Big Data | Justin Grace | TEDxUCL...170

Big Data & Dangerous Ideas | Daniel Hulme | TEDxUCL....................171

List of good free Programming and Data Resources, BITBOOTCAMP.171

BIG Data, Medical Imaging and Machine Intelligence by Professor H.R. Tizhoosh at the University of Waterloo.............................................172

Session 6: Science in the cloud: big data and new technology...................172

MapReduce for C: Run Native Code in Hadoop by Google Open Source Software......................................................................................................172

Machine Learning & Big Data at Spotify with Andy Sloane, Big Data Madison Meetup.......................................................................................................173

Hands-on tutorial on Neo4j with Max De Marzi, Big Data Madison Meetup.....173

TED Talk: What do we do with all this big data? by Susan Etlinger.........173

Big Data's Big Deal by Viktor Mayer-Schönberger, Oxford's Podcast.......173

BID Data Project - Big Data Analytics with Small Footprint.....................174

SF Big Analytics and SF Machine learning meetup: Machine Learning at the Limit by Prof. John Canny.........................................................................174

COMPETITIONS, in English...........................................176

Angry Birds AI Competition......................................................................176

ChaLearn...................................................................................................176

ImageNet Large Scale Visual Recognition Challenge 2015 (ILSVRC2015)......177

Kaggle........................................................................................................177

Kaggle Competition Past Solutions............................................................177

Kaggle Connectomics Winning Solution Research Article........................177

Solution to the Galaxy Zoo Challenge by Sander Dieleman.....................177

Winning 2 Kaggle in-class competitions on spam......................................178

Matlab Benchmark for Packing Santa’s Sleigh translated into Python.........178

Machine learning best practices we've learned from hundreds of competitions, Ben Hamner (Kaggle)................................................................................178

TEDx San Francisco, Jeremy Howard talk (Connecting Devices with Algorithms).................................................................................................178

CrowdANALYTIX..................................................................................178

Challenges for governmental applications..................................................178

InnoCentive Challenge Center..................................................................178

TunedIT.....................................................................................................179

Ants, AI Challenge, sponsored by Google, 2011........................................179

International Collegiate Programming Contest...........................................179

DREAM Challenges.......................................................................................179

Texata.........................................................................................................180

IoT World Forum Young Women's Innovation Grand Challenge.............180

COMPETITIONS, in French............................................182

COMPETITIONS, in Russian...........................................182

Russian AI Cup - Competition Programming Artificial Intelligence.........182

OPEN DATASET, in English.............................................183

Friday Lunchtime Lectures at the Open Data Institute, Videos, slides and podcasts (not to be missed!)........................................................................183

Open Data Institute: Certify your open data..............................................183

The Text REtrieval Conference (TREC) Datasets.....................................183

HDX Humanitarian Data Exchange.........................................................184

World Data Bank........................................................................................185

US Dataset.................................................................................................185

US City Open Data Census.......................................................................186

Machine Learning repository.....................................................................186

IMAGENET..............................................................................................186

Stanford Large Network Dataset Collection..............................................187

Deep Learning datasets..............................................................................187

Open Government Data (OGD) Platform India.......................................188

Yahoo Datasets...........................................................................................188

Windows Azure Marketplace.....................................................................188

Amazon Public Data Sets...........................................................................188

Wikipedia: Database Download.................................................................189

Gutenberg project (Free books available in different format, useful for NLP)....189

Freebase......................................................................................................189

Datamob Data............................................................................................189

Reddit Datasets...........................................................................................189

100+ Interesting Data Sets for Statistics....................................................189

Data portal of the City of Chicago............................................................190

Data portal of the City of Seattle..............................................................190

Data portal of the City of LA....................................................................190

California Department of Water Resources..............................................190

Data portal of the City of Dallas...............................................................191

Data portal of the City of Austin...............................................................191

How to produce and use datasets: lessons learned, mlwave.......................191

MITx and HarvardX release MOOC datasets and visualization tools.....192

Finding the perfect house using open data, Justin Palmer’s Blog...............192

Synapse.......................................................................................................192

NYC Taxi Trips Data from 2013...............................................................192

Sebastian Raschka’s Dataset Collections....................................................192

Awesome Public Datasets by Xiaming Chen, Shanghai, China................192

UK Dataset.................................................................................................193

LONDON DATASTORE - 601 datasets found (28-08-2015)..................193

Transport for London Open Data, UK....................................................193

Gaussian Processes List of Datasets...........................................................193

The New York Times Linked Open Data .................................................194

Google Public Data Explorer.....................................................................194

The Million Song Dataset..........................................................................195

CrowdFlower Open Data Library...............................................................195

OPEN DATASET, in French..............................................196

Montreal, Portail Donnees Ouvertes (French&English), Canada..............196

Insee, France...............................................................................................196

RATP Open Data, Paris Métro, France......................................196

L’Open-Data français cartographié...........................................................196

OPEN DATASET, China...................................................197

Lamda Group.............................................................................................197

DATA VISUALIZATION..................................................198

Visualization Lab Gallery, Computer Science Division, University of California, Berkeley......................................................................................................198

Visualization Lab Software, Computer Science Division, University of California, Berkeley....................................................................................200

Visualization Lab Course Wiki, Computer Science Division, University of California, Berkeley....................................................................................200

Mike Bostock..............................................................................................200

Eyeo Festival...............................................................................................200

MIT Data Collider.....................................................................................200

D3.js, Data-Driven Documents..................................................................200

Shan He, Research Fellow at MIT Senseable City Lab.............................201

Gource software version control visualization ...........................................201

Logstalgia, website access log visualization................................................201

Andrew Caudwell's Blog............................................................................201

MLDemos, EPFL, Switzerland.................................................................202

The University of Florida Sparse Matrix Collection.................................202

Visualization & Graphics lab, Dept. of CSA and SERC, Indian Institute of Science, Bangalore.....................................................................................203

Allison McCann.........................................................................................203

Scott Murray..............................................................................................203

Gephi: The Open Graph Viz Platform......................................................203

Data Analysis and Visualization Using R by David Robinson...................204

Visualising Data Blog (Huge list of resources, great blog!).........................204

The 8 hats of Data Visualisation Design by Andy Kirk.............................205

Andy Kirk, Visualisation consultant at the Big Data Week, 2013..............205

Image Gallery by the Arts and Humanities Research Council, UK..........205

Setosa.io by Victor Powell & Lewis Lehe...................................................205

BOOKS, in English............................................................206

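2015.........................................................................................................206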

Bayesian Reasoning and Machine Learning, David Barber, 2012 (online version 04-2015)......................................................................................................206

Deep Learning (Artificial Intelligence), An MIT Press book in preparation, by Yoshua Bengio, Ian Goodfellow and Aaron Courville, Jul-2015................206

Neural Networks and Deep Learning by Michael Nielsen, 2015 .............207

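2014.........................................................................................................208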

An Architecture for Fast and General Data Processing on Large Clusters by Matei Zaharia, 2014...................................................................................208

Deep Learning Tutorial by LISA Lab, University of Montreal, 2014.......209

Statistical Inference for Everyone, by Professor Brian Blais, 2014............210

Mining of Massive Datasets by Jure Leskovec, Anand Rajaraman, Jeff Ullman, 2014............................................................................................................210

Social Media Mining by Reza Zafarani, Mohammad Ali Abbasi, Huan Liu, 2014............................................................................................................211

Causal Inference by Miguel A. Hernán and James M. Robins, May 14, 2014, Draft...........................................................................................................212

Slides for High Performance Python tutorial at EuroSciPy, 2014 by Ian Ozsvald..213

Probabilistic Programming and Bayesian Methods for Hackers by Cameron Davidson-Pilon, 2014.................................................................................213

Past, Present, and Future of Statistical Science by COPSS, 2014.............213

Essentials of Metaheuristics by Sean Luke, 2014........................................213

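2013.........................................................................................................214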

Interactive Data Visualization for the Web by Scott Murray, 2013...........214

Statistical Model Building, Machine Learning, and the Ah-Ha Moment by Grace Wahba, 2013....................................................................................214

An Introduction to Statistical Learning with Applications in R by Gareth James, Daniela Witten, Trevor Hastie, Robert Tibshirani, 2013 (first printing).....214

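2012.........................................................................................................215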

Reinforcement Learning by Richard S. Sutton and Andrew G. Barto, 2012, Second edition in progress (PDF)...............................................................215

R Graphics Cookbook Code Resources (Graphs with ggplot2) by Winston Chang, 2012...............................................................................................215

Supervised Sequence Labelling with Recurrent Neural Networks by Alex Graves, 2012...............................................................................................215

A Course in Machine Learning by Hal Daumé, 2012................................216

Machine Learning in Action, Peter Harrington, 2012...............................216

A Programmer's Guide to Data Mining, by Ron Zacharski, 2012............216

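2010.........................................................................................................217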

Artificial Intelligence: Foundations of Computational Agents by David Poole and Alan Mackworth, 2010........................................................................217

Introduction to Machine Learning by Ethem Alpaydın, MIT Press, Second Edition, 2010, 579 pages............................................................................217

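2009.........................................................................................................218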

The Elements of Statistical Learning, T. Hastie, R. Tibshirani, and J. Friedman, 2009............................................................................................................218

Learning Deep Architectures for AI by Yoshua Bengio, 2009....................219

An Introduction to Information Retrieval by Christopher D. Manning, Prabhakar Raghavan, Hinrich Schütze, 2009.............................................219

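2008.........................................................................................................220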

Kernel Methods in Machine Learning by Thomas Hofmann, Bernhard Schölkopf, Alexander J. Smola, 2008.........................................................220

Introduction to Machine Learning, Alex Smola, S.V.N. Vishwanathan, 2008......220

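2006.........................................................................................................221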

Pattern Recognition and Machine Learning, Christopher M. Bishop, 2006......221

Gaussian Processes for Machine Learning, C. Rasmussen and C. Williams, 2006....222

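2005.........................................................................................................222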

Bayesian Machine Learning by Sounak Chakraborty, 2005.....................222

Machine Learning by Tom Mitchell, 2005................................................222

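2003.........................................................................................................223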

Information Theory, Inference, and Learning Algorithms, David MacKay, 2003....223

MISCELLANEOUS.....................................................................................224

Free Book List.............................................................................................224

Free resource book (sign-in required)...........................................................224

Wikipedia: Machine Learning, the Complete Guide.................................224

ISSUU........................................................................................................224

Neural Networks: A Systematic Introduction by Raúl Rojas.....................225

BOOKS, in Spanish............................................................226

BOOKS, in Portuguese.......................................................226

BOOKS, in German...........................................................226

BOOKS, in Italian..............................................................226

BOOKS, in French.............................................................226

BOOKS, in Russian............................................................227

Pattern Recognition by А.Б.Мерков, 2014................................................227

Algorithmic models of learning classification: rationale, comparison, selection, 2014............................................................................................................227

BOOKS, in Japanese..........................................................227

BOOKS, in Chinese...........................................................228

Blog recommending useful books...............................................................228

Textbook for Statistics................................................................................228

Introduction to Pattern recognition............................................................228

Translated version of Machine Learning by Tom Mitchell.......................228

Presentations, Infographics and Documents, in English.......229

Meetup's Presentations...............................................................................229

Slideshare.com............................................................................................229

Slides.com...................................................................................................229

Powershow.com..........................................................................................229

Speaker Deck..............................................................................................229

Introduction to Artificial Intelligence, 2014, University of Waterloo........229

Aprendizado de Máquina, Conceitos e Definições by José Augusto Baranauskas....229

Aprendizado de Máquina by Bianca Zadrozni, Instituto de Computação, UFF, 2010............................................................................................................230

NYC ML Meetup, 2014.............................................................................230

Statistics with Doodles by Thomas Levine.................................................230

Conferences.........................................................................231

ICML, Lille, France 2015...........................................................................231

ICML, Beijing, China 2014.......................................................................231

ICML, Atlanta, US 2013...........................................................................231

ICML, Edinburgh, UK 2012.....................................................................231

ICML, Bellevue, US 2011..........................................................................231

Full archive of ICML.................................................................................231

Machine Learning Conference Videos......................................................232

Annual Machine Learning Symposium.....................................................232

MLSS Machine Learning Summer Schools..............................................232

Data Gotham 2012, 2013...........................................................................232

Meetup ......................................................................................................232

Data Science Weekly List of Meetups.....................................................232

London Machine Learning Meetup...........................................................232

BLOGS, in English.............................................................233

Igor Carron Blog........................................................................................233

Data Science Weekly..................................................................................233

Yann LeCun, Google+...............................................................................233

KDD Community, Knowledge discovery and Data Mining......................233

Kaggle Blog................................................................................................233

Digg............................................................................................................234

Feedly..........................................................................................................234

Mlwave.......................................................................................................234

FastML.......................................................................................................234

Beating the Benchmark..............................................................................234

Trevor Stephens Blog.................................................................................235

Mozilla Hacks.............................................................................................235

Banach's Algorithmic Corner, University of Warsaw................................235

DataCamp Blog..........................................................................................235

Natural Language Processing Blog, Hal Daumé........................................235

Maxim Milakov Blog..................................................................................235

Alfonso Nieto-Castanon Blog.....................................................................235

Persontyle Blog...........................................................................................236

Analytics Vidhya.........................................................................................236

Bugra Akyildiz Blog....................................................................................237

Rasbt Blog..................................................................................................237

Gilles Louppe Blog.....................................................................................237

AI Topics....................................................................................................237

AI International..........................................................................................237

Joseph Misiti Blog.......................................................................................237

MIRI, Machine Intelligence Research Institute.........................................238

Kevin Davenport Data Blog.......................................................................238

Alexandre Passant Blog..............................................................................238

Daniel Nouri Blog......................................................................................239

Yvonne Rogers Blog...................................................................................239

Igor Subbotin Blog (both in English & Russian)........................................239

Sebastian Raschka GitHub Repository & Blog (Great Resources, everything you need is there!)..............................................................................................239

Popular Science Website.............................................................................240

HOW MICROSOFT'S MACHINE LEARNING IS BREAKING THE

GLOBAL LANGUAGE BARRIER .........................................................240

Max Woolf Blog.........................................................................................240

Rasmus Bååth Research Blog.....................................................................240

Flowing Data Blog......................................................................................241

The Shape of Data Blog............................................................................241

Data School Blog........................................................................................242

Julia Evans Blog..........................................................................................242

Stephan Hügel's Blog.................................................................................243

BACKCHANNEL "Tech Stories Hub" by Steven Levy............................244

DataScience Vegas.....................................................................................245

The Twitter Developer Blog.......................................................................245

Tyler Neylon Blog.......................................................................................245

Victor Powell Blog......................................................................................245

CrowdFlower Blog........................................................................................245

Edward Raff Blog......................................................................................245

Dirk Gorissen Blog and Projects.................................................................246

Joseph Jacobs Homepage & Blog...............................................................246

MISCELLANEOUS..................................................................................246

Allen Institute for Artificial Intelligence (AI2)............................................246

Artificial General Intelligence (AGI) Society..............................................247

AUAI, Association for Uncertainty in Artificial Intelligence.....................247

BLOGS, in Spanish.............................................................248

BLOGS, in Portuguese........................................................248

BLOGS, in Italian...............................................................248

BLOGS, in German............................................................248

BLOGS, in French..............................................................249

L'ATELIER's News ...................................................................................249

BLOGS, in Russian.............................................................250

Igor Subbotin's Blog (Both in English & Russian) (Huge list of resources)....250

BLOGS, in Japanese...........................................................251

BLOGS, in Chinese............................................................251

JOURNALS, in English......................................................252

Journal of Machine Learning Research, MIT Press..................................252

Machine Learning Journal (the latest article can be downloaded for free)......252

Machine Learning (Theory).......................................................................252

List of Journals on Microsoft Academic Research website........................252

Wired magazine..........................................................................................252

Data Science Central..................................................................................252

JOURNALS, in Spanish.....................................................253

JOURNALS, in Portuguese................................................253

JOURNALS, in Italian.......................................................253

JOURNALS, in German....................................................253

JOURNALS, in French.......................................................253

JOURNALS, in Russian.....................................................253

JOURNALS, in Japanese....................................................254

JOURNALS, in Chinese.....................................................254

FORUM, Q&A, in English.................................................255

Data Tau.....................................................................................................255

Hacker News..............................................................................................255

Kaggle Forums...........................................................................................255

Reddit /r/MachineLearning.....................................................................255

Reddit /r/generative..................................................................................256

Cross Validated Stack Exchange.................................................................256

Open Data Stack Exchange........................................................................256

Data Science Beta Stack Exchange............................................................256

Quora.........................................................................................................256

Machine Learning Impact Forum..............................................................257

FORUM, Q&A, in Spanish................................................258

FORUM, Q&A, in Portuguese...........................................258

FORUM, Q&A, in Italian...................................................258

FORUM, Q&A, in German...............................................258

FORUM, Q&A, in French..................................................258

FORUM, Q&A, in Russian.................................................259

Reddit in Russian .......................................................................................259

Habrahabr.ru Forum (in Russian, translated by Google Chrome)..............259

FORUM, Q&A, in Japanese...............................................260

FORUM, Q&A, in Chinese................................................260

Zhihu.com..................................................................................................260

Guokr.com..................................................................................................260

Governmental REPORTS, in English................................262

Big Data report, White House, US..............................................................262

FUN, in English...................................................................263

Founder of PhD Comics............................................................................263

MACHINE LEARNING RESEARCH GROUPS, in USA264

Computer Science and Artificial Intelligence Lab, MIT...........................264

Artificial Intelligence Laboratory, Stanford University..............................264

Machine Learning Department, Carnegie Mellon University..................265

Noah's ARK Research Group, Carnegie Mellon University.....................265

Intelligent Interactive Systems Group, Harvard University.......................265

Statistical Machine Learning, University of California, Berkeley..............266

UC Berkeley AMPLab, AMP: ALGORITHMS MACHINES PEOPLE....................267

Berkeley Institute for Data Science............................................................267

Department of Computer Science - ARTIFICIAL INTELLIGENCE & MACHINE LEARNING, Princeton University....268

Research Laboratories and Groups, University of California, Los Angeles (UCLA)....268

Cornell University.....................................................................................269

Machine Learning Research, University of Illinois at Urbana Champaign....269

Department of Computing + Mathematical Science, California Institute of Technology, Caltech....269

Machine Learning, University of Washington...........................................270

"Big Data" Research and Education, University of Washington...............270

Social Robotics Lab - Yale University........................................................270

ML@GT, Georgia Institute of Technology...............................................271

Machine Learning Research Group, University of Texas at Austin.......271

Penn Research in Machine Learning, University of Pennsylvania............271

Machine Learning @ Columbia University...............................................271

New York City University...........................................................................271

University of Chicago................................................................................272

The Johns Hopkins Center for Language and Speech Processing (CLSP) Archive Videos....272

MISCELLANEOUS...............................................................................272

IARPA Organization..................................................................................272

MACHINE LEARNING RESEARCH GROUPS, in Canada.........................273

Machine Learning Lab, University of Toronto.........................................273

The Fields Institute for Research in Mathematical Science, University of Toronto....273

Artificial Intelligence Research Group, University of Waterloo.................273

Artificial Intelligence Research Groups, University of British Columbia....274

MILA, Machine Learning Lab, University of Montreal...........................275

Intelligence artificielle, University of Sherbrooke......................................276

Centre de recherche sur les environnements intelligents, University of Sherbrooke....276

Machine Learning Research Group, University of Laval..........................277

MACHINE LEARNING RESEARCH GROUPS, in Brazil..........................278

MACHINE LEARNING RESEARCH GROUPS, in United Kingdom..............279

The Centre for Computational Statistics and Machine Learning (CSML), University College London....279

CASA (Centre for Advanced Spatial Analysis) Working Papers, University College London....279

The Machine Learning Research Group in the Department of Engineering Science, Oxford University....280

Machine Learning Group, Imperial College..............................................281

The Data Science Institute, Imperial College............................................282

The University of Edinburgh, Institute for Adaptive and Neural Computation....282

Cambridge University................................................................................282

Centre for Intelligent Sensing, Queen Mary University of London..........282

ICRI, The Intel Collaborative Research Institute......................................283

MACHINE LEARNING RESEARCH GROUPS, in France..........................284

Magnet, MAchine learninG in information NETworks, INRIA...............284

Sierra Team - Ecole Normale Superieure, CNRS, INRIA.......................284

ENS Ecole Normale Superieure................................................................285

WILLOW Publications and PhD Thesis....................................................286

Laboratoire Hubert Curien UMR CNRS 5516, Machine Learning........286

MACHINE LEARNING RESEARCH GROUPS, in Germany.......................288