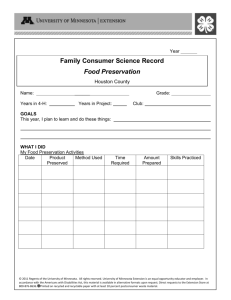

Systematic planning for digital preservation
