The Relationship Between Online Visual Representation of a Scene

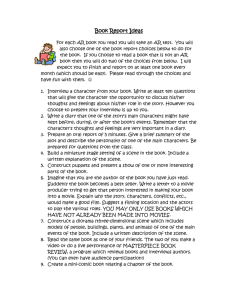
