Errors, Computer Arithmetic and Norms C

advertisement

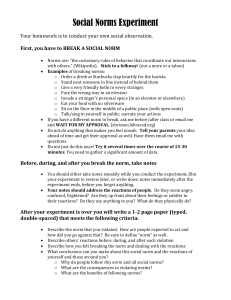

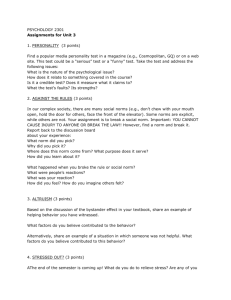

11.915 Comp Maths I, Unit 1 1 Advanced Mathematics and Statistics for Engineers Computational Mathematics I Unit 1 — Errors, Computer Arithmetic and Norms Contents 1 Disasters due to numerical errors 1.1 The explosion of Ariane 5 . . . . . . . . . . . . . . 1.2 Roundoff error and the Patriot missile . . . . . . . 1.3 The sinking of the Sleipner A offshore oil platform 1.4 Errors in computer modelling of real systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1 1 1 2 2 2 Computer arithmetic 2.1 Absolute, relative, backward and forward errors . . . . . . 2.2 Computer representation of floating point numbers . . . . 2.3 Scientific computers . . . . . . . . . . . . . . . . . . . . . 2.4 Example — adding 0.001 repeatedly . . . . . . . . . . . . 2.5 Example — cancellation errors . . . . . . . . . . . . . . . 2.6 Example — cancellation errors in approximate derivatives 2.7 Example — quadratic roots . . . . . . . . . . . . . . . . . 2.8 Example — evaluating polynomials . . . . . . . . . . . . . 2.9 Example — more general functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3 3 3 5 7 8 9 10 11 12 3 Vector and matrix norms 3.1 Vector norms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3.2 Matrix norms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3.3 Absolute and relative errors in norms . . . . . . . . . . . . . . . . . . . . . . 13 13 15 16 4 Problems 16 1 1.1 . . . . . . . . . . . . Disasters due to numerical errors The explosion of Ariane 5 Adapted from the WWW pages by D N Arnold http://www.ima.umn.edu/∼arnold/disasters On June 4, 1996 an unmanned Ariane 5 rocket launched by the European Space Agency exploded just forty seconds after its lift-off from Kourou, French Guyana. The rocket was on its first voyage, after a decade of development costing $7 billion. The destroyed rocket and its cargo were valued at $500 million. A board of inquiry investigated the causes of the explosion and in two weeks issued a report. It turned out that the cause of the failure was a software error in the inertial reference system. Specifically a 64 bit floating point number relating to the horizontal velocity of the rocket with respect to the platform was converted to a 16 bit signed integer. The number was larger than 32,768, the largest integer storeable in a 16 bit signed integer, and thus the conversion failed. 11.915 Comp Maths I, Unit 1 1.2 2 Roundoff error and the Patriot missile Article [5] by Robert Skeel, professor of computer science at the University of Illinois at Urbana-Champaign, in SIAM News, 1992. “The March 13 issue of Science carried an article claiming, on the basis of a report from the General Accounting Office (GAO), that a ‘minute mathematical error ... allowed an Iraqi Scud missile to slip through Patriot missile defenses a year ago and hit U.S. Army barracks in Dhahran, Saudi Arabia, killing 28 servicemen.’ The article continues with a readable account of what happened. “The article says that the computer doing the tracking calculations had an internal clock whose values were slightly truncated when converted to floating-point arithmetic. The errors were proportional to the time on the clock: 0.0275 seconds after eight hours and 0.3433 seconds after 100 hours. A calculation shows each of these relative errors to be both very nearly 2−20 , which is approximately 0.0001%. “The GAO report contains some additional information. The internal clock kept time as an integer value in units of tenths of a second, and the computer’s registers were only 24 bits long. This and the consistency in the time lags suggested that the error was caused by a fixed-point 24-bit representation of 0.1 in base 2. The base 2 representation of 0.1 is nonterminating; for the first 23 binary digits after the binary point, the value is 0.1 × (1 − 2−20 ). The use of 0.1 × (1 − 2−20 ) in obtaining a floating-point value of time in seconds would cause all times to be reduced by 0.0001%. “This does not really explain the tracking errors, however, because the tracking of a missile should depend not on the absolute clock-time but rather on the time that elapsed between two different radar pulses. And because of the consistency of the errors, this time difference should be in error by only 0.0001%, a truly insignificant amount. “Further inquiries cleared up the mystery. It turns out that the hypothesis concerning the truncated binary representation of 0.1 was essentially correct. A 24-bit representation of 0.1 was used to multiply the clock-time, yielding a result in a pair of 24-bit registers. This was transformed into a 48-bit floating-point number. The software used had been written in assembly language 20 years ago. When Patriot systems were brought into the Gulf conflict, the software was modified (several times) to cope with the high speed of ballistic missiles, for which the system was not originally designed. “At least one of these software modifications was the introduction of a subroutine for converting clock-time more accurately into floating-point. This calculation was needed in about half a dozen places in the program, but the call to the subroutine was not inserted at every point where it was needed. Hence, with a less accurate truncated time of one radar pulse being subtracted from a more accurate time of another radar pulse, the error no longer cancelled. “In the case of the Dhahran Scud, the clock had run up a time of 100 hours, so the calculated elapsed time was too long by 2−20 × 100 hours = 0.3433 seconds, during which time a Scud would be expected to travel more than half a kilometer. “The roundoff error, of course, is not the only problem that has been identified: serious doubts have been expressed about the ability of Patriot missiles to hit Scuds.” End of article by Robert Skeel. 1.3 The sinking of the Sleipner A offshore oil platform Adapted from the WWW pages by D N Arnold 11.915 Comp Maths I, Unit 1 3 http://www.ima.umn.edu/∼arnold/disasters The Sleipner A offshore oil platform sank during construction in Gandsfjorden outside Stavanger, Norway on 23 August 1991. The top deck weighed 57,000 tons, and provided accommodation for about 200 people and support for drilling equipment weighing about 40,000 tons. The crash caused a seismic event registering 3.0 on the Richter scale, and left nothing but a pile of debris on the sea bed 220m down. The failure involved a total loss of about $700 million. The conclusion of the investigation was that the loss was caused by a failure in a cell wall (one of the building blocks of the structure), resulting in a serious crack and a leakage that the pumps were not able to cope with. The wall failed as a result of a combination of a serious error in the finite element analysis and insufficient anchorage of the reinforcement in a critical zone. 1.4 Errors in computer modelling of real systems Computer predictions based on mathematical models of a real-world system can fail for all sorts of reasons. The three examples above show some of the pitfalls. Ariane was destroyed by an overflow problem (the number was too big for the place it was to be stored) and Patriot didn’t work when it was needed because of rounding error accumulation and mixing up precision. Sleipner was very different. The structure was destroyed because a finite element package was trusted to make predictions within a few percent and it didn’t get things right, perhaps because of modelling problems and perhaps because of errors in approximately solving the equations defined by the model. A brief summary of sources of error is: • The coding/hardware is wrong. Not our concern here, but always worth keeping in mind! • The mathematical model is wrong, or doesn’t take account of enough of the factors in the problem. • The equations that make up the mathematical model are not solved accurately enough. • The equations that make up the mathematical model cannot be solved accurately enough. For example, a system which is very unstable in the sense that small changes in input give rise to large changes in output, is going to be tricky to deal with when, say, measured data fed into it is noisy. This is a major concern in the study of numerical algorithms and linear algebra, and we will examine this in detail. 2 Computer arithmetic Most standard computer languages and processors are designed to do real arithmetic to about 15 significant digits. This sounds quite impressive until you start doing things like adding very small numbers to big ones, or relying on the result of subtracting two numbers that are very close together. Many texts on numerical analysis and scientific computing cover these topics, but [4] is probably the most comprehensive. 11.915 Comp Maths I, Unit 1 4 INPUT OUTPUT x backward error y=f(x) forward error x+dx y+dy = f(x+dx) Figure 1: Backward and forward errors in computing y = f (x). The thick solid line indicates the outcome of the computation. 2.1 Absolute, relative, backward and forward errors We need to be clear about these terms when considering errors. If the number x̂ approximates x, then the absolute error in x̂ = |x̂ − x| and, if x 6= 0 relative error in x̂ = |x̂ − x| . |x| where |x| is the absolute value (modulus) of x. (Matlab command abs.) We will see this generalised below when x is a vector or matrix. Figure 1 shows a schematic diagram of the computation of y = f (x) given x. The actual computed result is y + dy when it should be y, and dy is the forward error in this calculation. On the other hand y + dy corresponds to the correct calculation of f (x + dx), and dx is the backward error in the calculation. 2.2 Computer representation of floating point numbers There are standards for representing and processing floating point numbers on digital computers (e.g. the IEEE single, double and extended precisions) and, of course, the chips use binary (base 2) arithmetic. To keep life simple let us work with base 10 arithmetic here, but bear in mind the Patriot missile and the example in § 2.4 below when you think in base 10, but compute in base 2. A normalized decimal floating point number y 6= 0 has the form y = ±0.d1 d2 . . . dk × 10n , 1 ≤ d1 ≤ 9 , 0 ≤ ds ≤ 9 for s = 2 : k (1) and the integer n also has a limited range of values n = ±f1 . . . fj , 0 ≤ fs ≤ 9 for s = 1 : j . In the above form, 0.d1 d2 . . . dk is the mantissa and n is the exponent. Setting all the d’s to zero gives the number y = 0. The number requires k + j decimal digits and two sign bits to store it. If for example k = 7 and j = 2 is easy to show that max |y| = 0.9999999 × 1099 ≈ 1099 11.915 Comp Maths I, Unit 1 and 5 min |y| = 0.1000000 × 10−99 = 10−100 . y6=0 If you try to go outside these bounds you get an overflow or underflow, similar to Ariane. To convert a real number like y = π, 1/3 or 76 × 10−3 to the form f l(y) in (1), two procedures are used. First (notionally) write the real number in its infinite decimal expansion, say y = 0.d1 d2 . . . × 10n , 1 ≤ d1 ≤ 9 , 0 ≤ ds ≤ 9 for s ≥ 2 . (2) Chopping defines f l(y) as f lchop (y) = 0.d1 d2 . . . dk × 10n , while rounding defines f lround (y) = f lchop (y + 0.5 × 10n−k+1 ) = 0.δ1 δ2 . . . δk × 10n . Rounding looks complicated, but it is just the well-known rule: when dk+1 < 5 we round down to get f l(y) = 0.d1 d2 . . . dk × 10n , and when dk+1 ≥ 5 we round up by adding 1 to dk . For example, chopping and rounding π = 0.314159265358 . . . × 101 to 5 digit floating point form gives f lchop (π) = 0.31415 × 101 , f lround (π) = 0.31416 × 101 following the conventions above. The error that results by replacing a real number by its floating point representation is the roundoff error. If the real number y = 0.d1 d2 . . . × 10n (as in (2) above), then the relative error in chopping to k digits is |f lchop (y) − y| 0.dk+1 dk+2 . . . 0.dk+1 dk+2 . . . × 10n−k = ≤ × 10−k |y| 0.d1 d2 . . . × 10n 0.d1 d2 . . . and, given the restrictions on the decimal digits in (2), |f lchop (y) − y| 0.9999999 . . . ≤ × 10−k = 101−k . |y| 0.1 A similar result holds for rounding: |f lround (y) − y| ≤ 0.5 × 101−k . |y| So the relative errors do not depend on the magnitude of the numbers, so long as they fit into the range that can be stored. Computer arithmetic suffers from these floating point representation problems. Consider the real numbers x = 1/3, y = 5/7 and work in 5-digit chopping arithmetic. First we have f l(x) = 0.33333 × 100 and f l(y) = 0.71428 × 100 where we have shortened f lchop to f l. Computer evaluation of x + y (written x ⊕ y) can be thought of as x ⊕ y = f l(f l(x) + f l(y)) = f l(0.33333 × 100 + 0.71428 × 100 ) = f l(1.04761 × 100 ) = 0.10476 × 101 . 11.915 Comp Maths I, Unit 1 6 In other words, chop x and y to 5 digits, add these results together and chop. The relative error overall is approximately 0.18 × 10−4 . This isn’t bad since each chop could involve a relative error as big as 1 × 10−4 . This general way of thinking about computer arithmetic operations works for the other common operations: x ⊗ y = f l(f l(x) × f l(y)) , x ª y = f l(f l(x) − f l(y)) , x ª ÷y = f l(f l(x) ÷ f l(y)) . Of course the exact details vary from processor to processor. Finally, many implementations of floating point arithmetic satisfy the condition f l(x op y) = (x op y)(1 + δ), |δ| ≤ u, op = +, −, ×, ÷ (3) where the unit roundoff u = β 1−t /2 and β is the number base used (e.g. binary or decimal) and t is the precision (number of digits in the mantissa). For the commonly used IEEE double precision arithmetic these numbers are β = 2, t = 53, u ≈ 10−16 . See [Ch.2][4]. 2.3 Scientific computers Although the mass market for computers has settled onto one common type of processor, the more specialised market is much more diverse. There are RISC chips, parallel computers, vector processors etc. and one has to consider issues like main memory access times, onchip cache (fast memory attached to the processor) size and access time, the number of arithmetic units on individual processors etc.. We will only have a brief look here. Parallel processing Parallel computers come in many varieties. They are collections of processors that can be used together to execute a programme. Typical issues here are: • Can the programme be broken into independent pieces that can be executed together? If 90% of the code can be parallelised and 10% cannot, then the time taken by code can be reduced to 10% at best. • Do these independent pieces all take the same time to execute? If not, then many processors will be doing nothing waiting for the slowest to finish. Making this work is called load balancing. • Do the processors share memory or have independent chunks only accessible to to themselves? • If they don’t share memory, how fast do the processors pass information between one another? Perfect performance is roughly that P processors make your code P times faster. In real life, parallel performance is rarely near perfect, particularly as P gets big. It is quite possible for 256 processors to take longer than 64 on some problems. On the other hand it is also possible to see speed-ups of more than P with P processors, when the data for the problem are split into smaller pieces that can fit onto the faster cache memory and doesn’t use the slower main memory. 11.915 Comp Maths I, Unit 1 7 Vector processing Vector processors like the Cray and some Digital Signal Processing chips have also made a big impact. The basic idea is simple, but effective. Say we want to find the vector sum a + b. One type of hardware goes through the process for j=1:N read a(j) and b(j) from memory add them write result to memory end It doesn’t start on the next calculation until it completely finishes the current one. If that process takes say 4 clock cycles, then the whole thing takes 4N clock cycles. A vector pipeline processor is designed to split the calculation (read from memory, add, write result) up into say 7 sections, each taking one clock cycle, but to start a new calculation every clock cycle. See Table 1. It takes 7 clock cycles to get the first result out, but each one after that appears every cycle. The total time to add both vectors in this way is 6 + N cycles. When N is big, then this vector pipeline is 4 times faster then the simple unit. However it isn’t faster when adding pairs of scalars. The simplified example above shows how vector pipelines can speed up calculations of operations involving vectors. One reason to introduce this concept is that for other reasons, Matlab behaves as if it is a vector pipeline computer. For example, N = 100000; x = rand(N,1); y=rand(N,1); tic z = x+y; toc elapsed_time = 0.2166 tic for j=1:N z(j) = x(j)+y(j); end toc elapsed_time = 4.8452 shows a speed-up by a factor of 22 for the vector calculation (the first part) over the component-wise calculation. These were run on a Sun Ultra 5/270. We will give more Matlab speed tips throughout the course. 2.4 Example — adding 0.001 repeatedly The following code section should step through adding 0.001 to x at each step. It should take exactly 100 steps for x to be equal to 0.2, and it should stop at that point because of the logical check and break. 11.915 Comp Maths I, Unit 1 Cycle 1 2 3 4 5 6 7 8 ... 1 a1 , b1 a2 , b2 a3 , b3 a4 , b4 a5 , b5 a6 , b6 a7 , b7 a8 , b8 ... 8 2 3 Steps 4 5 6 7 Result a1 , b1 a2 , b2 a3 , b3 a4 , b4 a5 , b5 a6 , b6 a7 , b7 ... a1 , b1 a2 , b2 a3 , b3 a4 , b4 a5 , b5 a6 , b6 ... a1 , b1 a2 , b2 a3 , b3 a4 , b4 a5 , b5 ... a1 , b1 a2 , b2 a3 , b3 a4 , b4 ... a1 , b1 a2 , b2 a3 , b3 ... a1 , b1 a2 , b2 ... a1 + b1 a2 + b2 ... Table 1: Pipeline processing to find a + b x = 0.1; for k=1:200 x = x + 0.001; if x==0.2, disp(’x = 0.2’), break, end end disp([’Number of steps = ’,int2str(k),’ Final x = ’,num2str(x)]) OUTPUT Number of steps = 200 Final x = 0.3 The code never finds that x is exactly equal to 0.2! To see what happens near where x=0.2 modify the code: format long e % show full numerical precision x = 0.1; for k=1:200 x = x + 0.001; if abs(x-0.2) < 1.e-6, break, end end disp([’Number of steps = ’,int2str(k),’ Final x = ’,num2str(x),... ’ x-0.2 = ’,num2str(x-0.2)]) OUTPUT Number of steps = 100 Final x = 0.2 x-0.2 = 8.3267e-17 So adding 0.001 repeatedly to x doesn’t do what we expect in floating point arithmetic. That is because the number 0.001 can’t be represented exactly in binary (computer numbers). It is better to replace the check x==0.2 in the first version above by abs(x-0.2) < 1.e-6. That is, x is within distance 10−6 (or another small number) of 0.2. This is very similar to the Patriot missile problem. 11.915 Comp Maths I, Unit 1 2.5 9 Example — cancellation errors Start with an integer example. Subtract two 14 digit integers which agree in the first 6 places: >> format long >> p = 14567123456629 p = 1.456712345662900e+013 >> q = 14567109642894 q = 1.456710964289400e+013 >> r = p-q r = 13813735 Not surprisingly, the result r=p-q has 14-6 = 8 digits in it (and it is correct). Next try it with non-integers: >> format long >> a = 100000*sin(0.300000001) a = 2.955202076166761e+004 >> b = 100000*sin(0.299999999) b = 2.955202057060031e+004 The numbers a and b above are computed to about 16 significant figures and agree in their first 8 significant places. Taking the difference between them should leave a number accurate to about 16-8 = 8 significant decimals. >> c = a-b c = 1.910672981466632e-004 Unfortunately the computer lies and pads out the result by adding more digits than it really knows anything about. It gives the impression that the number c is known to 16 significant digits when it cannot possibly be. Now subtract the number d from c. They agree in the first 8 decimal places. >> d = 1.9106729123456e-4 d = 1.910672912345600e-004 >> c-d ans = 6.912103241120057e-012 11.915 Comp Maths I, Unit 1 10 Error in Difference Approxs of derivative of sin(x) at x=2 0 10 forward (f(x+h)−f(x))/h central (f(x+h)−f(x−h))/(2h) −2 10 −4 10 −6 10 −8 10 −10 10 −12 10 −10 10 −8 10 −6 −4 10 10 −2 10 0 10 h values Figure 2: Comparison of the absolute errors in the forward and central difference approximations of the derivative of sin x at x = 2. See § 2.6 Again we get a result quoted to 15 or 16 decimal places, but none of the digits in this number mean anything because c was accurate to 8 decimals and we subtracted them off using d. 2.6 Example — cancellation errors in approximate derivatives Derivatives of functions can be approximated by estimating the slope of the function. For example, the derivative of sin x at x = 2 is cos 2. Use a forward difference to approximate the derivative. >>x=2; h=0.1; aprx = (sin(x+h)-sin(x))/h aprx = -0.4609 >> exact = cos(2) exact = -0.4161 Not too far wrong. Making the parameter h smaller usually makes thing better. >>x=2; h=0.05; aprx = (sin(x+h)-sin(x))/h 11.915 Comp Maths I, Unit 1 11 aprx = -0.4387 >>exact = cos(2) exact = -0.4161 However, when h gets very small the difference between sin(x+h) and sin(x) is relatively very small and we can have rounding error problems as described earlier. We see in Figure 2 that as we decrease h we get closer to the exact derivative until about h = 1.e − 8. For smaller h we slowly drift away from this good result until at h = 1.e−16 we get a completely wrong result aprx=0! This is all due to rounding errors in subtracting two numbers that are very close together. 2.7 Example — quadratic roots Find the roots of the quadratic equation ax2 + bx + c = 0 . The two roots (when a 6= 0) are x± = −b ± √ b2 − 4ac . 2a This seems like a simple computation, but if b2 is much bigger than 4ac and b > 0, then x+ may be difficult to calculate accurately since it involves p −b + b2 − 4ac ≈ −b + b . In the case b > 0 with b2 << 4ac it is better to use an alternative formula for x+ given by √ √ √ −b + b2 − 4ac −b + b2 − 4ac −b − b2 − 4ac −2c √ √ x+ = = × = 2a 2a −b − b2 − 4ac b + b2 − 4ac or to compute x− first and then use the identity x+ = c/(ax− ). That way there are no cancellation problems to deal with. 2.8 Example — evaluating polynomials Consider the evaluation of the polynomial f (x) = x3 − 6x2 + 3x − 0.149 for x = 4.71 using 3-digit chopping and rounding arithmetic. arithmetic exact 3-chop 3-round x 4.71 4.71 4.71 3×x 14.13 14.1 14.1 x×x 22.1841 22.1 22.2 6×x×x 133.1046 132 133 x×x×x 104.487111 104 105 11.915 Comp Maths I, Unit 1 12 Note that 4.71 × 4.71 × 4.71 in 3-digit rounding arithmetic is (4.71 ⊗ 4.71) ⊗ 4.71 = f lround (22.1841) ⊗ 4.71 = 22.2 ⊗ 4.71 = f lround (104.562) = 105 and that 105 6= f lround (exact result) = 104. Now add everything up (working from left to right): exact 3-chop 3-round f (4.71) 104.487111 − 133.1046 + 14.13 − 0.149 ((104 ª 132) ⊕ 14.1) ª 0.149 ((105 ª 133) ⊕ 14.1) ª 0.149 = −14.636489 = −14.0 = −14.0 Relative error in both cases is 0.636/14.636 = 0.04348 . . . i.e. 4 per cent. Horner’s algorithm is an alternative method for polynomial evaluation that is computationally efficient and usually cuts down on rounding errors. For the general n-th degree polynomial f (x) = an+1 xn + an xn−1 + a2 x + a1 , Horner’s algorithm evaluates f (x) for given x and a1 , . . . , an+1 as w = a(n+1); for j=n:-1:1 w = w*x + a(j); end so in the cubic example above we get (with x = 4.71, a4 = 1, a3 = −6, a2 = 3, a1 = −0.149): algorithm w = a(4) w = w*x + a(3) w = w*x + a(2) w = w*x + a(1) w exact 1 -1.29 -3.0759 -1.4636489 w 3-chop 1 -1.29 -3.07 -14.5 w 3-round 1 -1.29 -3.08 -14.6 The relative errors are now approximately 0.9% and 0.2% respectively, compared to 4% by the direct evaluation above. Also note that the Horner algorithm takes 6 flops (floating point operations ± ÷ ∗) compared to 9 or 10 for the direct method. The potential improvement in accuracy and computational cost is greater for higher degree polynomials. 2.9 Example — more general functions Consider the function 1 − cos x x2 for small values of x. There are difficulties with g(x) because cos x ≈ 1 for small x. On a typical processor working to between 15 and 16 significant figures, the result g(x) will be returned as exactly 0 for all |x| 6= 0 less than around 10−8 . This is not very good since for small x, f (x) ≈ 0.5 which is nothing like 0. One cure for this is to use Taylor’s theorem (see almost any calculus book or [1, 2]) to expand the numerator and/or the denominator of such functions around x = 0. When a function f (x) is smooth enough, Taylor’s theorem gives g(x) = f (x) = f (x0 ) + (x − x0 )f 0 (x0 ) + (x − x0 )2 00 (x − x0 )3 (3) f (x0 ) + f (x0 ) + · · · . 2! 3! 11.915 Comp Maths I, Unit 1 13 In the example above with x0 = 0, 1 − cos x = 1 − cos 0 + (x − 0) sin 0 + = (x − 0)2 (x − x0 )3 cos 0 + (− sin 0) + · · · 2! 3! x2 x4 − + ··· 2 4! which only has even powers of x. Finally, a good approximation of g(x) for small x is obtained by 1 x2 1 − cos x ≈ − . g(x) = x2 2 4! The error in this approximation is actually ≤ x4 /6! in exact arithmetic, but we shall leave that to the Computational Mathematics II module. 3 Vector and matrix norms We are going to be studying linear algebra, which works with vectors and matrices. We need a way to measure the size of vectors (and sometimes matrices) and particularly the errors in vector results. Norms are used to give a measure the “size” of vectors and matrices. They should not be confused with the “dimension”, which is the number of elements in a vector and the number of rows and columns in a matrix. See many books on numerical linear algebra for an overview of this topic, for example [1, 2, 3, 4]. Note: We will usually think of vectors as column vectors. 3.1 Vector norms Perhaps the most familiar vector norm is the distance between two points on a map or a plan. The distance from the origin to the point (2, 6) on a map is then p 22 + 62 ≈ 6.3246 (Pythagoras’ theorem for right-angled triangles) and in 3D, the distance from the origin to (2, 6, −1) is p 22 + 62 + (−1)2 ≈ 6.4031 . This definition of distance (called the Euclidean distance) is what you would measure with a ruler. For multicomponent vectors, the Euclidean distance from the origin to the point denoted by x = (x1 , x2 , . . . , xN )T is 1/2 N q X def x2j , kxk2 = x21 + x22 + · · · + x2N = j=1 assuming that x has real (not complex) components. The notation kxk2 is read as “the 2-norm” or the “Euclidean norm” or the “`2 norm” of vector x. Throwing away the ruler, there are other many ways to measure distance when dealing with real vectors. The most 11.915 Comp Maths I, Unit 1 14 x+y ||x+y|| ||y|| 0 x ||x|| Figure 3: Triangle inequality kx + yk ≤ kxk + kyk for vectors x, y. common examples for x ∈ RN (i.e. x is a vector of N real numbers) are the `p norms defined by 1/p N X def |xj |p , 1 ≤ p < ∞ , x ∈ RN kxkp = j=1 and the special case of the infinity or `∞ or maximum norm def kxk∞ = max |xj | . j=1:N The most common cases are p = 1, 2 and ∞. The Matlab command norm works for both vector and matrix norms. To qualify as a vector norm, k · k (however it is defined) must satisfy some rules: kxk ≥ 0 ∀x ∈ RN (4) kxk = 0 ⇔ x = 0 kx + yk ≤ kxk + kyk kα xk = |α| kxk (5) N ∀x, y ∈ R (6) N ∀α ∈ R, x ∈ R (7) The symbol ∀ means for all. The p-norms defined above do satisfy these rules. The rules can be interpreted as: 1. The norm is not negative (it is like a distance after all). 2. The norm of x is zero, if and only if x is 0 (again think of distances). 3. The distance travelled to go from the origin to point x and then to point y is greater than or equal to the distance going directly from the origin to the point x + y. See Figure 3 This is a generalisation of the more familiar |a + b| ≤ |a| + |b| with a = 4, b = ±7 for example. 4. If all components of the vector x are multiplied by a constant then the norm/distance is multiplied by the modulus of the same constant. As a consequence of these properties, vector norms also satisfy the Holder inequality ¯ ¯ ¯N ¯ ¯X ¯ 1 1 ¯ + =1 (8) xj yj ¯¯ = |xT y| ≤ kxkp kykq , ¯ p q ¯ j=1 ¯ 11.915 Comp Maths I, Unit 1 15 where x, y ∈ RN , 1 ≤ p, q ≤ ∞. The special case |xT y| ≤ kxk2 kyk2 (9) (p = q = 2) is called the Cauchy-Schwarz inequality. Furthermore, all norms on RN are equivalent. That is there exist constants c1 , c2 > 0 such that c1 kxkr ≤ kxks ≤ c2 kxkr (10) for any vector norms k · kr , k · ks . The constants c1 , c2 do not depend on the contents of x, only on the details of the norms and the length of the vector. For example kxk∞ ≤ kxk1 ≤ N kxk∞ √ kxk∞ ≤ kxk2 ≤ N kxk∞ √ kxk2 ≤ kxk1 ≤ N kxk2 for all x ∈ RN . This means that if we can show that the error in a vector tends to zero in one norm, then it does so in all other norms. 3.2 Matrix norms Matrices present further complications, but norms on matrices behave in a similar way to norms on vectors. The Matlab command norm works for both vector and matrix norms. We will concentrate on real, N × N square matrices, denoted by A ∈ RN ×N . A matrix norm k · k must satisfy kAk ≥ 0 ∀A ∈ RN ×N (11) kAk = 0 ⇔ A = 0 kA + Bk ≤ kAk + kBk kα Ak = |α|kAk (12) N ×N ∀A, B ∈ R (13) N ×N ∀α ∈ R, A ∈ R . (14) (Beware! The requirements for a matrix norm vary from book to book, but the main points are in agreement.) The most common matrix norms are the p−norms kAkp = max kAxkp = sup kxkp =1 x6=0 kAxkp kxkp for 1 ≤ p ≤ ∞ and the Frobenius norm v uN N uX X |aij |2 kAkF = t i=1 j=1 where aij is the element in the ith row and jth column of matrix A. The matrix p-norm is sometimes called the natural or induced matrix norm associated with the vector p-norm. The 1- and ∞-norms are the easiest of the p-norms to evaluate. They are equivalent to kAk1 = max j=1:N N X i=1 |aij | and kAk∞ = max i=1:N N X j=1 |aij | , 11.915 Comp Maths I, Unit 1 16 the maximum column sum and the maximum row sum respectively. (Incidentally, this is messy to prove. See e.g. [2, Ch.7].) The others are not at all straightforward or computationally cheap to evaluate. The p-norms also satisfy the conditions kAxkp ≤ kAkp kxkp (15) kABkp ≤ kAkp kBkp (16) and for A, B ∈ RN ×N and x ∈ RN . One way to think of the p-norm of matrix A is that it measures the maximum “magnification” or “size increase” the matrix can give when multiplying a vector or another matrix. The p-norms and the Frobenius norm are linked together in the same way as vector norms. For example, √ kAk2 ≤ kAkF ≤ N kAk2 √ √1 kAk∞ ≤ kAk2 ≤ N kAk∞ N √ √1 kAk1 ≤ kAk2 ≤ N kAk1 N p kAk2 ≤ kAk1 kAk∞ maxi,j=1:N |aij | ≤ kAk2 ≤ N maxi,j=1:N |aij | for all A ∈ RN ×N . In fact all norms on matrices in RN ×N are equivalent, just as we have seen in (10) for vectors. 3.3 Absolute and relative errors in norms Suppose that vector x̂ approximates x and matrix  approximates A. Then, for the norm chosen, absolute error in x̂ = kx̂ − xk , absolute error in  = k − Ak . If x 6= 0 and A 6= 0, relative error in x̂ = kx̂ − xk , kxk relative error in  = k − Ak . kAk Note that a different choice of norm can give different numerical results. 4 Problems 1. The number p̂ approximates p. What is the (i) absolute error and (ii) relative error in the approximation of p by p̂? (a) p = π, p̂ = 3.1 (b) p = 100/3, p̂ = 33.3 . 2. The number x̂ approximates x with a relative error of at most 10−N . What range of values can x̂ take given x and N below? (a) x = 22/7, N = 4 (b) x = 150, N = 3 . 11.915 Comp Maths I, Unit 1 17 3. How many normalised floating point numbers x with a 5-digit mantissa are there with 700 ≤ x < 900 using base 10 arithmetic? (The number of digits in the exponent doesn’t matter here.) 4. What is the smallest (non-zero) normalised floating point number with a 3-digit mantissa and 2-digit exponent in base 10 arithmetic? What is the biggest? (Smallest means closest to zero and biggest means furthest from zero.) 5. Suppose that f l(y) is a k-digit rounding approximation to real number y 6= 0. Show that ¯ ¯ ¯ y − f l(y) ¯ ¯ ≤ 0.5 × 10(1−k) . ¯ ¯ ¯ y 6. Perform the following computations (i) exactly, (ii) using 3-digit chopping, (iii) using 3-digit rounding and determine any loss in accuracy, assuming the numbers given below are exact. (a) 14.1 + 0.0981 (b) 0.0218 × 179 . 7. Use 3 digit rounding arithmetic to evaluate f (1.53) by (i) direct evaluation and (ii) Horner’s algorithm (see § 2.8 and set y = ex below). f (x) = 1.01e4x − 4.62e3x − 3.11e2x + 12.2ex − 1.99 . Computing the result to high precision and then rounding to 3 digits gives f (1.53) = −7.61. 8. Why is computer or calculator evaluation of f (x) = (ex − 1 − x)/(1 − cos x) likely to be inaccurate when x > 0 is small? Use Taylor expansions of the numerator and denominator to derive an alternative formula for f (x) which is accurate for small x. 9. Find kxk2 and kxk∞ for x = (3, −4, 0, 1.5)T , (2, 1, −3, 4)T . 10. Verify that the function k · k1 defined on RN by kxk1 = N X |xi |, i=1 is a vector norm and evaluate kxk1 for the vectors in the previous question. 11. Find k · k∞ for the following matrices: µ ¶ µ ¶ 1 −1 1 1 , , 2 1 1 1 µ 12. Show that k · k defined below is a matrix norm. def kAk = n n X X i=1 j=1 |aij | . 10 15 0 1 ¶ . 11.915 Comp Maths I, Unit 1 18 13. Show that k · k defined below is not a matrix norm. def kAk = max |aij | . ij 14. Use Matlab to find the 1-, 2-, ∞- and Frobenius norms of the 100 × 100 Hilbert matrix. Hint: look at the commands hilb, norm. References [1] K. E. Atkinson. An Introduction to Numerical Analysis. Wiley, 2 edition, 1989. [2] R. L. Burden and J. D. Faires. Numerical Analysis. Brooks Cole, 7 edition, 2001. [3] G. Golub and C. F. van Loan. Matrix Computations. John Hopkins University Press, 3 edition, 1995. [4] N. J. Higham. Accuracy and Stability of Numerical Algorithms. Society for Industrial and Applied Mathematics, Philadelphia, PA, USA, 1996. [5] R. Skeel. Roundoff error and the Patriot missile. Society for Industrial and Applied Mathematics (SIAM) News, 25(4):11, 1992. c B Duncan & G J Lord, Department of Mathematics, September 2001 °D