Technical Report

Interpreting the Writing Subtest Scores

June 2007

Acknowledgments

This Research Report was prepared by the following individuals from Harcourt Assessment, Inc.:

Donald G. Meagher, EdD

Senior Research Director — Post-Secondary Education

Anli Lin, PhD

Senior Psychometrician — Post-Secondary Education

Christina D. Perez, MS, MEd

Senior Research Analyst — Post-Secondary Education

Rachel Price, MA

Research Assistant — Post-Secondary Education

Konstantin Tikhonov, BA

Editor — Post-Secondary Education

Copyright © 2007 by Harcourt Assessment, Inc.

Normative data copyright © 2007 by Harcourt Assessment, Inc.

All rights reserved.

No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopy, recording, or any information storage and retrieval system, without permission in writing from the publisher.

The PCAT and PSI logos are trademarks of Harcourt Assessment, Inc., registered in the United States of America and/or other jurisdictions.

PsychCorp is a trademark of Harcourt Assessment, Inc.

Printed in the United States of America.


PCAT Technical Report 2007—Writing

Table of Contents

Introduction
PCAT Test Blueprint for 2007–08 and Beyond
Development of the Writing Subtest
Interpreting PCAT Writing Scores
  How the Writing Subtest is Scored
  Conventions of Language Scores
  Problem Solving Scores
  How the Writing Scores Are Reported
  Using PCAT Writing Scores as Criteria for Admission
Technical Information for the Writing Field-Test
  Field-Test Sample
  Demographic Characteristics of the Field-Test Sample
Evidence of Reliability and Validity
Sample Essays
  Sample Essay 1
  Sample Essay 2
  Sample Essay 3
  Sample Essay 4
  Sample Essay 5
  Sample Essay 6


Figures

Figure 1  PCAT Test Blueprint
Figure 2  Instructions for the Writing Subtest
Figure 3  Conventions of Language Rubric
Figure 4  Problem Solving Rubric
Figure 5  Sample Official Transcript for 2007–08 and Beyond
Figure 6  Sample Personal Score Report for 2007–08 and Beyond

Tables

Table 1   Field-Test Sample Statistics
Table 2   Writing Score Point Distributions
Table 3   Writing Scores by Current Level of Education
Table 4   Writing Scores by Years of College Biology Completed
Table 5   Writing Scores by Years of College Chemistry Completed
Table 6   Writing Scores by Age Category
Table 7   Writing Scores by Sex
Table 8   Writing Scores by Linguistic Background
Table 9   Writing Scores by Ethnic Identification
Table 10  Writing Scores by Number of PCAT Attempts
Table 11  Percent of Writing Score Inter-Rater Agreements
Table 12  Correlations between the Writing Scores and Multiple-Choice Subtest Scaled Scores
Table 13  Sample Prompt Field-Test Score Statistics
Table 14  Sample Prompt Field-Test Score Point Distributions


Introduction

The American Association of Colleges of Pharmacy (AACP) and Harcourt Assessment, Inc., have collaborated in recent years on several enhancements to the Pharmacy College Admission Test (PCAT) intended to improve the test and thereby increase the relevance of the scores received by pharmacy schools.

Beginning with the June 2007 administration, a revised PCAT test blueprint will be introduced: the length of each multiple-choice subtest will be slightly reduced, and a second Writing subtest will be added so that new Writing prompts can be field-tested at each test administration. Together, these structural changes extend the overall testing time by only five minutes. The revised PCAT blueprint was reviewed and approved by the PCAT Advisory Panel in 2006. Each multiple-choice subtest includes items in the same proportions as the test blueprint originally approved by the PCAT Advisory Panel in 1999, with two exceptions: Biology, which includes a greater proportion of items on genetics, and Quantitative Ability, which no longer includes any geometry items but now includes new basic math items, a greater proportion of probability/statistics and pre-calculus items, and a smaller proportion of calculus items. The scaled score ranges and corresponding percentile ranks for the five multiple-choice subtests will remain unchanged, and the current PCAT Technical Manual (2004) can continue to be used to interpret these scores.

The Writing score is also changing. During the first two years that the Writing subtest was an operational component of the PCAT (2005–06 and 2006–07), a single score was reported that represented the examinee’s command of the conventions of language. Beginning with the June 2007 test administration, two Writing scores, Conventions of Language and Problem Solving, will be reported on a scale of 0.0–5.0. Along with these two scores, mean scores representing the averages of all Writing scores earned by examinees on a given test date will be reported for comparison purposes. As with the scores reported for the other sections of the PCAT, it is especially important that pharmacy schools consider the Writing scores only in combination with other information about candidates applying for admission.

The information in this Technical Report is intended for use by faculty, administrators, and professional staff in colleges of pharmacy and other institutions that need to interpret PCAT scores for admission purposes; it is not intended for use by students or by individuals planning to take the PCAT or to apply to pharmacy schools. This report contains the new PCAT test blueprint and the information necessary to interpret the Writing scores for 2007–08 and beyond, including descriptions of the structure and scoring of the Writing subtest, a sample Official Transcript, field-test data obtained for the problem solving Writing prompts to be used during the 2007–08 testing cycle, and sample essays representing each score point.

For any questions about the PCAT Writing subtest or about this Technical Report, please contact PSE Customer Relations by phone at 1-800-622-3231, or by email at scoring.services@harcourt.com.


PCAT Test Blueprint for 2007–08 and Beyond

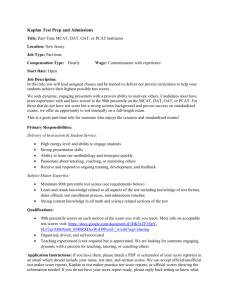

The test blueprint shown in Figure 1 is a general list of the contents of each of the seven subtests. Each of the five multiple-choice subtests contains 40 operational items that count toward examinees’ scores and 8 experimental items that are being field-tested for possible future use as operational items and do not count toward examinees’ scores. Similarly, one of the two Writing subtests is operational and the other is experimental. The content objectives tested on the PCAT were originally approved by the PCAT Advisory Panel in 1999; the shortening of the test, the addition of an experimental Writing section, and the revisions to the Biology and Quantitative Ability subtest objectives were approved by the Panel in 2006.

PCAT Subtest                   Operational Items    Experimental Items        Time Allowed
Part 1: Writing                1 prompt (either operational or experimental)  30 min.
Part 2: Verbal Ability         40                   8                         30 min.
  Analogies                    25                   4
  Sentence Completion          15                   4
Part 3: Biology                40                   8                         30 min.
  General Biology              25                   4–5
  Microbiology                 7–8                  1–2
  Anatomy & Physiology         7–8                  1–2
Part 4: Chemistry              40                   8                         30 min.
  General Chemistry            25                   5–6
  Organic Chemistry            15                   2–3
Rest Break                                                                    Variable
Part 5: Writing                1 prompt (either operational or experimental)  30 min.
Part 6: Reading Comprehension  40 (5 passages)      8 (1 passage)             50 min.
  Comprehension                12–13                2–3
  Analysis                     15                   3–4
  Evaluation                   12–13                2–3
Part 7: Quantitative Ability   40                   8                         40 min.
  Basic Math                   5–6                  1–2
  Algebra                      7–8                  1–2
  Probability & Statistics     7–8                  1–2
  Pre-Calculus                 9–10                 2–3
  Calculus                     9–10                 2–3
Total Test                     200 multiple-choice  40 multiple-choice        240 min. =
                               operational items    experimental items        4 hrs. +
                               + 1 writing prompt   + 1 writing prompt        Rest Break

Figure 1
PCAT Test Blueprint
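The totals in the last row of Figure 1 follow directly from the section rows. As a quick consistency check, the Python sketch below transcribes Figure 1 (the names SECTIONS and blueprint_totals are illustrative only) and confirms that the five multiple-choice subtests sum to 200 operational and 40 experimental items and that the timed sections sum to 240 minutes. The two Writing prompts, one operational and one experimental, are tallied separately, and the variable-length rest break is left out.

```python
# Rows transcribed from Figure 1: (part, operational MC items, experimental MC items, minutes).
# Writing parts carry no multiple-choice items; the variable-length rest break is omitted.
SECTIONS = [
    ("Part 1: Writing", 0, 0, 30),
    ("Part 2: Verbal Ability", 40, 8, 30),
    ("Part 3: Biology", 40, 8, 30),
    ("Part 4: Chemistry", 40, 8, 30),
    ("Part 5: Writing", 0, 0, 30),
    ("Part 6: Reading Comprehension", 40, 8, 50),
    ("Part 7: Quantitative Ability", 40, 8, 40),
]


def blueprint_totals(sections):
    """Return (operational items, experimental items, timed minutes)."""
    operational = sum(row[1] for row in sections)
    experimental = sum(row[2] for row in sections)
    minutes = sum(row[3] for row in sections)
    return operational, experimental, minutes


op, exp, mins = blueprint_totals(SECTIONS)
print(f"{op} operational + {exp} experimental multiple-choice items")
print(f"{mins} min. = {mins // 60} hrs. + rest break")
```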


Development of the Writing Subtest

In 1999, the AACP PCAT Advisory Panel requested that Harcourt Assessment develop critical thinking and essay components to add to the PCAT. Harcourt Assessment proposed combining the two into a single essay exam that would generate separate scores for critical thinking and for language conventions. The Panel approved this proposal, and in 2000, Harcourt Assessment piloted several writing prompts (topics) with groups of undergraduates. After reviewing the pilot test results, the PCAT Advisory Panel recommended that a Critical Thinking Essay component of the PCAT consist of argumentative and problem solving prompts.

During the 2004–05 testing cycle, eight writing prompts were field-tested. The field-test essays were scored by trained scorers at Harcourt Assessment for both conventions of language and critical thinking. Analyses of the field-test data showed that conventions of language scores were much more consistent from prompt to prompt than critical thinking scores. For this reason, a decision was made to introduce the Writing subtest as an operational component of the PCAT for the 2005–06 cycle, but to report scores only for conventions of language, on a scale of 0–5 (5 = highest possible earned score; 1 = lowest possible earned score; 0 = invalid). This single score was reported for all PCAT administrations from June 2005 through January 2007.

Further analyses of mean scores and score point distributions made clear that the inconsistencies in the original critical thinking scores were primarily due to the two different types of prompts: scores on the problem solving prompts were much more consistent with one another than scores on the argumentative prompts. One option considered for adjusting for these mean score differences was to equate all of the score data and report scaled scores and percentile ranks, as is done for the multiple-choice subtests. In April 2006, however, the AACP PCAT Advisory Panel and Harcourt Assessment decided that scaled scores and percentile ranks should not be reported for the Writing scores because doing so would not provide useful information for pharmacy schools. Instead, they decided to report each examinee’s earned score plus a mean score for the entire group of examinees, to be used for comparison purposes. Also, because of the critical thinking score discrepancies between the argumentative and problem solving prompts found during field-testing, they decided to administer only the problem solving prompts operationally, to continue reporting what would be called a Conventions of Language score, and to begin reporting a Problem Solving score, a name that seemed to describe this aspect of the assessment more accurately than critical thinking. The Panel also agreed to restructure the test to include a second Writing subtest that would provide a way to field-test new prompts for possible future operational use. These changes will be in effect for all PCAT administrations beginning with the June 2007 test date.

As a result of these decisions, the Writing subtest on all PCAT test forms will now require examinees to write essays in response to statements that present a problem needing a solution. Also, beginning with the June 2007 administration, each PCAT test form will include two Writing subtests, one operational and the other experimental. During the 2007–08 testing cycle, the four prompts used will be taken from the original problem solving prompts field-tested during 2004–05. The addition of an experimental Writing section will allow new prompts to be field-tested for use on test forms in 2008–09 and beyond.

For each Writing subtest, examinees are presented with a detailed set of instructions, followed by the prompt itself. Figure 2 shows the instructions for the problem solving prompts as they appear in each PCAT test booklet, along with the Writing prompt used during the June 2007 PCAT administration, which has been retired from subsequent operational use.

Directions: The topic on which you will write is stated below. The topic is in the form of a statement that presents a problem. Your essay should present a solution to the problem presented in the statement.

Your essay should be of sufficient length to adequately explain your solution to the problem presented in the statement. In your response, you should observe the following:

• Suggest a solution to the problem presented.
• State a clear thesis and present relevant support that draws upon credible references from your academic or personal experience, reading, or studies.
• Discuss and evaluate possible alternative solutions to the one you are suggesting.
• Use appropriate and conventional grammar, punctuation, usage, and style.
• Make sure your essay is legible so it can be easily read and scored.
• Allow time to proofread your essay and make sure that any revisions you make are indicated clearly.
• Once you are instructed to begin, you will have 30 minutes to plan and write your essay.

You may use the space on page 3 of your Answer Booklet to plan your essay (notes, outlines, or other pre-writing activities), and you will write your essay on pages 4–6 of your Answer Booklet.

Pages 4–6 of your Answer Booklet will be the only pages scored.

While planning and writing your essay, you may refer back to this page in your Test Booklet.

Writing Topic:

Discuss a solution to the problem of assuring national security in an open and free society that is based on individual civil rights and liberties.

WAIT: Do not begin planning or writing your essay in the Answer Booklet until instructed to do so.

Figure 2

Instructions for the Writing Subtest


Interpreting PCAT Writing Scores

Beginning with the June 2007 administration, every examinee taking the PCAT will receive Writing scores for Conventions of Language and Problem Solving. Each examinee’s Writing scores will be included on the personal Score Report sent to the examinee, on the Official Transcripts sent to the pharmacy schools and other institutions designated by the examinee, and on all data sent to the Pharmacy College Application Service (PharmCAS).

Along with the examinee’s own Writing scores, mean Conventions of Language and Problem Solving scores will also be reported, representing the averages of all Writing scores earned by examinees taking the PCAT on the same national test date. These mean scores are calculated immediately after each PCAT test date and are then listed on the personal Score Reports sent to examinees and on the Official Transcripts sent to recipient schools. The mean scores allow pharmacy schools to compare an examinee’s performance on the Writing subtest with the average performance of other examinees who wrote on the same prompt at the same time.
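As a rough illustration of how such test-date means could be computed, the Python sketch below groups hypothetical scored records by test date and averages each score type. The record layout, the date labels, the function name means_by_test_date, the exclusion of invalid (0.0) scores, and the rounding to one decimal place are all assumptions of this sketch; this report does not describe the actual calculation.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical scored records: (test date, Conventions of Language, Problem Solving).
# A score of 0.0 marks an invalid essay.
RECORDS = [
    ("June 2007", 4.0, 3.5),
    ("June 2007", 3.0, 3.0),
    ("June 2007", 0.0, 0.0),
    ("June 2007", 5.0, 4.5),
]


def _avg(scores):
    """Mean rounded to one decimal place, or None if there are no valid scores."""
    return round(mean(scores), 1) if scores else None


def means_by_test_date(records):
    """Return {test date: (mean CL score, mean PS score)}, skipping 0.0 (invalid) scores."""
    by_date = defaultdict(lambda: ([], []))
    for date, cl, ps in records:
        cl_scores, ps_scores = by_date[date]
        if cl > 0:
            cl_scores.append(cl)
        if ps > 0:
            ps_scores.append(ps)
    return {date: (_avg(cl), _avg(ps)) for date, (cl, ps) in by_date.items()}


print(means_by_test_date(RECORDS))  # {'June 2007': (4.0, 3.7)}
```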

How the Writing Subtest is Scored

Each essay is read at Harcourt Assessment by trained scorers who assign Writing scores ranging from 0.0 to 5.0, with 5.0 representing the highest earned score possible, 1.0 the lowest earned score possible, and 0.0 an invalid score. An essay is scored as 0.0 (invalid) only if it is left blank, is written in a foreign language, is written illegibly, or contains writing that indicates a refusal to write. An essay written on a topic other than the one assigned is also scored as 0.0 for Problem Solving, but it may still be assigned a Conventions of Language score (1.0–5.0) if it is otherwise valid.
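The validity rules above amount to a small decision table. The sketch below encodes them in Python; the Essay flags and the scoreable function are hypothetical names chosen for illustration, not fields of any actual scoring system.

```python
from dataclasses import dataclass


@dataclass
class Essay:
    """Hypothetical flags a scorer might record for one essay."""
    blank: bool = False
    foreign_language: bool = False
    illegible: bool = False
    refusal: bool = False
    off_topic: bool = False


def scoreable(essay: Essay) -> tuple[bool, bool]:
    """Return (Conventions of Language scoreable, Problem Solving scoreable).

    False means the essay receives 0.0 (invalid) for that score.
    """
    invalid = essay.blank or essay.foreign_language or essay.illegible or essay.refusal
    # An off-topic essay gets 0.0 for Problem Solving but can still earn a
    # Conventions of Language score if it is otherwise valid.
    return (not invalid, not invalid and not essay.off_topic)


print(scoreable(Essay(off_topic=True)))  # (True, False)
```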

The examinees’ handwritten essays are first scanned from the printed PCAT Answer Booklets and are then read and scored on computer monitors. Both the PCAT Answer Booklets and the scanned images of the essays are archived for one year after each test administration. All scorers are experienced at scoring essay exams and have been trained in advance using sample essays chosen as representative examples of each score point. These sample essays were written by examinees when the Writing prompt used in the current PCAT test administration was originally field-tested. Scorers for the Writing subtest are also guided by the Conventions of Language and Problem Solving rubrics, which describe the characteristics of each score point (see Figures 3 and 4). Specific procedures are followed to verify the assigned scores (see below).

Conventions of Language Scores

The following scoring rules are observed for assigning the Conventions of Language scores:

• Following the Conventions of Language rubric (see Figure 3), one scorer assigns the essay a score from 1.0 to 5.0 (or 0.0 if the essay is deemed invalid).
• A supervisor rereads 10% of the scored essays to ensure that the scorers are assigning scores consistent with their training and the Conventions of Language rubric.
• If the original score and the supervisor’s score differ by more than one score point (e.g., 3 and 5), a resolution leader reads the essay and determines the score on a case-by-case basis.
• When repeated inconsistencies are found between a scorer’s assigned scores and the supervisor’s 10% validation check, the scorer is retrained or dismissed. In such cases, all of the essays scored by that scorer may be rescored, if deemed necessary.
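The supervisor check can be sketched in Python as two small helpers: one draws a 10% validation sample and one flags score pairs that need a resolution leader. Both function names are hypothetical, and simple random sampling is an assumption, since the report does not say how the 10% of essays are selected. The resolution leader's case-by-case decision is not modeled.

```python
import random


def validation_sample(essay_ids, fraction=0.10, seed=None):
    """Pick the share of scored essays a supervisor rereads (10% per the rules).

    Simple random sampling is an assumption of this sketch.
    """
    if not essay_ids:
        return []
    rng = random.Random(seed)
    k = max(1, round(len(essay_ids) * fraction))
    return rng.sample(list(essay_ids), k)


def needs_resolution(scorer_score, supervisor_score):
    """True when the two scores differ by more than one score point."""
    return abs(scorer_score - supervisor_score) > 1


sample = validation_sample(list(range(1, 201)), seed=2007)
print(len(sample))             # 20
print(needs_resolution(3, 5))  # True
print(needs_resolution(3, 4))  # False
```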

Score Point 5: Superior
• The writer is in command of the conventions of language.
• The writer makes very few, if any, mistakes in sentence formation, usage, and mechanics.
• A number of these responses show some evidence of advanced techniques or successful “risk taking.”

Score Point 4: Efficient
• On the whole, the writer correctly applies the conventions of language.
• Some mistakes in sentence formation, usage, or mechanics are present. However, none of these errors are serious enough to interfere with the overall flow of the response or with its meaning.

Score Point 3: Adequate
• The writer is fairly successful in applying the conventions of language.
• Several mistakes in sentence formation, usage, or mechanics are present. The density of these errors may interfere with the overall flow of the response but does not interfere with its meaning.

Score Point 2: Limited
• The writer is marginally successful in applying the conventions of language.
• Patterns of mistakes in sentence formation, usage, and mechanics significantly detract from the presentation.
• At times, the meaning of the response may be impaired.

Score Point 1: Weak
• The writer’s achievement in applying the conventions of language is limited.
• Frequent and serious mistakes in sentence formation, usage, and mechanics make the response difficult to understand.

Figure 3

Conventions of Language Rubric

Problem Solving Scores

The following scoring rules are observed for assigning the Problem Solving scores:

• Following the Problem Solving rubric (see Figure 4), each of two scorers assigns the essay a score from 1.0 to 5.0 (or 0.0 if the essay is deemed invalid).
• When the two scores are the same (e.g., 3 and 3) or differ by no more than one score point (e.g., 3 and 4), the two scores are averaged, resulting in a score to one decimal place (e.g., 3.5).
• When the two scores differ by more than one score point (e.g., 3 and 5), a resolution leader reads the essay and assigns a score. The resolution score is then averaged with the higher of the two original scores to produce the final score (e.g., an original high score of 5 and a resolution score of 4 yield a final score of 4.5).
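The Problem Solving rules above can be written as a single Python function. This is a minimal sketch assuming whole-number scores of 1–5 from each scorer; the function name problem_solving_score is hypothetical, and invalid (0.0) essays are outside its scope.

```python
from typing import Optional


def problem_solving_score(first: int, second: int,
                          resolution: Optional[int] = None) -> float:
    """Combine two scorers' Problem Solving scores (1-5) into a final score.

    Scores that match or differ by one point are averaged. A larger gap
    requires a resolution leader's score, which is averaged with the higher
    of the two original scores.
    """
    for s in (first, second):
        if not 1 <= s <= 5:
            raise ValueError("scores must be between 1 and 5")
    if abs(first - second) <= 1:
        return (first + second) / 2
    if resolution is None or not 1 <= resolution <= 5:
        raise ValueError("a resolution score of 1-5 is required when scores differ by more than one point")
    return (max(first, second) + resolution) / 2


print(problem_solving_score(3, 4))                # 3.5
print(problem_solving_score(3, 5, resolution=4))  # 4.5
```

The second call reproduces the worked example in the rules. Note that the resolution score is averaged with the higher original score only, not with both original scores.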


Score Point 5: Superior
• Taking great care throughout to avoid fallacious reasoning of all kinds, the writer develops a powerful, sophisticated argument embodying important principles of effective composition.
• The solution discussed is clearly related to the problem and is developed in sufficient detail with relevant, convincing support provided (facts, examples, anecdotes).
• At appropriate points, the main tenets of the problem and the solution are discussed and explained.
• Multiple possible solutions are adequately discussed and evaluated.
• The response is organized logically (and sometimes ingeniously) from beginning to end.

Score Point 4: Efficient
• Despite possible bits and pieces of questionable reasoning, the response is a persuasive essay showing strong evidence of effective composition.
• The solution discussed is clearly related to the problem and is developed with relevant, appropriate support provided with some degree of depth.
• The main tenets of the problem and the solution are discussed and explained.
• Multiple possible solutions are at least mentioned, with some attempt at evaluation.
• For the most part, the organization is logical, although minor lapses may occur.

Score Point 3: Adequate
• This response is fairly successful in using important principles of effective composition.
• Though the presentation may remain too general to be convincing, the discussion of the problem and solution is clear.
• The solution discussed is clearly related to the problem, and most of the support presented is appropriate and relevant, but the response lacks the detailed, in-depth support characteristic of the higher score points.
• The writing may progress logically enough but may be loosely organized; in such cases, the writer may digress from the organizational plan or offer unnecessary redundancies, thus making the presentation less straightforward and compromising its effect.

Score Point 2: Limited
• The writer may seem more concerned with self-expression than with meeting the demands of an abstract task.
• A solution related to the problem is discussed, though it may be either implicit or, if explicit, not clearly stated.
• Support is sketchy and, at times, interrupted with redundancies, digressions, irrelevancies, and/or conditions/qualifications not clearly related to the problem.
• Organization may be rather haphazard. In such instances, this loose structuring of ideas weakens the overall flow (and, hence, the power) of the discussion.

Score Point 1: Weak
• The response does not successfully embody important principles of effective composition.
• It is unclear how the solution presented relates to the problem.
• If a solution can be ascertained, the support is either fragmentary and unconvincing or is a combination of material that does not contribute to the presentation (contradictions, caveats, digressions, redundancies, and outright irrelevancies).
• Chaotic organization may make it hard to follow the logic of the presentation.

Figure 4

Problem Solving Rubric


How the Writing Scores Are Reported

Writing scores for each examinee and the mean Writing scores for the test date are reported on Official Transcripts for recipient schools and on a personal Score Report for the examinee, as shown in Figures 5 and 6.

Figure 5

Sample Official Transcript for 2007–08 and Beyond


Figure 6

Sample Personal Score Report for 2007–08 and Beyond

Using PCAT Writing Scores as Criteria for Admission

When used appropriately, the PCAT Writing scores provide valuable information for the admissions process: they can help identify students’ written communication skills and can guide placement decisions. Because the ability to write organized, coherent reports and correspondence is a common requirement of professional life, it is important to assess a writing sample produced under time constraints, and the results of that assessment are important for college admission committees to consider.

The Writing scores should be interpreted with reference to the mean scores listed on the Official Transcript and to the score point descriptions in the scoring rubrics provided in this Technical Report. You may also find it useful to refer to the sample essays for each score point included in the final section of this Report.

It is important to remember that the PCAT Writing scores must always be considered along with all other information available to you when considering a candidate for admission, and that rigidly observed cut-off scores may inadvertently exclude otherwise worthy candidates.

For pharmacy schools that already require candidates to submit an essay or other written statement, the PCAT Writing scores may be useful as an indication of a candidate’s timed writing ability. In such cases, the Writing scores can be compared with the other writing samples submitted by the candidate.

When used with other information available to admissions committees, PCAT Writing scores are a valuable tool for evaluating applicants to pharmacy programs. Each school of pharmacy is responsible for determining how it uses PCAT Writing scores. Neither the AACP nor Harcourt Assessment establishes a passing score for any of the PCAT Writing subtests or for the PCAT as a whole.


Technical Information for the Writing Field-Test

The data in this section represent all valid scores assigned during the 2004–05 Writing field-test to essays written on the four problem solving prompts. Of all the field-test essays written during the 2004–05 testing cycle, samples of about 1,500 essays were scored for each prompt, a sufficient number for analysis purposes. When these essays were scored in 2004–05, both Writing scores were assigned by a single scorer, rather than by one scorer for Conventions of Language and two scorers for Problem Solving, as in the current procedure. In addition, field-test scorers did not have the benefit of representative examples of each score point to guide them, as scorers of operational essays now do. As a result of the more refined scoring made possible by the use of representative essays as scoring guides, Conventions of Language scores assigned during 2005–06 and 2006–07 tended to be slightly lower (by about 0.14 points, on average) than scores assigned during the field-test. Nevertheless, the following field-test data are useful for comparing relative average performance on the two Writing scores and between Writing scores for different demographic groups.

Field-Test Sample

Table 1 shows the mean scores (M), standard deviations (SD), and n-counts (n) for examinees who earned scores on the problem solving prompts during the 2004–05 field-testing.

Table 1
Field-Test Sample Statistics

Stat.    Conventions of Language    Problem Solving
M        3.61                       3.41
SD       0.67                       0.75
n        6,244                      6,244

Table 2 shows the Writing score point distributions (1–5) by number (Freq.) and percentage (%) for examinees from the field-test sample.

Table 2
Writing Score Point Distributions

                            Score Points
Stat.                       1        2        3        4        5
Conventions of Language
  Freq.                     38       224      2,175    3,479    328
  %                         0.61     3.59     34.83    55.72    5.25
Problem Solving
  Freq.                     74       525      2,692    2,686    267
  %                         1.19     8.41     43.11    43.02    4.28
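Because each field-test essay received a single whole-number score for each Writing score, the summary statistics in Table 1 can be recovered from the frequencies in Table 2. The Python sketch below (the names FREQ and summarize are illustrative) does this. It uses the population standard deviation, which is an assumption, since the report does not say which form was used; the sample form rounds to the same values here.

```python
from math import sqrt

# Score-point frequencies (points 1-5) transcribed from Table 2.
FREQ = {
    "Conventions of Language": [38, 224, 2175, 3479, 328],
    "Problem Solving": [74, 525, 2692, 2686, 267],
}


def summarize(freqs):
    """Return n, mean, population SD, and percentage at each score point."""
    n = sum(freqs)
    points = range(1, len(freqs) + 1)
    m = sum(p * f for p, f in zip(points, freqs)) / n
    var = sum(f * (p - m) ** 2 for p, f in zip(points, freqs)) / n
    pct = [round(100 * f / n, 2) for f in freqs]
    return n, round(m, 2), round(sqrt(var), 2), pct


for name, freqs in FREQ.items():
    print(name, summarize(freqs))
```

Both rows reproduce n = 6,244 and the M and SD values in Table 1, as well as the percentages in Table 2.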


Demographic Characteristics of the Field-Test Sample

Tables 3–10 provide mean scores (M), standard deviations (SD), and n-counts (n) for the Writing scores earned by examinees in the field-test sample, broken down by the demographic characteristics indicated on examinees’ PCAT applications. Because most of this information is self-reported, some data are missing in each category.

Table 3 shows scores by examinees’ current educational status, defined as the year of current enrollment or, if not currently enrolled, the last year completed. Although over 97% of the examinees had completed more than one year of college, the highest-scoring examinees were those in their first year of college (a relatively small group, n = 93), especially for Conventions of Language. One possible explanation is that these examinees were taking their English and other liberal arts requirements around the time they took the PCAT, whereas those who took the test later were further removed from writing-intensive liberal arts courses. These results may also reflect the ambition of high-achieving students who apply to pharmacy schools early in their college careers. However, these score differences are less pronounced for Problem Solving, with fourth-year students and college graduates averaging nearly the same as first-year students. This may be because the Problem Solving score depends more on organizational and thinking skills, whereas the Conventions of Language score depends more on the mechanics of language use.

Table 3

Writing Scores by Current Level of Education

Conventions of Language
      Years of College Currently Enrolled/Completed
Stat.   High School  High School Grad.       1       2       3       4   Grad.
M              3.43               3.71    3.80    3.61    3.58    3.62    3.65
SD             0.98               0.49    0.60    0.66    0.67    0.67    0.69
n                40                  7      93   1,577   1,446   1,125   1,092

Problem Solving
      Years of College Currently Enrolled/Completed
Stat.   High School  High School Grad.       1       2       3       4   Grad.
M              3.23               3.43    3.47    3.39    3.36    3.43    3.46
SD             0.89               0.53    0.73    0.74    0.75    0.76    0.77
n                40                  7      93   1,577   1,446   1,125   1,092
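The "over 97%" figure can be checked against the n-counts in Table 3. The short Python sketch below assumes that the figure counts every examinee at level 2 or higher (years 2, 3, and 4 plus graduates), which is the grouping that reproduces it; the denominator includes only examinees who reported an education level. The dictionary name N_BY_LEVEL is illustrative.

```python
# n-counts from Table 3, keyed by current level of education.
N_BY_LEVEL = {
    "High School": 40,
    "High School Grad.": 7,
    "1": 93,
    "2": 1577,
    "3": 1446,
    "4": 1125,
    "Grad.": 1092,
}

# Assumed grouping: level 2 or higher counts as "more than one year."
beyond_first_year = sum(N_BY_LEVEL[k] for k in ("2", "3", "4", "Grad."))
total = sum(N_BY_LEVEL.values())  # examinees who reported a level
share = 100 * beyond_first_year / total
print(f"{beyond_first_year:,} of {total:,} examinees = {share:.1f}%")
```

The result is 5,240 of 5,380 examinees, or 97.4%. The 5,380 examinees in the table are fewer than the 6,244 in the full field-test sample, reflecting the missing self-reported data noted earlier.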

Tables 4 and 5 report mean scores by the number of years of college-level biology and chemistry coursework completed. As shown in Table 4, a large majority of the examinees had completed more than one year of college biology, but the mean scores for this group are slightly lower than those for examinees who had completed fewer years of this subject. Considering that the written communication skills involved in the Writing subtest may be quite different from the skills developed in college biology courses, these results are not entirely surprising.

Table 4

Writing Scores by Years of College Biology Completed

Conventions of Language
Stat.             0  Less than 1            1  More than 1
M              3.69         3.64         3.66         3.60
SD             0.60         0.64         0.64         0.68
n               123          152          903        4,125

Problem Solving
Stat.             0  Less than 1            1  More than 1
M              3.46         3.48         3.42         3.40
SD             0.74         0.73         0.74         0.75
n               123          152          903        4,125

As with biology, Table 5 shows that a large majority of examinees had completed more than one year of college chemistry. However, unlike the biology data in Table 4, the data in Table 5 show different performance patterns for the two Writing scores. Examinees who had completed fewer chemistry courses averaged slightly higher on Conventions of Language, whereas among examinees with at least some chemistry coursework, those who had completed more averaged slightly higher on Problem Solving (the small group with no chemistry coursework, n = 23, averaged highest on both scores). These score inconsistencies may reflect the fact that chemistry coursework involves different abilities than writing an essay.

Table 5

Writing Scores by Years of College Chemistry Completed

Conventions of Language
Stat.             0  Less than 1            1  More than 1
M              3.96         3.74         3.65         3.61
SD             0.37         0.57         0.61         0.67
n                23           46          404        4,841

Problem Solving
Stat.             0  Less than 1            1  More than 1
M              3.43         3.33         3.39         3.41
SD             0.66         0.67         0.72         0.76
n                23           46          404        4,841

Table 6 provides score information according to the examinees’ age at the time of testing. Although examinees aged 21 and older made up the largest proportion, the data show that younger examinees tended to earn higher Writing scores (with the exception of those aged 18 and younger for Problem Solving). These results closely resemble the Table 3 data on examinees’ current level of education and may have similar explanations.

Table 6

Writing Scores by Age Category

Conventions of Language
Stat.    ≤ 18      19      20   21–23    ≥ 24
M        3.63    3.72    3.67    3.60    3.53
SD       0.66    0.60    0.63    0.67    0.73
n         118     992   1,233   2,179   1,696

Problem Solving
Stat.    ≤ 18      19      20   21–23    ≥ 24
M        3.28    3.52    3.46    3.41    3.32
SD       0.84    0.69    0.72    0.75    0.79
n         118     992   1,233   2,179   1,696

Table 7 provides mean scores according to examinees’ sex. The field-test sample consisted of nearly twice as many females as males, and females averaged higher scores than male examinees. Interestingly, these results are the opposite of the results seen for the 1998–2003 normative sample, which showed males outperforming females on each multiple-choice subtest (see the 2004 edition of the PCAT Technical Manual). For this reason, the Writing scores should provide a useful balance to the multiple-choice scores for female candidates to pharmacy schools.

Table 7

Writing Scores by Sex

Conventions of Language
Stat.    Female     Male
M          3.65     3.53
SD         0.65     0.68
n         3,570    1,837

Problem Solving
Stat.    Female     Male
M          3.43     3.34
SD         0.75     0.76
n         3,570    1,837

Table 8 provides mean scores according to examinees’ linguistic background, defined as the dominant language spoken at home. The PCAT registration includes the three categories for linguistic background shown in the table. Examinees in the Spanish and Other categories scored considerably lower than those claiming English as their dominant language. Considering the language focus of the Writing subtest, these results are not surprising.

Table 8

Writing Scores by Linguistic Background

Conventions of Language
Stat.   English  Spanish    Other
M          3.69     3.18     3.32
SD         0.63     0.70     0.73
n         3,976      100      843

Problem Solving
Stat.   English  Spanish    Other
M          3.46     3.12     3.16
SD         0.73     0.71     0.78
n         3,976      100      843

Table 9 provides mean scores according to ethnic identification. The PCAT registration currently allows candidates to select one ethnic identity from among the same categories used by the U.S. Census. The largest number of examinees identified themselves as White, and these examinees tended to earn the highest Writing scores (the one exception is Conventions of Language for examinees identifying themselves as Native Hawaiian/Other Pacific Islander, a group whose n-count of 21 is too small to allow definite conclusions). Though not an uncommon result on standardized tests, these lower performances for non-White groups remain a concern and underscore the need for schools of pharmacy to consider many sources of information about a candidate for admission other than test scores.

Table 9

Writing Scores by Ethnic Identification

                                        Conventions of Language      Problem Solving
Ethnic Identification                        M    SD       n         M    SD       n
American Indian/Alaskan Native            3.63  0.64      49      3.37  0.67      49
Asian                                     3.42  0.74   1,176      3.24  0.80   1,176
African American/Black                    3.49  0.66     506      3.29  0.76     506
Hispanic/Latino                           3.42  0.70     252      3.27  0.77     252
Native Hawaiian/Other Pacific Islander    3.76  0.62      21      3.38  0.80      21
White                                     3.73  0.60   2,870      3.50  0.71   2,870
Other                                     3.49  0.71     225      3.34  0.77     225

Table 10 provides score information about examinees who were taking the PCAT for the first time and those who had taken the test previously. First-time examinees made up the largest proportion of the field-test sample and tended to score higher on the Writing subtest than those who had taken it more than once. These results are consistent with the performance of the normative sample as reported in the 2004 edition of the PCAT Technical Manual, which shows that examinees who had taken the PCAT only once averaged higher on all multiple-choice subtests.

Table 10

Writing Scores by Number of PCAT Attempts

Conventions of Language
Stat.   1st Attempt  2 or More Attempts
M              3.63                3.59
SD             0.66                0.69
n             3,225               2,085

Problem Solving
Stat.   1st Attempt  2 or More Attempts
M              3.43                3.36
SD             0.74                0.77
n             3,225               2,085

Evidence of Reliability and Validity

The PCAT Writing subtest is intended to measure a candidate’s composition skills and problem-solving ability, and it is important to confirm that the assessment performs as expected. Table 11 presents evidence of the reliability of the Writing subtest in the form of inter-rater reliability: the degree of agreement between scores assigned to the same essay by different scorers. Table 12 presents evidence supporting the validity of reporting separate Writing scores by examining correlations between the Writing scores and the PCAT multiple-choice subtest scaled scores, as well as the correlation between the two Writing scores. The data in Tables 11 and 12 reflect the performances of all PCAT examinees in the field-test sample (n = 6,244).

Table 11 shows the agreement between the Conventions of Language and Problem Solving scores assigned by trained scorers and the scores assigned in the 10% validation check. Though only the Conventions of Language scores are currently validated in this way (see pages 5–7 of this Report for explanations of how the scores are assigned and validated), the Problem Solving scores were similarly validated during the field test. The data in Table 11 show the percentage of essays for which a trained scorer’s score and a supervisor’s check score differed by 0 points, by 1 point, and by either 0 or 1 point. For the Conventions of Language scores, 55% had a 0-point discrepancy, 41% had a 1-point discrepancy, and 96% had either a 0-point or a 1-point discrepancy. For the Problem Solving scores, 58% had a 0-point discrepancy, 38% had a 1-point discrepancy, and 96% had either a 0-point or a 1-point discrepancy. That 96% of the score pairs for each Writing score differed by no more than 1 point suggests a high degree of consistency in the scoring of the essays.

Table 11

Percent of Writing Score Inter-Rater Agreements

                         Percent with   Percent with   Percent with
Scores                   0 Point        1 Point        0–1 Point
                         Discrepancy    Discrepancy    Discrepancy
Conventions of Language  55             41             96
Problem Solving          58             38             96
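The agreement rates in Table 11 come from comparing each trained scorer's score with the supervisor's check score for the same essay. The Python sketch below shows that tabulation. The score pairs are invented for illustration and are not PCAT data, and agreement_rates is a hypothetical helper name. The 0–1 point rate is simply the sum of the other two rates, as in Table 11 (55 + 41 = 96 and 58 + 38 = 96).

```python
from collections import Counter

# Hypothetical (trained scorer, supervisor check) score pairs; not PCAT data.
pairs = [(4, 4), (3, 4), (4, 4), (5, 3), (3, 3),
         (2, 3), (4, 3), (3, 3), (4, 4), (1, 3)]


def agreement_rates(score_pairs):
    """Percent of pairs with 0-point, 1-point, and 0-1 point discrepancies."""
    gaps = Counter(abs(a - b) for a, b in score_pairs)
    n = len(score_pairs)
    exact = 100 * gaps[0] / n
    adjacent = 100 * gaps[1] / n
    return exact, adjacent, exact + adjacent


exact, adjacent, within_one = agreement_rates(pairs)
print(f"0-point: {exact:.0f}%  1-point: {adjacent:.0f}%  0-1 point: {within_one:.0f}%")
```

For these ten made-up pairs, 5 agree exactly and 3 differ by 1 point, giving rates of 50%, 30%, and 80%.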

Table 12 shows the relationships between the Writing scores and the PCAT multiple-choice subtest scores and between the two Writing scores. Each relationship is displayed as a correlation coefficient (r), a measure of the strength and direction of the linear relationship between two scores. Regarding correlations between the two Writing scores and the five multiple-choice subtest scores, it is not surprising that the Writing scores correlate most highly with the scores for the two other language-oriented subtests: 0.36 between Verbal Ability and Conventions of Language, 0.28 between Verbal Ability and Problem Solving, 0.35 between Reading Comprehension and Conventions of Language, and 0.29 between Reading Comprehension and Problem Solving. It is also no surprise that the lowest correlations are between the Writing scores and the Quantitative Ability scores (0.14 for both) and the Chemistry scores (0.12 and 0.14). Interestingly, the correlations between the Composite score and the Writing scores (0.30 and 0.27) are similar to the correlations between the Writing scores and the Verbal Ability and Reading Comprehension scores.
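The Note to Table 12 identifies these coefficients as Pearson product-moment correlations. For readers who want to see the computation, the following Python sketch implements the standard formula, r = Sxy / sqrt(Sxx · Syy), using deviations from each variable's mean. The six pairs of scores are hypothetical values chosen only to exercise the function, and pearson_r is an illustrative name.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for six examinees: a Writing score (1-5) and a
# multiple-choice scaled score. These values are invented for illustration.
writing = [3, 4, 4, 2, 5, 3]
scaled = [410, 430, 395, 380, 440, 420]
print(f"r = {pearson_r(writing, scaled):.2f}")
```

For these made-up values the function returns approximately 0.75; perfectly linear data such as [1, 2, 3] and [2, 4, 6] return exactly 1.0.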

Overall, the low to moderate correlations between the Writing and the multiple-choice subtest scores shown in Table 12 (0.12–0.36) suggest that the Writing scores measure skills distinct from those measured by the other subtests. Indeed, when the highest correlation coefficient (r = 0.36) is squared, the resulting r² is 0.13, suggesting that the Conventions of Language and Verbal Ability scores share only about 13% of their variance. In addition, all of the correlations between the Writing and multiple-choice scores shown in Table 12 are lower than the correlations found between the multiple-choice subtests themselves, as reported in the 2004 edition of the PCAT Technical Manual, which also suggests that the Writing scores provide different information about examinees than the multiple-choice subtest scores do.
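The squaring step applies to every coefficient in Table 12. The Python sketch below applies it to the tabled values; the dictionary name TABLE_12 and its layout are illustrative choices, and the percentages are simply 100 × r². Because r is rounded to two decimals in Table 12, the resulting r² values are approximate.

```python
# Correlations with the Writing scores, taken from Table 12.
TABLE_12 = {
    "Conventions of Language": {
        "Verb.": 0.36, "Bio.": 0.20, "Chem.": 0.12, "Read.": 0.35,
        "Quant.": 0.14, "Comp.": 0.30, "Prob. Solv.": 0.56,
    },
    "Problem Solving": {
        "Verb.": 0.28, "Bio.": 0.18, "Chem.": 0.14, "Read.": 0.29,
        "Quant.": 0.14, "Comp.": 0.27, "Conv. Lang.": 0.56,
    },
}

for writing_score, row in TABLE_12.items():
    for subtest, r in row.items():
        # r squared is the proportion of variance the two scores share.
        print(f"{writing_score} vs {subtest}: r = {r:.2f}, "
              f"r^2 = {r * r:.2f} ({100 * r * r:.0f}%)")
```

The largest r² involving a multiple-choice subtest is 0.13 (Verbal Ability with Conventions of Language), while the two Writing scores share about 31% of their variance (0.56² ≈ 0.31).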

With regard to the correlation between the Conventions of Language and Problem Solving scores, Table 12 suggests that the two scores are more similar to each other (r = 0.56) than to any of the other PCAT subtests. This is not surprising, considering that both scores are derived from a single essay. Nevertheless, this correlation is lower than the correlations between some of the multiple-choice subtest scores as reported in the 2004 edition of the PCAT Technical Manual. While a correlation of 0.56 is moderately strong, it is low enough to suggest that the two scores assess characteristics that are different enough from each other to justify reporting them separately.

Table 12

Correlations between the Writing Scores and Multiple-Choice Subtest Scaled Scores

                           PCAT Subtest                              Writing
Writing Scores             Verb.   Bio.  Chem.  Read. Quant.  Comp.  Conv. Lang.  Prob. Solv.
Conventions of Language     0.36   0.20   0.12   0.35   0.14   0.30            —         0.56
Problem Solving             0.28   0.18   0.14   0.29   0.14   0.27         0.56            —

Note. Verb. = Verbal Ability; Bio. = Biology; Chem. = Chemistry; Read. = Reading Comprehension; Quant. = Quantitative Ability; Comp. = Composite; Conv. Lang. = Conventions of Language; Prob. Solv. = Problem Solving. Pearson product-moment correlation coefficient used; all correlations significant at the 0.0001 level (2-tailed).
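The significance claim in the Note can be checked with the usual t test for a correlation coefficient, t = r · sqrt(n − 2) / sqrt(1 − r²), with n − 2 degrees of freedom. The Python sketch below applies it to the smallest coefficient in Table 12 (r = 0.12) with n = 6,244. To stay within the standard library, it approximates the two-tailed p-value with the standard normal distribution, an approximation that is very close at roughly 6,200 degrees of freedom; a statistics package's t distribution would give the exact value. The function names are illustrative.

```python
from math import erfc, sqrt

N = 6244  # field-test sample size for Tables 11 and 12


def t_statistic(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


def approx_two_tailed_p(t):
    """Two-tailed p-value from the standard normal approximation to t.

    Reasonable only for large degrees of freedom, as here (n - 2 = 6,242).
    """
    return erfc(abs(t) / sqrt(2))


t = t_statistic(0.12, N)  # smallest correlation in Table 12
p = approx_two_tailed_p(t)
print(f"t = {t:.2f}, approximate two-tailed p = {p:.1e}")
print("significant at 0.0001:", p < 0.0001)
```

For r = 0.12, t is about 9.55, far beyond the value of roughly 3.9 that corresponds to a two-tailed p of 0.0001, so even the weakest correlation in the table is significant at that level.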

Sample Essays

The six essays that follow represent examples of each earned score point (1.0, 2.0, 3.0, 4.0, and 5.0) for Conventions of Language and Problem Solving. Each sample essay is shown with its assigned Conventions of Language and Problem Solving scores, along with brief summary notes (in parentheses following each score) that explain what the score point indicates.

The sample essays were all written in response to the following prompt:

Discuss a solution to the problem of assuring national security in an open and free society that is based on individual civil rights and liberties.

This prompt was field tested in January 2005 and used operationally in June 2007, but it will never again be used on a PCAT test form. For reference, Table 13 shows the field-test mean scores (M), standard deviations (SD), and n-counts (n) for the sample prompt, and Table 14 shows the field-test score point distributions obtained for this prompt.

Table 13

Sample Prompt Field-Test Score Statistics

Stat.   Conventions of Language   Problem Solving
M       3.62                      3.30
SD      0.65                      0.76
n       1,579                     1,579

Table 14

Sample Prompt Field-Test Score Point Distributions

Conventions of Language Score Points
Stat.        1       2       3       4       5
Freq.        8      55     533     910      73
%         0.51    3.48   33.76   57.63    4.62

Problem Solving Score Points
Stat.        1       2       3       4       5
Freq.       30     161     730     615      43
%         1.90   10.20   46.23   38.95    2.72

The sample essays that follow are based on examinees’ handwritten essays that have been transcribed for this Technical Report and then modified enough that their authors cannot be identified. Please keep in mind while reviewing these sample essays, and indeed when considering any examinee’s Writing scores, that examinees who write a PCAT essay are essentially composing a rough draft under the pressure of a high-stakes testing situation. With a 30-minute time limit to plan and write the essay, examinees have very little time to proofread and make any desired revisions. The test allows neither the time nor the Answer Booklet space for examinees to rewrite an essay entirely, so corrections are typically made by erasing and rewriting words or phrases, or by crossing out text and writing edits above the original text or in the margins. Though the sample essays that follow do not include any editing marks, they do include errors in spelling, sentence structure, and word usage that illustrate the types of errors examinees commonly make.

Sample Essay 1

Conventions of Language Score: 1.0 (Weak—very weak skills, many serious errors, difficult to understand)

Problem Solving Score: 1.0 (Weak—ineffective organization, development, and presentation)

A lot of social organization problems are presence in free society. A world lacking order is going to degenerated. To help order in the open and free society it is specially crucial to have a national security. With no honesty voluntary helping or self-consciousness of human actions. None of this could be possibility this ways.

Sample Essay 2

Conventions of Language Score: 2.0 (Limited—marginal skills, patterns of errors, some impairment of meaning)

Problem Solving Score: 1.0 (Weak—disorganized, unclear development, unconvincing presentation)

A significant challenge in today’s open and free society is guaranteeing national security. Three years have passed since the shocking 9/11 terror incidents in the States and people are still fearful. Many are afraid that the States may be hit again.

I learned something new the other day. I went to the mall with a friend that I met in one of my classes to run some errands. When we got just inside the mall she told me that she was shocked that no one check her purse or search her body before entering. I looked at her confused. Then she told me that in Isriel the security check in everyone’s bags and search their bodies before entering the mall. I told her that our security do that too, just when you are leaving the mall. After talking with her more she explained to me that Isriel has been dealing with security issues for a great deal longer time than States. To avoid this from occurring in States, like already have in Isriel, action needs to be taken quikly.

Sample Essay 3

Conventions of Language Score: 3.0 (Adequate—satisfactory skills, several errors, clear beginning, middle, and end)

Problem Solving Score: 2.0 (Limited—unclear organization, incomplete development, ineffective presentation)

The thought of national security being enforced would bring mixed views to society. While some individuals would view it positively, others would be skeptical. Freedom, individual rights, & privacy are all concerns that are likely to arise.

Of these concerns, privacy is sure to bring the most controversy. At the heart of the new national security operations there would likely be survelance cameras & phone tapping. Both of which are currently allowed due to the patriot act which lets the government look through information on anyone. Sadly due to this someone is aware of who we are & what we do each day. Where is the privacy in that?

In order to guard our country with the highest level of security all citizens would need to temporarily give up their own freedom. No one would be able to just go somewhere spur-of-the moment. Everyone, young & old, would all have to follow the same rules. Individuals could even be regarded as a terrorist if they do not follow their given schedules.

The individual rights that were once given as a gift would be stripped away, all due to a lack of trust. Individuality would be gone. Finally some sort of tyranny would come with a socialist viewpoint in its place, & a since of security is all we would get. Who wants that to happen? Not me, and hopefully not you.

Is national security this important? For us to give up our most treasured rights of privacy, freedom, and individuality. Let us hope that we are not moving this way as a nation. Regrettably, we are falling with no one in sight to catch us.

Sample Essay 4

Conventions of Language Score: 5.0 (Superior—impressive skills, minimal errors, clear structure)

Problem Solving Score: 3.0 (Adequate—loose organization, appropriate development, adequate but simplistic presentation)

In this time of uncertainty, the concern for national security must be addressed responsibly. Fortunately, there are several promising solutions that authorities can consider. Prior to adopting a specific plan, authorities must make sure that any decisions are consistent with the needs, views, and concerns as expressed by the public. Citizens must be allowed to freely express their views and to vote on which solutions they consider to be best for their country.

One possible solution that should be considered involves consulting with the leaders of other countries to resolve any differences. Each national leader should inform the others of specific policies and regulations followed in each country. Doing so could bring about coalitions between countries in order to safeguard all involved against potential enemies. Alliances of this sort would represent important steps toward ensuring our nation’s security.

After assuring the cooperation of international allies, we can attempt to secure our nation through internal means by placing physical boundaries, such as electric fences and security devices, on all the borders. In order to stop people from entering this country without appropriate reasons, we could put gated entrances with security patrols at the locations where individuals most frequently attempt to cross the borders. In this way, strict entry policies can be adopted.

Another internal measure that should be explored involves enacting laws that restrict the private use of weapons and that enforce effective penalties on individuals who violate these regulations. Accurate and up-to-date records should be kept on all citizens that own and use weapons. Weapons laws should be firm, and anyone who uses weapons to threaten the sense of security held dear by law-abiding citizens should be disciplined.

With the rights and wishes of citizens in mind, and after making alliances with other countries, it is definitely possible to secure our nation.

Sample Essay 5

Conventions of Language Score: 4.0 (Efficient—solid skills, some errors, effective flow)

Problem Solving Score: 4.0 (Efficient—solid organization, clear development, persuasive presentation)

The growing threat of terrorism is causing national security to be an issue in several countries. Ways to solve this problem are being discussed and modified by all. One solution is to initiate a community strengthening program in addition to having a reasonably paid volunteer army that is committed to national security. This solution appears promising in a free nation that encourages individual rights and liberties.

Within each community the leader would need to create a program that would meet the requsts of everyone involved. In order to do this, a meeting would need to be held to establish an agreed upon goal and to start to develop a bond. With each of these programs being locally operated within each community it would allow citizens to start to from bonds with one another. This would then help build a trusting relationship and create a responsibility to look after each other in trying times. A united communty that is built on trust would continue to provide support to their government due to their ability to act freely. Each citizen would then feel pride for their nation which will make them want to continue to protect everyone around them from harm.

In getting the nation to participate by strengthening all communities, every citizen should feel as though they can relate with the person next to them. This should stop people from seeing one another in categories and instead as people they can depend on and wish to guard from all harm. A community strengthening program of this type would allow every citizen the right to choose to protect themselves and their neighbors in the community around them. This would eventually strengthen a person’s belief in civic rights which consist of their liberties in an open society.

Another solution to the national security issue would be to create an improved national military that people can take pride in. From the locally established communities highly paid army units could be recruited. Since all of the members in the army unit would come from the same community they would all have the same values and beliefs. Everyone in these army units would feel extremly honored and more willing to serve their country and protect their nation.

Establishing these two programs would hopefully unite the nation as a whole to work together in order to assure national security. If these program are created in a way that respects people’s rights, citizens will make their own moral decisions to want to participate, which will make this country stronger and safer.

Sample Essay 6

Conventions of Language Score: 5.0 (Superior—impressive skills, minimal errors, clear structure)

Problem Solving Score: 5.0 (Superior—effective organization, convincing development, sophisticated presentation)

The national and state political leaders must work together with all the civilians of our nation to help assure national security. Increasing security in our airports and along the borders and coastlines are measures that can greatly help to ensure national security. As important as these measures are, it is essential that they be implemented only while taking into consideration the individual rights and liberties of all people.

Increased security in the vulnerable entry points of our nation entails inspecting items that come into the country through the borders or via the air and seas. It also involves a detailed examination of luggage arriving on airplanes, as well as belongings coming into our country by any other means. Although these procedures are necessary, there should be no reason to violate an individual’s rights in the process, especially when doing personal searches. For instance, many nuns are required to wear their habits and veils at all times, which includes going through airport security checks. When such individuals with religious affiliations that involve wearing specific cloths are asked by security at airports or customs to remove a piece of their required clothing, it violates their individual rights as well as there constitutional guarantee of freedom of religion. For this reason, border patrol and airport security staff must be willing to perform their searches without violating the civil rights and liberties of any individual.

To go along with increasing security, any new policies or changes to old policies should be made public knowledge. To do this news channels across the nation could all run stories regarding the new policies and all of the airports could post signs regarding what is and isn’t allowed to enter and leave the country. Hopefully, doing things like this would help prevent inconvenances and misunderstandings when traveling. Another thing that can be done to help inform travelers to the U.S. about our national security changes is to inform officials from other countries about these policies. By doing this, officials from other countries would be able to inform their citizens about our new policies to prevent any misunderstandings they may have when traveling to our country.

In summary, feasible solutions to our national security problems are available to increase security at our airports, borders, and coastlines. In order to implement these measures, political leaders must take steps to make sure that all civilians of our country are made aware of the new policies and are informed enough to want to agree to follow them. In addition, our national policy makers need to ensure the public that all security officers will keep in mind the individual rights and liberties of all, regardless of gender, faith, race or origin.
