Pattern Classification (Duda, Hart, Stork) Nearest Neighbor Pattern

advertisement

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

Pattern Classification (Duda, Hart, Stork)

Nearest Neighbor Pattern Classification (Cover and Hart)

Roberto Souza

DCA/FEEC/UNICAMP

16 de março de 2012

Roberto Souza

1/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

Agenda

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

Roberto Souza

2/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

Developed in the 1960s;

Non-parametric, sub-optimum classifiers;

Often provide competitive results;

Simple to understand and implement.

Roberto Souza

3/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

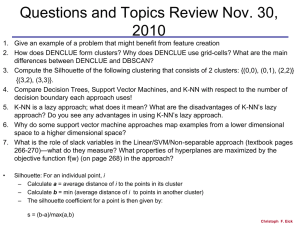

Supervised Classification Problem Formulation

M Classes;

N i.i.d. labeled samples

Z = {(X1 , θ(X1 )), (X2 , θ(X2 )), ...(XN , θ(XN ))}

θ(Xi ) ∈ {ω1 , ω2 , ..., ωM }

Assign new samples X s to one of the M possible classes

in a way to minimize the misclassification error.

Error expression:

Z +∞

p(error ) =

p(error , X )dX

−∞

Z +∞

=

p(error | X )p(X )dX .

−∞

Roberto Souza

4/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

Bayesian Decison Rule

Finds an optimal solution to the classification problem;

p(ωi ) and p(X | ωi ) are known distributions;

From Bayes’ Theorem, p(ωi | X ) can be written as:

p(ωi | X ) =

where:

p(X ) =

M

X

p(X |ωi )×p(ωi )

,

p(X )

p(X | ωi ) × p(ωi ).

i=1

Bayesian decision Rule:

p(error | X ) = 1 − max[p(ω1 | X ), ..., p(ωM | X )].

Bayes’ Error Rate (BER) is achieved by using BDR.

Roberto Souza

5/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

1-NN Overview

Figura: Illustration of 1-NN operation.

Roberto Souza

6/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

1-NN Mathematical Formulation

The 1-NN classifier can be formulated in terms of mathematical equations. The label θ(X ) of each new sample X is

given by the following equation:

θ(X ) = θ(XNN ),

(1)

XNN = arg min {dX (Xi )},

(2)

where XNN is given by:

∀Xi ∈Z

and dX (Xi ) is the distance between X and Xi in the chosen

metric.

Roberto Souza

7/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

1-NN Error Bounds

As the number of labeled samples N tends to infinity in a

M-class classification problem, the 1-Nearest Neighbor Error

Rate (1NNER) is bounded by the following expression:

BER ≤ 1NNER ≤ BER × (2 −

Roberto Souza

8/ 13

M

M−1

× BER).

(3)

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

1-NN Weaknesses

1-NN is sensible to noise and outliers;

1NNER is only valid on an infinite labeled samples space;

Its computational complexity increases with N.

Roberto Souza

9/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

k-NN Overview

k-NN is a natural extension of the 1-NN classifier. k-NN

classifies X by assigning it to the label most frequently

present in the k nearest neighbors. k-NN takes into

account k neighbors, so it is less sensible to noisy than

1-NN;

It can be shown that for an infinite number of samples,

N,as k tends to infinity the k-NN Error Rate (kNNER)

tends to the BER;

Roberto Souza

10/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

Anomalous 3-NN Case

Figura: Illustration of a 3-NN anomalous case.

Roberto Souza

11/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

Although k-NN, for k > 1, is theoretically a better

classifier than 1-NN, this may not be true if the number

of training samples is not large enough;

To avoid k-NN anomalous behaviour it is inserted a

parameter d, i.e. k-NN is no longer a non-parametric

classifier.

Roberto Souza

12/ 13

Introduction

Supervised Classification Backbone

1-NN

k-NN

Conclusions

Thanks for your attention!

Roberto Souza

13/ 13