Assignment 6


Johan Helsing, 19th October 2008

NUMA11/FMNN01 fall ’08: Numerical Linear Algebra

Numerical Analysis, Centre for Mathematical Sciences


Deadline: Friday October 10.

Purpose: practice applications of spectral theory.

Problem 1(PF). Do exercise 24.2 in Trefethen and Bau.

Problem 2(E). Do exercise 24.3 in Trefethen and Bau. Note that exp and
expm act differently on matrices in Matlab. When you compare your plot
to the straight line $e^{t\alpha(A)}$ (use semilogy) you will notice that it
is sometimes translated with respect to the asymptotic behaviour of
$\|e^{tA}\|_2$. Therefore, also compute numerically in Matlab a constant $c$
such that the line $ce^{t\alpha(A)}$ better follows the asymptotic behaviour
of $\|e^{tA}\|_2$. Your Matlab expression for $c$ should be simple and contain
only one or more of the following quantities: a 2-norm of a right Matlab
eigenvector, a 2-norm of a left Matlab eigenvector, an eigenvalue of A.
A right Matlab eigenvector means any column of V computed as
[V,D]=eig(A). A left Matlab eigenvector means any row of the inverse of V.
See also the comment to Problem 2 at the end of the assignment.
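The Matlab notions used in Problem 2 can be illustrated on a toy case. The sketch below is only an illustration under assumptions: the 2 × 2 matrix is a hypothetical example, not the matrix of exercise 24.3, and no formula for $c$ is given. It shows that exp acts entrywise while expm is the matrix exponential, checks the definitions of right and left Matlab eigenvectors, and samples $\|e^{tA}\|_2$ next to the line $e^{t\alpha(A)}$.

```matlab
% Hypothetical non-normal test matrix (not the one from exercise 24.3).
A = [-1 5; 0 -2];

% exp acts entrywise, expm computes the matrix exponential.
E_entrywise = exp(A);
E_matrix    = expm(A);

% Spectral abscissa alpha(A): largest real part of an eigenvalue.
[V, D] = eig(A);
lambda = diag(D);
alpha  = max(real(lambda));

% Columns of V are right Matlab eigenvectors,
% rows of W = inv(V) are left Matlab eigenvectors.
W = inv(V);
right_res = norm(A*V(:,1) - lambda(1)*V(:,1));
left_res  = norm(W(1,:)*A - lambda(1)*W(1,:));

% Sample ||e^{tA}||_2 and the reference line e^{t*alpha(A)}.
t   = linspace(0, 10, 101);
nrm = zeros(size(t));
for i = 1:length(t)
    nrm(i) = norm(expm(t(i)*A));
end
ref = exp(t*alpha);
```

Plotting with semilogy(t, nrm, t, ref) should show two curves that become parallel for large t but do not coincide for this matrix, which is the translation mentioned in the problem text.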

(2p)

Problem 3(E). This is a follow-up to Problem 9 of Assignment 1 and
Problem 2 of Assignment 5. The program below constructs a diagonalizable
n × n matrix A of rank n − m, and a right-hand side rhs in the range of A.

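function mynull
% Build a rank-deficient n-by-n matrix A and a consistent right-hand side.
n=input('Give system dimension: ');
m=input('Give null-space dimension: ');
[U,S,V]=svd(randn(n));
s=diag(S);
s1=s(1:n-m);          % the n-m singular values that are kept
U1=U(:,1:n-m);        % orthonormal basis for the range of A
U2=U(:,n-m+1:n);      % orthonormal basis for the complement of the range
V1=V(:,1:n-m);        % orthonormal basis for the row space of A
V2=V(:,n-m+1:n);      % orthonormal basis for the null space of A
A=U1*diag(s1)*V1';
rhs=U1*randn(n-m,1);  % right-hand side in the range of A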

You can safely assume that all entries of s1 are non-zero. The system

A*x=rhs then has infinitely many solutions, and if you try x=A\rhs you

may very well find an approximation to one of these. You can also assume

that span{V1, U2} = $\mathbb{R}^n$. Your task is to continue this program so that it


a) Constructs an n × n matrix B of full rank and such that the vector x1

obtained with x1=B\rhs is the minimum norm solution.

b) Constructs an n × n matrix C of full rank and such that the vector x2

obtained with x2=C\rhs lies in the range of A.

Compute also the relative residuals and orthogonality checks

• norm(rhs-A*x1)/norm(rhs)

• norm(rhs-A*x2)/norm(rhs)

• norm(V2'*x1)/norm(x1)

• norm(U2'*x2)/norm(x2)

All these quantities should be very small.
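To see what the checks measure, the following sketch may help. It is not the requested construction of B or C: it uses fixed sizes n = 8 and m = 2 instead of input (an assumption made so that the script runs unattended), computes a reference minimum norm solution x0 directly from the truncated SVD, and compares it with another solution y that has a null-space component.

```matlab
n = 8;  m = 2;                      % fixed sizes instead of input()
[U, S, V] = svd(randn(n));
s  = diag(S);
s1 = s(1:n-m);
U1 = U(:,1:n-m);   U2 = U(:,n-m+1:n);
V1 = V(:,1:n-m);   V2 = V(:,n-m+1:n);
A   = U1*diag(s1)*V1';
rhs = U1*randn(n-m,1);

% Reference minimum norm solution from the truncated SVD.
x0 = V1*((U1'*rhs)./s1);

% Adding a null-space vector gives another solution, but a longer one.
y = x0 + V2*randn(m,1);

res0  = norm(rhs - A*x0)/norm(rhs);   % relative residual of x0
resy  = norm(rhs - A*y)/norm(rhs);    % relative residual of y
orth0 = norm(V2'*x0)/norm(x0);        % null-space component of x0
orthy = norm(V2'*y)/norm(y);          % null-space component of y
```

Both residuals should be at rounding level, while only x0 passes the orthogonality check against V2. This is the behaviour that x1=B\rhs is asked to reproduce.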

The matrices B and C are by no means unique. Assume that n ≫ m. Try,

primarily, to make simple and computationally inexpensive constructions of

B and C given the matrices that are already available in the program. If

you can think of several constructions of similar costs, choose those that

are likely to make the condition numbers of B and C the lowest. Hand in

program and output. No proofs are necessary.

(2p)

Problem 4(E). The n × n matrix A has entries

$$
A_{ij} =
\begin{cases}
-\log|z_i - z_j|, & i \neq j, \\
-\log|r_i|, & i = j,
\end{cases}
\qquad i, j = 1, \ldots, n, \tag{1}
$$

where $z_i$ are n randomly placed points in a unit square centered at the
origin in the complex plane and where each $r_i$ is a random number in
$(0, 0.5d_i]$, with $d_i$ being the distance between the point $z_i$ and its
nearest neighbour.
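One possible way to set up the matrix of (1) is sketched below. The variable names (z, Dist, d, r) and the small size n = 200 are assumptions made for illustration. The text leaves the construction of A open, so this is only one option among many.

```matlab
n = 200;                                    % small n for a quick run
z = (rand(n,1)-0.5) + 1i*(rand(n,1)-0.5);   % points in the unit square
Z = z*ones(1,n);
Dist = abs(Z - Z.');                        % Dist(i,j) = |z_i - z_j|
Dist(1:n+1:end) = Inf;                      % exclude self-distances
d = min(Dist, [], 2);                       % nearest-neighbour distances d_i
r = 0.5*d.*rand(n,1);                       % r_i in (0, 0.5*d_i)
A = -log(Dist);                             % off-diagonal entries of (1)
A(1:n+1:end) = -log(r);                     % diagonal entries -log|r_i|
```

The full distance matrix costs O(n^2) storage, which is the same order as A itself.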

The matrix A of (1), or some modification of it, occurs as system matrix

in a variety of situations in material science and electrical engineering. Its

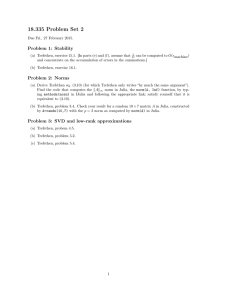

spectrum grows as n. See Figure 1, first image, for a realization with n =

1000. We want to find a good sparse approximate inverse to A. There are

theoretical as well as experimental indications that such an inverse exists.

For example, let B denote the matrix consisting of the k largest entries in

each column of A−1 with the remaining elements being zero. With small

k and large n the matrix B is very sparse. The second image of Figure 1

shows the spectrum of the composition AB for k = 20 and n = 1000. Most

eigenvalues are clustered around unity.

The cost of storing B is only O(nk), but its computational cost is O(n3 )

FLOPs. Interestingly, we can find another matrix C, with similar properties

as B, that is cheaper to compute. An n×n matrix C with k nonzero element

in each column can be constructed, column by column, in the following way:

Fix a j and let Sj denote the set of indices i corresponding to the k smallest


[Plot panels: Spectrum of A, Spectrum of A*B, Spectrum of A*C; horizontal axis Re{λ}, vertical axis Im{λ}.]

Figure 1: Spectrum of A, AB, and AC for k = 20 and n = 1000. Note that
the x-axis is logarithmic while the y-axis is linear, and that the scales
are very different.

distances $|z_i - z_j|$ and let $\hat{A}$ be a symmetric k × k submatrix of A
containing all entries of A which have both indices in $S_j$. One can then write

$$
\hat{A}_{il} = A_{q(i)q(l)}, \qquad i, l = 1, 2, \ldots, k,
$$

where q is an injective map from the set {1, 2, ..., k} to $S_j$ such that
q(1) = j. Now solve the k × k linear system

$$
\hat{A} m = e, \tag{3}
$$

where e is a column unit vector with k entries, e=[1;0;...;0]. Then let
the nonzero elements in column j of C be

$$
C_{q(i)j} = m_i, \qquad i = 1, 2, \ldots, k.
$$

This process is repeated for j = 1, 2, ..., n and the cost of constructing C
is only $O(nk^3)$ FLOPs. The third image of Figure 1 shows the spectrum of
the composition AC. The eigenvalues are, perhaps, even better clustered than
for the composition AB. The matrix C is sometimes called a mesh neighbour
based Diagonal Block Approximate Inverse, see K. Chen, 'An Analysis
of Sparse Approximate Inverse Preconditioners...', SIAM J. Matrix Anal.
Appl., 22(4), pp. 1058-1078 (2001). An improvement of C, called D, has
also been suggested where (3) is replaced by

$$
\left( \hat{A} + \alpha(n, k)\, \hat{A}^{-1} u u^T \right) m = e,
$$


where u is a column vector with all entries unity, u=[1;1;...;1], and
α(n, k) is given by

$$
\alpha(n, k) = 4 \cdot (n - k) \cdot 10^{-\frac{4}{k}\log_{10}(n)}.
$$
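The column-by-column construction of C described above (pick the k nearest points, solve (3), scatter the solution into column j) might be sketched as follows. This is a plain, untuned illustration under assumptions: the sizes n = 200 and k = 10 and all variable names are hypothetical, the q maps are obtained by sorting distances, and the matrix D and the efficiency requirements of the problem are not addressed.

```matlab
n = 200;  k = 10;                           % small sizes for a quick run
z = (rand(n,1)-0.5) + 1i*(rand(n,1)-0.5);   % points in the unit square
Z = z*ones(1,n);
Dist = abs(Z - Z.');                        % Dist(i,j) = |z_i - z_j|
Dnn = Dist;  Dnn(1:n+1:end) = Inf;          % distances without the diagonal
r = 0.5*min(Dnn, [], 2).*rand(n,1);         % r_i in (0, 0.5*d_i)
A = -log(Dnn);  A(1:n+1:end) = -log(r);     % the matrix of (1)

e = [1; zeros(k-1,1)];                      % right-hand side of (3)
rows = zeros(k,n);
vals = zeros(k,n);
for j = 1:n
    [srt, idx] = sort(Dist(:,j));           % Dist(j,j) = 0, so idx(1) = j
    q = idx(1:k);                           % the map q for column j, q(1) = j
    Ahat = A(q,q);                          % k-by-k submatrix of A
    mvec = Ahat\e;                          % solve (3)
    rows(:,j) = q;
    vals(:,j) = mvec;
end
cols = ones(k,1)*(1:n);
C = sparse(rows(:), cols(:), vals(:), n, n);
```

Collecting the entries first and calling sparse once avoids repeated insertion into a sparse matrix. The spectrum of the composition can then be inspected with eig(A*C).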

Construct the matrices A, C, and D. Take k = 20 and n ≥ 1000.

Compute spectra of A, AC, and AD as in Figure 1. When constructing

D, make the code efficient when it comes to linear algebra: Construct the

matrix A and the maps q in any way you like (compatible with the text above),

but try to make the construction of D, given A and the q maps, cost only

one lu-factorization and an additional $4k^2 + O(k)$ FLOPs per column. You

may assume that the backslash operator takes advantage of lower and upper

triangular structures. An efficient Matlab code for the entire problem does

not have to be more than 45 lines, including setup and plotting commands.

Hand in programs and plots.

(2p)

Problem 5(PF). Do exercise 26.1 in Trefethen and Bau. Note: $\sigma_m(B)$

denotes the smallest singular value of the matrix B. Remember: a test

vector which realizes the 2-norm of a matrix has to be a multiple of the

right singular vector corresponding to the largest singular value.
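The reminder about test vectors can be checked numerically. In the sketch below the 5 × 3 random matrix B is an arbitrary example chosen for illustration. The first right singular vector attains the 2-norm, while a random vector gives at most the same gain.

```matlab
B = randn(5,3);                    % arbitrary example matrix
[U, S, V] = svd(B);
v1 = V(:,1);                       % right singular vector for sigma_1
gain_v1 = norm(B*v1)/norm(v1);     % equals sigma_1 = norm(B)
x = randn(3,1);                    % some other test vector
gain_x = norm(B*x)/norm(x);        % never exceeds norm(B)
sigma_min = min(diag(S));          % smallest singular value of B
```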

Problem 6(E). Do exercise 26.2 in Trefethen and Bau. Pseudospectra seem
to be a special interest of the authors. Part (b) of this problem is quite
hard and definitely requires some extra reading. Hint: The “Initial growth
rate” seems to be related to the “numerical abscissa”, while the maximum of
the curve is related to the pseudospectrum in a complicated manner.
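As a small illustration of the hint, the sketch below compares the numerical abscissa, taken here as the largest eigenvalue of (A+A')/2, with a finite-difference estimate of the slope of $\|e^{tA}\|_2$ at t = 0. The 2 × 2 matrix and the step h are assumptions chosen for the example and are not the matrix of exercise 26.2.

```matlab
A = [-1 5; 0 -2];                    % hypothetical non-normal example
omega = max(eig((A + A')/2));        % numerical abscissa
alpha = max(real(eig(A)));           % spectral abscissa
h = 1e-6;                            % small time step
slope = (norm(expm(h*A)) - 1)/h;     % estimate of d/dt ||e^{tA}||_2 at t = 0
```

For this matrix the slope is positive although all eigenvalues have negative real part, so the norm grows initially before it decays.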

(1p)

Problem 7(PF). Do exercise 27.5 in Trefethen and Bau. Your “proof”

should be convincing, but complete rigour is not required. Hint: In the

calculation on page 95 it is assumed that δx is infinitesimal. This assumption

is no longer valid when A is nearly singular.

Comment to Problem 2. The improved line $ce^{t\alpha(A)}$ cannot follow
$\|e^{tA}\|_2$ asymptotically when $\|e^{tA}\|_2$ oscillates. But it is
possible to find a function c(t), rather than a constant c, which makes
$c(t)e^{t\alpha(A)}$ follow the asymptotic behaviour of $\|e^{tA}\|_2$ even
when it oscillates. All that is needed for c(t) is the variable t and the
eigenvalues and left and right eigenvectors of A, together with basic
arithmetic operations, inner products, square roots, the exponential
function, and conjugations. You are not required to find c(t) in this
problem.
