Augmenting Context-Dependent Memory

Jeanine K. Stefanucci

College of William & Mary

Shawn P. O’Hargan

Dennis R. Proffitt

University of Virginia

ABSTRACT: The goal of this study was to design a human-computer interface that would

make presented information more memorable by providing context, as compared

with presenting the same information with no context. Our focus was on augmenting context-dependent memory because it is a powerful and often unexploited characteristic of human cognition.

To amplify this cognitive strength, we built the InfoCockpit, which included a large

screen containing projected images of places, a three-dimensional surround-sound

system that played ambient noises congruent with the projected images, and a flat-panel

monitor that served as the focal display for the presentation of the to-be-remembered

information. Participants in our study learned and recalled information in either the

InfoCockpit or a standard desktop environment. The InfoCockpit group demonstrated a

131% memory advantage. Contextual factors that were previously found to be effective

in isolation created a large effect when presented in combination.

Context-dependent memory refers to the finding that people are often better at

remembering information if they return to the place where it was encountered or if

they imagine themselves to be in that place. To experience this phenomenon, try to

remember what you had for lunch on Wednesday of last week. An effective retrieval

strategy is first to recall what you were doing last Wednesday and where you were

when you had lunch.

Research on context-dependent memory finds consistent advantages for reinstating context (J. R. Anderson, 1983; Bower, 1967; McGeoch, 1942; Raaijmakers &

Shiffrin, 1981; Tulving, 1974, 1983); however, the size of these effects tends to be

small (for a review, see Smith & Vela, 2001). One reason that these effect sizes are small

is that most studies were designed to isolate the factors that contribute to context-dependent memory effects. The aim in the current research was to determine whether

we could engineer a work environment that would evoke effects of sufficient size to

be of applied significance.

Because moderate or null findings have been reported in the context-dependent

memory literature in the past (Fernandez & Glenberg, 1985; Godden & Baddeley,

1975, 1980; Jacoby, 1983; Nixon & Kanak, 1981; Smith, Glenberg, & Bjork, 1978),

we created an environment that combined those factors that have been found to evoke

the largest context-dependent memory effects.

Smith and Vela (2001) reviewed the literature on context-dependent memory and

identified those factors that consistently produced large effect sizes across studies.

ADDRESS CORRESPONDENCE TO: Jeanine K. Stefanucci, Department of Psychology, College of William

& Mary, P.O. Box 8795, Williamsburg, VA 23187-8795, jkstef@wm.edu.

Journal of Cognitive Engineering and Decision Making, Volume 1, Number 4, Winter 2007, pp. 391–404.

DOI 10.1518/155534307X264870. © 2007 by Human Factors and Ergonomics Society. All rights reserved.


For instance, including human faces and voices within contexts creates powerful

associations that draw attention to the setting of the speaker and help later recall

of learned material (Craik & Kirsner, 1974; Davies, 1988; Dodson & Shimamura,

2000). Smith and Vela (2001) noted in their meta-analyses that recall is best when

the people associated with the context are the same at encoding and retrieval. Like

voices and faces, distinctive sounds associated with the environment at encoding

also serve as cues for successful retrieval.

Recent work by Davis, Scott, Pair, Hedges, and Oliverio (1999) and by Stefanucci

and Proffitt (2002) showed that when ambient sounds (such as chirping birds, rustling leaves, or thunderstorms) were paired with congruent images as contexts for

encoding, remembering was improved relative to presenting the images without

complementing sounds (see Smith, 1985, for other work on background sounds and

music as contexts).

The presence of distinctive cues at both encoding and retrieval is important in

obtaining large effect sizes, but so too is the experimental paradigm employed. Context has been found to mediate large memory effects when it is used to reduce interference between competing recalled items. It is hard to remember what we ate

for lunch last Wednesday because we are likely to have eaten many different things for

lunch over the past week, and we tend not to associate our meals with dates. Thus,

when trying to remember a specific meal, lots of different possibilities come to mind.

This type of interference causes memory impairment because information is confused

with similar memories, which complicates and blocks recovery of the desired information (M. C. Anderson & Neely, 1996; Crowder, 1976).

One way to keep the information separate would be to associate it with different

contexts (Smith, 1982, 1984; Strand, 1970). Past research has shown that environmental context is an effective memory cue when it is used to discriminate between

target and interfering memory traces that may be activated at the time of retrieval,

thereby facilitating recall of the intended target memory (Nairne, 2002). Early studies on interference reduction found that learning interfering lists in different contexts

substantially reduced both proactive and retroactive interference (thereby increasing recall) because items could be discriminated based on their associations with

the learning environments (Bilodeau & Schlosberg, 1951; Dallett & Wilcox, 1968;

Greenspoon & Ranyard, 1957). In fact, the biggest context-dependent memory effect

sizes have been found using paradigms that reduce interference at retrieval (Smith &

Vela, 2001). Bjork and Richardson-Klavehn (1988) termed these interference paradigms second-order experiments because they included the study of multiple word lists

in many different contextual environments.

There are ways to implement multiple contexts other than physically changing

locations. An alternative is to develop a dynamic virtual environment that is capable

of supporting multiple contexts in a single space. This can be accomplished by using

large projected images paired with congruent ambient sounds, thereby converting a

typical room into a variety of virtual environments, from a forest clearing to a lunar

landscape. For our study, we implemented a computer display system that had these

capabilities, labeled the InfoCockpit, to find out if we could additively increase


memory performance and obtain a large context-dependent memory effect of practical significance. This system was designed to exploit cues that are known to increase memory performance. In this fashion, a number of memory cues could be

examined to assess their combined performance advantage.

Although the benefits of context-dependent memory have been small in laboratory settings (because researchers have isolated the components of context to assess

their individual effects), we believe that the combination of the cues previously mentioned (faces, voices, sounds, reinstatement of cues at retrieval), when used in real-world office environments, has the potential to yield large results.

Experiment: The Benefits of Elaborate Contexts

All participants learned three lists of word pairs in a cued-recall paradigm. Half

the participants were asked to learn information (a word list) in each of three distinct

environments on the InfoCockpit. The other half learned the same information in one

unchanging environment (a standard desktop computer), similar to current office environments. All participants returned to the same learning environments a day later

to retrieve the to-be-remembered information. We predicted that the added cues in

the InfoCockpit would make each list more distinctive, thereby reducing interference and improving recall scores.

Method

Participants

Twenty-four undergraduate students (12 men, 12 women) from the University

of Virginia participated in the experiment. They were given class credit for their participation. They had no previous exposure to the InfoCockpit. All participants gave

written informed consent for their participation.

Apparatus

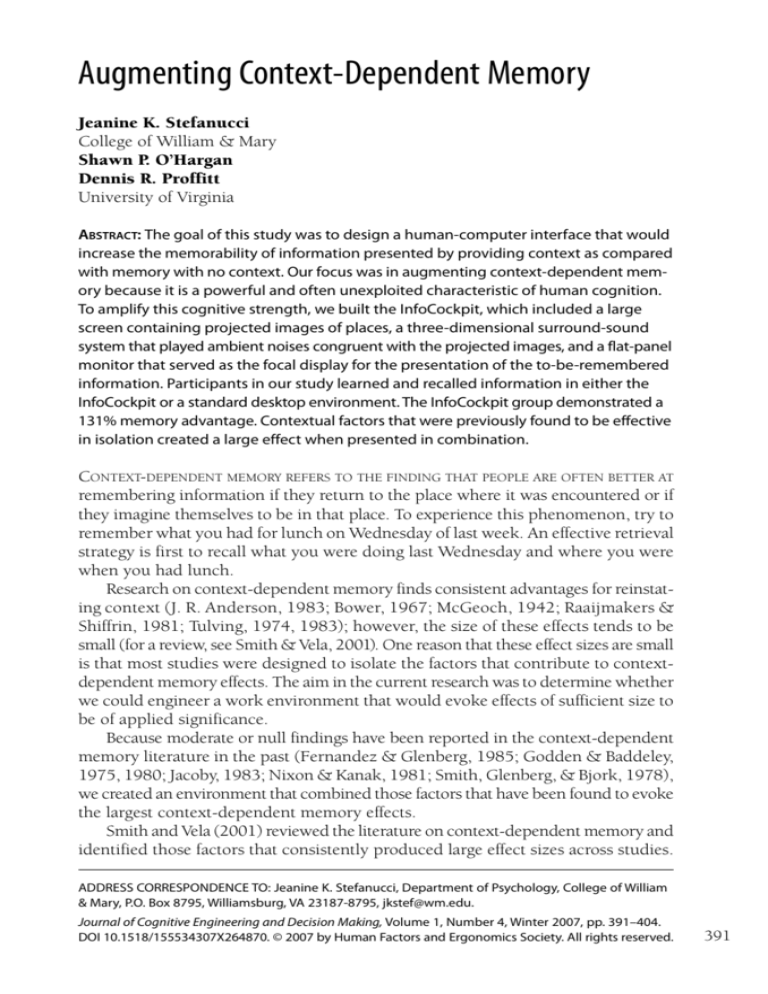

To manipulate the user’s context, we used the InfoCockpit (see Figure 1),

which combined panoramic images with appropriate three-dimensional (3-D) ambient

surround sounds. For example, one environment was an outdoor scene of the

lawn at the University of Virginia, and the accompanying sounds were of birds, insects,

and leaves. Participants learned the information on an NEC 18-inch LCD monitor, and

the contexts were projected by three Sharp Notevision LCD projectors (2200 lumens)

onto a semicircular projection screen subtending a 145-deg field of view relative to

the participant. Panoramas were 360-deg images, with 145 deg projected onto the

screen at any moment. All panoramas rotated counterclockwise at a speed of one rotation every 28 min. The slow rotation was barely noticeable; however, the new objects

coming into view kept the participants engaged and the scene somewhat dynamic.
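The rotation figures above imply a very slow drift, which a short calculation makes concrete. The sketch below uses only the numbers stated in the text (a 360-deg panorama, a 145-deg visible window, one rotation every 28 min); the function names are illustrative and not part of the original apparatus software.

```python
# Values taken from the apparatus description.
PANORAMA_DEG = 360.0         # full panorama
VISIBLE_DEG = 145.0          # portion projected at any moment
ROTATION_PERIOD_MIN = 28.0   # time for one full rotation


def angular_speed_deg_per_s(period_min: float = ROTATION_PERIOD_MIN) -> float:
    """Angular speed of the panorama in degrees per second."""
    return PANORAMA_DEG / (period_min * 60.0)


def minutes_to_replace_view(visible_deg: float = VISIBLE_DEG,
                            period_min: float = ROTATION_PERIOD_MIN) -> float:
    """Minutes for the visible window to scroll by its own width."""
    return visible_deg / PANORAMA_DEG * period_min


if __name__ == "__main__":
    print(f"{angular_speed_deg_per_s():.3f} deg/s")
    print(f"{minutes_to_replace_view():.1f} min to replace the visible scene")
```

At roughly a fifth of a degree per second, the scene visible at the start of a list would take a little over 11 min to scroll completely out of view, which is consistent with the description of the motion as barely noticeable.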

Audio contexts were created by layering sound samples from sample libraries at

the University of Virginia and the University of Southern California. A six-channel

surround-sound mix of the samples was produced using a Digidesign Pro Tools Mix24


digital audio workstation running on a Macintosh G4 computer. (For more information on Digidesign Pro Tools, see http://www.digidesign.com/.) The software plug-in,

Smart Pan Pro (Kind of Loud Technologies, Santa Cruz, CA), was used to position

some of the samples in space. The contexts were mixed on six B&W speakers. Two

were directly in front of the listener, one at ear level and one elevated to 9 feet, and

the angle of azimuth was ±30 deg (at a distance of approximately 6 feet). The final

two were positioned at an angle of ±120 deg from straight ahead and were elevated

a few feet above ear level. The surround mix was exported as six *.wav files to a Dell

Pentium II computer running Cakewalk Virtual Jukebox software. The six channels

were output from the computer through an Aark24 sound card (Aardvark) to an

Adcom GFA 5006 power amplifier and then to the speakers.

Materials

Three contextual environments were used during the experiment. The lawn

environment consisted of a panoramic photograph of the lawn at the University of

Virginia accompanied by appropriate ambient outdoor sounds of wind, birds, and

insects. The museum context was a panoramic photograph of the inside of the Bayley

Art Museum at the University of Virginia with the ambient sounds of whispering

and footsteps that would typically be heard in a museum gallery. The third context,

the history environment, was created by using a panoramic painting of 18th-century

Edinburgh, combined with ambient sounds of horses on cobblestones, church

bells, and pigeons. (The panorama was retrieved from http://www.acmi.net.au/AIC/

PANORAMA.html.) The digital images were converted to *.bmp format and modeled

as tiled textures onto a virtual cylinder using Alice99, a free 3-D modeling program.

(Alice99 was created by the Stage 3 Research Group at Carnegie Mellon University

and is available free at http://www.alice.org.) A young woman (age 26), a middle-aged

man (age 40), and a young man (age 22) were videotaped to be the face and voice

for the lawn, museum, and history environments, respectively.

The three lists of word pairs were deliberately designed to create interference.

For each list, the same 10 cue words were paired with a different set of 10 target words

(creating an A-B, A-C, A-D interference paradigm; see Table 1). For example, in the

lawn list, the cue farmer was paired with the target castle. However, in the museum

list, farmer was paired with keys, and in the history list, farmer was a cue for hat. The

words were chosen from the Brown Corpus based on Francis and Kucera (1967)

norms, and the mean raw count per million was 100 per item. Presentation of the

pairs was randomized within lists.
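The A-B, A-C, A-D design can be pictured as three mappings that share their keys but not their values. The following is a minimal sketch using only the farmer pairings quoted above; the helper names are hypothetical, and the real lists contained 10 cues each.

```python
from typing import Dict

# One mapping per list: cue word -> target word.
# Only the "farmer" example from the text is included here.
LISTS: Dict[str, Dict[str, str]] = {
    "lawn": {"farmer": "castle"},
    "museum": {"farmer": "keys"},
    "history": {"farmer": "hat"},
}


def shares_cues(lists: Dict[str, Dict[str, str]]) -> bool:
    """True if every list uses exactly the same set of cue words."""
    cue_sets = [set(pairs) for pairs in lists.values()]
    return all(cues == cue_sets[0] for cues in cue_sets)


def targets_are_distinct(lists: Dict[str, Dict[str, str]]) -> bool:
    """True if each cue is paired with a different target in every list."""
    cues = set().union(*(set(pairs) for pairs in lists.values()))
    for cue in cues:
        targets = [pairs[cue] for pairs in lists.values() if cue in pairs]
        if len(targets) != len(set(targets)):
            return False
    return True


def competing_targets(lists: Dict[str, Dict[str, str]], cue: str) -> Dict[str, str]:
    """All targets that compete for one cue, keyed by list name."""
    return {name: pairs[cue] for name, pairs in lists.items() if cue in pairs}


if __name__ == "__main__":
    print(shares_cues(LISTS), targets_are_distinct(LISTS))
    print(competing_targets(LISTS, "farmer"))
```

Because every cue has three competing targets, a participant who retrieves a target for a cue still has to decide which list it came from, and that decision is what the contexts are meant to support.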

Procedure

Upon arrival, all participants were told three things: They would be learning three

lists of word pairs on a computer, they would be tested on these lists the following day,

and all further instructions would come to them directly from the computer system.

Participants were randomly assigned to one of two conditions, context or no context.

The no-context group trained in the same physical environment as did the context group. Participants sat in front of a single monitor with no images displayed on the projection screen. This environment stayed the same for every list. Participants were given instructions by text presented on the monitor (see Figure 1). Text instructions that described the learning phase for each list were phrased in an informal, friendly tone and began with a greeting from a named individual. A different individual was associated with each list.

TABLE 1. Cue and Target Words From Each List

| Cue Word | Lawn List | Museum List | History List |
|----------|-----------|-------------|--------------|
| Plate    | Scientist | Passenger   | String       |
| Farmer   | Castle    | Keys        | Hat          |
| Entrance | Waters    | Cricket     | Tenant       |
| Wedding  | Earth     | Gardens     | Bone         |
| Branches | Mirror    | Guitar      | Steel        |
| Faces    | Leaves    | Sun         | Cake         |
| Finger   | Corridor  | Snow        | Cattle       |
| Port     | Weapon    | Jacket      | Wheel        |
| Muscles  | Posts     | Wives       | Stomach      |
| Vehicles | Prisoners | Hospitals   | Dust         |

The context group was trained with a distinct panoramic image and ambient

surround-sound environment associated with each list. The context group also received instructions about the learning phase for each list from full-motion audio/video

clips displayed on the focal monitor. The video instructions consisted of a full-screen headshot of a person situated in the context of the upcoming list (also see

Figure 1). The audio track for the instructions was played through a pair of stereo

desktop speakers. The context and no-context instructions were identical in content, and both included a suggestion to “use your context to help you remember

the word pairs.”

Training. Training began with a 1-min acclimation period. During this time, participants in the context condition observed the panoramic images and sound, whereas

participants in the no-context condition observed the blank projection surface. This

acclimation period was repeated before each list. After 1 min, participants received

on-screen instructions explaining the rest of the training activities in either video

or text form.

Following the presentation of the instructions, the procedure was identical across

conditions. First, the name of the list was displayed on the computer screen. Then a

pair of words appeared: a cue word and a target word. Each pair remained on the

screen for 5 s; it took approximately 50 s to go through the list of 10 pairs. Participants were told in the instructions that they would be prompted with the cue word

later and would have to respond with the target word. When the list preview was

completed, participants were prompted with a random cue word from the list and a blank field into which they typed the associated target word. If they responded with the correct target word, they were given a “correct” message and moved on to another cue word. If they gave an incorrect response, they were reminded of the correct answer before moving on to another cue. This allowed participants to continue to learn the lists as they trained.

Figure 1. On the left is the no-context condition environment for training and testing. On the right is the context condition environment for training and testing.

The training phase ended when the participant responded to all 10 cues in a row

without an incorrect answer (meeting the 100% criterion). At this point, they moved on

to the next list, repeating the same procedure (acclimation, instruction, and training)

until they had been trained on all three lists. The training of the lists occurred in the

same order – lawn, museum, and history – for all participants.
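The train-to-criterion procedure described above can be sketched as a simple loop. This is an illustration under stated assumptions, not the software used in the study: it assumes that one "iteration" is a pass through all cues in random order and that the criterion is a pass with no errors. The names `train_to_criterion`, `respond`, and `feedback` are hypothetical.

```python
import random
from typing import Callable, Dict


def train_to_criterion(pairs: Dict[str, str],
                       respond: Callable[[str], str],
                       feedback: Callable[[str, str, bool], None],
                       rng: random.Random,
                       max_passes: int = 100) -> int:
    """Present cues until one full pass is answered without error.

    Returns the number of passes taken (assumed meaning of "iterations").
    """
    cues = list(pairs)
    for n_pass in range(1, max_passes + 1):
        rng.shuffle(cues)  # cues are prompted in random order
        errors = 0
        for cue in cues:
            correct = respond(cue).strip().lower() == pairs[cue].lower()
            # Feedback on every trial: "correct", or a reminder of the target.
            feedback(cue, pairs[cue], correct)
            if not correct:
                errors += 1
        if errors == 0:
            return n_pass
    raise RuntimeError("criterion not reached within max_passes")


if __name__ == "__main__":
    # Simulated learner that knows nothing at first and learns from reminders.
    memory: Dict[str, str] = {}

    def respond(cue: str) -> str:
        return memory.get(cue, "")

    def feedback(cue: str, target: str, correct: bool) -> None:
        if not correct:
            memory[cue] = target

    passes = train_to_criterion({"farmer": "castle", "plate": "scientist"},
                                respond, feedback, random.Random(0))
    print(passes)  # 2: one pass of errors with reminders, then a clean pass
```

Training every participant to the same criterion is what allows later group differences at test to be read as retrieval effects instead of differences in how well the lists were initially learned.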

Testing. Participants returned the following day for testing. The list order was randomized for the testing phase. Participants began each list with a 1-min acclimation

period to the contextual environment. Depending on the condition, instructions followed in either video or text format and indicated that a cue word would be presented on the screen, accompanied by a blank field.

For each list, instructions came from the same person and in the same format

as the day before. Participants were told to fill in the target word that matched the

cue for the given list. Each cue was presented once and in a random order. The participants did not receive feedback after their answers. The same procedure (acclimation,

instructions, and test) was repeated for each list separately. Participants completed

the test for one full list before moving on to the next list.

Results

Training

A one-way analysis of variance (ANOVA) comparing the number of iterations

needed to learn the word pairs to criterion during the training phase did not show a significant difference across the conditions, F(1, 23) = 2.39, MSE = 21.41, p = .14. The

mean number of iterations for the context condition (with standard deviations in

parentheses) was 11.75 (4.83), and the mean iteration score for the no-context condition was 14.67 (4.42). There was a trend for a greater number of iterations in the

no-context condition, but this trend did not prove to be significant.

Correct Attribution During Testing

As hypothesized, participants in the context condition correctly attributed more

words to the appropriate lists than did participants in the no-context condition. The

mean number of words correctly attributed to the appropriate list (with standard deviations in parentheses) was 18.67 (5.65) for the context condition and 8.08 (4.60) for

the no-context condition (see Figure 2). A one-way ANOVA comparing the two conditions showed that the difference was significant, F(1, 23) = 25.34, MSE = 26.53,

p < .001, R² = 0.51. As stated previously, this difference in attribution was not attributable to a difference in time taken to learn the lists during training.
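The reported F ratios can be approximately recovered from the published means and standard deviations. The sketch below assumes two equal groups of 12 (half of the 24 participants) and treats the reported values as sample standard deviations; the function name is illustrative, and small discrepancies from the published figures are expected because the inputs are rounded.

```python
from typing import Tuple


def f_from_summary(mean_a: float, sd_a: float,
                   mean_b: float, sd_b: float,
                   n_per_group: int) -> Tuple[float, float]:
    """One-way ANOVA for two equal-sized groups, from summary statistics.

    Returns (F, mean square error).
    """
    grand_mean = (mean_a + mean_b) / 2
    # Between-groups mean square (1 degree of freedom for two groups).
    ms_between = n_per_group * ((mean_a - grand_mean) ** 2 +
                                (mean_b - grand_mean) ** 2)
    # Within-groups mean square: pooled variance when group sizes are equal.
    ms_within = (sd_a ** 2 + sd_b ** 2) / 2
    return ms_between / ms_within, ms_within


if __name__ == "__main__":
    f_train, mse_train = f_from_summary(11.75, 4.83, 14.67, 4.42, 12)
    f_attr, mse_attr = f_from_summary(18.67, 5.65, 8.08, 4.60, 12)
    print(f"training:    F = {f_train:.2f}, MSE = {mse_train:.2f}")
    print(f"attribution: F = {f_attr:.2f}, MSE = {mse_attr:.2f}")
```

Under these assumptions the sketch gives F of about 2.39 for training iterations and about 25.35 for correct attributions, in line with the values reported in the text.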


Figure 2. Attribution of pairs by context condition (N = 24).

Correct Recall During Testing

Overall memory for targets, regardless of whether or not the target was attributed to the correct list, was also analyzed. This analysis was performed to discover

whether contextual information was more likely to facilitate recall of the targets

themselves. For example, participants in the context condition could have recalled

more target words overall, which may have influenced their correct attribution scores.

However, participants did not differ in their overall target memory. A one-way ANOVA

performed on the total number of targets recalled in each condition was not significant, F(1, 23) = 0.69, MSE = 11.89, p = .42. Participants did not have a problem

recalling the targets (M = 24.42, SD = 3.43) but did make errors when attributing

the targets to the appropriate lists.

A proportion correct score was also calculated for all participants in both conditions. This proportion was computed by dividing the number of targets correctly

attributed to the appropriate list by the mean number of targets correctly recalled

overall for that condition (see Table 2). As expected, a one-way ANOVA revealed a significant difference in the proportion correct scores across conditions, F(1, 23) = 22.56, MSE = 0.04, p < .0001, R² = 0.48. The participants in the context condition correctly attributed a larger proportion of the words they recalled compared with the no-context condition.

TABLE 2. Proportion of Words Correctly Attributed for the Context and No-Context Conditions

| Condition  | Words Recalled (Mean Number Correct) | Words Attributed (Mean Number Correct) | Proportion Attributed |
|------------|--------------------------------------|----------------------------------------|-----------------------|
| Context    | 25.00 (0.81)                         | 18.67 (1.63)                           | 0.75 (0.07)           |
| No context | 23.83 (1.15)                         | 8.08 (1.33)                            | 0.34 (0.06)           |

All numbers are out of 30 possible correct responses. Standard errors are in parentheses.
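The proportions in Table 2 follow directly from the two mean counts in each row. The short sketch below reproduces them from the published means; the dictionary layout and function name are illustrative only.

```python
# Mean counts from Table 2 (out of 30 possible responses).
TABLE_2 = {
    "context": {"recalled": 25.00, "attributed": 18.67},
    "no context": {"recalled": 23.83, "attributed": 8.08},
}


def proportion_attributed(row: dict) -> float:
    """Words attributed to the correct list divided by words recalled overall."""
    return row["attributed"] / row["recalled"]


if __name__ == "__main__":
    for condition, row in TABLE_2.items():
        print(f"{condition}: {proportion_attributed(row):.2f}")
```

The two groups recalled nearly the same number of targets, so the gap in this proportion reflects how often a recalled target was assigned to the right list.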

Error Analyses

Participants made errors that fell into five possible categories while retrieving the

word pairs from memory. In committing an across-list intrusion, the participant correctly recalled a target for the cue word, but the target came from an alternative list.

For example, if participants answered passenger as the target for plate instead of

scientist while trying to recall the lawn list, they committed an across-list intrusion.

The second error category, termed within-list intrusion, occurred when the participant recalled a target that was inappropriate for the cue word being tested but was

from the appropriate list. For example, the participant answered castle for plate

while being tested on the lawn list.

Old-word intrusions occurred when a participant answered with a target that was

inappropriate for the cue and from an alternative list (answering keys for plate while

being tested on the lawn list). Alternatively, an error that was made by participants

leaving an answer blank was termed no answer.

Finally, if a participant answered using a word not in any of the lists, the error

was coded as new word.
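The five error categories amount to a small decision procedure, sketched below. This is an illustrative reading of the definitions above, not the authors' scoring code. It uses only the word pairs quoted in the text, and placing passenger in the museum list is an assumption (the text says only that it comes from a list other than lawn). The function name `classify_response` is hypothetical.

```python
from typing import Dict

# cue -> target for each list; a small subset of the full materials.
LISTS: Dict[str, Dict[str, str]] = {
    "lawn": {"farmer": "castle", "plate": "scientist"},
    "museum": {"farmer": "keys", "plate": "passenger"},  # list of "passenger" is assumed
    "history": {"farmer": "hat"},
}


def classify_response(lists: Dict[str, Dict[str, str]],
                      tested_list: str, cue: str, response: str) -> str:
    """Label one test response as correct or as one of the five error types."""
    answer = response.strip().lower()
    if not answer:
        return "no answer"
    if answer == lists[tested_list][cue]:
        return "correct"
    other_lists = {name: pairs for name, pairs in lists.items()
                   if name != tested_list}
    # Right target for this cue, but learned in a different list.
    if any(pairs.get(cue) == answer for pairs in other_lists.values()):
        return "across-list intrusion"
    # Target from the tested list, but belonging to a different cue.
    if answer in lists[tested_list].values():
        return "within-list intrusion"
    # Target from another list that also belongs to a different cue.
    if any(answer in pairs.values() for pairs in other_lists.values()):
        return "old-word intrusion"
    return "new word"


if __name__ == "__main__":
    for response in ["scientist", "passenger", "castle", "keys", "", "banana"]:
        label = classify_response(LISTS, "lawn", "plate", response)
        print(repr(response), "->", label)
```

Checking the categories in this order matters: a response is tested as an across-list intrusion before it is tested as an old-word intrusion, because both involve a target from another list and differ only in whether the target belongs to the tested cue.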

On average, participants made 8.33 (5.32) across-list intrusions, 1.17 (1.09)

within-list intrusions, 1.54 (1.84) old-word intrusions, 3.75 (3.99) no answers, and

1.83 (1.99) new word errors. Because across-list intrusions occurred significantly

more often than any other error, we will focus the rest of the error discussion on those

only. A multivariate analysis of variance (MANOVA) confirmed that significant differences between errors committed in each group were in the across-list intrusions,

F(1, 23) = 28.60, MSE = 12.87, p < .0001, R² = 0.57. There were no significant differences between the conditions for any other error types.

Participants in the context condition committed 4.42 (3.45) across-list intrusions,

whereas participants in the no-context condition committed 12.25 (3.72). The interference paradigm effectively reduced the pairing of the appropriate target with each

cue word in the no-context condition. Participants in the no-context condition committed nearly three across-list intrusions for every one across-list error committed

by participants in the context condition.

Discussion

Although small effect sizes have typically been found in context-dependent memory studies in the past, our study demonstrates that large effect sizes can be achieved.

In this study, we showed that by engineering a laboratory “environment” having many

cues similar to distinctive real-world settings, a substantially larger memory advantage could be evoked than has been previously found in laboratory experiments that

focused on isolating the effect of specific factors in context-dependent memory.


It is important to emphasize that improved performance in the context condition

was not attributable to whether the participants in this group were better at encoding

the lists. All participants were required to learn all the lists to the same 100% correct

criterion; moreover, the number of trials required to do so did not differ between

groups. Some of the reasons our study yielded an effect size that was larger than normal can be clearly elucidated, but others are speculation.

First, we believe that the combination of multiple cues used in the InfoCockpit

was important in producing the large memory effect. Previous research has shown

that environmental sounds that are paired with congruent images during encoding

increased memory for objects within the environment (Davis et al., 1999). Using other

cues (such as smell and music), Parker and Gellatly (1997) found that participants

who were given the combination of cues showed better memory performance than

those who were given the cues in isolation. The combination yielded the largest advantage when longer intervals were allowed between study and test (e.g., 24–48

hr). Finally, recent work in our lab revealed that neither images of an environment

nor sounds alone produced as large a memory effect as the combination of the two

(Stefanucci & Proffitt, 2007).

We argue that the combination of cues served to engage participants in the environments at encoding and provided a meaning to the environment that was more easily accessed later. Thus, we believe that the combination of congruent images, sounds,

faces, and voices used in this experiment provided the participants with integrated

cues on which to “hook” their memories. In the current work, we were interested in

engineering an environment that provided a large memory advantage, one of practical significance, rather than exploring which environments can serve as useful contexts for memory (as in Stefanucci & Proffitt, 2007). We designed an environment

that included faces and voices because these cues are prevalent in workplaces and are

very memorable, according to previous context-dependent and source memory research (Craik & Kirsner, 1974; Davies, 1988; Dodson & Shimamura, 2000; Smith

& Vela, 2001). In addition, we decided to make these cues available to the user at

both encoding and retrieval, which had not been done in past research.

However, the present study did not test whether manipulating the relationship

between the context and the to-be-remembered information could improve memory performance. The contexts were in no way related to the word pairs that participants learned. But previous research has shown that when context is related to the

learned information, it sometimes does not increase memory performance beyond

an unrelated context (Murnane, Phelps, & Malmberg, 1999). Anecdotally, we would

expect an even larger effect to result from strengthening the relationship between

the context and the to-be-remembered information, but only if the context itself did

not distract from learning. Therefore, we tried to build engaging environments that

did not draw attention from the to-be-remembered information. The sights, sounds,

faces, and voices were interesting but not dynamic enough to distract the participant

while learning.

The paradigm used in this study had important characteristics that also may have

added to the overall size of our effect. Reinstatement of the cues was important in


that it reminded participants of the environment at encoding. Matching cues at encoding and retrieval has been effective in producing context-dependent memory

effects in previous studies (J. R. Anderson, 1983; Bower, 1967; McGeoch, 1942;

Raaijmakers & Shiffrin, 1981; Tulving, 1974, 1983). Certainly some of the effect observed in this experiment is attributable to the reinstatement of the cues at retrieval,

but it is important to consider future implementations that could further augment

this design element.

For example, it would be interesting to address whether the reinstatement needs

to occur within the office environment or whether it could occur outside the office

on a handheld computing device such as a personal digital assistant (PDA) or even

a cell phone. Research by Brad Myers and colleagues has shown that interfacing a

PDA or handheld device with a desktop computer in real time is possible and becoming more pervasive every year (Myers, 2001). It is possible that reinstatement of the

contextual cues on a PDA outside the office could increase recall.

Interference also happens in everyday life, so we thought it appropriate to include

that in our paradigm as well. For a more specific example, consider the potential for

interference that would be encountered by a building contractor. Much of his work

goes on in his office: phoning employees, e-mailing customers, and ordering materials online. If he gets a call from a confused driver who cannot remember where to

deliver a drywall shipment, then he must remember either the day he made the order

or the project for which the order was made. When the contractor tries to recall the

details of one of these orders, recalling the context in which he made the order is of

little help because his same office was used in every order that he has placed. These

other orders interfere with the specific information that he is trying to recall.

In the study reported, participants’ memory for word pairs was tested, which is

much simpler than the complex world of the contractor and may not generalize to

the contractor scenario. However, generalizability is always a problem in memory

research and would still be a problem even if we had tested a contractor in his everyday work environment. For instance, a contractor’s daily work environment and

to-be-remembered information is very different from a stockbroker’s or an airplane

pilot’s environment. Memory relapses for those careers could be even more detrimental than for a contractor. The aspect of our experiment that does generalize is the

interference built into the experimental design.

Interference between similar information is common in office environments. The

daily work associated with one project is often very similar to the work for other

projects, no matter what the profession. Consequently, similar information is often

highly confusable and susceptible to interference. One way to keep the information

separate would be to associate it with different contexts (Smith, 1982, 1984; Strand,

1970). One could imagine that interference would be reduced by having a different

office for each project and by associating different rooms with different projects. For

our contractor, this proposal would enable him to discriminate where to send the drywall because he would have diagnostic cues, such as different environments for each

of the projects, to aid him in retrieval of the correct order. Indeed, past psychology

research has shown that encoding information in multiple environments increases


the ability to recall the learned information later (Smith, 1982, 1984). Realistically,

though, the costs of moving from room to room would certainly outweigh any

memory benefits. In addition, most offices tend to look similar, which suggests that

the overall benefit of moving from room to room would be small (Smith, 1979).

The implications of our findings are relevant for offices and workplaces of tomorrow. The InfoCockpit provides a way of turning any environment into multiple settings that can be useful memory cues. However, we do realize that there is a scaling

problem with this suggestion. Suppose there are many contracts with which the contractor is involved, and a different place is tied to each of these contracts. Given a

large number of contexts, remembering which context was associated with which

contract would become a formidable task. We recommend the creation of hierarchies

within contexts to address this problem. Similar projects could have related settings

to narrow the search for the appropriate context and memory cue. In this manner, a

project that has multiple components could be associated with a family of settings,

such as “small towns in Tuscany.” Then each of the contracts or people associated

with that project could have a particular Tuscan town (e.g., Lucca, Siena, Pisa) assigned to it. This practice would allow the contractor to more easily recall a high-level category, such as Tuscany, and then search the towns associated with Tuscany

to locate the information he needs.
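
The hierarchy proposed above can be illustrated with a minimal sketch. It is only an illustration under stated assumptions, not part of the InfoCockpit itself: the class name ContextHierarchy, its methods, and the three contract labels are hypothetical, and only the family "small towns in Tuscany" and the town names come from the example in the text. The sketch stores contracts under a two-level key (family of settings, then individual setting), so that retrieval first narrows to the family and then searches only the settings within it.

```python
class ContextHierarchy:
    """Two-level lookup: family of settings -> individual setting -> contract.

    Hypothetical illustration of the hierarchy described in the text;
    none of these names come from the original study.
    """

    def __init__(self):
        # Maps each family name to a dict of {setting: contract}.
        self._families = {}

    def assign(self, family, setting, contract):
        """Tie one contract to one setting within a family of settings."""
        settings = self._families.setdefault(family, {})
        if setting in settings:
            # A setting is a useful cue only if it points to a single contract.
            raise ValueError(f"{setting!r} is already tied to {settings[setting]!r}")
        settings[setting] = contract

    def settings_in(self, family):
        """Step 1 of retrieval: list the settings that belong to a family."""
        return sorted(self._families.get(family, {}))

    def contract_for(self, family, setting):
        """Step 2 of retrieval: find the contract tied to a specific setting."""
        return self._families[family][setting]


# Hypothetical contracts for one project, each assigned its own Tuscan town.
hierarchy = ContextHierarchy()
hierarchy.assign("small towns in Tuscany", "Lucca", "drywall contract")
hierarchy.assign("small towns in Tuscany", "Siena", "electrical contract")
hierarchy.assign("small towns in Tuscany", "Pisa", "plumbing contract")

print(hierarchy.settings_in("small towns in Tuscany"))
print(hierarchy.contract_for("small towns in Tuscany", "Siena"))
```

Because each family holds only a few settings, the search at the second step stays small even as the total number of contracts grows, which is the point of the hierarchical scheme.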

Conclusions

Willingham (2001) stated in his introductory cognition textbook that students

who are asked whether memory is better if physical environments are matched at encoding and retrieval should answer “just a little” (p. 214). We believe this is true for

many of the simple laboratory settings used in past context-dependent memory studies, but the context-dependent memory benefit can be large if multiple cues are presented within distinctive simulated real-world environments. When presented with

a combination of distinct images, sounds, and faces, the participants in this study correctly attributed 131% more words than did those who had no unique contexts at

encoding. By combining many factors known to influence context-dependent memory, we achieved an effect size that may be of practical significance and that could be

brought to bear on everyday work practices to increase productivity and efficiency.

Acknowledgment

We thank Tom Banton and Steve Jacquot for helping us create the virtual environments. This research was supported by NSF ITR/Carnegie Mellon Grant 0121629

and ONR Grant N000140110060 to the third author. This research was conducted

at the University of Virginia, but Jeanine K. Stefanucci has now moved to the College

of William & Mary.

Journal of Cognitive Engineering and Decision Making / Winter 2007

References

Anderson, J. R. (1983). A spreading activation theory of memory. Journal of Verbal Learning and

Verbal Behavior, 22, 261–295.

Anderson, M. C., & Neely, J. H. (1996). Interference and inhibition in memory retrieval. In E.

Bjork & R. Bjork (Eds.), Memory: Handbook of perception and cognition (2nd ed., pp. 237–313).

San Diego, CA: Academic Press.

Bilodeau, I. M., & Schlosberg, H. (1951). Similarity in stimulating conditions as a variable in

retroactive inhibition. Journal of Experimental Psychology, 41(3), 199–204.

Bjork, R. A., & Richardson-Klavehn, A. (1988). On the puzzling relationship between environmental context and human memory. In C. Izawa (Ed.), Current issues in cognitive processes: The

Tulane Flowerree Symposium on Cognition (pp. 313–344). Mahwah, NJ: Erlbaum.

Bower, G. H. (1967). A multi-component theory of the memory trace. In K. W. Spence & J. T.

Spence (Eds.), The psychology of learning and motivation: Advances in research and theory (pp.

299–325). New York: Academic Press.

Craik, F. I. M., & Kirsner, K. (1974). The effect of speaker’s voice on word recognition. Quarterly

Journal of Experimental Psychology, 26, 274–284.

Crowder, R. G. (1976). Principles of learning and memory. Mahwah, NJ: Erlbaum.

Dallett, K., & Wilcox, S. G. (1968). Contextual stimuli and proactive inhibition. Journal of Experimental Psychology, 18, 725–740.

Davies, G. M. (1988). Faces and places: Laboratory research on context and face recognition. In

G. M. Davies & D. M. Thomson (Eds.), Memory in context: Context in memory (pp. 35–53).

New York: Wiley.

Davis, E. T., Scott, K., Pair, J., Hodges, L. F., & Oliverio, J. (1999). Can audio enhance visual perception and performance in a virtual environment? Proceedings of the Human Factors and Ergonomics Society 43rd Annual Meeting (pp. 1197–1201). Santa Monica, CA: Human Factors and

Ergonomics Society.

Dodson, C. S., & Shimamura, A. P. (2000). Differential effects of cue dependency on item and source

memory. Journal of Experimental Psychology: Learning, Memory, & Cognition, 26, 1023–1044.

Fernandez, A., & Glenberg, A. M. (1985). Changing environmental context does not reliably affect

memory. Memory & Cognition, 13, 333–345.

Francis, W. N., & Kucera, H. (1967). Frequency analysis of English usage: Lexicon and grammar.

Boston: Houghton Mifflin.

Godden, D. R., & Baddeley, A. D. (1975). Context-dependent memory in two natural environments:

On land and underwater. British Journal of Psychology, 66, 325–331.

Godden, D. R., & Baddeley, A. (1980). When does context influence recognition memory? British

Journal of Psychology, 71, 99–104.

Greenspoon, J., & Ranyard, R. (1957). Stimulus conditions and retroactive inhibition. Journal of

Experimental Psychology, 53(1), 55–59.

Jacoby, L. L. (1983). Perceptual enhancement: Persistent effects of an experience. Journal of Experimental Psychology: Learning, Memory, & Cognition, 9, 21–38.

McGeoch, J. A. (1942). The psychology of human learning. New York: Longmans.

Murnane, K., Phelps, M. P., & Malmberg, K. (1999). Context-dependent recognition memory:

The ICE theory. Journal of Experimental Psychology: General, 128, 403–415.

Myers, B. A. (2001). Using handhelds and PCs together. Communications of the ACM, 44(11),

34–41.

Nairne, J. S. (2002). The myth of the encoding-retrieval match. Memory, 10, 389–395.

Nixon, S. J., & Kanak, N. J. (1981). The interactive effects of instructional set and environmental

context changes on the serial position effect. Bulletin of the Psychonomic Society, 18(5), 237–240.

Parker, A., & Gellatly, A. (1997). Moveable cues: A practical method for reducing context-dependent

forgetting. Applied Cognitive Psychology, 11(2), 163–173.

Raaijmakers, J. G., & Shiffrin, R. M. (1981). Search of associative memory. Psychological Review,

88, 93–134.

Smith, S. M. (1979). Remembering in and out of context. Journal of Experimental Psychology: Human

Learning and Memory, 5, 460–471.

Smith, S. M. (1982). Enhancement of recall using multiple environmental contexts during learning. Memory & Cognition, 10, 405–412.

Smith, S. M. (1984). A comparison of two techniques for reducing context-dependent forgetting.

Memory & Cognition, 12, 477–482.

Smith, S. M. (1985). Background music and context-dependent memory. American Journal of Psychology, 98, 591–603.

Smith, S. M., Glenberg, A., & Bjork, R. A. (1978). Environmental context and human memory.

Memory & Cognition, 6, 342–353.

Smith, S. M., & Vela, E. (2001). Environmental context-dependent memory: A review and meta-analysis. Psychonomic Bulletin & Review, 8, 203–220.

Stefanucci, J. K., & Proffitt, D. R. (2002). Providing distinctive cues to augment human memory.

In W. Gray & C. Schunn (Eds.), Proceedings of the Twenty-Fourth Annual Conference of the Cognitive Science Society (p. 840). Mahwah, NJ: Erlbaum.

Stefanucci, J. K., & Proffitt, D. R. (2007). Which aspects of contextual environments bind to memories?

Manuscript submitted for publication.

Strand, B. Z. (1970). Change of context and retroactive inhibition. Journal of Verbal Learning and

Verbal Behavior, 9, 202–206.

Tulving, E. (1974). Recall and recognition of semantically encoded words. Journal of Experimental

Psychology, 102, 778–787.

Tulving, E. (1983). Elements of episodic memory. New York: Oxford University Press.

Willingham, D. B. (2001). Cognition: The thinking animal. Upper Saddle River, NJ: Prentice Hall.

Jeanine K. Stefanucci is an assistant professor in the Departments of Psychology and Neuroscience at the College of William & Mary. She received her Ph.D. in cognitive psychology from

the University of Virginia in 2006.

Shawn P. O’Hargan is an associate at the law firm of Kirkland & Ellis, LLP, in New York City. He

received his J.D. from the University of Virginia in 2006. Prior to attending law school, Shawn

was a research associate in the Proffitt Perception Lab at the University of Virginia, where he

helped to conduct the research described here.

Dennis R. Proffitt is the Commonwealth Professor of Psychology at the University of Virginia,

where he is a member of the cognitive area graduate program in the Psychology Department,

director of the undergraduate degree program for cognitive science, and a faculty member

of the graduate degree program in neuroscience.
