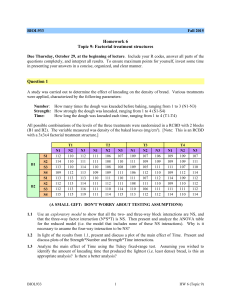

Three-way ANOVA Chapter
