Sensitivity Analysis for Search-Based Software Project Management

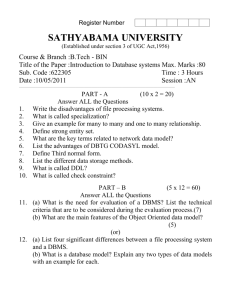


Francisco de Jose

King’s College London

MSc Computing And Internet Systems 2007/2008

School of Physical Sciences and Engineering

Computer Science Department

Supervised by Mark Harman

August 2008

“Live as if you were to die tomorrow. Learn as if you were to live forever.”

Gandhi

Abstract

This paper introduces a new perspective in the field of Software Engineering, pursuing a feasible alternative to the classical techniques of Software Project Management through the use of Genetic Algorithms (GAs) in Sensitivity Analysis (SA). Such a solution matters to managers because of the increasing complexity of software projects. The use of GAs in SA can provide new means to improve the initial schedule of a project and thereby tackle the classical Project Scheduling Problem (PSP). The proposed implementation answers the managers' need to identify the most sensitive tasks and offers new ways to optimize their project in terms of duration. This paper describes the application of GAs to the process of resource allocation. Moreover, it analyses the impact of breaking dependencies within the definition of a project. The alternative detailed in this paper indicates a suitable direction for future work: applying SA through GAs to all the parameters of a project. In that setting, the parameters in which the smallest alteration produces the biggest negative impact are the most sensitive factors of the entire project.

Key words: search-based, software engineering, genetic algorithms, sensitivity analysis, project management.

Acknowledgements

First, I wish to convey my sincere gratitude to my supervisor, Prof. Mark Harman, who offered invaluable assistance, support and guidance, and without whose knowledge and counsel this study would not have been successful. I appreciate his direction, technical support and supervision at all levels of the research project.

I must also give special thanks to my colleagues from the University of Malaga, Juan Jose Durillo and Gabriel Luque, for their suggestions and advice, which have been of inestimable help in the development of this project.

I would also like to thank Simon Poulding from the University of York for providing valuable advice and assistance in the statistical analysis of this dissertation, for which I am really grateful.

Most of all I would like to express my deepest love to my family: my parents, Francisco and Maria Del Carmen, and my sister Irene. Their endless support, motivation and encouragement throughout this Master's degree and my life have contributed notably to this project. I owe them my eternal gratitude.


Contents

Abstract
Acknowledgements
List of Figures
List of Tables
Abbreviations
Symbols

1 Introduction
  1.1 Simple Example
  1.2 Roadmap

2 Problem Statement
  2.1 Problem Definition
  2.2 Task Precedence Graph
    2.2.1 Task Precedence Graph (TPG)
    2.2.2 Critical Path Method (CPM)
    2.2.3 Project Evaluation and Review Technique (PERT)

3 Literature Survey
  3.1 Search-based Software Engineering
  3.2 Search-Based Software Project Management
    3.2.1 Genetic Algorithms
    3.2.2 Sensitivity Analysis
  3.3 Validation of the work

4 The Model Introduced by this Thesis
  4.1 Specific Details of the Model
    4.1.1 Scenario
    4.1.2 Evaluation (Fitness Function)
    4.1.3 Recombination (Operator)

5 Resource Allocation
  5.1 Non-Classical GA
    5.1.1 Features
    5.1.2 First Version
      5.1.2.1 Individuals and Populations
      5.1.2.2 Evaluations
      5.1.2.3 Method of Selection
      5.1.2.4 Recombination
      5.1.2.5 Mutation
      5.1.2.6 Results
    5.1.3 Second Version
      5.1.3.1 Method of Selection
      5.1.3.2 Results
  5.2 Classical GA
    5.2.1 First Version
      5.2.1.1 Features
      5.2.1.2 Individuals and Populations
      5.2.1.3 Evaluation
      5.2.1.4 Method of Selection
      5.2.1.5 Recombination
      5.2.1.6 Mutation
      5.2.1.7 Results
    5.2.2 Second Version
      5.2.2.1 Features
      5.2.2.2 Individuals and Populations
      5.2.2.3 Evaluation
      5.2.2.4 Method of Selection
      5.2.2.5 Recombination
      5.2.2.6 Mutation
      5.2.2.7 Results
    5.2.3 Third Version
    5.2.4 Features
    5.2.5 Evaluation
      5.2.5.1 New Parent population
      5.2.5.2 Results
  5.3 Conclusion

6 Sensitivity Analysis
  6.1 Methodology to adapt data
  6.2 Project definitions
    6.2.1 Project 1: CutOver
    6.2.2 Project 2: Database
    6.2.3 Project 3: QuotesToOrder
    6.2.4 Project 4: SmartPrice
  6.3 Sensitivity Analysis Methodology
  6.4 Sensitivity Analysis Results
    6.4.1 Results Project 1
    6.4.2 Results Project 2
    6.4.3 Results Project 3
    6.4.4 Results Project 4
  6.5 Statistical Analysis
    6.5.1 Statistical Techniques and Methodology
    6.5.2 Statistical Tools
    6.5.3 Statistical Results
      6.5.3.1 Statistical Results Project 1
      6.5.3.2 Statistical Results Project 2
      6.5.3.3 Statistical Results Project 3
      6.5.3.4 Statistical Results Project 4
  6.6 Threats to Validity
  6.7 Discussion

7 Conclusions

8 Future Work

A Appendix

B Appendix

Bibliography

List of Figures

1.1 Example 1
1.2 Example 2
1.3 Example 3
2.1 TPG
2.2 CPM
3.1 GA’s methodology. Evolutionary Testing.
4.1 Two-point crossover operator method
5.1 TPG Example
5.2 Sony Results Non-Classical GA V1
5.3 Comparison Non-classical GA V1 and V2 with 1000 evaluations
5.4 Comparison Non-classical GA V1 and V2 with 10000 evaluations
5.5 Comparison Non-classical GA V1 and V2 with 100000 evaluations
5.6 Average comparison Non-classical GA V1 and V2
5.7 Comparison Non-classical GA V1 and V2 and Classical GA V1
5.8 Comparison Average Non-classical GA V1 and V2 and Classical GA V1
5.9 Comparison Average Non-classical GA V1 and V2 and Classical GA V1 and V2
5.10 Comparison Average Non-classical GA V1 and V2 and Classical GA V1, V2, and V3
6.1 Project 1. Normalisation Test 1.
6.2 Project 1. Normalisation Test 2.
6.3 Project 1. Normalisation Test 3.
6.4 Project 1. Normalisation Test 4.
6.5 Project 2. Normalisation Test 1.
6.6 Project 2. Normalisation Test 2.
6.7 Project 2. Normalisation Test 3.
6.8 Project 2. Normalisation Test 4.
6.9 Project 3. Normalisation Test 1.
6.10 Project 3. Normalisation Test 2.
6.11 Project 3. Normalisation Test 3.
6.12 Project 3. Normalisation Test 4.
6.13 Project 4. Normalisation Test 1.
6.14 Project 4. Normalisation Test 2.
6.15 Project 4. Normalisation Test 3.
6.16 Project 4. Normalisation Test 4.

List of Tables

5.1 TPG representation
5.2 Duration representation
5.3 Resource representation
5.4 Resource allocation representation
5.5 Sony project resource allocation
5.6 Sony project results for Non-classical GA V1
5.7 Sony Average Results Non-Classical GA V1
5.8 Sony project results for Non-classical GA V2
5.9 Average comparison Non-classical GA V1 and V2
5.10 Sony project resource allocation
5.11 Sony project results for Classical GA V1
5.12 Average Non-classical GA V1 and V2 and Classical GA V1
5.13 Resource allocation representation Classical GA V2
5.14 Sony project results for Classical GA V2
5.15 Average Non-classical GA V1 and V2 and Classical GA V1 and V2
5.16 TPG Representation Second Version
5.17 Sony project results for Classical GA V3
5.18 Average Non-classical GA V1 and V2 and Classical GA V1, V2, and V3
6.1 Original project definition
6.2 Transformation project definition table
6.3 Adapted project definition
6.4 Final project definition
6.5 Projects definition
6.6 Top 10 dependencies Project 2
6.7 Top 10 dependencies Project 3
6.8 Top 10 dependencies Project 4
6.9 Top 10 dependencies Project 1
6.10 P-value Rank Sum test Top 10 dependencies Project 1
6.11 P-value Rank Sum test Top 10 dependencies Project 2
6.12 P-value Rank Sum test Top 10 dependencies Project 3
6.13 P-value Rank Sum test Top 10 dependencies Project 4
A.1 Top 10 Results Project 1 Test 1
A.2 Top 10 Results Project 1 Test 2
A.3 Top 10 Results Project 1 Test 3
A.4 Top 10 Results Project 1 Test 4
A.5 Top 10 Results Project 2 Test 1
A.6 Top 10 Results Project 2 Test 2
A.7 Top 10 Results Project 2 Test 3
A.8 Top 10 Results Project 2 Test 4
A.9 Top 10 Results Project 3 Test 1
A.10 Top 10 Results Project 3 Test 2
A.11 Top 10 Results Project 3 Test 3
A.12 Top 10 Results Project 3 Test 4
A.13 Top 10 Results Project 4 Test 1
A.14 Top 10 Results Project 4 Test 2
A.15 Top 10 Results Project 4 Test 3
A.16 Top 10 Results Project 4 Test 4

Abbreviations

AEC    Architecture, Engineering and Construction
BRSA   Broad Range Sensitivity Analysis
CPD    Critical Path Diagram
CPM    Critical Path Method
DOE    Design of Experiments
FAST   Fourier Amplitude Sensitivity Test
GA     Genetic Algorithm
PERT   Project Evaluation and Review Technique
PSP    Project Scheduling Problem
SA     Sensitivity Analysis
SD     System Dynamics
TPG    Task Precedence Graph

Symbols

RAND_MAX    number    32767    integer

To my family


Chapter 1

Introduction

The main goal of this project is to develop an implementation that improves on the original schedule in software project management by using search-based software engineering techniques. The first phase aims to deploy a solution for the process of resource allocation in the definition of the project by applying genetic algorithms (GAs). This work supplements that first goal with a second aspect: improving planning in software project management by breaking dependencies and performing sensitivity analysis (SA). Software project management is a significant and current area, with increasing complexity and demand from almost every sector of industry and not only from the field of New Technologies. Nowadays, projects in every engineering or industrial sector, such as architecture, energy, aeronautics, aerospace, and many more, involve the use of specific software, and therefore the Project Scheduling Problem (PSP) is more present than ever. As a result, companies and their managers visibly demand new tools and techniques to improve the cost and duration of software projects, the variables which define the PSP. Hence, the idea of contributing new means to advance, enhance, and improve the solutions in software project management is significant enough to justify this paper.

The remainder of the paper is organised as follows. The next section sets out, in general terms, the problem covered in this research. Thereafter, the previous related work and the main background material are reviewed, analysing the ideas proposed in that material and the main differences with respect to the focus of this research. The paper then introduces a section with the main foundations which support it and presents the basis of the model developed. The next section explains the work that was performed during this research, specifying the main techniques used as well as the methodology adopted. In this sense, that section states concretely the implications of this paper for software project management, and its first subsection gives an accurate specification of the technical details. The following section details the two different algorithms developed and the results obtained by applying those GAs to diverse scenarios constructed from data of real projects. Next, a complete section explains the SA performed and the process of breaking dependencies, together with the results obtained. The following section details the conclusions drawn from analysing the results. The last section of this paper describes the possible next steps and the future work in the development of this research.

1.1 Simple Example

The idea behind this paper is exemplified in Figure 1.1, Figure 1.2, and Figure 1.3. The first figure represents the task precedence graph of a simple project definition. This project definition contains five tasks, their durations, and the dependencies between those tasks. For this simple example it is assumed that the resource allocation for those tasks has already been made, producing the durations indicated by the numbers inside the octagons which represent the tasks. In addition, it is also assumed that, given this resource allocation, every task can be performed at any time. This part would correspond to the first phase of this research.

Taking into consideration all the premises detailed in the previous paragraph, and according to the information in Figure 1.1, the optimal completion time for this project definition would be 26 units of time.

Figure 1.1: TPG + Duration. Example 1.

The second phase of this research consists of breaking dependencies and performing sensitivity analysis to evaluate whether it is possible to optimise the overall completion time for the project definition. Applied to this particular example, this idea makes it feasible to reduce the schedule considerably. As can be observed in Figure 1.2, if the dependency between task 2 and task 3 is removed, the scenario represented in Figure 1.3 allows new completion times. In this case, the new optimal completion time would be 16 units of time. Hence, it would be possible to decrease the original schedule by ten units of time, which is a significant improvement.

Figure 1.2: TPG + Duration. Example 1. Breaking dependencies.

Figure 1.3: TPG + Duration. Example 1. Resulting TPG after breaking dependencies.

Furthermore, it is necessary to indicate that after removing dependencies the process of resource allocation would be performed again, and therefore the number of possible combinations is considerable, yet not all of them are desirable.

The process explained and illustrated in the previous figures is a simplification of the model developed. The purpose of this example is to provide a general idea of the main goal of this research.
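The arithmetic behind this example can be sketched in a few lines of Python. The durations and edges below are hypothetical, chosen only so that the totals match the 26 and 16 units of time quoted above; they are not read from Figures 1.1 to 1.3, and the function name `completion_time` is an assumption of this sketch. Under the premise that every task can start as soon as its predecessors finish, the completion time is the length of the longest path through the (acyclic) task precedence graph.

```python
def completion_time(durations, dependencies):
    """Return the longest-path length of an acyclic task graph.

    durations:    dict mapping task id -> duration
    dependencies: list of (before, after) pairs
    """
    preds = {task: [] for task in durations}
    for before, after in dependencies:
        preds[after].append(before)

    finish = {}  # memoised earliest finish time per task

    def finish_time(task):
        if task not in finish:
            # A task starts when its latest predecessor has finished.
            start = max((finish_time(p) for p in preds[task]), default=0)
            finish[task] = start + durations[task]
        return finish[task]

    return max(finish_time(task) for task in durations)


# Hypothetical five-task project (not the data of Figure 1.1).
durations = {1: 4, 2: 6, 3: 10, 4: 2, 5: 6}
dependencies = [(1, 2), (2, 3), (3, 5), (1, 4), (4, 5)]

original = completion_time(durations, dependencies)

# Break the dependency between task 2 and task 3 and evaluate again.
broken = [dep for dep in dependencies if dep != (2, 3)]
improved = completion_time(durations, broken)

print(original, improved)
```

With these assumed values the chain 1, 2, 3, 5 determines the original schedule; once the edge from task 2 to task 3 is removed, task 3 can start immediately and the path 3, 5 becomes the longest one.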


1.2 Roadmap

The project first developed an algorithm able to cover the considered parameters of the PSP in order to provide an accurate benchmark for the resource allocation in the project definition. This algorithm worked on different combinations, where a combination is one possible assignment of the available resources to the defined tasks. This part corresponds to the first phase of the research and, as Section 5 of this paper shows, various alternatives were considered and several scenarios were tested.

The second phase of the project carried out the process of breaking dependencies and re-running the algorithm developed in the first part, in order to evaluate whether it is possible to produce improvements by reducing the benchmark, that is, the original optimal completion time. In order to produce trustworthy results, the model developed was applied to different scenarios based on data from real projects. Thus, the input data set which fed the model produced valuable output, since the possible spectrum of scenarios is infinite whereas the real framework might be limited. The results obtained are evaluated by performing sensitivity analysis over the solutions provided by the model. This procedure measures the relative impact of every modification introduced in the original scenario. The data analysed is the effect produced on the original completion time by removing dependencies and altering the resources available. This method tries to identify whether the dependencies which produce improvement are always the same and how they behave in the different scenarios.

In addition, based on the results collected in the sensitivity analysis, a statistical analysis was performed to add reliability to the data produced by the model developed.
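As a minimal sketch of the procedure described in this roadmap, the loop below removes one dependency at a time, re-evaluates the completion time, and ranks the dependencies by their relative impact. Two simplifications are assumed: a plain longest-path evaluator stands in for the GA-based resource allocation that the project actually re-runs, and the task data are hypothetical. The names `completion_time` and `rank_dependencies` are illustrative only.

```python
def completion_time(durations, dependencies):
    """Longest-path length of an acyclic task graph (stand-in evaluator)."""
    preds = {task: [] for task in durations}
    for before, after in dependencies:
        preds[after].append(before)
    finish = {}

    def finish_time(task):
        if task not in finish:
            start = max((finish_time(p) for p in preds[task]), default=0)
            finish[task] = start + durations[task]
        return finish[task]

    return max(finish_time(task) for task in durations)


def rank_dependencies(durations, dependencies):
    """Remove each dependency in turn and rank by completion-time gain."""
    baseline = completion_time(durations, dependencies)
    ranking = []
    for dep in dependencies:
        remaining = [d for d in dependencies if d != dep]
        gain = baseline - completion_time(durations, remaining)
        # Relative impact: fraction of the original schedule that is saved.
        ranking.append((dep, gain, gain / baseline))
    ranking.sort(key=lambda row: row[1], reverse=True)
    return baseline, ranking


# Hypothetical project data, used only to exercise the loop.
durations = {1: 4, 2: 6, 3: 10, 4: 2, 5: 6}
dependencies = [(1, 2), (2, 3), (3, 5), (1, 4), (4, 5)]

baseline, ranking = rank_dependencies(durations, dependencies)
for dep, gain, relative in ranking:
    print(dep, gain, f"{relative:.1%}")
```

Repeating the same ranking over several scenarios (for example, with different resources available) would show whether the same dependencies stay at the top of the list, which is the question the sensitivity analysis sets out to answer.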


Chapter 2

Problem Statement

2.1 Problem Definition

In the discipline of software project management the main objective is to achieve the project goals within the classical constraints of time and budget, using the available resources. To accomplish this aim in the best way, companies and managers wish to optimize the allocation of resources to the tasks which define the project. Based on this distribution, each task has a specific start and completion time, and the whole set defines the schedule of the project. This plan is classically illustrated using Gantt charts, the most common technique for representing the phases of a project.

Nevertheless, this assignment is not straightforward, since the tasks might have dependencies between them. Therefore, it might be necessary to finish one or more tasks before the next one can start. The Task Precedence Graph is the main technique used to represent the tasks of the project and their dependencies.

This method of representing a project should not be mistaken for the other two main techniques, the Project Evaluation and Review Technique (PERT) and the Critical Path Method (CPM), which are used to analyse and represent the schedule of the set of activities that compose the definition of the project. This difference is explained in detail in Section 2.2 of this paper.

The scope of this research lies within the context of developing new techniques in software project management to improve the optimal solutions of the PSP. In pursuance of this aim, this research focuses on resource allocation and on breaking dependencies between tasks to find a new schedule which improves on the completion time of the original optimal one. To this end, the main technique, fully detailed in Section 3.2.1 of this paper, is GAs combined with SA. In consequence, the idea of optimizing completion times, probably the most complicated concern that the PSP raises in software project management, reasonably validates this paper.

2.2 Task Precedence Graph

This section establishes the differences between the Task Precedence Graph (TPG), which is a method of representation, and the Project Evaluation and Review Technique (PERT) and the Critical Path Method (CPM), which are techniques or methods for project management. The reason for doing so is that the similarity of the concepts and diagram patterns used to represent these procedures could lead to confusion.

Usually, specifying the tasks and identifying the dependencies between them is the first step in the definition of a project, and the TPG is the best approach to depict this early stage. After this point, it is common in the management of a plan to decide how much time must be spent on every task in order to complete it. Here lies the key point which distinguishes the TPG from PERT and CPM.

2.2.1 Task Precedence Graph (TPG)

The TPG is just a method of illustrating the definition of a project by representing two different features:

1. Tasks

2. Dependencies

Figure 2.1: Example of Task Precedence Graph.

This methodology represents only the two features mentioned. It does not take into consideration any measure of the duration of each task and therefore can establish neither the well-known Critical Path Diagram (CPD) nor the minimum time required to complete the whole project. Hence, determining the time necessary to finalise a task is a completely different activity. This fact allows a separate list of durations to be established for the tasks, calculated in terms of “unit of time / person” or another kind of measure. As a result, the completion time of every task can vary depending on the resources assigned to it.
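The separation described above can be illustrated with a small data-structure sketch: the TPG holds only tasks and dependencies, while effort is kept in a separate list and turned into a duration once resources are assigned. The linear rule `duration = effort / people` is an assumption made purely for illustration (real teams rarely scale this cleanly), and all names and values below are hypothetical.

```python
# TPG: tasks and dependencies only, no durations.
tpg = {
    "tasks": ["design", "code", "test"],
    "dependencies": [("design", "code"), ("code", "test")],
}

# Separate list of effort per task, in person-days (hypothetical values).
effort = {"design": 6, "code": 12, "test": 4}


def task_durations(effort, staffing):
    """Duration of each task in days, assuming effort divides evenly
    among the people assigned to it (an illustrative simplification)."""
    return {task: effort[task] / staffing[task] for task in effort}


# The same TPG yields different durations under different allocations.
one_each = task_durations(effort, {"design": 1, "code": 1, "test": 1})
more_coders = task_durations(effort, {"design": 1, "code": 3, "test": 1})

print(one_each["code"], more_coders["code"])
```

Keeping the graph and the effort list apart is what lets a search procedure vary the staffing without touching the definition of the project itself.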

2.2.2 Critical Path Method (CPM)

The CPM is a mathematical method for scheduling the definition of a project which is able to calculate the completion time. Although the management of a project involves many factors which can be added to enhance the CPM, the basic representation entails three main features:

1. Tasks

2. Dependencies

3. Duration

Figure 2.2: Example of Critical Path Method

In this sense, it is understood that the CPM shows a representation where the duration of each task is fixed, for reasons such as that the resources which perform the tasks have already been assigned or that the estimate of duration does not depend on the allocation of resources. The CPM is able to determine which tasks are critical for the completion of the project and which are not and, as a result, to construct the Critical Path Diagram and obtain the best completion time using the durations of the tasks and their dependencies. Furthermore, this methodology determines the earliest and latest times at which every task can start and finish.


Newer versions of the CPM and its supporting tools allow the concept of resources to be introduced, producing variation in the schedule of the project; this can lead to confusion between the CPM and the TPG.
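The forward and backward passes that yield the earliest and latest times described in this section can be sketched as follows. This is a textbook-style illustration under the assumptions of fixed durations and unlimited resources, not the implementation used in this work; the function name `cpm` and the four-task project are hypothetical.

```python
def cpm(durations, dependencies):
    """Basic CPM: earliest/latest start and finish times, critical tasks."""
    preds = {t: [] for t in durations}
    succs = {t: [] for t in durations}
    for before, after in dependencies:
        preds[after].append(before)
        succs[before].append(after)

    # Topological order (Kahn's algorithm).
    indegree = {t: len(preds[t]) for t in durations}
    ready = [t for t in durations if indegree[t] == 0]
    order = []
    while ready:
        task = ready.pop()
        order.append(task)
        for nxt in succs[task]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    if len(order) != len(durations):
        raise ValueError("dependencies contain a cycle")

    # Forward pass: earliest start (es) and earliest finish (ef).
    es, ef = {}, {}
    for task in order:
        es[task] = max((ef[p] for p in preds[task]), default=0)
        ef[task] = es[task] + durations[task]
    total = max(ef.values())

    # Backward pass: latest finish (lf) and latest start (ls).
    ls, lf = {}, {}
    for task in reversed(order):
        lf[task] = min((ls[s] for s in succs[task]), default=total)
        ls[task] = lf[task] - durations[task]

    # A task is critical when it has no slack between es and ls.
    critical = [t for t in order if ls[t] == es[t]]
    return total, es, ef, ls, lf, critical


# Hypothetical project: A precedes B and C, which both precede D.
durations = {"A": 3, "B": 2, "C": 4, "D": 1}
dependencies = [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D")]
total, es, ef, ls, lf, critical = cpm(durations, dependencies)
print(total, sorted(critical), ls["B"] - es["B"])
```

In this small example task B can slip without delaying the project, whereas any delay in A, C or D lengthens the schedule.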

2.2.3 Project Evaluation and Review Technique (PERT)

The PERT is a second technique used for analysing and scheduling a project. Its approach has much in common with the CPM, but, unlike the TPG, it is a project management technique for planning the completion time and not just for representing tasks and dependencies. The features considered and the means used to display the information are almost analogous to those of the CPM; nevertheless, it differs from the CPM in some respects. PERT is considered a probabilistic methodology because of the way it estimates the duration of each task, whereas CPM is considered a deterministic technique.
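The probabilistic character of PERT can be illustrated with the three-point estimate that is commonly associated with the technique. This formula is not stated in this thesis; it is included here as an assumption for illustration, and the function name `pert_estimate` is hypothetical.

```python
def pert_estimate(optimistic, most_likely, pessimistic):
    """Classic three-point PERT estimate of a task duration.

    Returns the expected duration and its standard deviation.
    """
    expected = (optimistic + 4 * most_likely + pessimistic) / 6
    std_dev = (pessimistic - optimistic) / 6
    return expected, std_dev


# Hypothetical task: 2 days at best, 4 days most likely, 12 days at worst.
expected, std_dev = pert_estimate(2, 4, 12)
```

Where the CPM would use a single fixed duration for the task, this estimate carries a measure of uncertainty alongside the expected value.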


Chapter 3

Literature Survey

This chapter introduces the theoretical foundation for the implementations developed, which aim first to improve the planning process in software project management and then to go a step further by breaking dependencies, through the use of genetic algorithms (GAs) in sensitivity analysis (SA). The aim of this section is therefore to establish the theoretical basis of the main techniques and methodologies used in the development of this paper. To that end, definitions are given for the key concepts needed in the different approaches followed throughout the implementations.

In addition, this chapter aims to identify comprehensively the most relevant literature, whose information directly supports the background on which this paper is built. In doing so, we meet one basic requirement of a sound thesis.

3.1

Search-based Software Engineering

Search-based software engineering is a field within software engineering whose approach is based on the application of metaheuristic search techniques such as genetic algorithms, hill climbing, tabu search, and simulated annealing. These techniques aim to produce exact or approximate solutions to optimization and search problems within software engineering. They have been applied successfully in different areas of software engineering, which are reviewed in the literature survey section.

Search-based software engineering and its metaheuristic techniques rely mainly on mathematical data and representations. As a result, the software engineering problem must be reformulated as a search problem by means of three main stages [1]:

• Problem’s representation

• Fitness Function

• Operators

However, particular search-based techniques may have specific requirements of their own because of their underlying theoretical definition.

The representation of the problem allows the features of the search-based technique to be applied. It usually entails describing the framework by expressing, in a numerical or mathematical system, the parameters involved in the definition of both the software engineering problem and the search-based technique applied. This also allows an exhaustive statistical analysis of the results.

The fitness function is a characterization, in terms of the problem’s representation, of an objective function or formula able to measure, quantify, and evaluate the solutions provided by the search-based technique.

Operators define the method of reproduction used to create candidate solutions. A considerable variety of operators can be applied depending on the search-based technique chosen, and the way in which they are applied is subject to the features of that technique.

The three-stage scheme proposed by Harman and Jones in [1] is the main basis of the model developed in this thesis. The main contribution of [1] to this work is therefore the basic structure needed to apply search-based techniques to the problem stated in section 2.1 of this paper. However, the model developed here differs from that work by offering its own implementation of a GA as well as the application of SA to tackle the problem.

Recent developments in search-based software engineering and its techniques, as well as their successful application in different areas, have been presented in a significant variety of papers. It is worth highlighting [2], which states the benefits of the techniques of this particular area of software engineering and provides an overview of the requirements for their application. Moreover, it describes a set of eight software engineering application domains and their results. The relevance of these techniques is demonstrated by their application in a wide variety of areas with encouraging results [3][4][5][6][7].


[2] stated that search-based optimization techniques have been applied widely to a large

range of software activities. The list mentioned covers different areas of the life-cycle

such as service-oriented software engineering, project planning and cost estimation, compiler optimization, requirements engineering, automated maintenance, and quality assessment.

Furthermore, [2] presented a broad spectrum of optimization and search techniques for this purpose, such as genetic algorithms and genetic programming. In addition to its discussion of GAs, the same paper made one key point that is directly related to the interest of this research: the fitness function is crucial, since it makes the main difference between obtaining good or poor solutions, whatever the search technique.

Harman in [2] analysed different optimization techniques by dividing them into two main groups: classical techniques, such as linear programming, and metaheuristic search, such as hill climbing, simulated annealing, and GAs. Moreover, Harman [2] paid special attention to the role of sensitivity analysis in search-based software engineering. Sensitivity analysis shifts the focus of a software engineering problem from finding the global optimum to studying the impact of the input values on questions such as the shape of the landscape and the location of the search space peaks.

This thesis is influenced by the work done by Harman in [2], which also states the motivation for metaheuristic techniques, particularly GAs. However, this thesis develops an implementation of that particular technique to cope with the PSP. In addition, it performs an SA that is strengthened by the use of statistical tests. Therefore, this thesis does not leave SA as future work but makes it an essential part of the model.

3.2

Search-Based Software Project Management

Currently, software project management is a major concern in software engineering, as evidenced by the considerable number of techniques being applied in this field. One of the areas making a special effort is search-based software engineering, in which diverse authors use techniques such as hill climbing, GAs, and simulated annealing. The main technique used in this thesis is GAs. As a result, despite the successful application of other techniques, such as hill climbing, to search-based software engineering problems, the literature survey of this thesis is mainly based on GAs. There is a considerable amount of information related to the field of software project management and, more recently, to the application of new techniques such as GAs [3][4][5][6][7][8][9][10].

The use of SA in project management is relatively new in terms of application. Hence,

the literature survey mentions not only papers with the basic notions of SA, but also


different works where this technique was applied with interesting results in areas not related to software project management. The main reason is to show the capacity of this technique, particularly for measuring the influence of the input factors of a model. This contributed significantly to the idea of establishing which dependencies have the biggest impact and therefore reduce the completion time the most when they are removed.

3.2.1

Genetic Algorithms

Genetic algorithms are a stochastic search-based technique and a particular class of evolutionary algorithms that perform an iterative optimization based on the processes of natural genetics in order to find or approximate a solution. The foundation of GAs is to carry Charles Darwin’s theory of evolution over to an algorithm that evolves candidate solutions in an optimization process in pursuit of the solution to a problem. It is generally agreed that genetic algorithms have applications in a significant variety of areas, such as engineering, computer science, mathematics, and physics.

Three main points can be distinguished in the development of a GA:

• Definition of the process. Evolutionary testing is used as the technique; its procedure is shown in Figure 3.1.

• Definition of the fitness function. The fitness function is the objective function used to evaluate the candidate solutions or individuals.

• Definition of the genetic operator. The choice of operator defines the way in which a population of chromosomes or individuals is recombined to generate a new individual.

The methodology of GAs consists of a sequence of actions, explained below and illustrated in Figure 3.1. In the first, a population of individuals, also known as chromosomes, is generated randomly. Each of those individuals represents a candidate solution, and the population therefore constitutes the initial search space of the algorithm. The next step consists of evaluating every candidate solution with the fitness function and verifying whether the problem has been solved. The fitness function has been defined beforehand for the particular problem and is able to assess the genetic representation of the individual. The third action specifies a sequence of steps inside a loop that seeks to evolve the candidate solutions or individuals by recombining and mutating them. The sequence repeatedly performs a selection whose main purpose is to choose the individuals that will generate a new population.


Figure 3.1: GA’s methodology. Evolutionary Testing.

Individuals with a better fitness evaluation are more likely to be selected, although not exclusively, since that would lead to a lack of diversity within the population. There is a considerable variety of selection methods. A case in point, used in the implementation of this paper, is tournament selection [11]. The next step is the recombination of the individuals previously selected, following the method defined by the chosen genetic operator. As with selection, there is a considerable variety of genetic operators; a relevant example, used in the implementation of this paper, is the two-point crossover operator [12]. The intention of this action is to combine different candidate solutions in order to generate the new individuals of the regenerated population. After that, the sequence performs the mutation step, whose main purpose is to introduce genetic diversity by randomly mutating the individuals. In doing so, the algorithm attempts to keep the process of evolution going. The last two steps of the loop consist of evaluating the new candidate solutions generated by the algorithm and reinserting them into the new population.
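The sequence of steps described above can be sketched as follows, using tournament selection and two-point crossover. This is only an illustrative sketch and not the implementation developed in this thesis: the toy fitness function (the largest workload among resources), the parameter values, and all names are assumptions, and the best individual found so far is simply kept aside rather than reinserted.

```python
import random


def tournament_select(population, fitness, k, rng):
    """Return the best of k randomly drawn individuals (lower fitness is better)."""
    return min(rng.sample(population, k), key=fitness)


def two_point_crossover(a, b, rng):
    """Exchange the segment between two random cut points of the parents."""
    i, j = sorted(rng.sample(range(len(a) + 1), 2))
    return a[:i] + b[i:j] + a[j:], b[:i] + a[i:j] + b[j:]


def mutate(individual, n_values, rate, rng):
    """Replace each gene by a random value with probability `rate`."""
    return [rng.randrange(n_values) if rng.random() < rate else gene
            for gene in individual]


def genetic_algorithm(fitness, n_genes, n_values, pop_size=30, generations=50,
                      tournament_size=3, mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    # Initialise: random population of candidate solutions.
    population = [[rng.randrange(n_values) for _ in range(n_genes)]
                  for _ in range(pop_size)]
    best = min(population, key=fitness)
    for _ in range(generations):
        offspring = []
        while len(offspring) < pop_size:
            # Select, recombine, and mutate.
            p1 = tournament_select(population, fitness, tournament_size, rng)
            p2 = tournament_select(population, fitness, tournament_size, rng)
            c1, c2 = two_point_crossover(p1, p2, rng)
            offspring.append(mutate(c1, n_values, mutation_rate, rng))
            offspring.append(mutate(c2, n_values, mutation_rate, rng))
        # Replace the population and remember the best individual seen so far.
        population = offspring[:pop_size]
        best = min(population + [best], key=fitness)
    return best


# Toy problem (hypothetical): gene i is the resource assigned to task i.
EFFORT = [4, 2, 7, 3, 5, 1]
N_RESOURCES = 2


def completion_time(assignment):
    """Completion time taken as the largest total effort given to one resource."""
    load = [0] * N_RESOURCES
    for task, resource in enumerate(assignment):
        load[resource] += EFFORT[task]
    return max(load)


best = genetic_algorithm(completion_time, n_genes=len(EFFORT), n_values=N_RESOURCES)
```

Drawing the tournament contenders at random means that weaker individuals can still be selected occasionally, which is one simple way to preserve the diversity mentioned above.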

In its use of GAs, this research is significantly inspired by the work of Massimiliano Di Penta, Mark Harman, and Giuliano Antoniol [3][5] on software project management with a search-based approach. In addition, [4] reinforces the application of GAs as a search-based technique for optimizing project resource allocation. Likewise, this research is encouraged by the concern shown by Shin Yoo in his work [10]


about data sensitivity in the application of a Novel Semi-Exhaustive Search Algorithm.

In [3][5], building on previous work [4], GAs are applied to implement the search-based approach, and they obtain significantly better results than random search. This methodology provides empirical evidence of the variation in completion time and in the allocation of resources caused by the communication overhead described by Brooks’ law. Moreover, this approach is able to evaluate the impact of Brooks’ law on the completion time of the project, providing a prudent technique to improve software project management scheduling.

The work done by Antoniol et al. in [3][4][5] contributed to this thesis the idea of using a search-based approach to address resource allocation and scheduling in project management. However, those papers are oriented in a completely different direction. [3] was particularly interested in evaluating the effect of communication overhead on software maintenance project staffing. It analysed the impact of different factors, such as the dependencies between tasks, on project completion time, which is also related to this thesis, and on staff distribution. The basis of that work is to disclose the effect of Brooks’ law on the two goals previously mentioned. The empirical study presented an evaluation of the relation between the tasks and the effort (persons/month). By contrast, the main focus of this paper is to apply search-based techniques to decrease the completion time by removing dependencies and performing SA.

The paper [4] primarily compares different search-based techniques, using queuing simulation in the fitness function evaluation. This similarity can also be seen in [3], where the use of GAs and a queuing system is part of the model. The use of GAs to analyse resource allocation is common to the approach of this thesis and to [3][4], yet the way they are used is different. The GA implemented in this thesis does not use this queuing system; it was developed to cover the parameters relevant to the duration goal of the PSP, so that the TPG can be altered and the impact on the project completion time studied.

The contribution of [5] to this thesis is its demonstration of the capacity of GAs to tackle resource allocation, which is an essential part of the model generated in this work. However, the main effort of [5] is concentrated on a search-based approach to undesirable but frequent situations in project management, such as rework or abandonment of tasks, uncertain estimations, and errors. The idea behind this thesis also involves resource allocation, yet it focuses on offering alternatives to the project manager by approaching the PSP in a new way: breaking dependencies. Hence, the robustness of the initial schedule and the possible setbacks or issues arising during the project are not considered.


The second main source of previous related work on GAs, which is considered significantly valuable background, is the material of Enrique Alba and Francisco Chicano [8][9] on the application of GAs to software project management. In this work, the application of GAs to different scenarios to solve the PSP provides significant results in terms of the sensitivity of the classical variables: number and duration of tasks, skills, available resources, dedication, and cost. These results are obtained through an instance generator developed specifically for this purpose, which automatically creates different project scenarios based on a customized configuration file. As is always the case with GAs, a fitness function measures how successful a result is. The work gives interesting details of the fitness function, the different considerations within the algorithm developed, and the behaviour of the instance generator, all of which are fully detailed in [8]. Yet the most remarkable achievements are the results described in section 6, the experimental study. This study could be considered a sensitivity analysis of the different parameters, accurately represented in various tables and a very complete set of figures. Moreover, the study draws conclusions about the varying importance of the parameters. A case in point is the number of tasks, which considerably increases the difficulty of obtaining a solution with GAs.

The work done in [8] exhibited the ability of genetic algorithms to address the Project Scheduling Problem. Nevertheless, it also shows a certain inability to offer solutions when the number of possible combinations grows with the size of the input values that feed the model. The algorithm developed follows the classical convention for GAs, as can be seen in Algorithm 1.

Algorithm 1 GA Pseudocode. Adopted from [8]

Initialize
Evaluate
while termination condition not met do
    Select
    Recombine
    Mutate
    Evaluate
    Replace
end while

The model produced by Alba and Chicano in [8] has one primary strength and one main weakness. Its major strength is its ability to deal with the parameters of the two conflicting goals that define the PSP: the cost and the duration of the project. The work takes into consideration tasks, the duration of and dependencies between those tasks, resources, the skills of those resources, the effort between tasks and resources, salaries, and the dedication of the resources to the tasks. However, the way dedication is represented within the algorithm can generate non-feasible solutions.


This is the main disadvantage of the model. A non-feasible solution is understood to be one in which a resource is overworked, so that the dedication assigned cannot be reproduced in a real working environment. In addition, the model can generate non-feasible solutions in two more situations: first, when one of the tasks has no resource assigned; and second, when the resources assigned do not have the skills necessary to perform the task. As a result, the authors of the paper introduced a fitness function able to discern the viability of the solutions by adding a penalty term, which gives the fitness function 3.1, adapted from [8]. The term q measures the quality of the solutions generated and the term p calculates the penalty.

\[
f(x) =
\begin{cases}
1/q & \text{if the solution is feasible} \\
1/(q + p) & \text{otherwise}
\end{cases}
\tag{3.1}
\]
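As an illustration, Equation 3.1 and the three sources of non-feasibility described above could be sketched as follows. How q and p are actually computed is detailed in [8] and is not reproduced here; both are treated as given inputs, and the function names and data structures are hypothetical.

```python
def is_feasible(assignment, required_skills, resource_skills, overwork):
    """Check the three feasibility conditions described in the text.

    assignment: dict mapping task -> set of resources assigned to it
    required_skills: dict mapping task -> set of skills the task needs
    resource_skills: dict mapping resource -> set of skills it has
    overwork: total overwork of the resources (assumed to be precomputed)
    """
    for task, resources in assignment.items():
        if not resources:
            return False  # a task has no resource assigned
        available = set().union(*(resource_skills[r] for r in resources))
        if not required_skills[task] <= available:
            return False  # the assigned resources lack a required skill
    return overwork == 0  # no resource may be overworked


def fitness(q, p, feasible):
    """Equation 3.1: penalise non-feasible solutions by adding p to q."""
    return 1.0 / q if feasible else 1.0 / (q + p)
```

Because p is positive, a non-feasible solution always receives a lower fitness than a feasible one of the same quality q, so the search is steered towards viable schedules without discarding candidates outright.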

Alba and Chicano in [9] demonstrated the capacity of search-based techniques to approach the Project Scheduling Problem. The paper exhibited the ability of metaheuristic techniques, and genetic algorithms in particular, to manage time and cost optimally in project management. Alba and Chicano carry out remarkably realistic work by considering the most classical parameters of the PSP. This, together with the different scenarios processed, allows them to analyse precisely the influence of the attributes on the solutions of the problem. Both authors mention the difference between the application of GAs in software engineering and in other fields such as bioinformatics, mathematics, and telecommunications: this metaheuristic technique is not used so intensively in software project management. Nevertheless, their results and conclusions showed the capacity and accuracy of genetic algorithms in helping managers with project management.

The work done by Alba and Chicano in [8][9] significantly inspired the model developed in this thesis, contributing the idea of using GAs to address resource allocation in the PSP. Nevertheless, the focus of those papers and of this work is completely different. In [8][9] Alba and Chicano studied the behaviour of their model in order to produce an optimal resource allocation in diverse scenarios, addressing both goals of the PSP, cost and duration. For this purpose, they took into consideration parameters that affect both aspects, such as the skills of the resources. The main aim of this thesis, by contrast, is to provide new means and methods to improve the overall completion time of a project by removing dependencies and performing sensitivity analysis on this action. All the effort in this work is focused on improving only one of the goals of the PSP: duration. Therefore, although both works attempt to help managers in software project management through the use of GAs, their ultimate targets are completely different.

3.2.2

Sensitivity Analysis

This section briefly and concisely defines sensitivity analysis and the main concepts

surrounding its study. In addition, it analyses the main previous related work in the

field of sensitivity analysis and in the area of software project management in particular.

Sensitivity analysis (SA) is the study of the impact on the output value of a model of variations in its input parameters. Sensitivity analysis is therefore mainly used to study how sensitive a model or system is to changes in the input parameters that define it [13]. This kind of analysis is generally known as parameter sensitivity. Although other authors also mention structure sensitivity, that study is not of interest for the main purpose of this paper. Consequently, sensitivity is the statistical measure of the effect on the outcome relative to the modification of the parameters that determine it. Hence, the greater the variation in the result per unit of change in a parameter, the greater the sensitivity.
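This notion of parameter sensitivity can be illustrated with a minimal one-at-a-time sketch, in which each input is perturbed in turn while the others are held at their baseline values. The toy model and all names below are hypothetical and serve only to illustrate the definition.

```python
def sensitivity(model, baseline, parameter, delta):
    """Change in the model output per unit change of a single input."""
    perturbed = dict(baseline)
    perturbed[parameter] += delta
    return (model(perturbed) - model(baseline)) / delta


def rank_parameters(model, baseline, delta=1.0):
    """Rank the inputs from most to least sensitive."""
    scores = {name: sensitivity(model, baseline, name, delta) for name in baseline}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Toy model (hypothetical): task A is followed by B and C in parallel.
def project_duration(d):
    return d["A"] + max(d["B"], d["C"])


BASELINE = {"A": 3.0, "B": 2.0, "C": 4.0}
ranking = rank_parameters(project_duration, BASELINE)
```

In this toy model, lengthening B by one unit leaves the completion time unchanged because C dominates, so B has zero sensitivity, whereas A and C each change the output one for one.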

A key point in this research is comprehensive and thorough background documentation on SA, which is necessary as a basis. The general introduction by Lucia Breierova and Mark Choudhari for MIT [13], as well as the definition of Global Sensitivity Analysis proposed by Andrea Saltelli [14], provides good support for understanding the topic, giving premises and hints on how to carry out this methodology. As mentioned in the introduction of this paper, project management and software development are present in all engineering areas, and SA has already been applied in fields such as the planning process in architecture, engineering and construction (AEC) [15] and the detection and elimination of errors in software development [16].

The research done by Breierova and Choudhari in [13] and by Saltelli in [14] guided this thesis towards the concept of parameter sensitivity. Within sensitivity analysis, this is the act of analysing, evaluating, and measuring the impact of variations in the inputs of a model in order to examine how its behaviour responds. These two sources helped in understanding the strength of this theory by providing a consistent basis, precise definitions, and relevant examples. This thesis, however, applies this established knowledge specifically to software project management, evaluating the impact of removing, in turn, each of the dependencies that compose the TPG in the PSP.
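The idea of removing each dependency in turn and measuring the effect on the completion time can be sketched as follows. This is a deliberately simplified illustration: it assumes fixed task durations and an acyclic TPG, and it computes the completion time as the longest path rather than through the GA-based resource allocation used in the model of this thesis. All names and values are hypothetical.

```python
def completion_time(durations, dependencies):
    """Longest path through the task graph, assuming fixed durations."""
    preds = {task: [] for task in durations}
    for before, after in dependencies:
        preds[after].append(before)

    finish = {}

    def earliest_finish(task):
        # A task starts once all of its predecessors have finished.
        if task not in finish:
            start = max((earliest_finish(p) for p in preds[task]), default=0)
            finish[task] = start + durations[task]
        return finish[task]

    return max(earliest_finish(task) for task in durations)


def dependency_sensitivity(durations, dependencies):
    """Reduction in completion time obtained by removing each dependency alone."""
    baseline = completion_time(durations, dependencies)
    impact = {}
    for dep in dependencies:
        reduced = [d for d in dependencies if d != dep]
        impact[dep] = baseline - completion_time(durations, reduced)
    return sorted(impact.items(), key=lambda item: item[1], reverse=True)


# Hypothetical four-task project.
DURATIONS = {"A": 3, "B": 2, "C": 4, "D": 1}
DEPENDENCIES = [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D")]
ranking = dependency_sensitivity(DURATIONS, DEPENDENCIES)
```

In this example only the dependencies that lie on the longest chain of tasks shorten the project when removed, which is why ranking dependencies by impact can point the manager to the ones worth breaking.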


[15] does not have significant similarity with the work developed in this thesis. However, the work done by Lhr and Bletzinger was useful for illustrating the capacity and applicability of sensitivity analysis in different areas. Furthermore, the idea of evaluating the impact of diverse factors on the development of the planning process, and therefore on the goal of time for optimal planning, coincides to a certain extent with the focus of this thesis, since this thesis also applies sensitivity analysis to factors that have a direct impact on the duration of the PSP for software project management.

The work produced by Wagner in [16] falls within the same area as this thesis. It proposed a model able to evaluate quality costs in software development by deploying an analytical model and performing sensitivity analysis. That work is also partially based on the concept of global sensitivity analysis raised by Saltelli in [14]. Wagner therefore contributed notably to the idea of applying SA to software project management. Nevertheless, this thesis differs from that work, since the scenario, the factors that feed the model, and the model itself are completely different. In general terms, the research of Wagner focused on costs, whereas this thesis tackles the other main goal of the PSP, the duration. In addition, [16] dealt with defect-detection techniques and their associated quality costs. Thus, it did not approach the PSP directly.

Sensitivity analysis helps to identify the critical parameters that have the most influence on the output of a model or system. The current relevance of performing SA in the development of a model or system is stated by Saltelli in [17], who supports it by arguing that its theoretical methods are now sufficiently advanced. A good example of its application, and of its positive results in collecting information on the impact of the input parameters, is [18]. Furthermore, SA can alleviate the problem of uncertainty in input parameters [19].

The papers [17][18][19] assisted significantly in the generation of this thesis, although there are substantial differences. The work of Saltelli in [17] did not contribute to any specific part of the model developed in this thesis. Yet it was considerably helpful for understanding the concept behind sensitivity analysis. Furthermore, it helped in comprehending the importance of the factors that determine a model in order to reveal information in the context of model-based analysis.

In the case of [18], although the work was clearly a sensitivity analysis within the context of project management, its focus lay in analysing uncertainty and risk in order to justify, or not, investment in projects. By contrast, in this thesis sensitivity analysis is performed to offer the manager different options for approaching the PSP. In conclusion, both models, the one developed by Jovanović in [18] and the one developed in this thesis, work on parameters that are usually involved in project management. Nevertheless, the final aim of the analysis is completely different.


Johnson and Brockman in [19] demonstrated the capacity of sensitivity analysis to identify and reveal the mechanisms that have the greatest impact on design time in the design process. The idea behind that paper is thus not related to the PSP in project management. However, it added the concept of using sensitivity analysis to measure improvements in completion time when this is an essential factor of the model or problem being addressed.

In addition, SA has been applied in project management with different approaches, such as Hybrid Models over Design of Experiments [20], the Monte Carlo method [21], and the Fourier Amplitude Sensitivity Test (FAST) [16][22]. The main contribution of these papers was their extensive use of SA on different aspects of software project management or software engineering. However, there are relevant differences from the main focus of this thesis. All these papers applied specialised SA techniques to their particular issues, whereas the study done in this thesis produced a detailed evaluation of the behaviour of the model in the different tests performed, using common SA. Furthermore, the scenario of application for this thesis, the classical PSP, was tackled in none of the papers mentioned. In particular, the action of removing dependencies and measuring its impact on the completion time of the project constitutes a completely new area of study. Consequently, although these works share a common field of research, the core of their experiments is entirely different.

The first approach is the Hybrid Model over Design of Experiments (DOE) [20], which is based on previous models: System Dynamics (SD) Models, to obtain the dynamic behaviour of the project elements and their relations; State Based Models, which reproduce process activities and represent the dynamic processes through the state transitions initiated by events; and Discrete Models, to reproduce the development process. This hybrid model consists of associating the discrete model with a process of continuous simulation able to reproduce tasks that are affected by the constant changes of the parameters, and using the state based model to express the changes of state. Thus, Hybrid Models are able to analyse the impact of changes in a dynamic project environment. Furthermore, the most interesting aspect claimed by this paper [20] is the possibility of revealing non-linearities not discovered by common sensitivity analysis, by using DOE in combination with Broad Range Sensitivity Analysis (BRSA).

The second main approach in the use of SA is the Monte Carlo method and its software, covered in the research of Young Hoon Kwak and Lisa Ingall [21]. This paper applies software based on the Monte Carlo method to plan the project through the analysis, identification, and assessment of the possible problems and their circumstances within the context of the development. This methodology has not been fully accepted for real use in project management, although it has been used in several areas connected to modelling systems in biological research, engineering, geophysics, meteorology, computer applications, public health studies, and finance.
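To give an idea of how the Monte Carlo method can be applied to project planning, the following sketch samples uncertain task durations many times and summarises the resulting completion times. It is not the software discussed in [21]; the triangular distributions, the task estimates, and all names are assumptions made only for illustration.

```python
import random
import statistics


def completion_time(durations, predecessors):
    """Longest path through the tasks, which must be listed in dependency order."""
    finish = {}
    for task, duration in durations.items():
        start = max((finish[p] for p in predecessors.get(task, [])), default=0.0)
        finish[task] = start + duration
    return max(finish.values())


def simulate(estimates, predecessors, runs=2000, seed=0):
    """Sample task durations repeatedly and summarise the completion times.

    estimates: dict mapping task -> (low, mode, high) duration estimate
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(runs):
        durations = {task: rng.triangular(low, high, mode)
                     for task, (low, mode, high) in estimates.items()}
        samples.append(completion_time(durations, predecessors))
    samples.sort()
    return {
        "mean": statistics.mean(samples),
        "p90": samples[int(0.9 * (runs - 1))],  # approximate 90th percentile
    }


# Hypothetical estimates (low, most likely, high) for a four-task project.
ESTIMATES = {"A": (2, 3, 5), "B": (1, 2, 4), "C": (3, 4, 8), "D": (0.5, 1, 2)}
PREDECESSORS = {"B": ["A"], "C": ["A"], "D": ["B", "C"]}
summary = simulate(ESTIMATES, PREDECESSORS)
```

Instead of a single completion time, the manager obtains a distribution, from which figures such as the mean or a high percentile can be read as an indication of schedule risk.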

The last approach is mentioned by Stefan Wagner [16] in his research of defect-detection