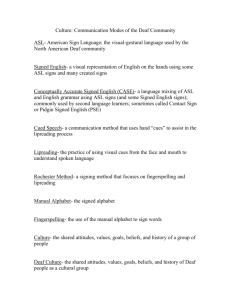

Signed Aphasia

Proseminar "Applied Linguistics"
Mr. Christoph Haase
WS 2001/2002
01.03.2001

Marcel Hartwig
Horst-Menzel-Str. 35
09112 Chemnitz
Tel.: 0371/3552978
Matriculation no.: 25802
3rd semester; Magister major: English/American Studies; Magister minors: BWP, Psychology

Contents

1. Introduction
2. Terminology
2.1. ASL
2.1.1. Origin and History
2.1.2. Language features
2.2. Aphasia
2.2.1. Central Aphasia types
2.2.2. Approaches to therapy
3. Issues
3.1. ASL - Brain organization
3.2. ASL - Brain damage
3.3. Spoken language vs. sign language acquisition
4. Conclusion
5. Bibliography
6. Commitment
7. Appendix

1. Introduction

Through language we seek contact and communication with other humans. It is our instrument for expressing emotions and for representing different origins and cultures. Above all, we have the innate ability to learn a language, through which we gain the greatest gift of humanity: information. The exchange of information seems to be essential to our lives. What happens if we are unable to express and receive information in an auditory-vocal way? Would we still be able to find a way to communicate? Indeed we would. Hearing-impaired children and adults do find their own communication system. Facial expressions and hand signs thus take on a central role in opening up a channel between the deaf and the hearing community. American Sign Language (ASL) is the most common sign language for hearing-impaired individuals in English-speaking countries. This paper investigates its roots and appearance, tries to formulate rules of its grammar and thereby aims to demonstrate its status as a language.

Language and brain form a unit. Since the brain is divided into two hemispheres, neurologists are still searching for the precise area that is responsible for language. To date they agree that the left hemisphere dominates language acquisition.
Thus the left side is responsible for the comprehension and processing of language. However, the function of the right hemisphere remains a riddle, and its role in language is far from clear. Moreover, deaf individuals show activity in both hemispheres when they exchange information through sign language. Research on patients with language disorders and brain damage therefore offers a better chance of localizing the particular areas for speech production and language comprehension. Aphasia is the clinical term for language disorders caused by brain damage. It causes problems in the reception and expression of language. This paper gives an overview of the most common forms of aphasia and provides information on the options for therapy. Finally, ASL and aphasia are linked, and the results of research on aphasia in sign language are scrutinized.

2. Terminology

The following pages provide definitions of ASL and aphasia, together with background knowledge on the origin and features of ASL and on the different types of aphasia.

2.1. ASL

American Sign Language is the primary language used by the deaf community in the USA and other English-speaking countries. Hand signs represent the alphabet, form words and create sentences. In addition to the "talking" hands, the face conveys shades of meaning. Hand signs together with appropriate facial expressions thus form a living language, which ensures the deaf community's existence.

2.1.1. Origin and History

Until the 18th century the deaf were not allowed to receive an education, to conduct trade or to marry. They thus lacked fundamental human rights, and the need for a communication system grew (Hafer & Wilson: 6). In 1815 Thomas Hopkins Gallaudet was asked to educate Alice Cogswell, a deaf girl from a well-off family.
Since there were no schools for the deaf in the US, Gallaudet had to begin his studies of sign language abroad. Eventually he arrived in France, where he met Abbé Roch-Ambroise Sicard, head of a school for the deaf in Paris. There, Old French Sign Language, extended by new signs, was taught (Hafer & Wilson: 6). This reorganized sign language was fully able to represent the grammatical structures of both written and spoken French. Together with Laurent Clerc, a teacher at this French school, Gallaudet returned to the USA and in 1817 founded the Connecticut Asylum For The Education And Instruction Of Deaf And Dumb Persons in Hartford, Connecticut (Hafer & Wilson: 6). Apart from lip reading they taught French Sign Language, which blended with the communication system the students already used. This new combined method of signs, speech and speech reading was called ASL and revolutionised communication within the deaf community.

At the dawn of the 20th century, oralism gained strong support and displaced ASL. An increased emphasis on amplification, speech training and speech reading brought this oral approach to its zenith in 1927 (Hafer & Wilson: 6). Eventually, in 1960, the Babbidge Report revealed a lower reading level for deaf children with hearing parents than for deaf children with deaf parents (Hafer & Wilson: 7). Deaf children with hearing parents had to learn by the oral approach, whereas deaf children with deaf parents communicated via sign language; they consequently gained clear access to language and had more success at school. Thus sign language regained its important role in the education of the deaf. This was supported by the Total Communication philosophy in 1970, which is still used today by more than 70% of education programs for the deaf in the USA (Hafer & Wilson: 7).
Nowadays ASL is offered at universities to fulfil foreign language requirements and is the fourth most common language in the USA (Hafer & Wilson: 1).

2.1.2. Language features

ASL is produced in an area around the signer called sign space. Within this space, handshape, location and movement are responsible for the "utterance" (Hafer & Wilson: 5). The form of the hands, their placement and their manner of movement are thus essential features of ASL. In addition, a manual alphabet and manual numbers make the language more accessible. Nevertheless, ASL is not a universal sign language. Different people sign different languages. To my surprise, there is an independent sign language called Gestuno, which tries to be as universal as Esperanto but is not signed as a native language (http://www.deaflibrary.org/asl.html#linguistics).

ASL differs from spoken English in its grammar. For instance, the present perfect sentence "You haven't signed in yet" is signed as "Not yet sign in you". Yet the vocabulary and grammar of ASL can also be rearranged into correct English word order with the appropriate grammatical rules. This is an important factor for communication between the deaf and hearing communities.

Signs are subdivided into sublexical elements (Johnson: 405). Thus phonemes co-occur throughout signs within sign space. These phonemes "are a small set of 'Handshapes', 'Movements' and 'Locations'" (Johnson: 405). Because sign language is used in different communities, various influences enter into the way it is signed. Hearing people, for example, have to sign and speak simultaneously when exchanging information with deaf people. This contact signing is a pidgin sign language (Hafer & Wilson: 5) and is used with several variations in different communities.
Also, "native signers have an 'accent' in a newly learned sign language" (Johnson: 405) because the rules differ between sign languages.

Sign space is necessary for phonology, but it also carries the smallest meaning-bearing units, the morphemes. The most important factor in morphological output is movement. Different inflections of a word present it in an exhaustive, a durational or a combined modulation. Each morphological output can thus serve as a new morphological input (Johnson: 408), which suggests that one morphological pattern can be embedded in another. Sign space can also be used to refer to various events and times. The lexical items of syntax are therefore manipulated through morphology and phonology. Hence, sign space bears linguistic meaning (Johnson: 406). In ASL, syntax is spatially organized, which allows signers to refer simultaneously and independently to different entities, events and times. In a nutshell, ASL is "a fully autonomous language, with complex organizational properties not derived from spoken language (but it) exhibits formal structuring at the same levels as spoken language, and (follows) principles similar to those of spoken language" (Johnson: 406).

2.2. Aphasia

Neurologists speak of aphasia when damage to the left or the right side of the patient's brain disturbs language. This is frequently caused by stroke, but brain infections and tumours can also trigger aphasia (Kelter: 9). The following pages present the markers of the most common aphasia types as well as some approaches to therapy.

2.2.1. Central Aphasia types

Since the second half of the 19th century the causes of brain damage and its consequences have been investigated. The brain is divided into two hemispheres. Until the early twentieth century scientists had no doubt that only the left hemisphere was responsible for language.
Since then the right hemisphere has attracted growing interest in linguistic and neurological research. One of the first scientists to study brain damage was the French physician and neurologist Paul Broca.

"Between 1861 and 1865, Broca published several (...) clinical cases of aphasia (or 'aphemia' as he called the syndrome), and the 'language faculty' became a widespread object of study, as the prime example of the localization of a psychological function in the nervous system." (Caplan: 46)

Patients suffering from Broca's aphasia are characterized by "nonfluent speech, few words, short sentences and many intervening pauses" (Sarno: 37). The following example, in which two aphasia patients talk about their days at school, shows the main features of this syndrome:

M: er er maths?
B: yeh er mmhm erm er
M: maths
M: erm are er er good maths?
B: yes yes mmhm
M: yes
(Lesser & Milroy: 9)

The patients thus show a heavily disturbed sentence structure as well as distorted utterances, marked by fillers such as "mmhm" or "erm"; this is also called telegraphic style (Sarno: 37). The main reason for this impaired ability to utter and form sentences despite intact comprehension is a right-sided motor defect (Sarno: 37). The affected part of the brain's motor cortex is therefore called Broca's area (see appendix: illustration 1). The ability to repeat words or sentences is consequently impaired as well.

The direct opposite of Broca's aphasia is Wernicke's aphasia. Continuing Broca's studies on brain damage, the neurologist Carl Wernicke analysed a completely different type of aphasia. His patients were able to speak fluently and in a well-articulated manner. Yet agrammatism with verbal and literal paraphasias (Sarno: 36) revealed defects in comprehension. Consequently, the patients had impaired reading and writing skills. That is why the area for language comprehension in the left hemisphere of the brain is called Wernicke's area (see appendix: illustration 1).
Infrequent or transient right hemiparesis (Sarno: 36) is the main cause of this kind of aphasia. Patients suffering from Wernicke's syndrome show a tendency towards paranoid ideation (Sarno: 36). When exchanging information, they typically create new words and speak in long sentences without any particular meaning (Lesser & Milroy: 10).

Broca's and Wernicke's aphasia are classical models that have gained great respect in neurological research. In the following paragraph, however, I would like to present the most severe form of aphasia. Patients with an almost complete loss of the ability to communicate suffer from global aphasia. Apart from recurrent utterances (Kelter: 11), only emotional exclamations remain as a rudiment of speech. These patients have a "reduced auditory and a virtually negligible comprehension" (Sarno: 39). Reading and writing seem to be impossible. This syndrome is accompanied by hemiplegia (Sarno: 39), a neurological disorder characterized by "recurrent but temporary episodes of paralysis on one side of the body, (which) cause is unknown" (http://www.ninds.nih.gov). Patients who do not suffer from hemiplegia have less pronounced defects and a better chance of recovery (Sarno: 39).

Other major forms of aphasia are conduction aphasia, the transcortical variations of aphasia, anomic aphasia, alexia and pure word deafness.

2.2.2. Approaches to therapy [1]

Recovery from aphasia can begin spontaneously after a few weeks, but there are also clinical approaches to therapy.

"The three main approaches to direct therapy may be characterized as being directed at reactivation, reorganization and substitution (Lesser, 1985). Basso (1989) interprets these as, first, assisted recovery of the anatomical 'hardware' of the brain itself, secondly, the adoption of new algorithms by compensating brain areas, and, thirdly, the achievement of previous goals by different means."
(Lesser & Milroy: 15)

A first step towards improving comprehension is to reactivate the patient's ability to repeat words or sentences. Lists of words ranging from simply structured to complex reveal the depth of the patient's comprehension impairment. "Three of the four 'experimental patients' produced highly significantly (p < 0.001) fewer errors on the naming test after two months of treatment." (Lesser & Milroy: 249) This shows that the patient's semantics and phonological output (Lesser & Milroy: 249) can be reactivated. Widely used, this naming therapy has proved very successful in improving the condition of patients suffering from Broca's aphasia, dyslexia, anomia, apraxia and Wernicke's aphasia (Lesser & Milroy: Fig. 11.1, p. 252 ff.).

[1] This title refers to a heading in the book by Ruth Lesser and Lesley Milroy.

Another method, similar to this naming therapy, is used to improve auditory comprehension. Sets of minimal pairs and rhyming words, as well as training in word segmentation, are intended to reactivate the brain's auditory comprehension.

"Seventy-two hours of therapy were devoted to practice in selecting appropriate words to fill sentence gaps, with the gap varying in location and in the lexical category of the appropriate filler. Distractor choices were given of semantically congruent or anomalous words, or of phonemically similar or dissimilar non-words. The patient's performance improved over four months of this reactivation therapy; comprehension of sentence-final nouns improved up to 85 per cent, of sentence-initial nouns by 60 per cent and of sentence-medial prepositions by 80 per cent." (Lesser & Milroy: 263)

This strategy for improving the brain's auditory capacity showed effective progress, especially for patients suffering from Broca's and global aphasia. In cases of agrammatism, too, strategies that restore sentence production through context comprehension are used for therapeutic purposes.
Unfortunately, the patients had great difficulty dealing with a sentence when the "subject was inanimate and had the role of an instrument" (Lesser & Milroy: 267). Through a cognitive relay strategy (Lesser & Milroy: 270), in which the patient was taught concepts linking arranged thoughts to sentence production, the patient was finally able to structure his thoughts without the therapist's help. This strategy often showed effects after two months and helped the patient to establish and arrange "his thoughts into long and correctly structured sentences" (Lesser & Milroy: 270). Mainly patients with a diagnosed motor defect showed progress through this therapy.

Ultimately, case studies are the main source of progress in applied therapeutic models. Experimental designs such as those shown above have helped to understand the different types of aphasia and have opened up paths to curative strategies. The psycholinguistic literature provides further information about other methods of reactivating the brain's language comprehension.

3. Issues

Neurolinguistics and linguistic aphasiology have opened up new perspectives on the roots of language and its organization in the brain. The following pages take a closer look at the consequences of brain damage and at new results in aphasiology. Studies of brain damage in deaf patients have raised particularly interesting issues.

3.1. ASL - Brain organization

Neurologists have been debating the role of the brain's left hemisphere as the seat of all language since research demonstrated substantial right-hemispheric activity in sign language. Thus the question arises whether there is a hemispheric specialization for language in the brain at all. In sign language, the spatial patterns made by the movement of the hands in sign space bear linguistic meaning (Keil & Wilson: 756).
Thus the right hemisphere is more active in signed languages than in spoken languages, since it has to handle and comprehend visual-spatial information (Johnson: 419). Hence, both hemispheres are responsible for sign language processing and comprehension.

Using tachistoscopic visual half-field studies (Keil & Wilson: 756), scientists began to investigate hemispheric dominance in sign language. Experiments comparing the contribution of movement in sign language stimuli (Keil & Wilson: 756) confirmed a dominant role for the left hemisphere in processing static but not moving signs. However, sign language functions on three different levels: linguistic, symbolic and motoric (Johnson: 411). Consequently, the brain has to process all three levels at once. This raised the question of whether signers can still identify signs when they have no access to the sign space of the person they are talking to. A study of the perception of biological motion using patterns of moving lights (Johnson: 411) showed that the brain of native signers is able to distinguish morphemes in sign language even without seeing the signer's hands. This supported other neurological results that pointed to a dominant role for the right hemisphere in processing morphological relationships in sign language. Meanwhile, with the help of "a lexical decision paradigm for moving signs that varied in imagability", it was shown that the left hemisphere of a native signer's brain has an advantage for "abstract lexical items" (Keil & Wilson: 756). Since lexical items are the fundamental scaffold of any language, the dominant role of the left hemisphere in language was confirmed. But what would happen if only one side of the brain were intact? Would the signer's mind still be able to process and formulate language?
"The study of brain-damaged deaf signers offers special insight into the organization of higher cognitive functions in the brain and how modifiable that organization may be." (Johnson: 411)

3.2. ASL - Brain damage

Like aphasia in spoken language, sign language aphasia does not produce uniform impairments. However, typical consequences of aphasia in spoken languages, such as agrammatism, utterance limitations and paraphasias, are observable in sign languages too. Signers become aphasic far more often after left-hemisphere damage than after right-hemisphere damage, where aphasia even seems to be a rare phenomenon (Peperkamp & Mehler: 334). In other cases, however, it was observed that "although both major language-mediating areas for spoken language were intact" the signer could suffer from "a severe and lasting sign comprehension loss" (Johnson: 412). Deaf people with right-hemisphere damage do not show any signs of aphasia.

"They exhibited fluent, grammatical, virtually error-free signing, with a good range of grammatical forms, no agrammatism, and no signing deficits. (...) only the right hemisphere damaged signers were unimpaired on our tests of ASL grammatical structure (...) they even used the left side of signing space to represent syntactic relations (...)" (Johnson: 412)

Yet they were impaired in all spatial aspects of sign language, while patients with left-hemisphere damage were able to master spatial tasks (Johnson: Fig. 18.5, p. 418). Thus signers with right-hemispheric lesions lack the ability to fully process sign language because they cannot deal with all the information given within the sign space. This suggests that the "right hemisphere (...) can develop cerebral specialization for non-language visuospatial functions" (Johnson: 419). Deaf people thus rely on non-language skills in the same way as hearing people do.
They also make use of spatial syntax and spatial mapping and arrange them differently in their sign space (Johnson: 419). Sign space thus conveys additional, higher-level information, much as hearing people underline their arguments with gestures and facial expressions. Aphasia therefore does not have such massive consequences for deaf people as for hearing people. Because they can exchange information on a spatial level, communities of native signers would remain able to communicate even if some of their members suffered from aphasia. In a nutshell, sign language functions on a higher level than any other language. Bellugi, Poizner and Klima, in their research on sign language and the brain, even concluded:

"(...) sound is not crucial (...) for the development of hemispheric specialization. (...) not only is there left hemisphere specialization for language functioning, but (...) also (...) for non-language spatial functioning." (Johnson: 423)

Thus sign language is a language in its own right, not merely an add-on to a particular spoken language that helps hearing-impaired people to follow the rules of that spoken language. For this reason, it is interesting to compare the acquisition of a spoken language with that of a sign language.

3.3. Spoken language vs. sign language acquisition

In contrast to the auditory-vocal form of spoken languages, sign languages are a visual-gestural medium for exchanging information. Both make use of gestures to convey and stress linguistic meaning. Among sign languages we distinguish natural sign languages (e.g. ASL, Langue des Signes Française) from devised or derivative sign languages (e.g. Manually Coded English) (Keil & Wilson: 758). Among spoken languages we can distinguish the mother tongue from any foreign or specialized language we acquire in the course of our lives.
While hearing people usually grow up under the influence of their mother tongue, fewer than 5 per cent of deaf signers are first exposed to their language in infancy (Keil & Wilson: 759). Very often they grow up in hearing families and are exposed to sign language only late in childhood, because their discouraged parents try to find a way for them to acquire spoken language (Keil & Wilson: 759). The parents thus avoid any contact with sign language and prefer lip reading as a form of speech presentation. As a result:

"(...) native and early ASL learners show much more fluency, consistency, and complexity in the grammatical structures of the language, and more extensive and rapid processing abilities, than those who have acquired ASL later in life." (Keil & Wilson: 759)

In spite of that, "deaf children between 1.5 and 4 years of age (...) spontaneously develop their own structured sign system" (Sarno: 351) when they have not been exposed to sign language by this age. This rudimentary sign system resembles the basic properties of a spoken language, which hearing infants begin to speak and master within the very same period (Sarno: 351). Hence, deaf children have the same innate drive for language as hearing children. Accordingly, signers undergo the same natural language development as hearing children who acquire a spoken language. It has been shown that "deaf children are often able to perform very closely to the level of hearing children of the same age" (Sarno: 351). This leads to the assumption that "acquisition of signed languages is equally fast and follows the same developmental path as that of spoken languages" (Peperkamp & Mehler: 343).
Furthermore:

"(...) signs might be easier to perceive than spoken words (...) signs might be easier to produce, if motor control of the hands matures earlier than that of the vocal apparatus (and) first signs are easier to recognize than first words by the adult observer." (Peperkamp & Mehler: 343)

4. Conclusion

As we have seen, sign language functions as a completely autonomous language. It has its own grammatical rules, syntax and vocabulary. Morphology and phonology make it possible to refer to different entities and times just by altering the movement, location or shape of the hand. Furthermore, impaired signers exhibit the same symptoms of aphasia as impaired speakers. Through spatial syntax and mapping, the deaf have found a higher level on which to communicate (Johnson: 419). As a result, they do not suffer the same consequences as aphasic hearing patients. Thus depression or suicidal tendencies almost never occur in deaf aphasic patients. Further research into the origins of sign language and its organization in the brain would allow better access to the innate origin of language and its representation in the brain. We have also shown that ASL is not a universal sign language, but with its complex grammar and vocabulary it has proven to be one of the most common sign languages in the world.

"ASL is capable of conveying subtle, complex, and abstract ideas. Signers can discuss philosophy, literature, politics, education, and sports. Sign language can express poetry as poignantly as can any spoken language and can communicate humour, wit, and satire just as bitingly. As in other languages, new vocabulary items are constantly being introduced by the community in response to cultural and technological change." (http://www.aslinfo.com/aboutasl.cfm)

5. Bibliography

Bellugi, Ursula / Poizner, Howard / Klima, Edward S. (1996). "Language, Modality and the Brain". In Johnson (1996: 404-423).
Caplan, David (1995).
Neurolinguistics and Linguistic Aphasiology: An Introduction. Cambridge: Cambridge University Press.
Corina, David P. (1999). "Sign Language and the Brain". In Keil / Wilson (1999: 756-757).
Hafer, Jan C. / Wilson, Robert M. (1996). Come Sign with Us. Washington, D.C.: Gallaudet University Press.
Johnson, Mark H., ed. (1996). Brain Development and Cognition: A Reader. Oxford: Blackwell Publishers Ltd.
Keil / Wilson, eds. (1999). MIT Encyclopaedia of the Cognitive Sciences. Cambridge: MIT Press.
Kelter, Stephanie (1990). Aphasien. Stuttgart; Berlin; Köln: Verlag W. Kohlhammer.
Lesser, Ruth / Milroy, Lesley (1993). Linguistics and Aphasia. London: Longman Group UK Ltd.
Newport, Elissa L. / Supalla, Ted (1999). "Sign Languages". In Keil / Wilson (1999: 758-760).
Peperkamp, Sharon / Mehler, Jacques. "Signed and Spoken Language: A unique underlying System?", p. 333-346. Source is unknown.
Sarno, Martha Taylor, ed. (1991). Acquired Aphasia. San Diego: Academic Press, Inc.

Sources from the internet:

ASLinfo.com (1996-2001): http://www.aslinfo.com/aboutasl.cfm (visited on: 27.03.2002)
Nakamura, Karen (2002): http://www.deaflibrary.org/asl.html#linguistics (visited on: 20.03.2002)
The National Institute of Neurological Disorders and Stroke (2002): http://www.ninds.nih.gov/health_and_medical/disorders/alternatinghemiplegia.htm#What_is_Alternating_Hemiplegia (visited on: 04.03.2002)

6. Commitment

I declare that I wrote this term paper myself and used only the texts and sources stated. All statements taken over verbatim are clearly marked as quotations. The origin of indirectly adopted formulations is indicated.

(Marcel Hartwig)

7. Appendix

Illustration 1: (Caplan: 51)