Assignment Cover Sheet – Internal

UNIVERSITY OF SOUTH AUSTRALIA


Name: Phirun Son

Student ID: 100064517

Email: sonpy003@students.unisa.edu.au

Course code and title: Minor Thesis

School: CIS Program code: LHIS

Course Coordinator: Prof. Stewart Von Itzstein

Day, Time, Location of Tutorial/Practical:

Assignment number:

Tutor: Prof. Stewart Von Itzstein

Due date: 26-Oct-2009

Assignment topic as stated in Course Information Booklet:

Minor Thesis

Further Information: (e.g. state if extension was granted and attach evidence of approval, Revised Submission Date)

I declare that the work contained in this assignment is my own, except where acknowledgement of sources is made.

I authorise the University to test any work submitted by me, using text comparison software, for instances of plagiarism. I understand this will involve the University or its contractor copying my work and storing it on a database to be used in future to test work submitted by others.

I understand that I can obtain further information on this matter at http://www.unisanet.unisa.edu.au/learningconnection/student/studying/integrity.asp

Note: The attachment of this statement on any electronically submitted assignments will be deemed to have the same authority as a signed statement.

Signed: Phirun Son Date: 26-Oct-2009


Phirun Son 100064517

University of South Australia

School of Computer and Information Science

Bachelor of Information Science

(Advanced Computer and Information Science)

CIS Honours Minor Thesis

Minor Thesis

Analysing Microarray Data Using Bayesian Network Learning

Student: Phirun Son

Student ID: 100064517

Supervisor: Dr Lin Liu


Acknowledgement

Firstly, I would like to thank my supervisor, Dr Lin Liu, who was always helpful to me. Her support, guidance and encouragement made this work possible, despite the many complications that arose.

I would also like to thank the University of South Australia for allowing me the opportunity to learn and providing a great learning environment. The resources provided by the University were invaluable to my studies.

Last but not least, I would like to thank my family and friends for supporting me and helping me throughout my time at University.


Declaration

I declare that this thesis contains work carried out by myself and does not, to the best of my belief, incorporate without acknowledgement any previously published work or material written by another person. All substantive work by others is clearly acknowledged in the text.

Phirun Son


List of Figures

Figure 1: Microarray Example Image
Figure 2: Bayesian Network Example Image
Figure 3: Naive Bayesian Network Structure
Figure 4: Data Conversion – Value Conversion
Figure 5: Data Conversion – Missing Value Removal

List of Tables

Table 1: Harvard Data Set – Cross-Validation
Table 2: Michigan Data Set – Cross-Validation
Table 3: Harvard and Michigan Training on Stanford – 161 genes
Table 4: Harvard and Michigan Training on Stanford – 100 genes
Table 5: Harvard and Michigan Training on Stanford – 39 genes
Table 6: Integrated Harvard and Michigan Training on Stanford
Table 7: Harvard Training on Stanford – Structure Learning
Table 8: Harvard Training on Stanford – Structure Learning

Contents

Acknowledgement
Declaration
List of Figures
List of Tables
Abstract
Introduction
  Microarray
    Gene Expression
    Microarray Technology
    Challenges
  Research Question
  Purpose and Limitations
DNA Microarray
  Analysis of Microarray Data
Bayesian Networks
  Structure Learning
  Bayesian Network Classification
  Summary
Research Method
  Data Sets
  Platform
Naive Structure Classification
  Tools and Data Preparation
  Single Set Cross Validation
  Single Set Training
  Integrated Data Set Training
Structure Learning Classification
Conclusion
Future Work
References
Appendix A – Naive Classification (Cross-Validation)
Appendix B – Naive Classification (Training Set)
Appendix C – K2 Classification (Training Set)

Abstract

Gene microarrays are a technology that allows the expression levels of several thousand genes to be measured at once. This yields large amounts of data, which in turn allow the discovery of how various genes may affect the human condition (Shalon et al, 1996). However, because this data is so large, it is very difficult to find which genes are responsible for these conditions. This is due in no small part to the shape of the data: the number of genes is very large, while the number of samples is small.

Even so, the data produced by these microarray experiments are useful in many ways, such as to assist in classification. Classification is the task of assigning a class label to a sample in a data set. It is generally applied to data whose class is not known, but validation is usually performed on samples whose classification has already been identified.

In this paper, the use of Bayesian Networks for classification is investigated. Bayesian Networks have characteristics that are desirable when working with microarray data, as they can attempt to learn network structure from data that is incomplete or noisy, as microarray data is (Heckerman, 1998).

Along with the investigation into Bayesian Network methods for classification inference, this thesis will also examine the effects of data integration on classification. Integration deals with combining several similarly themed data sets in order to obtain better classification results.


Introduction

Microarray

Gene Expression

Genetic research has recently become a major area of bioinformatics. In particular, growing attention has been paid to understanding gene regulatory networks, also known as gene expression networks, which govern the way our bodies control cellular functions.

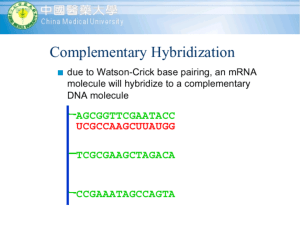

Deoxyribonucleic acid (DNA) is the template that our bodies use to create our cell structures. It is a form of nucleic acid which contains genetic instructions, which it relays to other components of our genetic makeup for the purpose of protein creation. This is done through messenger ribonucleic acid (mRNA), which is produced by transcribing DNA sequence information into RNA sequence information. Ribonucleic acid (RNA) contains the information and components needed to create cellular proteins (Knox et al, 2001).

The process of transcribing this information is known as gene expression, or gene regulation. The levels at which genes are expressed can be measured in a variety of ways, but one method that has allowed an unprecedented scope of data is the use of microarrays (Shalon et al, 1996).

Microarray Technology

A gene microarray is a technology which is able to capture the expression levels of thousands of genes at a time, giving a huge amount of data. This is done using a solid surface, such as a glass or silicon chip, to which thousands of microscopic spots are attached. These spots are known as probes, and each is generally a short section of a gene, which can be processed using chemicals such as fluorophores in order to determine the level of expression of the gene. The expression levels of these targeted genes are generally captured over a period of time, during which the genes are exposed to some form of stimulus, such as a drug treatment, giving the progression of expression levels as a reaction to the stimulus (Shalon et al, 1996).

Challenges

This method of capturing gene expression levels clearly creates a huge amount of data to interpret. However, the process has a downside: although a large set of genes is measured, each experiment can only capture a limited number of time instances. In addition, as the process uses biological data, and there are numerous different methods of both capturing and interpreting the data, it is difficult to combine the results of various experiments.

In order to overcome the obstacle of having a limited sample of data, there are various methods of reducing the complexity of the original data. A basic way of reducing the complexity of a single data set is to isolate the gene variables that most affect the overall class of a sample. This method may help to decrease complexity, but with only a limited number of samples, the reduction may not be very accurate and could be biased.
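As a rough illustration of isolating the genes that most affect the class, the sketch below ranks genes by a simple signal-to-noise style score (difference of class means divided by the summed class standard deviations). The scoring rule, the function name `rank_genes` and the toy values are assumptions made for illustration only; they are not the reduction method used in this thesis.

```python
from statistics import mean, pstdev


def rank_genes(rows, labels, positive):
    """Return gene indices ordered from most to least class-discriminating.

    rows: list of samples, each a list of expression values (one per gene).
    labels: class label of each sample; `positive` names one of two classes.
    """
    pos = [r for r, l in zip(rows, labels) if l == positive]
    neg = [r for r, l in zip(rows, labels) if l != positive]
    scores = []
    for j in range(len(rows[0])):
        a = [r[j] for r in pos]
        b = [r[j] for r in neg]
        spread = pstdev(a) + pstdev(b) + 1e-9  # small constant avoids division by zero
        scores.append((abs(mean(a) - mean(b)) / spread, j))
    return [j for _, j in sorted(scores, reverse=True)]


# Toy data: gene 0 separates the classes clearly, gene 1 barely at all.
toy_rows = [[5.0, 1.0], [6.0, 2.0], [1.0, 1.5], [2.0, 1.0]]
toy_labels = ["tumour", "tumour", "normal", "normal"]
ranking = rank_genes(toy_rows, toy_labels, positive="tumour")
```

With so few samples per class, a ranking like this is itself unstable, which is the bias concern raised above.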

To gather better results from this method, several similar data sets could be used. This would have several effects on the outcome of the reduction. Since microarray data sets vary between different tests, even data sets on the same subject matter may not have a large amount of overlap in the genes tested. This means that when comparing several sets, non-overlapping genes may be eliminated. The increase in the number of samples gained by using several sets also inherently adds more reliability to the results gathered.
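The elimination of non-overlapping genes can be sketched as a set intersection followed by pooling of the samples. This is a minimal sketch under assumed conventions: the dictionary layout, the gene identifiers and the function name `integrate_on_common_genes` are hypothetical, and it assumes the values have already been normalised to a common scale.

```python
def integrate_on_common_genes(datasets):
    """Keep only genes measured in every data set, then pool the samples.

    Each data set is a dict {"genes": [...], "samples": [[...], ...]},
    where every sample row is ordered like that set's own "genes" list.
    """
    common = set(datasets[0]["genes"])
    for ds in datasets[1:]:
        common &= set(ds["genes"])
    common = sorted(common)  # one fixed column order shared by all sets

    pooled = []
    for ds in datasets:
        index = {gene: i for i, gene in enumerate(ds["genes"])}
        for row in ds["samples"]:
            pooled.append([row[index[gene]] for gene in common])
    return common, pooled


# Two toy sets that share only genes G1 and G3.
set_a = {"genes": ["G1", "G2", "G3"], "samples": [[0.1, 0.5, 0.9], [0.2, 0.4, 0.8]]}
set_b = {"genes": ["G3", "G1", "G4"], "samples": [[0.7, 0.3, 0.6]]}
genes, rows = integrate_on_common_genes([set_a, set_b])
```

The pooled table has more samples but fewer genes than either input, which is the trade-off described above.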

However, the challenge in using several sets derives from the non-uniformity of the data gathering methods. For comparisons to be made between data gathered from similar test subjects, the data must be in the same format; the difficulty is that different data gathering and interpretation methods output data that is incompatible with that of other experiments.

Research Question

There has been research into combining several data sets in order to obtain better results when classifying samples in a gene microarray data set. In order to validate the correctness of integrating data from several sets produced by differing experimental methods, there are a number of procedures that may be carried out.

In order to test whether data has been properly normalised to work across sets, one set can be used to test whether it can accurately identify the classification of samples in the other sets. If that proves to be the case, the use of several data sets can then be tested to see if the increase in samples leads to higher accuracy in classifying unknown samples.

With that said, the challenge comes from performing the prediction and validation of the classification. There are several methods of performing validation, including the use of several modelling structures. This paper will focus on the use of Bayesian Network structures in order to execute the task. This decision has the dual benefit of testing the integration of several data sets as well as the suitability of Bayesian Network learning techniques.


The use of Bayesian Networks also offers the opportunity to explore structure learning for Bayesian Network models. For Bayesian Networks to be at their most efficient and accurate, a proper model of the graph component of the network is essential. Typically, naive structures are used as a method of validation; however, a naive structure is not optimal, as it does not fully utilise the advantages of a Bayesian Network. By applying structure learning to the data sets used in validation, this paper can investigate whether structure learning enhances the precision of inference.

Therefore, the aim of this paper will be to explore these research questions:

Are Bayesian network structures suitable for modelling gene regulatory networks?

This question will expand on the idea of Bayesian Networks used as a modelling paradigm for gene networks. In order to do this, other methods of modelling must be analysed and compared to Bayesian Networks.

Can several data sets from various experiments be used conjunctively to raise effectiveness?

This will explore whether data, which has been gathered using different techniques, but on similar subjects, can be normalised into data that can be used conjunctively. In order to test this, the data from one set should be able to correctly predict the classification of the samples of another set.

Can the combination of several similar data sets impact the precision of classifying unknown samples of similar sets?


This will identify the viability of analysing several similar data sets in order to perform predictions on unknown samples in another similar data set. If this is viable, each newly available data set increases the amount of data that can be used to perform predictions, leading to higher accuracy as more samples are gathered.

Are Bayesian Networks a suitable solution for predicting the classification of a gene microarray data sample? Can the use of structure learning enhance the performance of using Bayesian Network inference for classification?

This question will illustrate the accuracy of using Bayesian Network models to infer the classification of unknown data samples. The use of structure learning can also be tested and compared both to Bayesian Network learning using naive Bayesian structures and to other, non-Bayesian Network methods of inference.

Purpose and Limitations

Gene research is an important element in discovering more about how our bodies function. It can help to found new medical research and to develop treatments and cures for diseases. If we could better understand the way that our genes interact with one another to produce the building blocks of our bodies, it would be possible to further research into genetic science.

This can be achieved by using the data collected from microarray experiments, which are becoming more prevalent over time, and more freely available now due to public databases. By utilising this available data, novel approaches to deciphering the information they contain can arise, and with the increasing amount of data available, these techniques can be tested and applied to a wide variety of different causes.

In order to properly use the data available, the appropriate techniques must be applied, and finding the techniques that work is part of this research. By testing the techniques devised, this research hopes to evaluate their validity and to allow them to be expanded upon in further research.

To fulfil this goal, established techniques and vendor software are used. This research does not encompass the task of finding new methods of analysing data, as this is a major task that would be infeasible to complete within the scope of a minor thesis; rather, it applies pre-existing methods to a new problem, and hopes to validate the hypotheses stated.

The contributions that this thesis will attempt to make are the following: to investigate the usability and feasibility of Bayesian Network Classifiers on gene microarray data using single data sets, and to investigate the same with integrated data sets.


DNA Microarray

DNA microarray technology is fairly recent, having been introduced in the mid-1990s. Along with allowing an unprecedented number of genes to be monitored at once, microarray technology solved problems faced by earlier methods of monitoring gene expression levels, such as blotting. For instance, blotting required the use of porous membranes on which the gene probes were attached. This limited the scope of genes that could be measured, due to the need for radioactive, chemiluminescent or colorimetric detection methods. These methods cause the probe readings to scatter and disperse. This is not the case with microarray technology, which can be applied on a glass surface and uses fluorescent detection methods, resulting in lower background interference and greater probe density (Shalon et al, 1996). This not only allows more genes to be measured at once, but also increases consistency, as they are measured during the same experiment rather than across several experiments whose results would later have to be normalised.

Figure 1: Example of a 37,500 probe spotted oligo microarray (public domain)


Microarray technology is not the only technology to have been developed in recent years; other methods have emerged, such as serial analysis of gene expression (SAGE) (Velculescu et al, 1995). However, microarray technology has been adopted most widely because it is relatively easy to use: it does not require radioactive materials, and therefore does not require specialised labs (Russo et al, 2003). Another advantage is that microarray technology is relatively cheap, and there are various types of microarray technology at a range of costs, allowing firms of various sizes to afford some kind of microarray-based experiment (Granjeaud et al, 1999).

Due to the popularity and relative ease of microarray technology, there is a huge influx of microarray data, but little capacity to process it into valuable information (Granjeaud et al, 1999). To compound this, there has been little standardisation of the format of microarray data. There are many different manufacturers and procedures used to collect the gene data, so naturally there is variation in how the final data is represented. There have been attempts to standardise the format of microarray data to relieve this problem, with the most widely accepted being ‘Minimum Information About a Microarray Experiment’ (MIAME), developed by the Microarray and Gene Expression Data Society (MGED) (Brazma et al, 2001). This standardisation has allowed repositories of microarray data to be formed, making gene expression data freely available to the public. Such databases include ArrayExpress from the European Bioinformatics Institute (Brazma et al, 2003) and Gene Expression Omnibus (GEO) from the National Center for Biotechnology Information (Edgar et al, 2002), both of which contain an abundance of freely available microarray data.

Analysis of Microarray Data

Although there is an abundance of data available in a standard format, the true challenge lies in interpreting it. Due to the nature of microarray experiments, the output data covers a very large number of genes, but each gene can only be sampled a limited number of times. This creates a large disparity between the number of genes and the number of samples. This disparity makes it difficult to extract useful information from the data, and so a clear objective is required when performing microarray experiments.

One common objective of a microarray experiment is ‘class comparison’, in which different groups of subjects have their gene expression levels compared in order to discover which genes are expressed differently between the different classifications (Simon et al, 2003). This can be used to isolate the genes whose expression varies most between the classifications. For example, this technique can be applied by comparing the genes of a healthy sample with the genes of a sample which has an illness or disease. By comparing several differing samples, a general hypothesis can be made as to which genes are most likely to affect the classification of healthy and ill.

Similar to this is the process of ‘class prediction’ (Golub et al, 1999), which is what this paper will focus on. Class prediction is the use of known classes in estimating the class of unknown samples. Using data from subjects of known class, new subjects can be inferred to be of a certain class by checking whether their gene expression resembles that of the known class. For this to be possible, the class prediction approach requires a set of genes which are correlated with the class. By examining these genes, an unknown subject’s classification can be inferred by comparing the expression levels of the chosen genes.

However, performing these predictions is a problem for which there are several solutions. One of the aims of this paper is to compare the success and accuracy of certain techniques against each other. For this paper, various techniques utilising Bayesian Networks will be tested for classification inference.


Bayesian Networks

There have been different ideas as to which model would be best suited to modelling gene networks, such as neural networks (Xu et al, 2007), linear equations (Gebert et al, 2007) and Boolean networks (Shmulevich et al, 2002). This paper, however, will focus on the use of Bayesian Networks for the modelling of gene data.

Figure 2: A simple example of a Bayesian Network (public domain).

Bayesian networks, also known as belief networks, represent a set of variables in the form of nodes on a directed acyclic graph (DAG), with each node containing a conditional probability table. Each edge in the graph represents a conditional dependency between two nodes. According to Heckerman (1998), Bayesian networks bring four advantages as a data modelling tool, three of which are directly beneficial when working with gene microarray data. Firstly, Bayesian networks are able to handle incomplete or noisy data, which is a common trait of microarray experiments due to the nature of how the data is captured. Secondly, Bayesian networks are able to ascertain causal relationships through conditional independencies, allowing the modelling of relationships between genes. The last advantage is that Bayesian networks are able to incorporate existing knowledge, or pre-known data, into their learning, allowing more accurate results by using this known data when calculating the model. These points are reiterated in many papers as reasons why Bayesian networks are a viable solution for modelling microarray data (Chen et al, 2006; Spirtes et al, 2001; Wang et al, 2007; Yavari et al, 2008).
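The definition above, a DAG whose nodes each carry a conditional probability table, corresponds to the standard factorisation of the joint distribution. As a brief illustration, write $X_1, \dots, X_n$ for the variables (for example, discretised gene expression levels) and $\mathrm{Pa}(X_i)$ for the parents of $X_i$ in the graph; this notation is assumed here and is not taken from the thesis.

```latex
P(X_1, \dots, X_n) = \prod_{i=1}^{n} P\bigl(X_i \mid \mathrm{Pa}(X_i)\bigr)
```

Each factor is one node's conditional probability table, so a node with few parents needs only a small table even when the network as a whole contains many variables.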

Bayesian Networks are able to perform various tasks by using the knowledge they contain. One such task is inference, which is calculating the probability of an unknown variable given the other variables in the model. There are various methods of performing inference on a data sample, each with its own advantages and limitations. For example, some methods of inference may only work on discrete data as opposed to continuous data. Other methods work on a different topology than the directed acyclic graph model. For instance, the Quickscore method of Bayesian Network inference uses a Quick Medical Reference structure for inference (Heckerman, 1989). There are also methods which perform exact probability inference, but which are slower and require more computational power than algorithms which perform approximate inference.
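To make the idea of exact inference concrete, the sketch below computes a posterior probability by brute-force enumeration on the familiar three-node textbook network (rain, sprinkler, wet grass). The network, its probability values and the function names are illustrative assumptions and have nothing to do with the gene data; enumeration is also only practical for very small networks, which is why approximate methods exist.

```python
from itertools import product

# Illustrative conditional probability tables (made-up textbook numbers).
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER = {True: {True: 0.01, False: 0.99},   # P(sprinkler | rain=True)
               False: {True: 0.4, False: 0.6}}    # P(sprinkler | rain=False)
P_WET = {(True, True): 0.99, (True, False): 0.9,  # P(wet=True), keyed by (sprinkler, rain)
         (False, True): 0.8, (False, False): 0.0}


def joint(rain, sprinkler, wet):
    """Joint probability as the product of each node's CPT entry."""
    p_wet = P_WET[(sprinkler, rain)]
    return (P_RAIN[rain]
            * P_SPRINKLER[rain][sprinkler]
            * (p_wet if wet else 1.0 - p_wet))


def posterior_rain_given_wet():
    """P(rain=True | wet=True), summing the joint over the hidden variable."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True) for r, s in product((True, False), repeat=2))
    return numerator / evidence


p_rain = posterior_rain_given_wet()
```

The cost of this approach doubles with every additional binary variable that must be summed out, which illustrates the trade-off between exact and approximate inference mentioned above.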

One major reason for choosing to use Bayesian Networks is the possibility of applying structure learning to the model. Structure learning is the process of using available data to create a probable topology for the data. There are several different techniques that can be utilised to perform this; however, the challenge is in finding a technique suitable for gene data, which presents many difficulties.

Structure Learning

Structure learning is the act of finding a plausible structure for a graph based on input data. However, this has been proven to be an NP-hard problem (Chickering, 1996), and therefore any learning algorithm applied to a dataset as large as microarray data would require some form of modification to be feasible.

Spirtes et al (2001) explain that finding the most appropriate DAG from sample data is a large problem, as the number of possible DAGs grows super-exponentially with the number of nodes present. With gene data, this number is typically in the thousands, if not more. An exhaustive search of DAGs for the optimal model is therefore infeasible. Even with the use of reduction to decrease the number of variables in a dataset, the task would be immense. The use of heuristics is imperative in reducing the complexity of structure learning methods.
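The growth in the number of DAGs can be checked numerically. The sketch below uses Robinson's recurrence for counting labelled DAGs, a standard combinatorial result that is not drawn from this thesis; the function name `count_dags` is an arbitrary choice.

```python
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def count_dags(n):
    """Number of labelled directed acyclic graphs on n nodes (Robinson's recurrence)."""
    if n == 0:
        return 1
    return sum((-1) ** (k + 1) * comb(n, k) * 2 ** (k * (n - k)) * count_dags(n - k)
               for k in range(1, n + 1))


dag_counts = [count_dags(n) for n in range(6)]  # counts for 0 to 5 nodes
```

Five nodes already admit 29,281 distinct DAGs, so exhaustive search is ruled out long before reaching the thousands of genes in a microarray data set.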

The K2 algorithm, a widely used technique for structure learning, is one such standard learning algorithm which uses heuristics in order to limit the search space of DAGs. It requires a node ordering; that is, the variables given to the algorithm must be ordered from ancestor to descendant. It starts from the assumption that a node has no parents, then incrementally adds parent nodes and recalculates the probability of the structure until no further parent node is found to be beneficial (Cooper & Herskovits, 1992).
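The greedy search described above can be sketched in a few lines. This is a minimal illustration rather than the implementation used in this thesis: it assumes integer-coded discrete data, scores parent sets with the logarithm of the Cooper and Herskovits metric, and caps the number of parents with a `max_parents` argument. The function names and the toy data are hypothetical.

```python
from collections import defaultdict
from math import lgamma


def log_k2_score(data, child, parents, arity):
    """Log of the K2 metric for one node given a candidate parent set."""
    r = arity[child]
    counts = defaultdict(lambda: [0] * r)  # parent configuration -> child value counts
    for row in data:
        counts[tuple(row[p] for p in parents)][row[child]] += 1
    score = 0.0
    for child_counts in counts.values():
        n_ij = sum(child_counts)
        score += lgamma(r) - lgamma(n_ij + r)              # (r-1)! / (N_ij + r - 1)!
        score += sum(lgamma(n + 1) for n in child_counts)  # product of N_ijk!
    return score


def k2(data, order, arity, max_parents=2):
    """Greedy K2 search: only nodes earlier in `order` may become parents."""
    parents = {node: [] for node in order}
    for pos, node in enumerate(order):
        best = log_k2_score(data, node, parents[node], arity)
        candidates = list(order[:pos])
        while candidates and len(parents[node]) < max_parents:
            scored = [(log_k2_score(data, node, parents[node] + [c], arity), c)
                      for c in candidates]
            new_best, choice = max(scored)
            if new_best <= best:
                break  # no candidate parent improves the score
            best = new_best
            parents[node].append(choice)
            candidates.remove(choice)
    return parents


# Toy data: variable 1 copies variable 0, variable 2 is unrelated to both.
toy_data = [(0, 0, 0), (0, 0, 1), (1, 1, 0), (1, 1, 1)] * 5
learned = k2(toy_data, order=[0, 1, 2], arity={0: 2, 1: 2, 2: 2})
```

On the toy data the search recovers the single dependency of variable 1 on variable 0. Reversing the order would instead make variable 0 depend on variable 1, since only earlier nodes can be chosen as parents.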

From the way the K2 algorithm operates, it is easy to see that the ordering of nodes is a large factor in determining the final result. This poses a difficulty, as the ordering is not known in advance for a network such as a gene network.

There have been attempts at modifying the K2 algorithm in order to create a more feasible form, such as an effort by Numata et al (2007) to learn more appropriate orderings by observing a number of distributed orders and narrowing down the order through observation. Another effort was made by Chen et al (2006), who split the task of searching by first creating an undirected graph using mutual information. The graph is then processed using the reduced search space in order to assign directions to the undirected edges.
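Mutual information, the quantity used to build the undirected graph, can be estimated from discretised expression values as sketched below. This only illustrates the measure itself, using natural logarithms and empirical frequencies; it is not the procedure of Chen et al (2006), and the function name and toy vectors are assumptions.

```python
from collections import Counter
from math import log


def mutual_information(x, y):
    """Empirical mutual information (in nats) between two discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum((c / n) * log((c / n) / ((px[a] / n) * (py[b] / n)))
               for (a, b), c in pxy.items())


gene_a = [0, 0, 1, 1]
gene_b = [0, 0, 1, 1]   # identical to gene_a: maximal dependence
gene_c = [0, 1, 0, 1]   # unrelated to gene_a in this toy sample

mi_dependent = mutual_information(gene_a, gene_b)
mi_independent = mutual_information(gene_a, gene_c)
```

Pairs of genes with high mutual information are candidates for an undirected edge, which is what shrinks the search space before directions are assigned.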


Other means of simplifying the problem have been to use hybrid techniques, combining different methods in an attempt to get better results. One example is the use of clustering, in which genes with similar expression profiles are grouped together in order to reduce the sample space. This allows conventional algorithms, which can run on the smaller number of variables, to be used. These network clusters can then be used to incrementally increase the scope of the network (Yavari et al, 2008; Zainudin & Deris, 2008).

There have also been efforts in which new algorithms are proposed to deal with the nature of gene data. Pena et al (2005) proposed a technique whereby an arbitrary seed gene is used as a base, a certain number of genes dependent on it are found, and the number of dependent genes to look for is then increased iteratively.

Bayesian Network Classification

There has been much research into the use of Bayesian Networks for classification. There are five general types of structure used in Bayesian Network classifiers: naive Bayesian structures, tree-augmented Bayesian structures, Bayesian augmented naive structures, Bayesian multi-nets and general Bayesian structures (Cheng & Greiner, 2001). The naive Bayesian structure, the simplest structure due to its static nature, has been found to be effective for classification inference on data where the variables in the structure are not strongly correlated (Langley et al, 1992). However, this is a strong assumption, and there have been studies aimed at finding a better structure learning method for use with classification.
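The naive structure can be illustrated with a small classifier in which every gene depends only on the class. The sketch below assumes discretised expression values, uses Laplace smoothing (`alpha`), and trains on one toy set before testing on another, mirroring the cross-set validation idea. All names, parameters and data are hypothetical, and this is not the tool used for the experiments in this thesis.

```python
from collections import Counter, defaultdict
from math import log


def train_naive_bayes(rows, labels, n_values=2, alpha=1.0):
    """Count class and per-gene value frequencies; n_values is the number of states per gene."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)  # (class, gene index) -> value counts
    for row, label in zip(rows, labels):
        for j, value in enumerate(row):
            feature_counts[(label, j)][value] += 1
    return class_counts, feature_counts, n_values, alpha


def predict(model, row):
    """Return the class with the highest smoothed log posterior."""
    class_counts, feature_counts, n_values, alpha = model
    total = sum(class_counts.values())

    def log_posterior(label):
        score = log(class_counts[label] / total)
        for j, value in enumerate(row):
            count = feature_counts[(label, j)][value]
            score += log((count + alpha) / (class_counts[label] + alpha * n_values))
        return score

    return max(class_counts, key=log_posterior)


# Toy training set: gene 0 tracks the class, gene 1 is uninformative.
train_rows = [[1, 0], [1, 1], [0, 0], [0, 1]]
train_labels = ["tumour", "tumour", "normal", "normal"]
model = train_naive_bayes(train_rows, train_labels)

# A separate toy test set stands in for an independent data set.
test_rows = [[1, 1], [0, 0], [1, 0]]
test_labels = ["tumour", "normal", "tumour"]
predictions = [predict(model, row) for row in test_rows]
accuracy = sum(p == t for p, t in zip(predictions, test_labels)) / len(test_labels)
```

Because each gene contributes an independent factor, correlated genes are effectively counted more than once, which is the weakness that motivates the richer structures listed above.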

For example, Helman et al (2004) investigated a method of breaking the classification problem down into sub-networks involving a class label and the parent genes for that class. Others have analysed different structures in order to compare the results of the various methods. Cheng and Greiner (1999) compared four different structures, namely General Bayesian Networks, Tree-Augmented Naive Networks, Bayesian Augmented Naive Networks and plain naive Bayesian Networks, and found General Bayesian Networks and Bayesian Augmented Naive Networks to be the most promising. They also proposed using a wrapper to return the best result of the two methods to further improve results.

Cheng and Greiner (2001) later went on to study the use of Bayesian Multi-Nets for classification. A Bayesian Multi-Net is a version of a Bayesian Network in which a separate local network exists for each state that the class variable can take (Geiger & Heckerman, 1996).

Summary

Classification of gene networks is still an active topic and there is still much research to be done in the domain. This thesis will attempt to address some of the issues faced by researchers in this field of study. Bayesian Networks as a whole receive a lot of attention due to their potential usefulness, but that potential has yet to be fully utilised.

Research Method

Data Sets

With the increase in the number of publicly available databases of free gene microarray data comes the opportunity to attempt integration of several similar data sets. Within this research project, however, data sets which have been previously studied are used, so that existing results may be compared against the Bayesian Network approach of this thesis.

This research is based primarily on the work of Li (2009), which used three independent data sets from three separate universities. The first comes from Harvard University (Bhattacharjee et al, 2001), which used Affymetrix U95A GeneChips on 186 lung tumour samples and 17 normal lung samples. Of these, a subset featuring only the samples identified as adenocarcinomas and the normal lung samples is extracted; this is the data used for the Harvard experiment. The second, from Michigan (Beer et al, 2002), is a study of 86 adenocarcinomas and 10 normal lung samples captured using Affymetrix HuGene GeneChips, giving a total of 96 samples. The final data set comes from Stanford University (Garber et al, 2001), which collected samples from 67 tumours, of which 41 were adenocarcinomas, along with 5 normal lung specimens, totalling 46 samples. The experiment was performed using 24,000-element cDNA microarrays. From here on, the three data sets will be referred to by their lab names: Harvard, Michigan and Stanford.

These three data sets are all publicly available and all focus on comparing adenocarcinoma samples with healthy samples. However, as the experiments were independent, and each lab used different equipment to collect the samples, using the results collectively is not a trivial task. On top of this, the number of genes collected from each sample, and the genes themselves, are not uniform among the three data sets. The Harvard data set contained 11,657 genes, the Michigan data set contained 6,357 genes and the Stanford data set contained 11,985 genes.

In order to combine the data, a number of operations had to be performed, such as removing duplicate genes, normalising gene and class labels, and selecting the genes common to all data sets. After completing these tasks, a subset of each data set was obtained in which the gene variables are the same across the three data sets.

Once these subsets were obtained, the next step was to further reduce the number of genes, as 1,963 genes still remained after these processes. A series of feature selection tasks was applied to bring the number of genes down to a reasonable size; this was performed in a number of ways in order to test the differences between data integration methods, which are discussed in a later chapter of this thesis. The output of the feature selection was three subsets of each of the three data sets: one containing 161 gene variables, one containing 100 gene variables, and one containing 39 gene variables.

These data subsets were created in order to compare the usability of different methods of data integration. For the 100 gene variable subset, each data set had feature selection performed on it individually. This created a subset of the 100 genes judged to be the most influential for that data set, which did not necessarily mean that those genes were the most influential for another data set.

Two techniques were used for integrating data. The first was to extract the union of two data sets’ top 100 gene variables, creating the 161 gene variable subsets. The second was to take the intersection of two data sets’ top 100 gene variables, resulting in the 39 gene variable subsets.
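The two integration techniques can be illustrated with a minimal sketch, written here in Python rather than the MATLAB used for the thesis program. The function name and the gene identifiers are hypothetical and are not taken from the data sets; the sketch only shows how a union and an intersection of two ranked gene lists could be formed.

```python
def integrate_gene_sets(top_a, top_b):
    """Return (union, intersection) of two ranked gene lists.

    Order is preserved: genes from top_a first, then the genes that
    appear only in top_b. Names are illustrative assumptions.
    """
    set_a, set_b = set(top_a), set(top_b)
    union = list(top_a) + [g for g in top_b if g not in set_a]
    intersection = [g for g in top_a if g in set_b]
    return union, intersection


# Hypothetical top-ranked genes for two labs (placeholders, not real data).
harvard_top = ["G1", "G2", "G3", "G4"]
michigan_top = ["G3", "G4", "G5", "G6"]

union, intersection = integrate_gene_sets(harvard_top, michigan_top)
print(union)         # genes selected by either lab
print(intersection)  # genes selected by both labs
```

For any two lists, the size of the union plus the size of the intersection equals the sum of the two list sizes, which is consistent with the subset sizes reported above (161 + 39 = 100 + 100).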

Even though the data sets were now reduced to 161, 100 and 39 variables, as opposed to thousands, this is still a fairly large number for modelling Bayesian Networks. Because the number of possible graphs for a Bayesian Network grows super-exponentially with the number of variables, it is not feasible to apply exhaustive techniques to the data sets. Therefore, only heuristic Bayesian Network learning techniques are considered.
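To give a sense of this growth, the number of directed acyclic graphs on n labelled nodes can be counted with Robinson's recurrence. The following Python sketch is an illustration only and is not part of the thesis program; the function name is an assumption.

```python
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def count_dags(n):
    """Number of directed acyclic graphs on n labelled nodes.

    Uses Robinson's recurrence:
    a(n) = sum_{k=1..n} (-1)^(k+1) * C(n, k) * 2^(k(n-k)) * a(n-k), a(0) = 1.
    """
    if n == 0:
        return 1
    return sum(
        (-1) ** (k + 1) * comb(n, k) * 2 ** (k * (n - k)) * count_dags(n - k)
        for k in range(1, n + 1)
    )


for n in (1, 2, 3, 4, 5):
    print(n, count_dags(n))  # 1, 3, 25, 543, 29281

# Even the smallest subset used here (39 gene variables plus the class)
# gives a count with hundreds of decimal digits.
print(len(str(count_dags(40))))
```

Five nodes already admit 29,281 structures, which is why exhaustive search over graphs is ruled out for the data subsets used here.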

In order to test the usability of the data sets obtained from these experiments, a series of methods were written to perform Bayesian Network learning on the data sets. The first consideration when performing Bayesian Network techniques is the problem of creating a graph structure for the Bayesian Network based on the data.

One established method of modelling data for inference with Bayesian Networks is to create a naive network structure. This is a very basic topology in which one variable, the classification variable, is made a parent of all other nodes in the graph, and there are no other connections.

However, the naive Bayesian Network structure does not take full advantage of the benefits of Bayesian Networks. The naive structure carries a strong assumption that all variables are conditionally independent of each other given the class variable. It may therefore not be the ideal solution for classification inference, and structure learning techniques can be used to find a more suitable graph structure and to test whether it offers any advantage.

Figure 3: A naive network structure of size N

Although there are a number of established techniques for modelling data as a Bayesian network, not all of them are feasible when dealing with gene data, whether because of time limitations, memory limitations, or accuracy. This is why novel approaches have been proposed that adjust and modify some of these established techniques in order to model gene data more accurately. There are many such approaches and it would not be possible to test every one of them; that topic is better suited to a dedicated research project of its own and is too large to be integrated into this thesis. The structure learning techniques are therefore limited to conventional methods.

Once these considerations have been taken care of, the next step was to find a suitable platform in order to create a program to perform the operations needed.

Platform

In order to test the techniques chosen, a platform is required on which the various algorithms can be run and tested on data on an equal footing. A system is therefore needed into which data from gene microarray experiments can be imported, along with a way to represent Bayesian network structures and the capability to perform the intended algorithms on them.

For this purpose, the environment of MATLAB has been chosen (Mathworks, 2009).

MATLAB is a well-established environment in which many mathematical and scientific functions are already provided, and its capabilities can be extended through toolboxes. The MATLAB environment is also fairly easy to use, as it caters to users who are not necessarily well versed in computer science, so even complex activities can be performed with relative ease.

In terms of toolboxes, there is a free, open source toolbox known as the Bayesian Network Toolbox (BNT) (Murphy, 2001). As the name implies, the toolbox extends MATLAB with the data structures and functions needed to represent and manipulate Bayesian Networks. This removes the need to create a representation of Bayesian networks ourselves, and the toolbox also comes with many convenient functions which help in processing data into Bayesian networks.

Along with the BNT, there is another extension called the Structure Learning Package (SLP) (Leray & Francois, 2004), which extends the BNT further by adding functions for a number of common structure learning algorithms, such as Markov Chain Monte Carlo (MCMC), PC, K2, Maximum Weight Spanning Tree (MWST) and greedy search. This package allows more freedom in choosing a suitable structure learning algorithm, as the base BNT has very limited structure learning capabilities. The SLP is also a free, open source toolbox extension for MATLAB.

Another consideration in terms of the platform comes from the comparison of Bayesian Networks with the original Decision Tree based inference performed by Li (2009). In her paper, she used a program called WEKA (Hall et al, 2009), a data modelling tool created by the University of Waikato, New Zealand. The program has many tools and functions relevant to the topic, including the ability to perform classification tasks similar to what is being proposed in this thesis; however, at this time the program does not seem to support structure learning for Bayesian Networks.

WEKA therefore complements the program created in MATLAB well, serving as an established product with sophisticated functions against which the program can be tested.

Naive Structure Classification

Tools and Data Preparation

The first step in performing classification was to start with the simplest method: using a naive network structure, so that only parameter learning is required in order to construct the Bayesian Network.

The program used to perform this process was built using the MATLAB programming environment, and utilised the Bayesian Network Toolbox extension (Murphy, 2001).

In order to import the data into MATLAB, some changes were made to the data set files, which were supplied in file types specific to the WEKA (Hall et al, 2009) environment. The changes were made manually, converting the files into comma delimited numerical values.

The first step was to remove unnecessary header data from the files. The files in the WEKA format listed the names of each attribute and the possible values of those attributes. This information was not required and was therefore removed, leaving the files with just the attribute data.

In the discretised data sets, each gene variable was denoted by a character string, specifically “high” or “low”, separated by commas. Each line in the file represented one data sample, with the variable values listed in order and delimited by commas. The final variable on each line was denoted by “ADEN” or “NORMAL” to indicate the class of the sample. In order to be imported into MATLAB, each string was assigned a numerical value, and all instances of the string were replaced by this value. In these data sets, which contained two discretised values, “low” was replaced by 1 and “high” by 2. The numbering begins from 1 because MATLAB indices begin from 1. The class variable, described by the values “NORMAL” and “ADEN”, with “NORMAL” being a healthy sample and “ADEN” being a sample diagnosed with adenocarcinoma, was replaced in the same fashion in all data sets: “ADEN” was replaced with the value 1, and “NORMAL” with the value 2.
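The conversion described above was carried out manually in the thesis. As a minimal sketch of the same mapping, written in Python for illustration, the following assumes one comma-delimited sample per line with the class label last; the function and dictionary names are hypothetical.

```python
# Codes follow the text: "low" -> 1, "high" -> 2, "ADEN" -> 1, "NORMAL" -> 2.
GENE_CODES = {"low": 1, "high": 2}
CLASS_CODES = {"ADEN": 1, "NORMAL": 2}


def encode_sample(line):
    """Convert one comma-delimited sample into a list of integer codes.

    The last field is assumed to be the class label; all earlier
    fields are assumed to be discretised gene values.
    """
    *genes, label = [field.strip() for field in line.split(",")]
    return [GENE_CODES[g] for g in genes] + [CLASS_CODES[label]]


print(encode_sample("high,low,low,ADEN"))     # [2, 1, 1, 1]
print(encode_sample("low,low,high,NORMAL"))   # [1, 1, 2, 2]
```

A value outside the two dictionaries, such as the “?” marker for a missing value, raises a KeyError in this sketch rather than being silently converted.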

Figure 4: example of the state of data before conversion (left), and state after conversion (right) of the Harvard data set with 100 gene variables

One more lingering artefact from the WEKA files was that each data set, regardless of how many gene variables were present, still contained columns for 161 genes, the maximum size of the final data subsets. This was a side effect of the WEKA format, and it meant that the 100 and 39 variable data sets contained extra unknown values in each sample, denoted by a “?”. When these missing values were converted into “NaN”, the MATLAB symbol for “Not a Number”, it was found that the parameter learning algorithm used in the MATLAB program was unable to run when there were variables with no known values. All data sets therefore needed to be reduced to just the known gene variables. This added the extra task of realigning the data sets, so that the gene variable positions matched up in each of the data sets from the different labs.

For example, in the Harvard data subset with 39 gene variables, there are still 161 “columns” of data, but only 39 contain actual data; the unknown columns consist of “?” symbols. These “columns” are removed. However, this means that the corresponding subsets from the other data sets no longer line up with the modified Harvard subset. Therefore, for the other subsets to be compatible, the gene variables not present in the Harvard subset are also removed from them, even though those variables have values.
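The column removal and realignment can be sketched as follows. This is a Python illustration under the assumption that every data set has the same column layout and marks missing values with “?”; the function names and the tiny example rows are hypothetical.

```python
def known_columns(rows):
    """Indices of columns that hold at least one value other than '?'."""
    n_cols = len(rows[0])
    return {c for c in range(n_cols) if any(row[c] != "?" for row in rows)}


def align(datasets):
    """Keep only the columns that are known in every data set.

    A column that is entirely '?' in any one data set is dropped from
    all of them, so the remaining columns line up across data sets.
    """
    keep = sorted(set.intersection(*(known_columns(rows) for rows in datasets)))
    return [[[row[c] for c in keep] for row in rows] for rows in datasets]


# Hypothetical rows: the second gene column is unknown in the first set.
harvard = [["high", "?", "low", "ADEN"], ["low", "?", "low", "NORMAL"]]
michigan = [["low", "high", "high", "ADEN"]]

harvard_aligned, michigan_aligned = align([harvard, michigan])
print(harvard_aligned)   # [['high', 'low', 'ADEN'], ['low', 'low', 'NORMAL']]
print(michigan_aligned)  # [['low', 'high', 'ADEN']]
```

The class column is always known, so it survives the intersection and stays in the last position.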

Figure 5: example of the state of data before removing missing values (left) and after removing missing values (right)

The data sets are now in a suitable format to be imported into the MATLAB environment. The next step is to import the data into MATLAB and construct it into a Bayesian Network structure. In order to perform this task, the following information is required: the data itself, in the correct array format; a directed acyclic graph detailing the structure for the Bayesian Network; and the number of possible values for each variable in the data.

When the data is read in by MATLAB, the data array is in the opposite orientation than what is used by BNT, therefore a transposition of the data is required. This is a simple method call for MATLAB:

>> data = load('Harvard_100.data');   % one sample per row, as stored in the file
>> data = transpose(data);            % BNT expects one sample per column

With the data in the proper orientation, the next step is to obtain the number of possible values for each variable. For our purposes, each variable is discrete and has two possible values: “low”/“high” (coded as 1/2) for the gene variables, and “ADEN”/“NORMAL” (coded as 1/2) for the class variable. This number is nevertheless calculated dynamically, to accommodate differently sized data sets. Assuming that the input data is discrete and coded in order beginning from 1, the number of values of a variable is taken to be the largest value it takes over all samples in the data set. If this gives 1, an infinite value, or no value at all for a variable, the number of values is set to 2, the minimum size accepted.

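>> nNodes = size(data,1);            % gene variables plus the class variable
>> ns = ones(1,nNodes);
>> for n=1:nNodes
>>     ns(n) = max(data(n,:));       % values are coded 1..k, so the maximum is k
>>     if (ns(n) < 2 || ns(n) == Inf || isnan(ns(n)))
>>         ns(n) = 2;                % fall back to the minimum node size
>>     end
>> end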

Since this section focuses on using a naive Bayesian classifier to perform inference, the directed acyclic graph is created as a simple naive structure with the class variable at the head, as shown in Figure 3 above. In MATLAB, the graph is represented as an adjacency matrix in the form used by the Bayesian Network Toolbox, in which each gene variable node is given a link from the class variable, making the class variable its parent node.

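>> Nvar = size(data,1);              % 101 here: 100 gene variables plus the class
>> classNode = Nvar;                 % the class variable is the last row of data
>> dag = zeros(Nvar,Nvar);
>> for j=1:Nvar
>>     if (j ~= classNode)
>>         dag(classNode,j) = 1;     % edge from the class node to gene j
>>     end
>> end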

All the components needed are now ready to be combined into a Bayesian Network; the next step is to perform parameter learning in order to obtain conditional probability tables for each node in the Bayesian Network. Parameter learning is the process of calculating the conditional probability distributions of a Bayesian network given data and the structure of the network (Heckerman, 1998). Due to having the fixed structure of a naive network, all the requirements for performing parameter learning are fulfilled.
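For a naive structure, parameter learning reduces to counting how often each gene value occurs within each class. The following Python sketch illustrates this and the subsequent classification step. It is not the Bayesian Network Toolbox implementation; the function names are hypothetical, and the add-one smoothing (`alpha`) is an assumption of this sketch rather than something the thesis program is stated to use.

```python
from collections import Counter
from math import log


def learn_naive_params(samples, n_values=2, n_classes=2, alpha=1.0):
    """Estimate P(class) and P(gene = v | class) by smoothed counting.

    samples: lists of 1-based integer codes, class label last.
    alpha:   pseudo-count (an assumption of this sketch).
    """
    n_genes = len(samples[0]) - 1
    class_counts = Counter(s[-1] for s in samples)
    prior = {c: (class_counts[c] + alpha) / (len(samples) + alpha * n_classes)
             for c in range(1, n_classes + 1)}
    cpt = {}
    for g in range(n_genes):
        counts = Counter((s[-1], s[g]) for s in samples)
        for c in range(1, n_classes + 1):
            for v in range(1, n_values + 1):
                cpt[(g, c, v)] = ((counts[(c, v)] + alpha)
                                  / (class_counts[c] + alpha * n_values))
    return prior, cpt


def classify(prior, cpt, genes):
    """Return the most probable class, or None when the top score is tied."""
    scores = {c: log(p) + sum(log(cpt[(g, c, v)]) for g, v in enumerate(genes))
              for c, p in prior.items()}
    best = max(scores.values())
    winners = [c for c, s in scores.items() if s == best]
    return winners[0] if len(winners) == 1 else None


# Hypothetical training data: gene 0 is "high" (2) in class 1, "low" (1) in class 2.
training = [[2, 2, 1], [2, 1, 1], [1, 1, 2], [1, 2, 2]]
prior, cpt = learn_naive_params(training)
print(classify(prior, cpt, [2, 2]))  # 1
print(classify(prior, cpt, [1, 1]))  # 2
```

Log-probabilities are summed rather than multiplying raw probabilities, because a product over 161 gene variables can underflow. A return value of None corresponds to the "undetermined" outcome used in the results tables.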

Single Set Cross Validation

The next concern is how to test the usability of Bayesian Network classification. A number of experiments were performed in order to validate the use of Bayesian Network learning techniques.

Firstly, in order to validate the data, each data set is used independently to check the accuracy of classification. The method chosen for this is ten-fold cross-validation (Kohavi, 1995). This methodology involves using a portion of the data set to train the Bayesian Network model, and then using the rest of the data for inference, to validate whether the learnt parameters are able to accurately infer the class variable. The data set is split into ten subsets, or folds (the number varies with the number of folds chosen), and nine of the folds are used for parameter learning on the Bayesian Network structure. The remaining fold, not used for training, is used for validation: the class variable is left out when performing the inference, and the estimated value is then checked against the actual value. This is repeated for all ten folds, so that every sample in the data set is classified and checked against its actual value. The results are given in the tables below.

The following experiments were performed five times on each of the data subsets, accumulating the total number of correct, incorrect and undetermined results. An undetermined result occurs when the probability of the classification is tied between two or more values. The results show the average percentage of correct, incorrect and undetermined classifications over the five iterations of testing. The experiment was performed five times for each data set because the fold selection differs on each iteration, and a single run could give an irregular outcome if the folds were not well split. The folds are chosen randomly by shuffling the data samples before splitting them. The shuffling method used is not a built-in MATLAB function; it was obtained through the MATLAB code repository and was written by Silva (2008).
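The cross-validation procedure can be sketched as below. This Python illustration is not the thesis program: the function names are hypothetical, the shuffle uses Python's standard library rather than the routine by Silva (2008), and a trivial majority-class model stands in for the Bayesian Network so that the example is self-contained.

```python
import random
from collections import Counter


def k_fold_indices(n_samples, k=10, seed=None):
    """Shuffle the sample indices and deal them into k folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(samples, train_fn, predict_fn, k=10, seed=None):
    """Tally correct, incorrect and undetermined results over all folds.

    predict_fn returns a class code, or None for a tied (undetermined) result.
    """
    tally = {"correct": 0, "incorrect": 0, "undetermined": 0}
    for held_out in k_fold_indices(len(samples), k, seed):
        held = set(held_out)
        model = train_fn([s for i, s in enumerate(samples) if i not in held])
        for i in held_out:
            *genes, actual = samples[i]
            guess = predict_fn(model, genes)
            if guess is None:
                tally["undetermined"] += 1
            elif guess == actual:
                tally["correct"] += 1
            else:
                tally["incorrect"] += 1
    return tally


# Stand-in model (an assumption): always predict the majority training class.
def train_majority(train):
    return Counter(s[-1] for s in train).most_common(1)[0][0]


def predict_majority(model, genes):
    return model


# Hypothetical data: 8 samples of class 1 and 2 samples of class 2.
samples = [[1, 1]] * 8 + [[2, 2]] * 2
print(cross_validate(samples, train_majority, predict_majority, k=5, seed=0))
```

Repeating the call with different seeds corresponds to the five repetitions described above, each with a different fold selection.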

Data Set Format    Correct    Incorrect    Undetermined
161 variables      91.67%     8.33%        0.00%
100 variables      92.44%     7.56%        0.00%
39 variables       96.15%     3.85%        0.00%

Table 1: Results for Harvard data set (156 samples)

Data Set Format    Correct    Incorrect    Undetermined
161 variables      92.50%     7.50%        0.00%
100 variables      93.13%     6.87%        0.00%
39 variables       98.96%     1.04%        0.00%

Table 2: Results for Michigan data set (96 samples)

The results are very positive for both data sets across all variations of subsets. The proportion of correct classifications is above 90% in every case, and the intersection subset (39 variables) gives the highest result for both data sets. This would indicate that the intersection of features is the most accurate for training, which is contrary to the findings of Li (2009). However, in this experiment the test data came from the same data set as the training data, so there would be no discrepancy between different microarray experiments.

Therefore next on the list of experiments is to apply the inference program to test one data set using another data set as the training data.

Single Set Training

The next step in validation is to use one data set in order to perform inference on another data set. In Li’s (2009) paper, validation was performed using the Harvard and Michigan data sets in order to perform classification on the Stanford data set.

The data has already been prepared in the correct format for this task, with the gene variable “columns” aligned. The program is therefore only slightly modified, so that the training data consists of all samples from one data set and the classification inference is performed on another data set.

The results from this test can be compared to the results from Li’s paper in order to compare the accuracy of Decision Tree based techniques and Bayesian Network based techniques.

For testing purposes, the same process was also performed on these data sets using WEKA (Hall et al, 2009), the program that Li used for the decision tree based methods. This was to validate the created program against another program of similar intent. WEKA contains functionality to perform naive Bayesian classification, but does not have functionality for structure-learned Bayesian Networks.

The results of the experiments are shown below. They show the percentage of correct classifications for three different techniques, with each of the two data sets, Harvard and Michigan, used as training data in order to perform inference on Stanford. The decision tree results are taken from the article by Li (2009).

Training Set    WEKA – Decision Tree    WEKA – Bayes Net    BNT – Bayes Net
Harvard 161     85.4%                   95.65%              89.13%
Michigan 161    87.0%                   93.47%              89.13%

Table 3: Results of Harvard and Michigan training on Stanford, using 161 gene variables (Decision Tree results gathered from Li (2009))

Training Set    WEKA – Decision Tree    WEKA – Bayes Net    BNT – Bayes Net
Harvard 100     76.1%                   93.47%              89.13%
Michigan 100    73.9%                   93.47%              89.13%

Table 4: Results of Harvard and Michigan training on Stanford, using 100 gene variables (Decision Tree results gathered from Li (2009))

Training Set    WEKA – Decision Tree    WEKA – Bayes Net    BNT – Bayes Net
Harvard 39      76.1%                   89.13%              91.30%
Michigan 39     73.9%                   86.96%              89.13%

Table 5: Results of Harvard and Michigan training on Stanford, using 39 gene variables (Decision Tree results gathered from Li (2009))

It can be seen here that the accuracy of Bayesian Network inference is generally higher than that of decision trees, both in the MATLAB program and with the built-in WEKA function. However, the results from WEKA are generally higher than those obtained in MATLAB.

It is also noted that the results of the 161 gene variable experiment are generally higher than those of the 100 and 39 gene variable experiments, which reinforces Li’s conclusion that the union is a better source of training data, for both decision tree and Bayesian Network inference methods.

Integrated Data Set Training

One of the largest obstacles in working with gene microarray data is that there are very few samples per experiment, which limits the training set for any given classification task. Data integration aims to overcome this obstacle by taking data from two separate experiments and treating it as a single data set. This effectively increases the number of samples that can be used for training; however, due to the nature of microarray experiments, the technique is not guaranteed to work.

Even though a Microarray experiment may be examining the same genes on the same type of samples, such as the data sets that this thesis is utilising, as discussed the experiments may use completely different equipment and acquire the data in completely different ways. Difference in techniques and technology can easily result in the data sets being completely incompatible with each other.

In order to apply this technique in the MATLAB program, the Harvard and Michigan data sets were combined using the previously formatted data. This was a simple task of taking the corresponding Harvard and Michigan data sets and appending the data from one file to the end of the other. This resulted in two new files, one representing the combined data of Harvard and Michigan with 161 gene variables, and one representing the combined data of Harvard and Michigan with 39 gene variables.

Training Set              WEKA – Decision Tree    WEKA – Bayes Net    BNT – Bayes Net
Harvard & Michigan 161    95.6%                   89.13%              89.13%
Harvard & Michigan 39     91.3%                   97.83%              91.30%

Table 6: Results of Harvard and Michigan integrated, training on Stanford, using both 39 and 161 gene variables (Decision Tree results gathered from Li (2009))

The results for this experiment were poor for Bayesian Network techniques. Rather than improving the result for the union data set, integration made it worse, while the result for the intersection data set was higher. This directly contradicts the results found by Li using decision tree techniques.

Structure Learning Classification

The second part of this thesis attempts to apply structure learning techniques on top of the methods already used with the naive Bayesian structure. By applying structure learning, the assumption imposed by the naive Bayesian structure, that the gene variables are not correlated with each other, is removed.

The first experiment will be to re-apply the strategy of using a singular data set as a training set to test with the Stanford data set. This is equivalent to the single set training, only there will be a structure learning method applied. The results are shown below.

The structure learning algorithm used is the K2 algorithm, a heuristic algorithm (Cooper & Herskovits, 1992). K2 requires a fixed ordering of the nodes, and a node may not have a parent that appears later in the ordering than the node itself. This constrains the possible graph structures, allowing the algorithm to complete in a reasonable amount of time. The ordering given to the algorithm in the program is sequential: the class variable comes first, followed by the gene variables in the order in which they appear in the data sets. This is not an ideal ordering, but it is used for initial testing.
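To make the role of the ordering concrete, the following is a minimal Python sketch of K2-style greedy search using the Cooper and Herskovits score. It is an illustration only and is not the toolbox implementation used in the thesis program; the function names, the `max_parents` limit and the small data set are assumptions.

```python
from collections import Counter
from math import lgamma


def k2_log_score(data, child, parents, ns):
    """Log of the Cooper-Herskovits score of `child` given `parents`.

    data: samples as lists of 1-based codes; ns[i]: number of states of variable i.
    """
    r = ns[child]
    n_ij = Counter(tuple(s[p] for p in parents) for s in data)
    n_ijk = Counter((tuple(s[p] for p in parents), s[child]) for s in data)
    score = sum(lgamma(r) - lgamma(n + r) for n in n_ij.values())
    return score + sum(lgamma(n + 1) for n in n_ijk.values())


def k2(data, order, ns, max_parents=2):
    """Greedily add parents to each node, drawn only from earlier nodes in `order`."""
    parents = {v: [] for v in order}
    for pos, child in enumerate(order):
        best = k2_log_score(data, child, parents[child], ns)
        candidates = set(order[:pos])
        while candidates and len(parents[child]) < max_parents:
            top_score, top = max(
                (k2_log_score(data, child, parents[child] + [c], ns), c)
                for c in candidates)
            if top_score <= best:
                break  # no candidate improves the score
            parents[child].append(top)
            candidates.remove(top)
            best = top_score
    return parents


# Hypothetical data: variable 0 is the class, variable 1 copies it,
# and variable 2 is unrelated to both.
data = [[1, 1, 1], [1, 1, 2], [1, 1, 1], [1, 1, 2],
        [2, 2, 1], [2, 2, 2], [2, 2, 1], [2, 2, 2]]
print(k2(data, order=[0, 1, 2], ns=[2, 2, 2]))
```

In this example the search links variable 1 to the class and leaves variable 2 without parents. Because candidates are restricted to earlier positions in the ordering, placing the class variable first means it can become a parent of any gene but can never receive one.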

Data Set Format    Correct    Incorrect    Undetermined
161 variables      0.00%      0.00%        100.0%
100 variables      2.17%      0.0%         97.83%
39 variables       21.74%     2.17%        76.09%

Table 7: Results of Harvard training on Stanford utilising K2 learning, using 161, 100 and 39 gene variables

Data Set Format    Correct    Incorrect    Undetermined
161 variables      0.00%      0.00%        100.0%
100 variables      0.00%      0.00%        100.0%
39 variables       2.17%      0.00%        97.83%

Table 8: Results of Michigan training on Stanford utilising K2 learning, using 161, 100 and 39 gene variables

It can be seen from these results that the MATLAB program is unable to process the data properly using the structure learning method, and that the more gene variables there are, the less it is able to perform proper inference. After much debugging, the cause seems to be related to the parameter learning capabilities of the Bayesian Network Toolbox; however, time constraints made it impossible to pursue this further.

Conclusion

Bayesian Networks show substantial promise in the domain of classification for gene data. By utilising data sets used in previous work, it has been shown that Bayesian Network classification techniques are viable and are as good as or better than decision tree methods, giving generally high classification accuracy. The results for the integrated data, which were the reverse of those obtained with decision trees, were interesting, and may indicate that using the intersection of two data sets is the more accurate method for Bayesian Network classification.

Although the attempt in this thesis to test structure learning for Bayesian Network classification was unsuccessful, structure learning still holds a lot of promise for extending classification techniques.

Future Work

There is still much work to be done in the field of integration of data sets. It is still a young subject and there are still possibilities to be explored. I believe that structure learning will be a necessity, as it is highly unlikely that genes are completely independent of other genes, and finding those relationships will be a major step toward further understanding our genetics and further interpreting gene microarray data.

Another direction that could be explored is the problem of missing values and their effects on classification. In this thesis the problem was not addressed, as there were no genuine missing values to analyse; however, it is a major concern, as missing values are one of the inherent challenges of working with microarray data.

References

Beer, D, Kardia, S, Huang, C, Giordano, T, Levin, A, Misek, D, Lin, L, Chen, G, Gharib, T & Thomas, D 2002, 'Gene-expression profiles predict survival of patients with lung adenocarcinoma', Nature Medicine, vol. 8, no. 8, pp. 816-824.

Bhattacharjee, A, Richards, W, Staunton, J, Li, C, Monti, S, Vasa, P, Ladd, C, Beheshti, J, Bueno, R & Gillette, M 2001, 'Classification of human lung carcinomas by mRNA expression profiling reveals distinct adenocarcinoma subclasses', Proceedings of the National Academy of Sciences of the United States of America, vol. 98, no. 24, p. 13790.

Brazma, A, Hingamp, P, Quackenbush, J, Sherlock, G, Spellman, P, Stoeckert, C,

Aach, J, Ansorge, W, Ball, C & Causton, H 2001, 'Minimum information about a microarray experiment (MIAME)—toward standards for microarray data', Nature genetics, vol. 29, pp. 365-371.

Brazma, A, Parkinson, H, Sarkans, U, Shojatalab, M, Vilo, J, Abeygunawardena, N, Holloway, E, Kapushesky, M, Kemmeren, P & Lara, G 2003, 'ArrayExpress--a public repository for microarray gene expression data at the EBI', Nucleic Acids Research, vol. 31, no. 1, p. 68.

Chen, X, Anantha, G & Wang, X 2006, 'An effective structure learning method for constructing gene networks', Bioinformatics, vol. 22, no. 11, pp. 1367-1374.

Cheng, J & Greiner, R 1999, 'Comparing Bayesian network classifiers'.

Cheng, J & Greiner, R 2001, 'Learning Bayesian belief network classifiers: Algorithms and system', Lecture Notes in Computer Science, vol. 2056, pp. 141-151.

Chickering, D 1996, 'Learning Bayesian networks is NP-complete', Learning from data: Artificial intelligence and statistics v, pp. 121–130.

Cooper, G & Herskovits, E 1992, 'A Bayesian method for the induction of probabilistic networks from data', Machine learning, vol. 9, no. 4, pp. 309-347.

Edgar, R, Domrachev, M & Lash, A 2002, 'Gene Expression Omnibus: NCBI gene expression and hybridization array data repository', Nucleic acids research, vol. 30, no. 1, p. 207.

Garber, M, Troyanskaya, O, Schluens, K, Petersen, S, Thaesler, Z, Pacyna-Gengelbach, M, van de Rijn, M, Rosen, G, Perou, C & Whyte, R 2001, 'Diversity of gene expression in adenocarcinoma of the lung', Proceedings of the National Academy of Sciences, vol. 98, no. 24, p. 13784.

Gebert, J, Radde, N & Weber, G 2007, 'Modeling gene regulatory networks with piecewise linear differential equations', European Journal of Operational Research, vol. 181, no. 3, pp. 1148-1165.

Geiger, D & Heckerman, D 1996, 'Knowledge representation and inference in similarity networks and Bayesian multinets', Artificial intelligence, vol. 82, no. 1-2, pp. 45-74.

Golub, TR & Slonim, DK 1999, 'Molecular Classification of Cancer: Class Discovery and Class Prediction by Gene Expression', Science, vol. 286, no. 5439, p. 531.

Granjeaud, S, Bertucci, F & Jordan, B 1999, 'Expression profiling: DNA arrays in many guises', Bioessays, vol. 21, no. 9, pp. 781-790.

Hall, M, Frank, E, Holmes, G, Pfahringer, B, Reutemann, P & Witten, IH 2009, 'The WEKA Data Mining Software: An Update', SIGKDD Explorations, vol. 11, no. 1.

Heckerman, D 1989, 'A tractable inference algorithm for diagnosing multiple diseases'.

Heckerman, D 1998, 'A tutorial on learning with Bayesian networks', NATO ASI Series D Behavioural and Social Sciences, vol. 89, pp. 301-354.

Helman, P, Veroff, R, Atlas, S & Willman, C 2004, 'A Bayesian network classification methodology for gene expression data', Journal of Computational Biology, vol. 11, no. 4, pp. 581-615.

Kohavi, R 1995, 'A study of cross-validation and bootstrap for accuracy estimation and model selection'.

Knox, L & Evans, S 2001, Biology, 2nd edn, McGraw-Hill Book Company, Sydney.

Langley, P, Iba, W & Thompson, K 1992, 'An analysis of Bayesian classifiers'.

Leray, P & Francois, O 2004, 'BNT structure learning package: Documentation and experiments', Laboratoire PSI, Tech. Rep.

Li, Y, Li, J & Liu, B 2009, 'A Simple yet Effective Data Integration Approach for Tree based Microarray Data Classification' (WIP), University of South Australia.

Mathworks 2009, MATLAB 2008b, http://www.mathworks.com.au/products/matlab

Murphy, K 2001, 'The Bayes net toolbox for MATLAB', Computing science and statistics.

Numata, K, Imoto, S & Miyano, S 2007, 'A Structure Learning Algorithm for Inference of Gene Networks from Microarray Gene Expression Data Using Bayesian Networks', paper presented at the Bioinformatics and Bioengineering, 2007. BIBE 2007. Proceedings of the 7th IEEE International Conference on.

Pena, JM, Bjorkegren, J & Tegner, J 2005, 'Growing Bayesian network models of gene networks from seed genes', Bioinformatics, vol. 21, no. suppl_2, September 1, 2005, pp. ii224-229.

Russo, G, Zegar, C & Giordano, A 2003, 'Advantages and limitations of microarray technology in human cancer', Oncogene, vol. 22, no. 42, pp. 6497-6507.

Shalon, D, Smith, SJ & Brown, PO 1996, 'A DNA microarray system for analyzing complex DNA samples using two-color fluorescent probe hybridization', Genome Res., vol. 6, no. 7, July 1, 1996, pp. 639-645.

Shmulevich, I, Dougherty, E & Zhang, W 2002, 'From Boolean to probabilistic Boolean networks as models of genetic regulatory networks', Proceedings of the IEEE, vol. 90, no. 11, pp. 1778-1792.

Silva, S 2008, shuffle_orderby, <http://www.mathworks.com/matlabcentral/fileexchange/3029-shuffleorderby>.

Simon, R, Radmacher, MD, Dobbin, K & McShane, LM 2003, 'Pitfalls in the Use of DNA Microarray Data for Diagnostic and Prognostic Classification', J. Natl. Cancer Inst., vol. 95, no. 1, January 1, 2003, pp. 14-18.

Spirtes, P, Glymour, C, Scheines, R, Kauffman, S, Aimale, V & Wimberly, F 2001, 'Constructing Bayesian network models of gene expression networks from microarray data'.

Velculescu, V, Zhang, L, Vogelstein, B & Kinzler, K 1995, 'Serial analysis of gene expression', Science, vol. 270, no. 5235, p. 484.

Wang, M, Chen, Z & Cloutier, S 2007, 'A hybrid Bayesian network learning method for constructing gene networks', Computational Biology and Chemistry, vol. 31, no. 5-6, pp. 361-372.

Xu, R, Wunsch, D & Frank, R 2007, 'Inference of genetic regulatory networks with recurrent neural network models using particle swarm optimization', IEEE/ACM Transactions on Computational Biology and Bioinformatics, vol. 4, no. 4, pp. 681-692.

Yavari, F, Towhidkhah, F, Gharibzadeh, S, Khanteymoori, AR & Homayounpour, MM 2008, 'Modeling Large-Scale Gene Regulatory Networks using Gene Ontology-Based Clustering and Dynamic Bayesian Networks', paper presented at the Bioinformatics and Biomedical Engineering, 2008. ICBBE 2008. The 2nd International Conference on.

Zainudin, S & Deris, S 2008, 'Combining Clustering and Bayesian Network for Gene Network Inference', paper presented at the Intelligent Systems Design and Applications, 2008. ISDA '08. Eighth International Conference on.

Appendix A – Naive Classification (Cross-Validation)

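function totalcorrect = classifynaive(name,class_index,num_folds)
% Naive Bayes classification evaluated with num_folds-fold cross-validation.
data = load(name);
data = transpose(data);           % rows = variables, columns = instances
[data t] = shuffle(data, 2);      % shuffle along dimension 2 (the instances)
Ndim = size(data);
Nvar = Ndim(1);
Ninst = Ndim(2);
Fold = num_folds;
FoldSize = floor(Ninst/Fold);
FoldRem = mod(Ninst, Fold);       % the first FoldRem folds hold one extra instance

% Node sizes: number of discrete states per variable (at least 2)
ns = ones(1,Nvar);
for n=1:Nvar
    ns(n) = max(data(n,:));
    if (ns(n) < 2 || ns(n) == Inf || isnan(ns(n)))
        ns(n) = 2;
    end
end

% Naive structure: the class node is the only parent of every other node
dag = zeros(Nvar,Nvar);
for j=1:Nvar
    if (j ~= class_index)
        dag(class_index,j) = 1;
    end
end

% Distribute the instances over the folds
datafolds = zeros(Nvar,FoldSize+1,Fold);
c_index = 1;
for j=1:Fold
    if (j <= FoldRem)
        for k=1:FoldSize+1
            datafolds(:,k,j) = data(:,c_index);
            c_index = c_index + 1;
        end
    else
        for k=1:FoldSize
            datafolds(:,k,j) = data(:,c_index);
            c_index = c_index + 1;
        end
    end
end

totalcorrect = 0;
totalincorrect = 0;
totalundetermined = 0;
errorMargin = 0;                  % summed posterior of wrongly predicted classes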

for j=1:Fold
    correct = 0;
    incorrect = 0;
    undetermined = 0;

    % Training data: every fold except fold j
    trainingdata = zeros(Nvar,0);
    for k=1:Fold
        if (k ~= j)
            add = datafolds(:,:,k);
            if (k > FoldRem)
                add(:,FoldSize+1) = [];   % drop the unused padding column
            end
            trainingdata = [trainingdata add];
        end
    end

    % Learn the conditional probability tables from the training folds
    temp = mk_bnet(dag, ns);
    for i=1:Nvar
        temp.CPD{i} = tabular_CPD(temp,i);
    end
    bnet = learn_params(temp, trainingdata);
    CPT = cell(1,Nvar);
    for i=1:Nvar
        s=struct(bnet.CPD{i});
        CPT{i}=s.CPT;
    end

    % Test on fold j
    testdata = datafolds(:,:,j);
    nloop = FoldSize;
    if (j <= FoldRem)
        nloop = nloop + 1;
    end
    for tests=1:nloop
        engine = jtree_inf_engine(bnet);
        % Evidence: every observed variable except the class; NaN = missing
        evidence = cell(1,Nvar);
        for testno=1:Nvar
            if (testno ~= class_index)
                ev = testdata(testno, tests);
                if (isnan(ev))
                    evidence{testno} = [];
                else
                    evidence{testno} = ev;
                end
            end
        end

        engine = enter_evidence(engine, evidence);
        m = marginal_nodes(engine, class_index);
        inference = find(m.T == max(m.T));   % most probable class state(s)
        s_inf = size(inference,1);
        if (s_inf > 1)
            undetermined = undetermined + 1; % tie between class states
        elseif (testdata(class_index,tests) == inference)
            correct = correct + 1;
        else
            errorMargin = errorMargin + m.T(inference);
            incorrect = incorrect + 1;
        end
        clear engine ;
        clear evidence ;
        clear m ;
    end
    totalundetermined = totalundetermined + undetermined;
    totalcorrect = totalcorrect + correct;
    totalincorrect = totalincorrect + incorrect;
    clear trainingdata ;
end
end

Appendix B – Naive Classification (Training Set)

function totalcorrect = classifynaivet(name,class_index,trainname)
% Naive Bayes classification: train on trainname, test on name.
data = load(name);
data = transpose(data);           % rows = variables, columns = instances
Ndim = size(data);
Nvar = Ndim(1);
Ninst = Ndim(2);

% Node sizes: number of discrete states per variable (at least 2)
ns = ones(1,Nvar);
for n=1:Nvar
    ns(n) = max(data(n,:));
    if (ns(n) < 2 || ns(n) == Inf || isnan(ns(n)))
        ns(n) = 2;
    end
end

% Naive structure: the class node is the only parent of every other node
dag = zeros(Nvar,Nvar);
for j=1:Nvar
    if (j ~= class_index)
        dag(class_index,j) = 1;
    end
end

totalcorrect = 0;
totalincorrect = 0;
totalundetermined = 0;
trainingdata = load(trainname);
trainingdata = transpose(trainingdata);

% Learn the conditional probability tables from the training set
temp = mk_bnet(dag, ns);
for i=1:Nvar
    temp.CPD{i} = tabular_CPD(temp,i);
end
bnet = learn_params(temp, trainingdata);

for tests=1:Ninst
    engine = jtree_inf_engine(bnet);
    % Evidence: every observed variable except the class; NaN = missing
    evidence = cell(1,Nvar);
    for testno=1:Nvar
        if (testno ~= class_index)
            ev = data(testno, tests);
            if (isnan(ev))
                evidence{testno} = [];
            else
                evidence{testno} = ev;
            end
        end

    end
    engine = enter_evidence(engine, evidence);
    m = marginal_nodes(engine, class_index);
    inference = find(m.T == max(m.T));   % most probable class state(s)
    s_inf = size(inference,1);
    if (s_inf > 1)
        totalundetermined = totalundetermined + 1;   % tie between class states
    elseif (data(class_index,tests) == inference)
        totalcorrect = totalcorrect + 1;
    else
        totalincorrect = totalincorrect + 1;
    end
    clear engine ;
    clear evidence ;
    clear m ;
end
end

Appendix C – K2 Classification (Training Set)

function totalcorrect = classifyk2t(name,class_index,trainname)
% K2 structure learning and classification: train on trainname, test on name.
data = load(name);
data = transpose(data);           % rows = variables, columns = instances
Ndim = size(data);
Nvar = Ndim(1);
Ninst = Ndim(2);

% Node sizes: number of discrete states per variable (at least 2)
ns = ones(1,Nvar);
for n=1:Nvar
    ns(n) = max(data(n,:));
    if (ns(n) < 2 || ns(n) == Inf || isnan(ns(n)))
        ns(n) = 2;
    end
end

% K2 node ordering: the class node first, then the others in index order
order = zeros(1,Nvar);
order(1) = class_index;
found_class = 0;
if (class_index == 1)
    found_class = 1;
end
for i=2:Nvar
    if (found_class == 1)
        order(i) = i;
    else
        order(i) = i-1;
    end
    if (i == class_index)
        found_class = 1;
    end
end

totalcorrect = 0;
totalincorrect = 0;
totalundetermined = 0;
trainingdata = load(trainname);
trainingdata = transpose(trainingdata);

% Learn the network structure from the training set with K2
dag = learn_struct_K2(trainingdata,ns,order);
draw_graph(dag);

% Learn the conditional probability tables for the learned structure
temp = mk_bnet(dag, ns);
for i=1:Nvar
    temp.CPD{i} = tabular_CPD(temp,i);
end
bnet = learn_params(temp, trainingdata);
CPT = cell(1,Nvar);
for i=1:Nvar
    s=struct(bnet.CPD{i});
    CPT{i}=s.CPT;
end

for tests=1:Ninst
    engine = jtree_inf_engine(bnet);
    % Evidence: every observed variable except the class; NaN = missing
    evidence = cell(1,Nvar);
    for testno=1:Nvar
        if (testno ~= class_index)
            ev = data(testno, tests);
            if (isnan(ev))
                evidence{testno} = [];
            else
                evidence{testno} = ev;
            end
        end
    end
    engine = enter_evidence(engine, evidence);
    m = marginal_nodes(engine, class_index);