A Practical Guide to the Use of Selected Multivariate Statistics

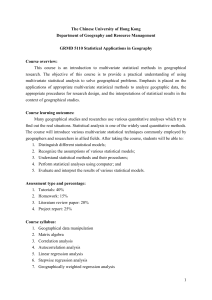
