Automatic Classification of Latin Music

Automatic Classification of Latin Music

Some Experiments on Musical Genre Classification

Miguel Alexandre Gaspar Lopes

Dissertation oriented by Fabien Gouyon

Developed at INESC Porto

Faculdade de Engenharia da Universidade do Porto

Departamento de Engenharia Electrotécnica e de Computadores

Rua Roberto Frias, s/n, 4200-465 Porto, Portugal

July 2009

Automatic Classification of Latin Music

Some Experiments on Musical Genre Classification

Miguel Alexandre Gaspar Lopes

Estudante Finalista do Mestrado em Engenharia Electrotécnica e

Computadores pela Faculdade de Engenharia da Universidade do

Porto

Dissertação realizada no 2º semestre do 5º ano do

Mestrado Integrado em Engenharia Electrotécnica e

Computadores (Major Telecomunicações) da Faculdade de

Engenharia da Universidade do Porto

Faculdade de Engenharia da Universidade do Porto

Departamento de Engenharia Electrotécnica e de Computadores

Rua Roberto Frias, s/n, 4200-465 Porto, Portugal

July 2009

Summary

The automatic information retrieval from music signals is a developing area with several possible applications. One of these applications is the automatic classification of music into genres. In this project, a description of the current methodology to perform such a task is done, with references to the state of the art in this area. Music is composed of several aspects such as the melody, the rhythm or the timbre. Timbre relates to the overall quality of sound. In the work developed in this project, an analysis of timbre and its ability to represent musical genres is done. Experiments are performed that tackle the problem of the automatic classification of musical genres using the following general procedure: characteristics related to timbre are extracted from music signals; these values are used to create mathematical models representing genres; finally these models are used to classify unclassified musical samples and their classification performance is evaluated.

There are several ways to perform the procedure described above: related to which characteristics from the musical signals are extracted or which algorithms (designated by classifiers) are used to create the genre models.

In this project there is a comparison of the classification performance obtained with several classification algorithms and with the use of different characteristics of the musical datasets being classified. One dataset characteristic that was evaluated was the use of an artist filter and its influence in the classification performance. An artist filter is a mechanism that prevents that songs from a certain artist are used to test the genre models created with the songs from that same artist (among other songs from other artists, obviously). It was concluded that the use of the artist filter reduces the classification performance, and should be used in classification experiments of this kind, so that the results obtained are as representative as possible.

Most experiments were carried out using the Latin Music Database, a musical collection composed of 10 different Latin music genres. Experiments were also made using a collection of 10 more general musical genres (such as “classical” or “rock”).

An assessment of the adequacy of the analysis of timbre to identify the genres of the Latin

Music Database is done, along with a computation of the timbral similarities between genres.

Abstract

Timbre is a quality of music that might be hard to define: it is related to the overall sound of a musical piece and is defined by its instruments and production characteristics – it is the quality that distinguishes the sound of two different instruments playing the same musical note, in the same volume. The musical timbre is certainly a defining quality in the characterization of a musical genre. The automatic classification of musical signals into genres through the analysis of timbre is what was attempted in the development of this work. The majority of the realized experiments were done with the Latin Music Database, composed of 10 exotic musical genres, some of them likely to be unknown to the reader. An analysis of the timbre characteristics of these genres was done, with results interesting to look at and to compare with a personal evaluation of these musical genres. Experiments with different classification algorithms were made and the different performances achieved compared. The influence in the classification performance of several factors was assessed: related to the size and nature of the datasets used to create and test the genre models.

Acknowledgements

I would like to thank Fabien Gouyon, that oriented this dissertation and guided me through the development of this work in the most helpful and encouraging way, Luís Gustavo Martins for the explanations on

Marsyas, Luís Paulo Reis for providing the template on which this document was written, INESC Porto for the realization of this project in the most pleasant environment and finally, to my family and friends for the sharing of moments of leisure and relaxation, that balanced the moments of work, in a combination that made the last few months an experience of personal well-being.

Index

1.

Introduction 1

1.1

Context ...........................................................................................................................1

1.2

Motivation.......................................................................................................................2

1.3

Goals ...............................................................................................................................2

1.4

Structure of the document ................................................................................................3

2.

Automatic musical genre classification 5

2.1

Defining musical genres ..................................................................................................5

2.2

The supervised and unsupervised approach ......................................................................7

2.3

Feature extraction ............................................................................................................8

2.4

Melody, harmony, rhythm and timbre ..............................................................................9

2.5

The “bag of frames” approach ....................................................................................... 10

2.6

Analysis and texture windows ....................................................................................... 12

3.

Experimental methodology 15

3.1

Feature extraction .......................................................................................................... 15

3.1.1

The Mel scale and the MFCC .................................................................................. 16

3.1.2

Marsyas and feature extraction ................................................................................ 17

3.1.3

Description of the features used ............................................................................... 19

3.1.4

The feature files ...................................................................................................... 19

3.2

Classification................................................................................................................. 20

3.2.1

Platforms used - Weka and Matlab .......................................................................... 21

3.2.2

Instance classification with Weka classifiers ............................................................ 23

3.2.2.1

Naive Bayes ...................................................................................................... 23

3.2.2.2

Support Vector Machines .................................................................................. 24

3.2.2.3

K-Nearest Neighbours....................................................................................... 25

3.2.2.4

J48 .................................................................................................................... 25

3.2.3

Song classification with Gaussian Mixture Models .................................................. 26

3.3

Datasets used ................................................................................................................. 27

3.3.1

The George Tzanetakis collection ............................................................................ 27

3.3.2

The Latin Music Database ....................................................................................... 27

3.3.2.1

Axé ................................................................................................................... 28

3.3.2.2

Bachata ............................................................................................................. 28

3.3.2.3

Bolero ............................................................................................................... 29

XII INDEX

3.3.2.4

Forró ................................................................................................................ 29

3.3.2.5

Gaúcha ............................................................................................................. 29

3.3.2.6

Merengue ......................................................................................................... 30

3.3.2.7

Pagode .............................................................................................................. 30

3.3.2.8

Salsa ................................................................................................................. 31

3.3.2.9

Sertaneja ........................................................................................................... 31

3.3.2.10

Tango ............................................................................................................... 31

3.4

Experimental setup ........................................................................................................ 32

3.4.1

Testing, training and validation datasets .................................................................. 32

3.4.2

Analysis measures ................................................................................................... 33

3.4.3

The “artist filter” ..................................................................................................... 34

4.

Results and analysis 37

4.1

Results .......................................................................................................................... 37

4.1.1

Results using the GTZAN collection ....................................................................... 37

4.1.2

Results using the LMD collection............................................................................ 38

4.2

Classifier comparison .................................................................................................... 40

4.3

On the influence of the artist filter ................................................................................. 40

4.4

On the influence of the dataset nature ............................................................................ 41

4.5

On the influence of the testing mode .............................................................................. 42

4.6

On the influence of the dataset size ................................................................................ 43

4.7

Analysis of confusion matrices and genre similarities .................................................... 45

4.8

Validation results .......................................................................................................... 59

4.9

Implementations in literature ......................................................................................... 61

65 5.

Conclusions

FIGURE LIST

Figure List

XIII

F IGURE 1: T EXTURE WINDOWS [16] ............................................................................................. 12

F IGURE 2: T HE M EL S CALE [22] .................................................................................................. 16

F IGURE 3: M ARSYAS O FFICIAL W EBPAGE ................................................................................... 18

F IGURE 4: W EKA : “R ESAMPLE ” ................................................................................................... 22

F IGURE 5: W EKA : C HOOSING A C LASSIFIER ................................................................................. 23

F IGURE 6: SVM: FRONTIER HYPER PLANES [29] .......................................................................... 25

F IGURE 7: THE BACHATA BAND “A VENTURA ”, THE CREATIVE TOUR DE FORCE BEHIND TIMELESS

CLASSICS SUCH AS “A MOR DE M ADRE ”, “A MOR B ONITO ” OR “E SO NO E S A MOR ” .............. 28

F IGURE 8: Z ECA P AGODINHO , THE AUTHOR OF “À GUA DA M INHA S EDE ”, THE MEMORABLE SONG

HE WROTE WHEN HE WAS THIRSTY ...................................................................................... 30

F IGURE 9: I NFLUENCE ON CLASSIFICATION OF THE TESTING / TRAINING PERCENTAGE ................... 42

F IGURE 10: S PLIT 90/10 V S 10 FOLD CROSS VALIDATION (GTZAN) ............................................. 43

F IGURE 11: C OMPARISON BETWEEN DIFFERENT DATASET SIZES (LMD, TRAINING SET )................ 44

F IGURE 12: C OMPARISON BETWEEN DIFFERENT DATASET SIZES (LMD, PERCENTAGE SPLIT )........ 44

F IGURE 13: C OMPARISON BETWEEN DIFFERENT DATASET SIZES (LMD, ARTIST FILTER ) ............... 45

F IGURE 14: C LASSIFICATION OF A XÉ SONGS ................................................................................ 46

F IGURE 15: C LASSIFICATION OF B ACHATA SONGS ....................................................................... 46

F IGURE 16: C LASSIFICATION OF B OLERO SONGS .......................................................................... 47

F IGURE 17: C LASSIFICATION OF F ORRÓ SONGS ............................................................................ 47

F IGURE 18: C LASSIFICATION OF G AÚCHA SONGS ......................................................................... 48

F IGURE 19: C LASSIFICATION OF M ERENGUE SONGS ..................................................................... 49

F IGURE 20: C LASSIFICATION OF P AGODE SONGS ......................................................................... 49

F IGURE 21: C LASSIFICATION OF S ALSA SONGS ............................................................................ 50

F IGURE 22: C LASSIFICATION OF S ERTANEJA SONGS ..................................................................... 51

F IGURE 23: C LASSIFICATION OF T ANGO SONGS ........................................................................... 51

F IGURE 24: D ISTANCE BETWEEN GENRES : A XÉ AND B ACHATA .................................................... 53

F IGURE 25: D ISTANCE BETWEEN GENRES : B OLERO AND F ORRÓ .................................................. 54

F IGURE 26: D ISTANCE BETWEEN GENRES : G AÚCHA AND M ERENGUE .......................................... 54

F IGURE 27: D ISTANCE BETWEEN GENRES : P AGODE AND S ALSA ................................................... 55

F IGURE 28: D ISTANCE BETWEEN GENRES : S ERTANEJA AND T ANGO ............................................. 56

TABLE LIST

Table List

XV

T ABLE 1: R ESULTS OBTAINED WITH THE GTZAN COLLECTION ................................................... 37

T ABLE 2: N UMBER OF INSTANCES USED IN THE GTZAN EXPERIMENTS ....................................... 37

T ABLE 3: R ESULTS WITH THE LMD, NO ARTIST FILTER ................................................................ 38

T ABLE 4: R ESULTS WITH THE LMD, NO ARTIST FILTER , RESAMPLE FIXED .................................... 38

T ABLE 5: R ESULTS WITH THE LMD WITH THE ARTIST FILTER ...................................................... 39

T ABLE 6: N UMBER OF INSTANCES USED IN THE LMD EXPERIMENTS ............................................ 39

T ABLE 7: C ONFUSION M ATRIX OBTAINED USING CLASSIFICATION WITH GMM ........................... 39

T ABLE 8: C LASSIFICATION PERFORMANCE BY CLASS USING GMM .............................................. 40

T ABLE 9: C LASSIFICATION PERFORMANCE COMPARISON ............................................................. 40

T ABLE 10: D ISTANCE BETWEEN GENRES GMM ........................................................................... 52

T ABLE 11: C ONFUSION M ATRIX OBTAINED USING CLASSIFICATION WITH KNN ........................... 57

T ABLE 12: C LASSIFICATION PERFORMANCE BY CLASS , USING KNN ............................................ 57

T ABLE 13: C OMPARISON BETWEEN TP AND FP R ATES OBTAINED WITH GMM AND KNN ............ 57

T ABLE 14: C LASSIFIERS PERFORMANCE ( VALIDATION DATASET ) ................................................. 59

T ABLE 15: C ONFUSION M ATRIX OBTAINED USING CLASSIFICATION WITH GMM ( VALIDATION

DATASET ) ........................................................................................................................... 60

T ABLE 16: C LASSIFICATION PERFORMANCE BY CLASS USING GMM ( VALIDATION DATASET ) ...... 60

GLOSSARY

Glossary

DFT – Discrete Fourier Transform

EM – Expectation-Maximization

EMD – Earth Mover’s Distance

FFT – Fast Fourier Transform

GMM – Gaussian Mixture Model

HMM – Hidden Markov Models

MFCC – Mel Frequency Cepstral Coefficients

MIR – Music Information Retrieval

RNN – Recurrent Neural Networks

SPR – Statistical Pattern Recognition

STFT – Short Time Fourier Transform

SVM – Support Vector Machines

XVII

Chapter 1

1.

Introduction

1.1

Context

The automatic information retrieval from music is a developing area with several possible applications: the automatic grouping of songs within genres, the calculation of song similarities, the description of the various components of music – such as the rhythm or timbre. And more ambitious goals can be foreseen: maybe an automatic labeling of music with subjective qualities? Quantifiers of an “emotional” level of the songs? Of a

“danceable potential”? There could be also automatic previsions about the commercial success of songs and records (which would reduce the staff employed by record labels responsible for the finding of new talents – considering some artists that are phenomenally advertised, one is left thinking if the loss is that great). Of course, if this is to happen, it won’t be in a near future, as the subject of the automatic musical information retrieval is just giving its first steps. The internet has become a huge platform for multimedia data sharing, where music plays a big role. Search engines are becoming more complex and descriptive. Mechanisms to automatically organize and label music in various ways, as complete and precise as possible, will surely be of great help in the future.

In this dissertation, experiments were made with the goal to correctly classify music into different genres. Two musical collections were used: one composed entirely of Latin

Music, distributed over ten different genres (“axé”, “bachata”, “bolero”, “forró”, gaúcha”,

“merengue”, “pagode”, “salsa”, “sertaneja” and “tango”), and the other composed of ten general musical genres (“blues”, “classical”, “country”, “disco”, “hip hop”, “jazz”,

“metal”, “pop”, “reggae” and “rock”). Separate experiments were conducted with these two different datasets.

Music can be characterized in four main dimensions: melody, harmony, rhythm and timbre. Melody, harmony and rhythm are temporal characteristics as they are related to progressions over time. Timbre, on the other hand, relates to the aspects of sound.

The classification experiments made in this dissertation only considered the musical characteristic of timbre – the goal here is the assessment of the quality of musical genre classification based only on an analysis of the timbre of music.

2 CHAPTER 1: INTRODUCTION

The approach used can be explained in the summarized way: values characterizing the timbre of the different genres were extracted and models that represented these extracted values (that characterize timbre) were automatically computed for each genre. These models were then tested, as they were used in an attempt to correctly classify into a genre new and unidentified values, characterizing timbre. The values used to create the model are the training values, and the values used to test the model are the testing values. These values were obtained with the computation of signal characteristics of time segments, or frames, of very small duration.

1.2

Motivation

The motivation behind this project can be identified as being of two different natures: a personal motivation, driven by my curiosity on the subject of this dissertation – what are the mechanisms behind the automatic genre classification of music? Or more generally, what are the mechanisms behind the automatic retrieval of information from sounds and music? What characteristics can be extracted from a specific song? How are these characteristics extracted? How can the extraction of characteristics from music be used to implement a system that will automatically classify new and unidentified music? What are the possibilities of such systems? What is the state of the art in this subject right now?

The other nature of the motivation behind the realization of this project has to with the possibility to contribute, even if in the smallest degree, to the body of work that is being built around the subject of automatic classification of music. It is a new and exciting area, of possibilities of great interest to anyone that finds pleasure in the listening or study of music.

A part of the work here developed is related to the study of the characteristics of timbre of ten different Latin music genres, and there is an attempt to compute similarities between these genres. The motivation behind this task was a personal curiosity towards the Latin musical genres, of which I knew little about and the possibility to contribute to a study of these musical genres, their timbral characteristics, and how well they are defined by them - in the degree set by the characteristics of the performed classification procedures.

1.3

Goals

The experiments described in this dissertation were designed with specific goals in mind.

These goals are the comparison of the performance obtained with different classification algorithms and the assessment of the influence on the classification performance of several factors, which are:

CHAPTER 1: INTRODUCTION 3

1) The nature of the datasets used in the classification procedures - a comparison is made between the classification performances obtained using the Latin music genres and the ten general genres.

2) The size of the datasets used – due to computational limitations, the datasets could not be used in their totality, therefore smaller samples of the datasets were used instead. A comparison was made between the results obtained with datasets of variable size.

3) The relation between the datasets used for training and for testing – the classification is performed with models representing genres; these models are created from the analysis of the songs belonging to that genres, in a designated

“training” phase. These models are then tested with a “testing” dataset (the results of these tests are the results used to assess the classification performance). The training and testing datasets can be the same, or can be different – defined after a larger dataset is split into two, creating the datasets. The ratio of this split can vary.

The influence of these characteristics in the classification performance was assessed.

4) The use of an artist filter in the definition of the training and testing datasets – as it was said above, the training and testing datasets can be created after a larger dataset is split in two. The split can be made in a random way or can be done respecting conditions. A condition was defined that no artist (or more precisely, its songs) can be present in both the training and testing datasets – this condition is the artist filter. If songs of a certain artist are contained in the training set, songs from that same artist cannot be contained in the testing set and vice-versa. The influence of the use of the artist filter in the classification results was assessed.

Another goal set is to compare the performance of the different Latin music genres and to compute genre similarities based on the characteristics of timbre.

1.4

Structure of the document

This dissertation is structured in five chapters, the first one being this introduction to the developed work.

The second chapter is composed of a more extensive introduction to the subject of automatic musical genre classification and a description of the approaches being taken in this area.

The third chapter is the description of the experimental methodology followed in the experiments reported in this dissertation: there is a description of the extraction of characteristics from the musical samples; of the algorithms used in the classification procedures; of the characteristics of the datasets used. Finally, there is a subchapter dedicated to the analysis of some experiments that are found in the literature available.

4 CHAPTER 1: INTRODUCTION

The fourth chapter is dedicated to the presentation and analysis of the results achieved in the performed experiences.

The last chapter contains the general conclusions of the developed work.

Chapter 2

2.

Automatic musical genre classification

2.1

Defining musical genres

The automatic identification of music is a task that can be of great use – the internet has become a huge and popular platform of multimedia data sharing and the trend is to become even bigger – the limits to what it can and will achieve are impossible to preview.

Music is available through search engines that tend to become more complex and sophisticated and these search mechanisms of the music available require descriptions as complete as possible.

In this context, mechanisms to automatically label music (or other multimedia content) in a digital format are obviously helpful. The manual labeling of music can be a hard and exhausting job if the quantities to be labeled are of great size. The manual labeling of a catalog of music of a few hundred thousand titles into several objective ( e.g.

rhythm) and subjective ( e.g.

mood) categories, takes about 30-man years – in other words, this task would require the work of 30 musicologists during one year [1].

The most obvious and immediate property of a piece of music might be its genre. If one tries to organize music into main groups, these groups are probably the big musical genres

– classical, jazz, pop/rock, world music, folk, blues or soul. It is natural that the field of science related to the automatic labeling of music would start with an attempt to label music with its genre. It is in this place where the subject of automatic labeling of music is right now – at the very beginning of its task. It is not hard to imagine that future developments on this area can lead to greater possibilities – for example, to automatically label music with subjective qualities such as the emotional effects caused in the listener.

Advances in this subject of computational music analysis can also lead to the automatic production of music and, just like machines now outperform humans in the playing of chess, maybe one day music-making will be a task reserved to machines (but no doubt that this idea seems far-fetched and I would be particularly curious to see how something like the soul of James Brown is recreated in an algorithm).

6 CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION

The subject of automatic information retrieval from music is commonly designated by

MIR (an acronym for Music Information Retrieval). Advances in this area are related with subjects such as content-based similarity retrieval (to compute similarities between songs), playlist generation, thumb nailing or fingerprinting [1].

The problem of genre definition is not trivial. There are obvious questions that come up when one tries to map songs into specific musical genres: for example, should this mapping be applied to individual songs, albums or artists? The fact is that a considerable proportion of artists change the characteristics of their music along their career or at some point venture into musical genres that are not the ones that would characterize the majority of their work. Many musicians even escape categorization, when their music is a big melting pot of different and distant influences. And when defining a song’s genre, should only the musical aspects of the song be taken into consideration? Or also socio-cultural aspects? The musical genres (or subgenres) “Britpop” and “Krautrock” (the word “Kraut” is used in English as a colloquial term for a German person) suggest a decisive geographical factor in its name, and “Baroque” designates the music made in a specific period of the history, the classical music made in Europe during the 17 th

and first part of the 18 th

century. It is important to note that there is not a general agreement over the definition and boundaries of musical genres. According to [2], if we consider three popular musical information websites (Allmusic, Amazon and Mp3), we see that there are differences in their definition of genres: Allmusic defines a total of 531 genres, Amazon

719 genres and Mp3 430 genres. Of these, only 70 genres were common to the three. The very nature of the concept of “musical genre” is something extremely flexible: new genres are created through expressions coined by musical critics that “caught fire” and got popular (“Post-rock”, popularized by the critic Simon Reynolds is an example), or through the merging or separation of existing genres.

These considerations are addressed in [3], where two different concepts of “musical genre” are defined: an intentional concept and an extensional concept. In the intentional concept, genre is a linguistic tool which is used to link cultural meanings to music. These meanings are shared inside a community. The example given is the “Britpop” genre, which serves to categorize the music of bands like the Beatles and carries with it references to the 60’s and its cultural relevance and context - things we are all more or less familiar with (it should be noted that the authors got it wrong: the common usage of the term “Britpop” refers to the music from a set of British bands of the 90’s, such as Blur or

Oasis, but the point is made). On the other hand, in the extensional concept, genre is a dimension, an objective characteristic of a music title, like its timbre or language of the lyrics.

The fact that these two concepts are many times incompatible is a problem in the attempt to achieve a consensual musical genre taxonomy.

CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION

2.2

The supervised and unsupervised approach

7

There are three general approaches to the task of automatic musical genre classification.

The first approach consists on the definition of a precise set of rules and characteristics that define musical genres. A song would be analyzed and evaluated if it fell under the definition of a musical genre – if it respected the defined rules that characterize the genre.

This approach is designated in [4] as “expert systems”. No approach of this kind has been implemented yet – the reason is the complexity needed for these systems: an expert system would have to be able to describe high level characteristics of music such as voice and instrumentation and this is something that is yet to be achieved.

The unsupervised and supervised approach can be considered as the two parts of a more general category that could be named “machine-learning approach”, for the mathematical characteristics that define a musical genre are automatically elaborated, during training phases. Various different algorithms exist for this purpose and they are called “classifiers”.

During a training phase, mathematical data that characterizes musical signals is given to the classifier, the classifier processes this data and automatically finds relations or functions that characterize subgroups of the data given - in this case, musical genres.

There are two approaches to do this: an unsupervised and a supervised way.

As it was said above, the classifier processes mathematical data that characterizes musical signals; it does not process the musical signal itself. These characteristics are called

“features” and are extracted from the musical signal. Each feature takes a numeric value.

In the unsupervised approach, the sets of features used in the training phase are not labeled. The algorithm is not given the information that a certain set of features is “rock”, or that another one is “world music”.

The labels (the classes) will emerge naturally: the classification algorithm will consider the differences between the given sets of features and define classes to represent these differences.

This approach has the advantage of avoiding the problematic issues, referred in chapter

2.1, related to the usage of a fixed genre taxonomy (genre taxonomy being the definition and characterization of the total universe of musical genres), such as the difficulties of assigning a genre to certain artists or the fuzzy frontiers between genres.

One could consider that this approach makes more sense: if one song’s genre is going to be classified as a function of the song’s extracted mathematical signal features, the definition of genre should only take into consideration these same features. But then, if this procedure is adopted, one has to expect that the new emerging “genres” will not correspond to the definition of genres we are all familiar with, that are subject not only to the musical signal characteristics, but also to cultural and social factors. Maybe a new taxonomy of genres could be adopted, one that reflected only the set of characteristics

(reflected in the extracted features) of the musical signal, classified with a defined

8 CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION classifier. People would adopt this new genre taxonomy when talking about musical genres and the task of automatic musical genre classification would become much easier.

But that is highly unlikely to happen, as it is impossible to imagine a complete reconfiguration of the musical genre vocabulary used by common people, musicians and critics alike. And one that could go against some rules of common sense: if, by some extraordinary mathematical coincidence, it grouped in the same new genre such opposite artists as, let’s say, the pop-oriented band The Clash and the schizophrenic and aggressive

Dead Kennedys. Controversial at least.

The unsupervised approach requires a clustering algorithm – that decides how to group together the models of the audio samples. “K-means” is one of the simplest and most popular algorithms. (This algorithm will be described in a latter section of this work, although in a different context.)

In the supervised approach there is an existing taxonomy of genres, which will be represented by mathematical models, created after a training phase: sets of songs belonging to different genres are labeled with their genre, their features are extracted and an algorithm will automatically find models to represent each musical genre. After that, unlabeled songs can be classified into the existing genres.

2.3

Feature extraction

There are a variety of features that can be extracted from an audio signal. These features can be characterized through some properties. Four properties are distinguished in [5] that can be used to characterize features: “the steadiness or dynamicity of the feature”, “the time extent of the description provided by the features”, “the abstractness of the feature”, and “the extraction process of the feature”.

The first property, about “the steadiness or dynamicity of the feature”, indicates if the extracted feature characterizes the signal at a specific time (or at a specific segment of time), or if it characterizes the signal over a longer period of time, that spans several segments of time: for example, each of these time segments will provide specific feature values that will then be used to calculate features characterizing the longer time segment, such as the means and standard deviations of the short-time segments feature values. In other words, in this latter case, the features are the mean and standard deviation values of consecutive sequences of extracted features.

The second property, about the “time extent of the description provided by the features”, indicates the extent of the signal characterized by the extracted feature. The features are distinguished between “global descriptors” and “instantaneous descriptors” – the global descriptors are computed for the whole signal (and representative of the whole signal), while the instantaneous descriptors are computed for shorter time segments, or frames.

CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION 9

“The abstractness of the feature” is the third referred property. The example given to illustrate this property is the spectral envelope of the signal. Two different processes to extract features are used to represent the spectral envelope – “cepstrum” and “linear prediction”. Cepstrum is said to be more abstract than linear prediction.

The last property, “the extraction process of the feature”, relates to the process used to extract the features. For example, some features are computed directly on the waveform signal, such as the “zero crossing rate”. Other features are extracted after performing a transform on the signal, such as the FFT (Fast Fourier Transform).

The features extracted may be related to different dimensions of music, such as the melody, harmony, rhythm or timbre. A description of these dimensions is done in the following chapter.

2.4

Melody, harmony, rhythm and timbre

Various definitions are used to characterize “melody”. Some of these definitions are found in [6]: melody is “a pitch sequence”, “a set of attributes that characterize the melodic properties of sound” or “a sequence of single tones organized rhythmically into a distinct musical phrase or theme”. There is a certain difficulty in the attempt to precisely define melody, although melody is a familiar and understandable concept: it is what is reproduced in the whistling or humming of songs. A review of techniques used to extract features that can describe melody is found in [6].

Harmony is distinguished from melody in the following way: while melody is considered as a sequence of pitched events, harmony relates to the simultaneity of pitched events – or using a more common designation, “chords”. It is common the idea that melody relates to a “horizontal” progression of music, and harmony relates to a “vertical” one. This idea is easily understood if we think of time as the horizontal (melodic) dimension – harmony will be a vertical dimension, related to the simultaneity of melodic progressions. Work related to the extraction of information related to harmony can be found in [7], where a method that estimates the fundamental frequencies of simultaneous musical sounds is presented, or in [8], where it is described a method to analyze harmonies/chords, using signal processing and neural networks, and analyzing sound in both time and frequency domains. A method to retrieve pitch information from musical signals is presented in [9].

Rhythm is related to an idea of temporal periodicity or repetition. The existence of temporal periodicity, and its degree of predominance, is what characterizes a piece of music as “rhythmic” (or not). This is a vague definition, but there is not one more precise, the notion of rhythm is something intuitive: it is agreeable that jazz music is more rhythmoriented than classical music or certain types of experimental rock.

A report of a variety of approaches related to the subject of automatic rhythmic description is made in [10], with diverse applications being addressed such as “tempo induction, beat

10 CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION tracking, quantization of performed rhythms, meter induction and characterization of intentional timing deviations”. The concepts of metrical structure, tempo and timing are used in the analysis of rhythm: the metrical structure is related to the analysis of beats, which are durationless points in space, equally spaced in time. Tempo is defined as the rate of beats at a given metrical level. It is commonly expressed as the number of beats per minute or the time interval between beats. The definition of timing might be harder to make: timing changes are short time changes in the tempo (which remains constant after that variations), as opposed to tempo changes, which refer to long term changes in the tempo (the tempo or beat rate changes after the variation). The task of extraction features to characterize rhythmic properties is addressed in [10] and [11].

Timbre is the quality in sound that differentiates two sounds with the same pitch and loudness. Timbre is what makes two different instruments, let’s say, guitar and piano, playing the same notes in an equal volume, sound different. It is also designated by color or “tone quality”.

The features characterizing the timbre analyze the spectral distribution of the signal and can be considered global in a sense that there is no separation of instruments or sources – all that “happens” at a certain time is analyzed. These features are “low-level” since usually they are computed for time segments (frames) of very small duration and do not attempt to represent higher level characteristics of music, such as melody or rhythm.

A classification of features characterizing timbre is made in [4]. These features are classified as: temporal features (that are computed from the audio signal frame, such as the

“auto-correlation coefficients” or the “zero crossings rate”); energy features (that characterize the energy content of the signal, such as “root mean square of the energy frame” or the “energy of the harmonic component of the power spectrum”); spectral shape features (that describe the shape of the power spectrum of a signal frame, such as

“centroid”, “spread”, “skewness” or the “MFCC”); and perceptual features (that simulate the human hearing process, such as the “relative specific loudness” or “sharpness”).

The experiments made in the scope of this dissertation will extract and classify features only related to timbre. A detailed description of the features extracted will be done in the chapter 3.1.

2.5

The “bag of frames” approach

The majority of work related to the automatic classification of music uses features related to timbre. This approach is designated as the “bag of frames” (BOF) approach in [12].

The audio signal to be classified is split into (usually overlapping) frames of short duration

(the duration of the frames and of the overlap percentage is variable, usually the duration and overlap percentage are around 50 ms and 50 %, respectively); for each frame features are extracted (necessarily related to timbre because the short duration of the frames makes

CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION 11 it impossible to extract features characterizing higher level attributes such as rhythm); the features from all frames are then processed by a classifier, which will decide which class better represents the majority of the frames of the signal to be classified.

These classifiers are trained during a training phase (with a training set that should be as representative as possible) that can be supervised or not, as it was described in chapter 2.2.

The training should be done using the same features characterizing timbre and with the same characteristics of the features of the signal to be analyzed, obviously (the same frame duration and overlap percentage).

After the training phase, the classifiers can be used to perform classifications of new, unidentified signals. These new signals are classified as belonging to one of the predefined classes.

Some problems with the “bag of frames” approach are identified in [12], [13], [14] and

[15]: these issues are named “glass ceiling”, “paradox of dynamics” and “hubs”.

“Glass ceiling” is a problem reported in [13] that relates to the fact that although variations on the characteristics of the features and on the classification algorithm can indeed improve the classification performance, this same performance fails to overcome an empirical “glass ceiling”, of around 70 %. It is suggested that this limit of 70 % is a consequence of the nature of the features used and that, in order to improve this number, a new approach will have to be considered – using something more than only features characterizing timbre.

“Paradox of dynamics” is reported in [14] and addresses the fact that changes in the feature characteristics or in the classification algorithm in order to take into account the dynamic variations of the signal over time, do not cause an improvement of the classification results. Some of the strategies that take into account the time variations of the signal are referred: stacking together consecutive feature vectors to create larger feature vectors; the use of time derivatives of the extracted features; the averaging of the features over a certain time period (this method is the use of “texture windows”, explained in section 2.6); the use of classifiers that take into account the dynamics of the features, such as Recurrent Neural Networks (R-NN) or Hidden Markov Models (HMM). After running a set of experiments it was concluded that these strategies do not improve the quality of the best static models if the data being classified is polyphonic music (music with several instruments) (on the contrary, performance decreases). However, when applying these strategies to non-polyphonic music (composed of clean individual instrument notes), there is an improvement of the classification performance. It should be noted that these results apply to methods considering features related to timbre only (the

“bag of frames” approach).

It is reported in [15] the existence of a group of songs that are irrelevantly close to all other songs in the database and tend to create false positives. These songs are called

“hubs” and the definition given is the following: “a hub appears in the nearest neighbours

12 CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION of many songs in the data base; and most of these occurrences do not correspond to any meaningful perceptual similarity”. This definition of “hub” does not necessarily cover songs which are close to most of the songs in the database if the database is composed of songs that resemble the style/genre of the analyzed songs. The example given is the fact that it could happen that a song from the Beatles (“A Hard Day’s Night”) would be found to occur frequently as a nearest neighbour to a great number of songs of a database composed only by Pop songs. However, in other databases, composed of different musical genres, this would not occur. Therefore, “A Hard Day’s Night” should not be considered a

“hub”. It is found that different algorithms produce different “hub” songs (although there is a minority of songs that are “hubs” regardless of the algorithm used). It is concluded that the “hubness” of a song is a property of the algorithm used and its characteristics.

2.6

Analysis and texture windows

A technique to take into account the dynamics of the signal over time is through the use of texture windows. The concepts of analysis and texture windows are going to be introduced next.

The signal to be analyzed is broken into small, possibly overlapping, smaller time segments or frames. Each frame is analyzed individually and its features are extracted.

The time length of these frames has to be small enough so that the magnitude of the frequency spectrum is relatively stable and the signal for that length of time can be considered stationary. The frame and its length is designated “analysis window”.

The use of texture windows is a strategy to take into account the dynamics of the signal being studied. The features are extracted from short time samples of around 50 ms (the analysis window). When feeding these values to the classifiers, it is a common strategy to compute approximations of longer time segments – called the texture windows. The classifier will not evaluate all the features that correspond to all frames, individually, but will instead evaluate the distribution statistics of these features over the time of the texture window, through the parameters of mean and standard deviation.

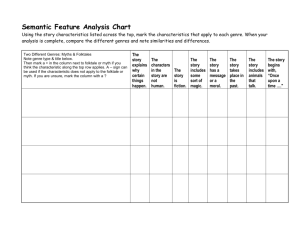

Figure 1: Texture windows [16]

For each analysis window there will be a correspondent texture window, composed of the features from the analysis window and from a fixed number of previous analysis windows.

CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION 13

This technique can be seen as perceptually more relevant, as frames of 10 th

ms are obviously not long enough to contain any statistically relevant information for the human ear [4].

The ideal size of the texture window is defined by [9] as one that should correspond to the minimum time needed to “identify a particular sound or music texture”, where “texture” is defined as a subjective characteristic of sound, “result of multiple short time spectrums with different characteristics following some pattern in time”, and the example given is the vowel and consonant sections in speech.

Experiments run by [9] show that the use of texture windows effectively increases the performance of the classifiers. Using a single Gaussian classifier and a single feature vector characterizing music titles, if no texture window was used, the classification accuracy obtained was around 40 %. The use of texture windows increased greatly the accuracy and this accuracy improved with the size of the texture window used – until 40 analysis windows. After that, the classification accuracy stabilized. The value of 40 analysis windows corresponds to the time length of 1 s. The accuracy obtained with a texture window size of 40 was around 54 %.

Implementations of different time segmentations are made in [17], [18] and [19].

A new segmentation method, named “onset detection based segmentation” based on the detection of “peaks in the positive differences in the signal envelope” (onsets), which correspond to the beginning of musical events is purposed in [17]. This technique would ensure that a musical event is represented by a single vector of features and that “only individual events or events that occur simultaneously” contribute to a certain feature vector. It is argued that although the use of texture windows of 1 s that are highly overlapped “are a step in the right direction” because they allow the modeling of several distributions for a single class of audio, they also complicate the distributions because these windows are likely to capture several musical events.

The performance obtained using several types of segmentation is compared and the onset detection based segmentation yields the best results, although closely followed by the use of 1 s texture windows.

Three segmentation strategies in a database of 1400 songs distributed over seven genres were compared in [18]: using texture windows of 30 s long, using texture windows of 1 s long and using a different approach that consists on using vectors that are centered “on each beat averaging frames over the local beat period”. The results are not conclusive, for the levels of performance achieved vary according to the genre being detected and the classifier used and one cannot proclaim that the “beat” time modeling achieves better results than the use of 1 s long texture windows.

14 CHAPTER 2: AUTOMATIC MUSICAL GENRE CLASSIFICATION

[19] proposes “modeling feature evolution over a texture window with an autoregressive model rather than with simple low order statistics”, and this approach is found to improve the classification performance on the used datasets.

Chapter 3

3.

Experimental methodology

3.1

Feature extraction

The feature extraction process computes numerical values that characterize a segment of audio – in other words, it computes a numerical representation of that segment of audio (or frame) in the form of a vector consisting of the values of several particular features. This feature vector has typically a fixed dimension and can be thought of as a single point in a multi-dimensional feature space. The several steps of this procedure are described below:

A Time-Frequency analysis technique such as the Short Time Fourier Transform (STFT) is performed on the signal from which the features are to be extracted, to provide a representation of the energy distribution of the signal over a time-frequency plane. The signal is broken into small segments in time and the frequency content of each segment is calculated – for each time segment there is a representation of the energy as a function of the frequency.

The STFT can be calculated in a fast way using the Fast Fourier Transform (FFT). The

FFT is a method to calculate the Discrete Fourier Transform (DFT) and its inverse. There are other approaches to perform these calculations, but the FFT is an extremely efficient one, often reducing the typical computation time by hundreds [20].

A frequency spectrum achieved after a STFT procedure represents perfectly the respective audio signal, but it contains too much information: a lot of the information represented in the spectrum is not important and feature vectors of limited dimensions (and as informative as possible) are more adequate to machine learning algorithms. For this reason, audio identification algorithms typically use a set of features that are calculated from the frequency spectrum signals.

Some of these features are calculated after the Short Time Fourier Transform is applied

(which can be calculated using the Fast Fourier Transform (FFT) algorithm) such as the

The Spectral Centroid, The Rollof Frequency, The Spectral Flux and the Mel Frequency

Cepstral Coefficients (MFCC). The MFCC constitute the majority of the calculated features and their description is done in this chapter.

16 CHAPTER 3: EXPERIMENTAL METHODOLOGY

3.1.1

The Mel scale and the MFCC

The Mel scale was proposed by Stevens, Volkman and Newman in 1937 in the context of a study of human auditory perception in the field of psychoacoustics. The Mel is a unit that measures the pitch of a tone the way humans perceive it. This concept is easily understood with the following explanation of how the scale was developed: the frequency of 1000 Hz was chosen as a reference and designated to be equal to 1000 Mels. Listeners were then presented a signal and were asked to change its frequency until the perceived pitch was twice the reference, half the reference, ten times the reference, 1/10 the reference, and so on. From these experimental results, the Mel scale was constructed. The

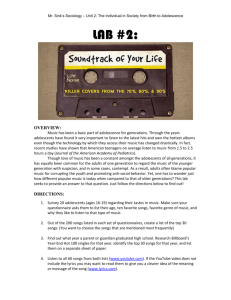

Mel scale is approximately linear below 1000 Hz and logarithmic above that frequency – the higher the frequency, the higher the frequency increment must be to produce a pitch increment [21]. The next figure represents the Mel Scale:

Figure 2: The Mel Scale [22]

The relation between the hertz unit (frequency) and the Mel unit is: m=1127.01048 log(1+f/700) Mel

The inverse relation is: f=700(e^(m/1127.01048) – 1) Hz

When calculating the MFCC, the Mel scale is used to map the power of the spectrum obtained after the FFT is applied, using triangular overlapping windows.

CHAPTER 3: EXPERIMENTAL METHODOLOGY 17

The discrete cosine transform (DCT) expresses a signal in terms of a sum of sinusoids with different frequencies and amplitudes. It is used as the final step of the calculation of the MFCC: the signal obtained after the application of the Mel-mapping is approximated by a sum of a defined number of cosines whose frequency is always doubling. The coefficients of these cosines are the MFCC.

3.1.2

Marsyas and feature extraction

The extraction of the features of audio files was done using Marsyas. Marsyas stands for

Music Analysis Retrieval and SYnthesis for Audio Signals. It is a work in progress and has been developed by George Tzanetakis and a community of developers. Tzanetakis started the Marsyas project in the end of the 90’s and since then it has gone through major revisions. Marsyas 0.2 is the latest version of this software.

Marsyas is a framework for audio analysis, synthesis and retrieval. It provides a variety of functionalities to perform a number of audio analysis tasks. These functionalities include signal processing and machine learning tools.

This software is designed to be used by experts and beginners. Expert users will have the possibility to create more complex applications through the writing of new code. Beginner users will have the possibility to use the system through graphical user interfaces and high-level scripts [23]. The version of Marsyas used was the revision 3432.

The Marsyas tool “bextract” was used to perform the feature extraction from audio files. It uses as input a text file indicating the paths of the files whose features are going to be extracted; each path is labeled with the class the musical file belongs to (the musical genre).

The Marsyas tool “mkcollection” was used to create the labeled collection files. Its syntax is: mkcollection –c collectionfile.mf –l label /pathcollection

Where collectionfile.mf

is the output file, containing the path to the music files and their labels, label is the class (genre) of the analyzed files, and /pathcollection is the path to the files. The musical files must be previously organized into genre paths. This procedure was done to all musical genre paths, resulting in a collection text files, one for each genre.

These text files were copied into a unique larger text file - containing the path to all files of all genres to be classified.

The feature extraction is done with the tool “bextract”, as it was said above. It uses as input the labeled text file with the path to the files to be considered and outputs a feature file. Its simplest syntax is:

Bextract collectionsfile.mf –w featuresfile.arff

18 CHAPTER 3: EXPERIMENTAL METHODOLOGY

Collectionsfile.mf

is the text file with the labeled path to all the files to be considered and featuresfile.arff

is the desired name of the output file, containing the extracted features.

There are many options to configure the feature extraction process. In the project,

“bextract” was used in the following way:

Bextract –ws 2048 –hp 1024 –m 100 –s 40 –l 30 collectionsfile.mf –w featuresfile.arff

The option -ws indicates the size of the analysis windows, in the number of samples of the audio file being analyzed (the number of samples per second of the music file is defined by its audio sample rate, usually 44 kHz music files). Analysis windows of 2048 samples were used. The option -hp indicates the hop size, in number of samples. The hop size is the distance between the beginnings of consecutive analysis windows. If the hop size was the same as the analysis window, there would be no overlap between consecutive analysis windows. In this case, there is an overlap of 50 %, as the hop size is half the size of the analysis windows (1024 samples and 2048 samples).

The option -m indicates the size of the texture windows, in number of analysis windows.

In this project, each texture window is composed of 100 consecutive analysis windows.

The option -s defines the starting offset in seconds of the feature extraction process in the audio files to be considered. The option -l defines the length in seconds of the segments from each sound file from where the features are to be extracted. If the -s and -l values are not set Marsyas extracts features from the whole audio files. There was a decision to extract features located in the middle of the songs (between the seconds 40 to 70). Many songs have beginnings that are not particularly representative – live records for example, have clapping and cheering sounds dominating the first seconds. The decision to consider only 30 s from each sound file was also made to limit the size of the output feature file.

Figure 3: Marsyas Official Webpage

CHAPTER 3: EXPERIMENTAL METHODOLOGY

3.1.3

Description of the features used

19

16 different features were considered, and for each feature, two values were computed: the mean and the standard deviation, representing the values of the feature along the 100 individual analysis windows that constitute the texture window. This totals 32 features that are extracted from each different texture window, resulting in a feature vector composed of 32 features. This feature vector can be designated as “instance”. Instances are single points in a multi-dimensional universe and are the objects used in the training and testing of classifiers.

All features but one (The Zero Crossings Rate) are extracted after a Fast Fourier

Transform is applied to each frame. The extracted features are:

The Zero Crossings Rate : this is the rate at which the signal changes from positive to negative – or the rate at which it crosses the zero value.

The Spectral Centroid : the center of gravity of the frequency spectrum. The spectral centroid is the weighted mean of the frequencies, with the weights being the frequency magnitudes.

The Rolloff Frequency : the frequency below which 85 % of the magnitude distribution is concentrated.

The Spectral Flux : the squared difference between the normalized magnitudes of the spectal distributions of consecutive frames. This feature should make an estimate of the variation of the magnitudes of consecutive frames.

The Mel Frequency Cepstral Coefficients (MFCC): after the FFT transformation is applied to the audio segments, the resulting signals are smoothed according to the Mel scale.

Then, a Discret Cosine Transform is performed to the resulting signals, to obtain the designated Mel-Frequency Ceptral Coefficients (MFCC), as it was described in chapter

3.1.1. The first 12 MFCC are considered and used as features.

3.1.4

The feature files

These feature files computed with Marsyas can be read by Weka (a platform to perform machine learning and classification experiments) and have an .arff

extension. The beginning of the feature file contains the definition of the features or attributes. The attributes are defined in the lines beginning with “@attribute”.

After the definition of the attributes, the file contains the values of these attributes for each instance or texture window. Each line has the values of all attributes, for one instance (in the same order of the attribution definition in the beginning of the file). The values are

20 CHAPTER 3: EXPERIMENTAL METHODOLOGY separated by commas. The last attribute is the “output attribute”, which indicates the class to which the instance belongs to.

Let’s consider that a song has a 44 100 Hz sampling rate and that features are extracted from this song with an analysis window size of 2048 samples, a hop size of 1024 samples, and a texture window size of 100 analysis windows. What will the extracted feature vectors (or instances) represent?

The sampling rate is 44 100 Hz, so the distance in seconds between two consecutive samples is 1/44100 s. A window size of 2048 samples corresponds to 2048/44100 s, or

0.0464 s. A hop size of 1024 samples corresponds to 0.0232 s. The distance between the beginnings of two consecutive analysis windows is 0.0232 s (the hop size). The texture windows are composed of 100 consecutive analysis windows, and will therefore characterize 0.0232*100=2.32 s. It should be noted that a texture window is computed for each different analysis window – consecutive texture windows will share 99 of their 100 analysis windows, and of course, will be extremely similar.

Each line in the .arff file is composed of the feature vector of a different texture window.

These lines are the instances that are used to train and test the classifiers.

3.2

Classification

Classification algorithms, as the name suggests, categorize patterns into groups of similar or related patterns. The definition of the groups of patterns (classes) is done using a set of patterns – the training set. This process is designated machine learning and can be supervised or not. If the learning is supervised each of the patterns that constitute the training set given to the system is labeled. The system will then define the characteristics of the given labels (or classes) based on the characteristics of the patterns associated to those labels. If the learning is not supervised, there is no prior labeling of the patterns and the system will define classes by itself based on the nature of the patterns. There are also machine learning algorithms that combine labeled and unlabeled training patterns – the learning is said to be semi-supervised.

Tzanetakis in [24] identifies the following three kinds of classification schemes: statistical

(or decision theoretic), syntactic (or structural) and neural. A statistical classification scheme is based on the statistical characterizations of patterns and assumes that the patterns are generated by a probabilistic system – it does not consider the possible dependencies between the features of the pattern. On the contrary, a syntactic classification scheme takes in account the structural interrelationships of the features. A neural classification scheme is based on the recent paradigm of computing influenced by neural networks.

CHAPTER 3: EXPERIMENTAL METHODOLOGY 21

The basic idea behind the classification schemes is to learn the geometric structure of the features of the training data set, and from this data, estimate a model that can be generalized to new unclassified patterns.

A huge variety of classification systems has been proposed and a quest for the most accurate classifier has been going on for some time. [25] praises this activity as being extremely productive and the cause of today’s existence of a wide range of classifiers that are employed with commercial and technical success in a great number of applications – from credit scoring to speech processing. However, no classifier has emerged as the best.

A single classifier has yet to outperform all others on a given data set and the process of classifier selection is still a process of trial and error.

The existing classifiers differ in many aspects such as the training time, the amount of training data required, the classification time, robustness and generalization.

The quality of a classification process depends on three parameters: the quality of the features (how well they identify uniquely the sound data to be classified); the performance of the machine-learning algorithm; and the quality of the training dataset (its size and variety should be representative of the classes) [25].

3.2.1

Platforms used - Weka and Matlab

The platforms used for classification were Weka and Matlab. Weka stands for “Waikato

Environment for Knowledge Analysis” and is a platform to perform machine learning and data mining tasks, such as classification or clustering. It is developed at the University of

Waikato, New Zealand. It is free software and available under the GNU General Public

License [26].

The feature files output by Marsyas (using the “bextract” option) are in a format that can be properly opened and processed by Weka.

Weka was used to perform classification experiments. Four different classifiers were used:

Naive Bayes, Support Vector Machines, K-Nearest Neighbours and C4.5.

One Weka tool that was used is the “Resample Filter” option. It can happen that the feature files to be processed by the Weka classifiers are so large in size that the training and testing tasks take several hours and even days to perform. In these cases, sampling the dataset reduces the computational work made by the classifiers. The size of the sampled dataset is defined by the user (in the form of percentage of the total dataset), is randomly created, and can be biased so that the classes are represented by a similar number of instances.

22 CHAPTER 3: EXPERIMENTAL METHODOLOGY

Figure 4: Weka: “Resample”

There are several ways to train and test datasets with Weka. Once the dataset is opened, the user can use all the dataset to train the classifier and use the same dataset to test the classifier. All the dataset’s instances are used to train the classifier and the classifier’s performance is tested with these same instances. There is the option to split the dataset into two smaller datasets: one dataset will be used to train the classifier and the other will be used to test it. The sizes of each dataset are defined by the user, in the form of percentages of the original dataset. This option is called “percentage split”. Another way to train and test classifiers is through “ n -fold cross-validation”: the dataset is split into n folds, n -1 folds are used to train the classifier and the other fold is used to test it. The classification results are saved. Then, this process is repeated n -1 times, with the test fold changing every time. All the folds are used once as the test fold. The classification results are averaged. Finally, the user can train and test the classifier with separate dataset files.

The classification output in Weka has information about the quantity of correctly and incorrectly classified instances and detailed information about the classification of instances from each class. A Confusion Matrix is also output, it indicates how many instances belonging to each genre were classified as belonging to each genre.

Matlab was used to perform classification experiments with Gaussian Mixture Models.

Matlab stands for “Matrix Laboratory”; it is a popular platform to perform numerical computation and provides also a programming language.

CHAPTER 3: EXPERIMENTAL METHODOLOGY 23

With the Weka classifiers there is a classification of instances: each instance is treated separately to train and test the classifier. The results that are ouput by Weka are relative to the classification performance of instances.

The Gaussian Mixture Models were used to perform a different kind of classification: of whole songs instead of instances. Songs were used to test the GMM, and the output of the test characterizes how were the individual songs classified (not instances).

Figure 5: Weka: Choosing a Classifier

3.2.2

Instance classification with Weka classifiers

A description of the classifiers used with Weka is made below.

3.2.2.1

Naive B ayes

The Naive Bayes classifier assumes that there are no dependencies between the different features that constitute the feature vector. The probability of a feature vector or instance belonging to a certain class is equal to multiplying the probabilities of each individual feature belonging to that class. The probabilities of the feature vector belonging to each class are estimated and the most probable class is elected. The Naive Bayes classifier

24 CHAPTER 3: EXPERIMENTAL METHODOLOGY yields surprisingly good results, comparable to the ones of other algorithms of greater complexity. The good results obtained with Naive Bayes are surprising because of the wrongful assumption that is made by this classifier: that the features are independent from each other. An explanation for this fact is that the probabilities estimated with the Naive

Bayes classifier do indeed reflect this feature independency and thus are not precise, but the higher calculated probabilities usually do correspond to the correct classes, and so the

Naive Bayes classifier performs well in classification tasks. Other purposed explanation is that although the classification of the Naives Bayes algorithm is affected with the dependency distributions along all attributes, there are times when the dependencies cancel themselves and the no-dependency assumption becomes accurate [27].

3.2.2.2

Support Vector Machines

The concept behind Support Vector Machines is to find hyper planes that will constitute the frontiers between classes. In an n -dimensional universe, a hyper plane is n -1 dimensional and separates the universe in two. If the universe dimension is 1 (a line), the hyper plane will be a point; if the universe dimension is 2 (a plane), the hyper plane will be a line. Here, the dimension of the universe will be the number of different features extracted.

The frontier between two different classes will not be a single hyper plane, but two. These two hyper planes will constitute the margins of the frontier – inside those margins there are no class objects (see figure 6). When determining these hyper planes, the SVN classifier will take into consideration maximizing the distance between them (the margins), and minimizing errors. And what are these errors?

It is unlikely that the distribution of the (training set) data will be one in which classes are linearly separable. Considering a 2-dimensional universe, two classes belonging to that universe are linearly separable if one line can separate in one side the objects belonging to one class and on the other side the objects belonging to the other class. If the two classes are not linearly separable (which is the case most of the times), an error will occur if an object is placed on the wrong side of the line – the side of the other class.

The SVN approach has been generalized to take into account the cases where classes are not properly separated by linear functions. It is possible to separate classes with non-linear functions, such as circles (in a 2 dimensional universe). The concept remains the same: there will be two circles separating two classes, and when designing these circles there will be an effort to maximize the distance between the two circles and to minimize errors.

Further reading on support vector machines can be found in the documents [28] and [29], the former more comprehensive than the latter.

CHAPTER 3: EXPERIMENTAL METHODOLOGY 25

Figure 6: SVM: frontier hyper-planes [29]

3.2.2.3

K-Nearest Neighbours

The K-Nearest Neighbours (KNN) classifier directly uses the training set for classification and does not use any mathematical form to describe classes in the feature space, such as probability density functions. With the KNN classifier, a new unclassified object is classified according to the position of its K nearest neighbours. This is better understood if we think of objects as points in the multi dimensional space. If K is one, the object will be classified as belonging to the class of its nearest neighbour. The “neighbours” are the instances of the training dataset, which are labeled. In the classification of an instance, the distances between this instance and all the labeled instances of the training set are computed, and the instance is classified with the class of its nearest training instance. A tutorial on K-Nearest Neighbours classification can be found in [30].

3.2.2.4

J48

J48 is Weka’s version of the C4.5 classifier, which is the latest public implementation of the decision tree approach popularized by J. R. Quinlan. The algorithm works as it is described below:

First, an attribute that best differentiates the different classes is chosen; tree branches are created for each value of the chosen attribute (or for subsets of the attribute’s value); the instances are divided into subgroups (the created tree branches), reflecting their “chosen attribute’s” value; if all the instances of a subgroup belong to the same class, the branch is labeled with that class; if there aren’t attributes left that can distinguish instances belonging in the subgroup, the branch is labeled with the class of the majority of the subgroup’s instances; if it isn’t the case of any of these situations just described, the whole process is repeated (for each subgroup) [31].

26 CHAPTER 3: EXPERIMENTAL METHODOLOGY

3.2.3

Song classification with Gaussian Mixture

Models

The Gaussian Mixture Model (GMM) classifier represents each class as a weighted mixture of a fixed-size number of Gaussian distributions. If a Gaussian Mixture Model is referred to as GMM3, this would mean that three Gaussian distributions are used. A

Gaussian distribution is also frequently known as a Normal distribution. The used

Gaussian distributions are multi-dimensional – the number of dimensions is the number of features. A GMM is characterized by the mixture weights and by the mean and covariance matrices (in plural, because the distributions are multi-dimensional) for each mixture component. These parameters are defined by the labeled training set during the training phase. Classes can be represented by a single Gaussian distribution – in this case, the classifier is simply called “Gaussian classifier”.

The GMM classifier can be said to belong to a group of Statistical Pattern Recognition

(SPR) classifiers, because a probability density function is estimated for the features extracted for each class [9].