Lecture 4


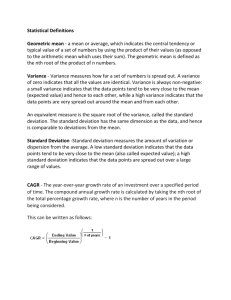

Data Analysis and Statistical Methods
Statistics 651
http://www.stat.tamu.edu/~suhasini/teaching.html
Lecture 4 (MWF): Boxplots and standard deviations
Suhasini Subba Rao

Review of previous lecture

• In the previous lecture we defined the population mean and median and the sample mean and median.
• Both the mean and the median are measures of centrality in a population or sample.
• It is also useful to have measures of spread. Measures of spread tell us how spread out the population is, and they also tell us how accurate the sample mean will be. The more spread out the population, the less accurate the sample mean is likely to be (see variance_explanation_lecture4.pdf, pages 1-2).
• The simplest measure of spread is the range of the data (the distance between the smallest and largest numbers in the data set). But, as illustrated by the extreme examples given in Lecture 3,

Sample 1: −1, 0, 0, 0, 0, 0
Sample 2: −1, 0, 0, 0, 0, 2
Sample 3: −1, 0, 0, 0, 0, 20

the range does not necessarily represent the ‘typical’ spread.
• An alternative measure of spread is the interquartile range (IQR), the distance between the 25th and 75th percentiles.

Boxplots, medians and means

[Figure: boxplot of the ordered data, labelled from top to bottom: largest value, 75th percentile, median, 25th percentile, lowest value.]

Example

The number of people volunteering to give blood at a center was recorded for each of 20 successive Fridays. The data are shown below.

320 274 370 308 386 315 334 368 325 332
315 260 334 295 301 356 270 333 310 250

Make a boxplot of the observations.

Solution in JMP

[Figure: boxplot produced in JMP.]

Comparing multiple samples using boxplots

• Boxplots are excellent for comparing two samples.
• In JMP you can compare multiple samples through boxplots.
• Instructions on how to do this are given in my JMP notes.
• Please do this: make boxplots of the sample distribution (sample, since we only have the data from class) of the total number of M&Ms for each type of M&M.
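For the blood donation example above, the five numbers that a boxplot displays can be sketched in Python as follows. This is only an illustration, not the JMP solution: the function name `five_number_summary` is made up here, and quartile conventions differ between packages, so the 25th and 75th percentiles may differ slightly from what JMP reports.

```python
import statistics

# Blood-donation counts for 20 successive Fridays (from the example above).
donations = [320, 274, 370, 308, 386, 315, 334, 368, 325, 332,
             315, 260, 334, 295, 301, 356, 270, 333, 310, 250]


def five_number_summary(data):
    """Return (lowest, Q1, median, Q3, largest) for a list of numbers."""
    # statistics.quantiles with n=4 returns the three quartile cut points.
    # Assumption: Python's default interpolation rule is used here; other
    # software (including JMP) may use a different rule.
    q1, q2, q3 = statistics.quantiles(data, n=4)
    return min(data), q1, q2, q3, max(data)


lowest, q1, median, q3, largest = five_number_summary(donations)
iqr = q3 - q1  # interquartile range: the length of the box

print("lowest value:   ", lowest)
print("25th percentile:", q1)
print("median:         ", median)
print("75th percentile:", q3)
print("largest value:  ", largest)
print("IQR:            ", iqr)
```

The lowest value, median and largest value do not depend on the quartile convention; only the two box edges (and hence the IQR) do.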
Measures of spread - The Variance

• The variance is a commonly used measure of spread. Given the population $x_1, \ldots, x_N$ we define the population variance as

$$\sigma^2 = \frac{1}{N}\sum_{i=1}^{N}(x_i - \mu)^2, \qquad \mu = \text{population mean}.$$

• In words: this is the average of the squared differences between all possible outcomes and the population mean.
• In reality it will never be observed (since the population is unknown) and it has to be estimated instead.
• Given the sample $X_1, \ldots, X_n$ we define the sample variance as

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n}(X_i - \bar{X})^2.$$

Given only the sample, we do not know the mean $\mu$, so we estimate it using the sample mean $\bar{X}$ (swap $\mu$ with $\bar{X}$). The reason we divide by $(n-1)$ and not $n$ when obtaining the sample variance is a quirk of statistics: we do it to reduce the bias. I don’t much care whether you divide by $n$ or $(n-1)$. What you need to remember is that the population variance is calculated using the entire population, whereas the sample variance is calculated using the sample.

Example - the variance (by hand)

We are given the sample 5, 4, 3, 0, 3. The sample mean $\bar{x}$ is 3. Calculating the sample variance:

| $x_i$ | $x_i - \bar{x}$ | $(x_i - \bar{x})^2$ |
|---|---|---|
| 5 | $5 - 3 = 2$ | $(5 - 3)^2 = 4$ |
| 4 | $4 - 3 = 1$ | $(4 - 3)^2 = 1$ |
| 3 | $3 - 3 = 0$ | $(3 - 3)^2 = 0$ |
| 0 | $0 - 3 = -3$ | $(0 - 3)^2 = 9$ |
| 3 | $3 - 3 = 0$ | $(3 - 3)^2 = 0$ |
| | | sum = 14 |

The sample variance is

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{x})^2 = \frac{14}{5-1} = \frac{14}{4} = 3.5.$$

The variance does not change with shifts

• The variance is invariant to shifts in the data. Suppose we shift the data by 20 (this could be because of a change in the units of the observations), so we observe 25, 24, 23, 20, 23. The mean is shifted by 20, but the spread stays the same.
To see why, look at the calculation:

| $x_i$ | $x_i - \bar{x}$ | $(x_i - \bar{x})^2$ |
|---|---|---|
| 25 | $25 - 23 = 2$ | $(25 - 23)^2 = 4$ |
| 24 | $24 - 23 = 1$ | $(24 - 23)^2 = 1$ |
| 23 | $23 - 23 = 0$ | $(23 - 23)^2 = 0$ |
| 20 | $20 - 23 = -3$ | $(20 - 23)^2 = 9$ |
| 23 | $23 - 23 = 0$ | $(23 - 23)^2 = 0$ |
| | | sum = 14 |

• The above example shows that the variance simply measures the squared distance from the mean (which stays the same under shift transformations).
• See the explanation of the variance given in https://www.stat.tamu.edu/~suhasini/teaching651/variance_explanation_lecture4.pdf pages 3-5.

The population, sample and variance

Set of all measurements (population): 3, 4, 6, 9, 10, 15, 44, 16
Sample 1: 3, 16
Sample 2: 6, 9, 3

• Population variance. The population mean is 13.375, so in this case

$$\sigma^2 = \frac{1}{8}\left[(3 - 13.375)^2 + (4 - 13.375)^2 + \ldots + (16 - 13.375)^2\right] = 153.4844.$$

Observations

• Like the sample mean, the sample variance is random and varies from sample to sample.
• Sample variance. For Sample 2 it is

$$s_2^2 = \frac{1}{3-1}\left[(6 - 6)^2 + (9 - 6)^2 + (3 - 6)^2\right] = 9.$$

For Sample 1 it is

$$s_1^2 = \frac{1}{2-1}\left[(3 - 9.5)^2 + (16 - 9.5)^2\right] = 84.5.$$

• We see that, in this example, both sample variances are smaller than the population variance.
• When we start learning statistical methods in this class, we will work under the assumption that the variance of the population is known. In many situations this is an unrealistic assumption, and we need to estimate the variance from the data. This changes our inference and is the reason that we will be using the t-distribution (this comes much later, circa Lecture 14).

The variance does not measure distance!

• Example: Consider the observations −4, −3, −2, −1, 1, 2, 3, 4. The mean is zero and the sample variance is

$$\frac{1}{8-1}\left(4^2 + 3^2 + 2^2 + 1^2 + 1^2 + 2^2 + 3^2 + 4^2\right) = \frac{60}{7} \approx 8.57.$$

• The data run from −4 to 4, an interval of length 8. We see that the sample variance, 8.57, is even bigger than the range itself!
• This is because the variance squares the distances. To overcome this we take the square root of the variance, which gives the standard deviation.

The standard deviation

• The standard deviation is the square root of the variance. That is,

$$\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(x_i - \mu)^2}, \qquad N = \text{size of population}.$$

• The sample standard deviation is

$$s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(X_i - \bar{X})^2}, \qquad n = \text{size of sample}.$$

• The standard deviation has the advantage that it has the same units as the data.
• The variance ‘squares’ the amount of spread. The standard deviation gives a more faithful measure of the amount of spread, on the same scale as the data.
• The variance and standard deviation are closely related. The variance is just the square of the standard deviation. Often students treat them as different measures of spread. They are not: the variance measures the squared spread, and its square root (the standard deviation) measures the spread in the units of the data.

Comparing standard deviations and IQR

• For the majority of the analysis we do in this class, we will be using the standard deviation, but it is useful to understand how outliers may influence the standard deviation.
• In the plotted example, observe that the majority of the points are about zero but one value is 5. This pulls the mean to the right and inflates the standard deviation. However, there is no effect on the median or the IQR.

Standard deviation: Graphical illustration

The mean is 29 and the standard deviation is 6.6. The green line [29 − 6.6, 29 + 6.6] = [22.4, 35.6] marks one standard deviation either side of the mean. Observe that 7/11 = 63.6% of the points are within one standard deviation of the mean.

Standard deviation and IQR

• Here we illustrate the differences between the two measures of spread: the IQR and the standard deviation.
– We observe that if all the data take the same value, the IQR and the standard deviation are both zero. The standard deviation is zero only if all the data are the same.
– However, we see on the second plot that the IQR can still be zero if most of the values (but not all) are the same.

The empirical rule (this is just a rule of thumb)

For observations from several different disciplines (nature, engineering, etc.) we often observe that
• Approximately 68% of the observations lie inside the interval $[\bar{x} - s, \bar{x} + s]$.
• Approximately 95% of the observations lie inside the interval $[\bar{x} - 2s, \bar{x} + 2s]$.
• Approximately 99.7% of the observations lie inside the interval $[\bar{x} - 3s, \bar{x} + 3s]$.

This is only a rule of thumb, and it applies only to data that are approximately ‘normally’ distributed. Don’t take it too seriously. What it does say is that the majority of the data will be within three standard deviations of the mean.

Interpretation of the empirical rule

This is only a rule of thumb. It should not be taken too seriously. Some explanations:
• What we mean by the interval $[a, b]$: these are two points on the number line, and the interval starts at $a$ and ends at $b$. For example, what does the interval [3, 4.5] mean?
• What we mean by ‘Approximately 68% of the observations lie inside the interval $[\bar{x} - s, \bar{x} + s]$’: we calculate $s$ and $\bar{x}$ from the data. For example, it could be that $\bar{x} = 3$ and $s = 1$. For this example, the interval $[\bar{x} - s, \bar{x} + s]$ is [3 − 1, 3 + 1] = [2, 4], and we count the number of observations in this interval. The empirical rule states that for many data sets about 68% of the observations lie in this interval. Similarly, $[\bar{x} - 2s, \bar{x} + 2s]$ = [3 − 2, 3 + 2] = [1, 5] and $[\bar{x} - 3s, \bar{x} + 3s]$ = [3 − 3, 3 + 3] = [0, 6].

Where you may have previously encountered the standard deviation

• When you go to the doctor’s office, the technicians take several measurements, such as weight, height, blood pressure and blood samples.
• How do they determine whether a reading is ‘normal’? Often you will get your reading with the percentile (we come to this later) and the number of standard deviations your reading is from the average (mean).
Consider the following example:
– Suppose that you have a blood sample taken. The mean for the reading is 20 and you have 14. Is that normal?
– The difference of 14 from 20 does not tell you anything unless you know how spread out the readings can be.
– The standard deviation is 8, so there is a fair amount of variation or spread. Your reading is (14 − 20)/8 = −6/8 = −0.75 standard deviations from the mean, that is, 0.75 standard deviations below it. This means you are not too far from the average (what we mean by far and not too far is really determined by the percentile and the distribution of the measurements, but we come to this later).
– In addition, if the blood readings follow the empirical rule, then since your reading is within one standard deviation of the mean, it lies among the central 68% of readings.
• Another example:
– A friend has a blood sample taken and gets the reading 45. Is that normal?
– His reading is 25 from the mean, which is (45 − 20)/8 = 3.125 standard deviations from the mean.
– This seems to be in the extreme (recall that by the empirical rule, for many data sets 99.7% of observations are within 3 standard deviations of the mean).
– This suggests that either (i) he is one of the very few people who are fine with such a large reading or (ii) he is not fine. The data suggest that (ii) could be true.
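
The two blood sample calculations above can be sketched in Python as follows. This is an illustration only: the function name `standardized_distance` is made up here, and the mean of 20 and standard deviation of 8 are simply the values used in the example.

```python
def standardized_distance(reading, mean, sd):
    """Number of standard deviations that `reading` lies from `mean`.

    Negative values are below the mean, positive values are above it.
    """
    return (reading - mean) / sd


mean_reading = 20  # mean reading used in the example
sd_reading = 8     # standard deviation used in the example

for reading in (14, 45):
    z = standardized_distance(reading, mean_reading, sd_reading)
    within_one = abs(z) <= 1    # inside [mean - sd, mean + sd]
    within_three = abs(z) <= 3  # inside [mean - 3 sd, mean + 3 sd]
    print(f"reading {reading}: {z} standard deviations from the mean; "
          f"within 1 sd: {within_one}, within 3 sd: {within_three}")
```

The reading 14 falls inside the one standard deviation interval, while 45 falls outside the three standard deviation interval, which is why the second reading looks extreme under the empirical rule.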