Mining Unstructured Text at Gigabyte per Second Speeds Alan Ratner

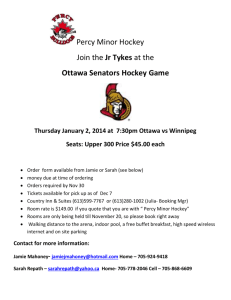

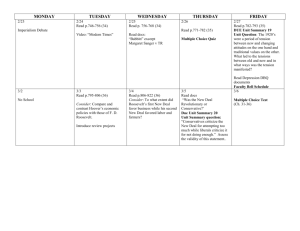

Aim
• Identify language and topic of streaming web documents
  – blogs, newsgroup posts
• In the presence of noise
  – bytes of HTML & JavaScript >> bytes of content → everything looks like English
• At network speeds
  – this year at 2.5, 10, & 40 Gb/s
  – next year at 100 Gb/s

The Nature of Text
• Ideas: Topic (variations: >>10^6)
• Sequence of Words: Language (variations: 4,000)
• Graphical Characters: Script (variations: 29+)
• Sequences of Bits: Encoding (variations: ASCII, WCP, ISO, UTF, language-specific)
• Native & transliterated

6 Fundamental Questions in Mining Text
Language
1. Detection: Is there language?
2. Identification: What language is it?
3. Clustering: Is this language like any other I’ve seen?
Topic
4. Labeling: How can I describe the topic?
5. Identification: Is it on a specific topic of interest?
6. Clustering: Is this topic like any other I’ve seen?

Agenda
• Computing Platforms ◄
• Language Detection
• Language Identification
• Topic Identification

High Speed Processing
• Processing time
  – O(nanosecond per byte)
  – O(microsecond per document)
• Platforms
  – FPGA*
  – CAM*
  – NPU
  – GPU
  – N-core CPU (N >> 1)

Content Addressable Memory
• If string in memory then return address
• CAM is typically ternary (base 3)
  – specified bits/bytes can be ignored
  – ignoring the 6th bit in many encodings makes the comparison case-insensitive
• 10^13 18-byte string compares per second

Architecture
• Streaming Data
• TCAM (Tokens)
• FPGA (Sums of Weights)
• RAM (Weights)
• CPU (Choose winning class)

Agenda
• Computing Platforms
• Language Detection ◄
• Language Identification
• Topic Identification

Does a Given File (or Streaming Data) Contain Language?
• Language ≡ vocabulary AND grammar
• Shannon showed we can correctly guess the next character in English text using a 27-letter alphabet 69% of the time
• Text is typically more predictable than well-compressed non-text (zip, audio, video, images)

Spaceyness vs. Highness (on noiseless text)
[Figure: Highness (0% to 100%) vs. Spaceyness (0 to 60). Series: Non-Text, Latin Text, Non-Latin Text]

Agenda
• Computing Platforms
• Language Detection
• Language Identification ◄
• Topic Identification

English Word Occurrence (>=3 letters)
[Figure: Occurrence (0.1% to 10.0%) vs. Rank (0 to 100), with each point labeled by its word, e.g. AND, THE, YOU, FOR, NOT]

English Cumulative Word Occurrence
[Figure: Cumulative Occurrence (0% to 25%) vs. Rank (0 to 25). Words labeled along the curve, highest cumulative value first: WAS, HAVE, ARE, THIS, BUT, THEY, HIS, FROM, ALL, ONE, HAD, WHICH, WILL, WOULD, ABOUT, THERE, YOUR, WHAT, WITH, NOT, FOR, YOU, THAT, AND, THE]

Comparative Cumulative Word Occurrence
[Figure: Cumulative Probability (0% to 60%) vs. Top Ranked Words (0 to 500). Series: Arabic, ArabicChat, Russian, RussianChat, English, Dutch]

Selecting Patterns for Language Id
• Spaced languages
  – Common words ≥ 3 characters with spaces before & after
  – Avoid
    • HTML keywords
    • JavaScript keywords
    • International words (proper nouns)
• Unspaced languages
  – Common character pairs

Agenda
• Computing Platforms
• Language Detection
• Language Identification
• Topic Identification ◄

Topic Id & Search
• How do you find the 10-100 relevant docs to
  satisfy a user’s need for information (out of 10^12 candidates)?
• Search engines force keyword search onto users
• What users generally want is topic id
  – “Show me docs on topic X”
  – “Show me docs that look like this and not like this”
• False negatives OK
  – User doesn’t know what you have missed (unlike anti-spam)
• Precision needs to be high
  – Don’t annoy the user!
  – Extremely low false positive rate (0.0000001%)

Algorithms for Topic ID
• Bayes
• Markov
• Markov Orthogonal Sparse Bi-word
• Hyperspace
• Correlative
• Entropy
• Term Frequency * Inverse Doc Frequency
• Minimum Description Length
• Morphological
• Centroid-based
• Logistic Regression (similar to SVM & single-layer NN)
• …
• Most of these use either additive or multiplicative weights of detected tokens

Topic ID Implementation
1. Select on-topic & off-topic docs for training
2. Select tokens (typically 1K on-topic/10K off-topic)
3. Compute additive weights that minimize false positives with acceptable recall

Topic Id Evaluation
• Consistent labeling of informal documents by topic is difficult
• Need millions of labeled docs to cover major languages and wide variety of topics
• Used 30K newsgroup posts on 32 topics
  – Topic assumed to be name of newsgroup
  – Considerable overlap in topics, especially those on sports and religion

[Figure: True Positive Rate (0% to 30%) vs. False Positive Rate (0.00% to 0.10%). Series: Hockey, Space, Hockey Blog, Space Wiki]

[Figure: True Positive Rate (0% to 30%) vs. False Positive Rate (0.00% to 0.10%). Series: Hockey, Space, Hockey Markov, Space Markov]

Conclusions
1. Language detection solved on clean documents but unsolved on noisy web documents
2. Language identification works well at gigabyte per second speeds
3. Topic identification should work well at gigabyte per second speeds; further testing needed on larger data sets
4. Topic clustering needs further work
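
Sketch: additive token weights
The Architecture and Topic ID Implementation slides describe matching tokens in the stream, summing additive weights per class, and choosing a winning class. The software sketch below illustrates that flow under stated assumptions; it is not the hardware design from the talk. The token table `WEIGHTS`, its weight values, the function names, and the `threshold` parameter are all hypothetical. Tokens carry a leading and trailing space, following the "spaces before & after" rule for spaced languages.

```python
from collections import defaultdict

# Hypothetical token table: token -> {class label: additive weight}.
# Real weights would come from training on on-topic and off-topic documents.
WEIGHTS = {
    " the ": {"english": 1.0},
    " and ": {"english": 1.0},
    " het ": {"dutch": 1.0},
    " een ": {"dutch": 1.0},
    " van ": {"dutch": 1.0},
}


def class_sums(text, weights=WEIGHTS):
    """Sum the additive weight of every token occurrence, per class."""
    # Lowercase and normalize whitespace so that every word, including the
    # first and last, has a single space on each side.
    padded = " " + " ".join(text.lower().split()) + " "
    sums = defaultdict(float)
    for token, per_class in weights.items():
        # Count occurrences, allowing neighbors to share a boundary space.
        count = 0
        start = padded.find(token)
        while start != -1:
            count += 1
            start = padded.find(token, start + 1)
        for label, weight in per_class.items():
            sums[label] += weight * count
    return dict(sums)


def winning_class(text, threshold=1.0, weights=WEIGHTS):
    """Return the class with the largest sum, or None if it is below threshold."""
    sums = class_sums(text, weights)
    if not sums:
        return None
    label, score = max(sums.items(), key=lambda item: item[1])
    return label if score >= threshold else None


print(winning_class("The cat and the dog"))
print(winning_class("Het huis van een man"))
print(winning_class("<div><script>var x = 1;</script></div>"))
```

Raising `threshold` in this sketch trades recall for fewer false positives, which is the trade-off named in step 3 of Topic ID Implementation.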