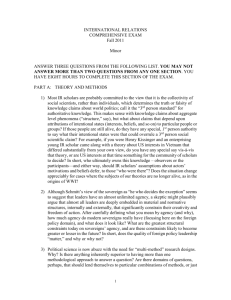

International Relations in the US Academy¹

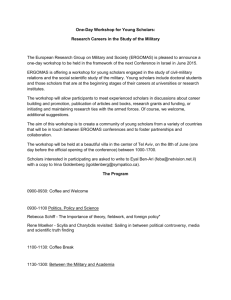
