Change of basis for coordinates

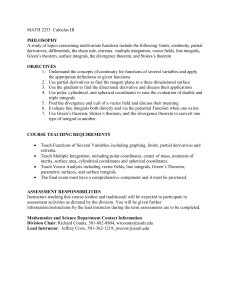


The standard bases for some of our examples are very useful because, as we have seen, it is very easy to find the coordinates of a vector, often “by inspection”, i.e., without calculations.

But often it is necessary or desirable to use a different basis, one that is more suited to a particular problem. So it will be useful to be able to convert the coordinates of a vector from one basis to another.

Problem

Given a vector space $V$, a vector $v$, and two different bases, $B$ and $B'$, of $V$, if we know the $B$-coordinates of $v$, namely $[v]_B$, how can we (efficiently) calculate the $B'$-coordinates, $[v]_{B'}$?

$$[v]_B \xrightarrow{\ \text{change-of-basis}\ } [v]_{B'}$$

Of course, we can always do this in two steps:

1. Given the $B$-coordinates $[v]_B = \begin{bmatrix} c_1 \\ \vdots \\ c_n \end{bmatrix}$, we can calculate $v$ directly:

$$v = c_1 b_1 + \cdots + c_n b_n$$

2. Then find the $B'$-coordinates of $v$.

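For example, let $V = \mathbb{R}^2$,

$$B = \left\{ \begin{bmatrix} 2 \\ 1 \end{bmatrix}, \begin{bmatrix} -1 \\ 2 \end{bmatrix} \right\} \quad \text{and} \quad B' = \left\{ \begin{bmatrix} 0 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right\}.$$

(You can verify that these are bases.) Now suppose we know that the $B$-coordinates of some vector $v$ are $[v]_B = \begin{bmatrix} 3 \\ -4 \end{bmatrix}$. Then

1. $v = 3b_1 - 4b_2 = 3\begin{bmatrix} 2 \\ 1 \end{bmatrix} - 4\begin{bmatrix} -1 \\ 2 \end{bmatrix} = \begin{bmatrix} 10 \\ -5 \end{bmatrix}$.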

2. Now we must find the coordinates of $v$ with respect to $B'$. If $[v]_{B'} = (d_1, d_2)$, we set $v = d_1 b'_1 + d_2 b'_2$:

$$\begin{bmatrix} 10 \\ -5 \end{bmatrix} = d_1 \begin{bmatrix} 0 \\ 1 \end{bmatrix} + d_2 \begin{bmatrix} 1 \\ 1 \end{bmatrix}$$

This is easily solved to give $d_1 = -15$ and $d_2 = 10$. So

$$[v]_{B'} = \begin{bmatrix} -15 \\ 10 \end{bmatrix}.$$

For another example, let $V = P_2$, $B = \{\, 1 - 2x + x^2,\ x + x^2,\ 1 - x^2 \,\}$, and $B' = \{\, 1 + x,\ x,\ 1 + x + x^2 \,\}$. You can easily check that these are both bases of $V$. Now suppose we are told that $p$ is a vector whose $B$-coordinates are

$$[p]_B = \begin{bmatrix} 3 \\ 2 \\ -1 \end{bmatrix},$$

and are asked to find the $B'$-coordinates. First, we calculate $p$:

$$p = 3b_1 + 2b_2 - 1b_3 = 3(1 - 2x + x^2) + 2(x + x^2) - (1 - x^2) = 2 - 4x + 6x^2$$

Next, we need to find the $B'$-coordinates of $p$, so we set $p = c_1 b'_1 + c_2 b'_2 + c_3 b'_3$, or

$$2 - 4x + 6x^2 = c_1 (1 + x) + c_2 (x) + c_3 (1 + x + x^2)$$

and solve for $c_1, c_2, c_3$.

This leads to

$$\left[\begin{array}{ccc|c} 1 & 0 & 1 & 2 \\ 1 & 1 & 1 & -4 \\ 0 & 0 & 1 & 6 \end{array}\right] \longrightarrow \cdots \longrightarrow \left[\begin{array}{ccc|c} 1 & 0 & 0 & -4 \\ 0 & 1 & 0 & -6 \\ 0 & 0 & 1 & 6 \end{array}\right].$$

So we have $c_1 = -4$, $c_2 = -6$, $c_3 = 6$, and

$$[p]_{B'} = \begin{bmatrix} -4 \\ -6 \\ 6 \end{bmatrix}.$$

This two-step procedure always works, but if we have a lot of vectors to convert, we would have to set up and solve a system of equations for each one. Next, we will develop a method that, once set up, will convert coordinates for any vector with just a matrix multiplication.
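The two-step procedure used in both examples can be sketched in Python. This is only an illustration under stated assumptions: the helper names `solve` and `two_step` are hypothetical, vectors are given as tuples of standard coordinates (for $P_2$, the coefficients of $1, x, x^2$), and exact arithmetic is done with the standard-library `fractions` module.

```python
from fractions import Fraction

def solve(columns, target):
    """Solve x1*columns[0] + ... + xn*columns[n-1] = target by Gauss-Jordan elimination."""
    n = len(columns)
    # Augmented matrix [columns | target], one row per coordinate.
    rows = [[Fraction(columns[j][i]) for j in range(n)] + [Fraction(target[i])]
            for i in range(n)]
    for col in range(n):
        # A nonzero pivot exists whenever the columns form a basis.
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [x / pivot_value for x in rows[col]]
        for r in range(n):
            if r != col:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [rows[i][n] for i in range(n)]

def two_step(basis_from, basis_to, coords):
    # Step 1: rebuild v from its coordinates in the first basis.
    dim = len(basis_from[0])
    v = [sum(c * b[i] for c, b in zip(coords, basis_from)) for i in range(dim)]
    # Step 2: solve for the coordinates of v in the second basis.
    return v, solve(basis_to, v)

# The R^2 example: B = {(2, 1), (-1, 2)}, B' = {(0, 1), (1, 1)}, [v]_B = (3, -4).
B = [(2, 1), (-1, 2)]
B_prime = [(0, 1), (1, 1)]
v, coords = two_step(B, B_prime, [3, -4])
print(v, coords)
```

For the $\mathbb{R}^2$ example this reproduces $v = (10, -5)$ and $[v]_{B'} = (-15, 10)$. Note that step 2 solves a fresh linear system for every vector, which is exactly the cost the transition matrix avoids.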

Let $V$ be any space, with bases $B$ and $B'$. For $v \in V$, let

$$[v]_B = \begin{bmatrix} c_1 \\ \vdots \\ c_n \end{bmatrix},$$

which means that

$$v = c_1 b_1 + \cdots + c_n b_n.$$

Now remember that the coordinate isomorphism preserves linear combinations, so, switching to $B'$-coordinates, we have

$$[v]_{B'} = [c_1 b_1 + \cdots + c_n b_n]_{B'} = c_1 [b_1]_{B'} + \cdots + c_n [b_n]_{B'}.$$

This linear combination can be expressed in matrix form. Let $P$ be the $n \times n$ matrix whose $j$-th column is $[b_j]_{B'}$. Then

$$P[v]_B = \begin{bmatrix} [b_1]_{B'} & [b_2]_{B'} & \cdots & [b_n]_{B'} \end{bmatrix} \begin{bmatrix} c_1 \\ c_2 \\ \vdots \\ c_n \end{bmatrix} = c_1 [b_1]_{B'} + \cdots + c_n [b_n]_{B'}.$$

But this is just $[v]_{B'}$. Therefore we have proved the following theorem.

Theorem (Change of basis formula)

$$[v]_{B'} = P[v]_B,$$

where $P$ is the transition matrix from $B$ to $B'$:

$$P = \begin{bmatrix} [b_1]_{B'} & [b_2]_{B'} & \cdots & [b_n]_{B'} \end{bmatrix},$$

whose $j$-th column is $[b_j]_{B'}$.

For clarity, we will use the notation

$$P = P_{B \to B'}$$

to emphasize that the transition is from $B$ to $B'$. So

$$P_{B \to B'} = \begin{bmatrix} [b_1]_{B'} & [b_2]_{B'} & \cdots & [b_n]_{B'} \end{bmatrix}.$$

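In order to compute the transition matrix $P_{B \to B'}$, we need to compute $B'$-coordinates for all the basis vectors in $B$, but once this is done, the coordinates of any vector can be found by matrix multiplication.

In the first example, $V = \mathbb{R}^2$, $B = \left\{ \begin{bmatrix} 2 \\ 1 \end{bmatrix}, \begin{bmatrix} -1 \\ 2 \end{bmatrix} \right\}$, $B' = \left\{ \begin{bmatrix} 0 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right\}$, we need to find $[b_1]_{B'}$ and $[b_2]_{B'}$, so we will row-reduce two augmented matrices:

$$[B' \mid b_1] = \left[\begin{array}{cc|c} 0 & 1 & 2 \\ 1 & 1 & 1 \end{array}\right] \xrightarrow{R_1 \leftrightarrow R_2} \left[\begin{array}{cc|c} 1 & 1 & 1 \\ 0 & 1 & 2 \end{array}\right] \xrightarrow{R_1 - R_2} \left[\begin{array}{cc|c} 1 & 0 & -1 \\ 0 & 1 & 2 \end{array}\right]$$

$$[B' \mid b_2] = \left[\begin{array}{cc|c} 0 & 1 & -1 \\ 1 & 1 & 2 \end{array}\right] \xrightarrow{R_1 \leftrightarrow R_2} \left[\begin{array}{cc|c} 1 & 1 & 2 \\ 0 & 1 & -1 \end{array}\right] \xrightarrow{R_1 - R_2} \left[\begin{array}{cc|c} 1 & 0 & 3 \\ 0 & 1 & -1 \end{array}\right]$$

So $[b_1]_{B'} = \begin{bmatrix} -1 \\ 2 \end{bmatrix}$, $[b_2]_{B'} = \begin{bmatrix} 3 \\ -1 \end{bmatrix}$, and

$$P = P_{B \to B'} = \begin{bmatrix} [b_1]_{B'} & [b_2]_{B'} \end{bmatrix} = \begin{bmatrix} -1 & 3 \\ 2 & -1 \end{bmatrix}.$$

In the example earlier, we were given $[v]_B = \begin{bmatrix} 3 \\ -4 \end{bmatrix}$. Now we can easily find $[v]_{B'}$ using $P$:

$$[v]_{B'} = P_{B \to B'} [v]_B = \begin{bmatrix} -1 & 3 \\ 2 & -1 \end{bmatrix} \begin{bmatrix} 3 \\ -4 \end{bmatrix} = \begin{bmatrix} -3 - 12 \\ 6 + 4 \end{bmatrix} = \begin{bmatrix} -15 \\ 10 \end{bmatrix},$$

as before.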
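As a small illustration of the change of basis formula, the following Python sketch assembles $P_{B \to B'}$ from the two coordinate columns of the $\mathbb{R}^2$ example and applies it to $[v]_B$. The helper names `matrix_from_columns` and `mat_vec` are hypothetical, chosen only for this sketch.

```python
# B'-coordinates of b1 and b2 from the R^2 example; these become the columns of P.
b1_in_Bp = [-1, 2]
b2_in_Bp = [3, -1]

def matrix_from_columns(cols):
    """Build a matrix (list of rows) whose j-th column is cols[j]."""
    return [[col[i] for col in cols] for i in range(len(cols[0]))]

def mat_vec(M, x):
    """Multiply matrix M by the column vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

P = matrix_from_columns([b1_in_Bp, b2_in_Bp])
v_B = [3, -4]
v_Bp = mat_vec(P, v_B)

# The same result written as the linear combination c1*[b1]_B' + c2*[b2]_B'.
combo = [v_B[0] * a + v_B[1] * b for a, b in zip(b1_in_Bp, b2_in_Bp)]
print(P, v_Bp, combo)
```

Once `P` is built, converting another vector costs only one call to `mat_vec`; no new system has to be solved.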

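Notice that when finding $P$, we did

$$[B' \mid b_1] = \left[\begin{array}{cc|c} 0 & 1 & 2 \\ 1 & 1 & 1 \end{array}\right] \xrightarrow{R_1 \leftrightarrow R_2} \left[\begin{array}{cc|c} 1 & 1 & 1 \\ 0 & 1 & 2 \end{array}\right] \xrightarrow{R_1 - R_2} \left[\begin{array}{cc|c} 1 & 0 & -1 \\ 0 & 1 & 2 \end{array}\right]$$

$$[B' \mid b_2] = \left[\begin{array}{cc|c} 0 & 1 & -1 \\ 1 & 1 & 2 \end{array}\right] \xrightarrow{R_1 \leftrightarrow R_2} \left[\begin{array}{cc|c} 1 & 1 & 2 \\ 0 & 1 & -1 \end{array}\right] \xrightarrow{R_1 - R_2} \left[\begin{array}{cc|c} 1 & 0 & 3 \\ 0 & 1 & -1 \end{array}\right]$$

and both systems have the same left-hand parts, i.e., the same coefficient matrices, and so the row operations involved are the same. Therefore we can use the technique mentioned in Section 1.6 of the text (“Linear Systems with a Common Coefficient Matrix”, p. 61, and Example 2 following). So we apply elimination to the “doubly augmented matrix”:

$$\left[\begin{array}{cc|cc} 0 & 1 & 2 & -1 \\ 1 & 1 & 1 & 2 \end{array}\right] \xrightarrow{R_1 \leftrightarrow R_2} \left[\begin{array}{cc|cc} 1 & 1 & 1 & 2 \\ 0 & 1 & 2 & -1 \end{array}\right] \xrightarrow{R_1 - R_2} \left[\begin{array}{cc|cc} 1 & 0 & -1 & 3 \\ 0 & 1 & 2 & -1 \end{array}\right],$$

and immediately get $P_{B \to B'} = \begin{bmatrix} -1 & 3 \\ 2 & -1 \end{bmatrix}$.

There are a few theorems that can help simplify finding transition matrices, as well as being of theoretical importance later. The first concerns a change of basis followed by a second change. These two changes can be rolled together into a single one:

Theorem

Let $B$, $B'$, and $B''$ be bases of a vector space $V$. Then

$$(P_{B' \to B''})(P_{B \to B'}) = P_{B \to B''}$$

Proof.

Recall that the $j$-th column of a product $AB$ is $A$ times the $j$-th column of $B$ (see text, page 31). So the $j$-th column of $P = (P_{B' \to B''})(P_{B \to B'})$ is

$$P_{B' \to B''} [b_j]_{B'}.$$

But this is the formula for the $B''$-coordinates of $b_j$. So the $j$-th column of $P$ is $[b_j]_{B''}$, which is precisely the $j$-th column of $P_{B \to B''}$.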

In the case where the two bases $B$ and $B'$ are the same, “change of basis” is no change at all, and the matrix that does this is the identity matrix. So we have

Theorem

$$P_{B \to B} = I$$

Proof.

The $j$-th column of $P_{B \to B}$ is $[b_j]_B$. But clearly $b_j = 0b_1 + \cdots + 1b_j + \cdots + 0b_n$, which is equivalent to

$$[b_j]_B = \begin{bmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{bmatrix} = e_j.$$

And $e_j$ is the $j$-th column of the identity matrix. Since $P_{B \to B}$ and $I$ have the same $j$-th column for every $j$, they are equal.

Finally, by combining these two theorems we get the following.

Theorem

For any bases $B$ and $B'$,

$$P_{B' \to B} = (P_{B \to B'})^{-1}$$

Proof.

$$(P_{B' \to B})(P_{B \to B'}) = P_{B \to B} = I$$
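The inverse theorem can be checked numerically on the $\mathbb{R}^2$ example. The sketch below is only an illustration: the helpers `mat_mul` and `inverse_2x2` are hypothetical names, and the inverse is computed with the standard $2 \times 2$ adjugate formula rather than by a method taken from the text.

```python
from fractions import Fraction

def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def inverse_2x2(M):
    """Inverse of an invertible 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

P_B_to_Bp = [[-1, 3], [2, -1]]           # transition matrix from the R^2 example
P_Bp_to_B = inverse_2x2(P_B_to_Bp)       # claimed to be P_{B' -> B}

# Column j of P_{B' -> B} should hold the B-coordinates of b'_j:
# recombining with b1 = (2, 1), b2 = (-1, 2) must give back (0, 1) and (1, 1).
B = [(2, 1), (-1, 2)]
rebuilt = [tuple(sum(P_Bp_to_B[k][j] * B[k][i] for k in range(2)) for i in range(2))
           for j in range(2)]
print(P_Bp_to_B, rebuilt, mat_mul(P_Bp_to_B, P_B_to_Bp))
```

The product of the two transition matrices comes out as the identity, and the rebuilt vectors are the vectors of $B'$, as the theorem predicts.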

For “standard” bases, finding coordinates is easy, so finding a transition to a standard basis is also easy:

Example: Let $V = P_2$, $B = \{\, 2 - x + x^2,\ 1 + 2x - x^2,\ 1 + x - x^2 \,\}$ and $B' = \{\, 1, x, x^2 \,\}$. Find $P_{B \to B'}$.

We only need to read off the coefficients of the polynomials in $B$ to get the columns of $P$:

$$P_{B \to B'} = \begin{bmatrix} 2 & 1 & 1 \\ -1 & 2 & 1 \\ 1 & -1 & -1 \end{bmatrix}$$

Then to get the reverse transition matrix $P_{B' \to B}$, by the previous theorem, we only need to find the inverse:

$$P_{B' \to B} = (P_{B \to B'})^{-1} = \begin{bmatrix} 2 & 1 & 1 \\ -1 & 2 & 1 \\ 1 & -1 & -1 \end{bmatrix}^{-1} = \cdots = \begin{bmatrix} 1/3 & 0 & 1/3 \\ 0 & 1 & 1 \\ 1/3 & -1 & -5/3 \end{bmatrix}$$

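We can also use these two theorems together, when neither basis is standard.

Consider again $V = \mathbb{R}^2$ with $B = \left\{ \begin{bmatrix} 2 \\ 1 \end{bmatrix}, \begin{bmatrix} -1 \\ 2 \end{bmatrix} \right\}$, $B' = \left\{ \begin{bmatrix} 0 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right\}$. Then if $S$ is the standard basis of $V$, we easily get

$$P_{B \to S} = \begin{bmatrix} 2 & -1 \\ 1 & 2 \end{bmatrix} \quad \text{and} \quad P_{B' \to S} = \begin{bmatrix} 0 & 1 \\ 1 & 1 \end{bmatrix}.$$

Then by the “repeated change” theorem:

$$P_{B \to B'} = P_{S \to B'} P_{B \to S}$$

But we also have $P_{S \to B'} = (P_{B' \to S})^{-1}$, so

$$P_{B \to B'} = (P_{B' \to S})^{-1} P_{B \to S} = \begin{bmatrix} 0 & 1 \\ 1 & 1 \end{bmatrix}^{-1} \begin{bmatrix} 2 & -1 \\ 1 & 2 \end{bmatrix}.$$

This calculation can be simplified by the following observation.

Theorem

Let $A$ be an invertible $n \times n$ matrix and let $B$ be any $n \times k$ matrix. Then $A$ is row-equivalent to the identity matrix. Suppose, by elementary row operations, that

$$[\, A \mid B \,] \longrightarrow \cdots \longrightarrow [\, I \mid C \,].$$

Then $C = A^{-1}B$.

Proof.

This is because the “multisystem” $AX = B$ is equivalent to $IX = C$. But the solution to $AX = B$ is $X = A^{-1}B$, so $C = X = A^{-1}B$.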

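We can apply this to finding a transition matrix by way of a standard basis, as in the previous example.

“An Efficient Method . . . p. 220”

Let $B$ and $B'$ be bases of $V$, and let $S$ be the “standard” basis of $V$. Then

$$P_{B \to B'} = (P_{S \to B'})(P_{B \to S}) = (P_{B' \to S})^{-1}(P_{B \to S})$$

So to calculate this, (1) form the matrix

$$[\, P_{B' \to S} \mid P_{B \to S} \,].$$

Then (2) convert this to reduced row-echelon form. The result will be

$$[\, P_{B' \to S} \mid P_{B \to S} \,] \longrightarrow \cdots \longrightarrow [\, I \mid P_{B \to B'} \,].$$

(3) Extract the right-hand side.

Applying this method to the previous example, we had

$$P_{B \to S} = \begin{bmatrix} 2 & -1 \\ 1 & 2 \end{bmatrix} \quad \text{and} \quad P_{B' \to S} = \begin{bmatrix} 0 & 1 \\ 1 & 1 \end{bmatrix}.$$

So we reduce

$$[\, P_{B' \to S} \mid P_{B \to S} \,] = \left[\begin{array}{cc|cc} 0 & 1 & 2 & -1 \\ 1 & 1 & 1 & 2 \end{array}\right] \longrightarrow \cdots \longrightarrow \left[\begin{array}{cc|cc} 1 & 0 & -1 & 3 \\ 0 & 1 & 2 & -1 \end{array}\right].$$

And

$$P_{B \to B'} = \begin{bmatrix} -1 & 3 \\ 2 & -1 \end{bmatrix}.$$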
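The three steps of the method (form $[\, P_{B' \to S} \mid P_{B \to S} \,]$, row-reduce, extract the right-hand block) can be sketched in Python as follows. The function name `transition_matrix` is hypothetical, basis vectors are assumed to be given in standard coordinates, and the code assumes both lists really are bases, so a nonzero pivot always exists.

```python
from fractions import Fraction

def transition_matrix(basis_from, basis_to):
    """Row-reduce [P_{B'->S} | P_{B->S}] to [I | P_{B->B'}] and return the right block.

    basis_from plays the role of B, basis_to the role of B'.
    """
    n = len(basis_from)
    # Step 1: row i holds the i-th standard coordinate of every basis vector,
    # first for basis_to (left block), then for basis_from (right block).
    rows = [[Fraction(b[i]) for b in basis_to] + [Fraction(b[i]) for b in basis_from]
            for i in range(n)]
    # Step 2: Gauss-Jordan elimination on the left block.
    for col in range(n):
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [x / pivot_value for x in rows[col]]
        for r in range(n):
            if r != col:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    # Step 3: the left block is now I; extract the right block.
    return [row[n:] for row in rows]

# The R^2 example: B = {(2, 1), (-1, 2)}, B' = {(0, 1), (1, 1)}.
P = transition_matrix([(2, 1), (-1, 2)], [(0, 1), (1, 1)])
print(P)
```

For the $\mathbb{R}^2$ example this returns the matrix with rows $(-1, 3)$ and $(2, -1)$. The same function applies to $P_2$ if each polynomial is entered as its coefficient tuple with respect to $1, x, x^2$.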

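In our second example above, $V = P_2$, $B = \{\, 1 - 2x + x^2,\ x + x^2,\ 1 - x^2 \,\}$, and $B' = \{\, 1 + x,\ x,\ 1 + x + x^2 \,\}$. Then if $S = \{\, 1, x, x^2 \,\}$, we have

$$P_{B \to S} = \begin{bmatrix} 1 & 0 & 1 \\ -2 & 1 & 0 \\ 1 & 1 & -1 \end{bmatrix}, \quad P_{B' \to S} = \begin{bmatrix} 1 & 0 & 1 \\ 1 & 1 & 1 \\ 0 & 0 & 1 \end{bmatrix}.$$

So we reduce

$$[\, P_{B' \to S} \mid P_{B \to S} \,] = \left[\begin{array}{ccc|ccc} 1 & 0 & 1 & 1 & 0 & 1 \\ 1 & 1 & 1 & -2 & 1 & 0 \\ 0 & 0 & 1 & 1 & 1 & -1 \end{array}\right] \longrightarrow \cdots \longrightarrow \left[\begin{array}{ccc|ccc} 1 & 0 & 0 & 0 & -1 & 2 \\ 0 & 1 & 0 & -3 & 1 & -1 \\ 0 & 0 & 1 & 1 & 1 & -1 \end{array}\right].$$

And we have

$$P_{B \to B'} = \begin{bmatrix} 0 & -1 & 2 \\ -3 & 1 & -1 \\ 1 & 1 & -1 \end{bmatrix}.$$

And we can use this to find $[p]_{B'}$ where we were given $[p]_B = \begin{bmatrix} 3 \\ 2 \\ -1 \end{bmatrix}$:

$$[p]_{B'} = P_{B \to B'} [p]_B = \begin{bmatrix} 0 & -1 & 2 \\ -3 & 1 & -1 \\ 1 & 1 & -1 \end{bmatrix} \begin{bmatrix} 3 \\ 2 \\ -1 \end{bmatrix} = \begin{bmatrix} -4 \\ -6 \\ 6 \end{bmatrix},$$

in agreement with our earlier answer.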

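Here is another example, from the text (#16, p. 224).

Let $V = \mathbb{R}^3$, $B = \{\, (-3, 0, -3),\ (-3, 2, -1),\ (1, 6, -1) \,\}$, and $B' = \{\, (-6, -6, 0),\ (-2, -6, 4),\ (-2, -3, 7) \,\}$.

(a) Find $P_{B \to B'}$.

(b) If $w = (-5, 8, -5)$, find $[w]_B$. Then use (a) to find $[w]_{B'}$.

(c) Check by computing $[w]_{B'}$ directly.

(a) Using the standard basis $S$, we form $[\, P_{B' \to S} \mid P_{B \to S} \,]$ and reduce:

$$\left[\begin{array}{ccc|ccc} -6 & -2 & -2 & -3 & -3 & 1 \\ -6 & -6 & -3 & 0 & 2 & 6 \\ 0 & 4 & 7 & -3 & -1 & -1 \end{array}\right] \longrightarrow \cdots \longrightarrow \left[\begin{array}{ccc|ccc} 1 & 0 & 0 & 3/4 & 3/4 & 1/12 \\ 0 & 1 & 0 & -3/4 & -17/12 & -17/12 \\ 0 & 0 & 1 & 0 & 2/3 & 2/3 \end{array}\right]$$

So

$$P_{B \to B'} = \begin{bmatrix} 3/4 & 3/4 & 1/12 \\ -3/4 & -17/12 & -17/12 \\ 0 & 2/3 & 2/3 \end{bmatrix}.$$

(b) To express $w$ as a linear combination of $B$, we reduce $[\, B \mid w \,]$:

$$\left[\begin{array}{ccc|c} -3 & -3 & 1 & -5 \\ 0 & 2 & 6 & 8 \\ -3 & -1 & -1 & -5 \end{array}\right] \longrightarrow \cdots \longrightarrow \left[\begin{array}{ccc|c} 1 & 0 & 0 & 1 \\ 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 \end{array}\right]$$

So $[w]_B = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$.

(Check: $1b_1 + 1b_2 + 1b_3 = \begin{bmatrix} -3 \\ 0 \\ -3 \end{bmatrix} + \begin{bmatrix} -3 \\ 2 \\ -1 \end{bmatrix} + \begin{bmatrix} 1 \\ 6 \\ -1 \end{bmatrix} = \begin{bmatrix} -5 \\ 8 \\ -5 \end{bmatrix} = w$.)

Now to find $[w]_{B'}$, we have

$$[w]_{B'} = P_{B \to B'} [w]_B = \begin{bmatrix} 3/4 & 3/4 & 1/12 \\ -3/4 & -17/12 & -17/12 \\ 0 & 2/3 & 2/3 \end{bmatrix} \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} = \begin{bmatrix} 19/12 \\ -43/12 \\ 4/3 \end{bmatrix}.$$

(c) We can do this the same way we found $[w]_B$:

$$[\, P_{B' \to S} \mid [w]_S \,] = \left[\begin{array}{ccc|c} -6 & -2 & -2 & -5 \\ -6 & -6 & -3 & 8 \\ 0 & 4 & 7 & -5 \end{array}\right] \longrightarrow \cdots \longrightarrow \left[\begin{array}{ccc|c} 1 & 0 & 0 & 19/12 \\ 0 & 1 & 0 & -43/12 \\ 0 & 0 & 1 & 4/3 \end{array}\right]$$

Comment: The instructions said to check by “computing $[w]_{B'}$ directly”. But there is an easier way. Check by using the definition of coordinates, i.e., show that

$$\frac{19}{12} b'_1 - \frac{43}{12} b'_2 + \frac{4}{3} b'_3 = w.$$

Do this.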
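The suggested check by the definition of coordinates can also be carried out mechanically. The following Python sketch uses exact fractions and the data of this exercise; the variable names and the helper `mat_vec` are hypothetical, introduced only for the illustration.

```python
from fractions import Fraction as F

# Basis B' and the coordinates [w]_{B'} obtained in part (b).
B_prime = [(-6, -6, 0), (-2, -6, 4), (-2, -3, 7)]
w_coords = [F(19, 12), F(-43, 12), F(4, 3)]

# Definition of coordinates: w should equal the sum of coords[j] * b'_j.
w = [sum(c * b[i] for c, b in zip(w_coords, B_prime)) for i in range(3)]

# Cross-check part (b): P_{B->B'} applied to [w]_B = (1, 1, 1).
P = [[F(3, 4), F(3, 4), F(1, 12)],
     [F(-3, 4), F(-17, 12), F(-17, 12)],
     [0, F(2, 3), F(2, 3)]]

def mat_vec(M, x):
    """Multiply matrix M (list of rows) by the column vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

print(w, mat_vec(P, [1, 1, 1]))
```

The linear combination evaluates to $(-5, 8, -5) = w$, and the matrix product reproduces $(19/12, -43/12, 4/3)$, so both routes agree.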