

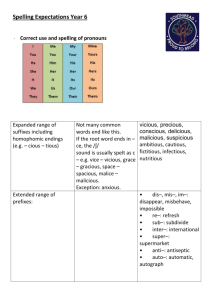

MEF: Malicious Email Filter
A UNIX Mail Filter that Detects Malicious Windows Executables

Matthew G. Schultz, Eleazar Eskin, and Salvatore J. Stolfo
Computer Science Department, Columbia University
{mgs,eeskin,sal}@cs.columbia.edu

Abstract

We present Malicious Email Filter (MEF), a freely distributed malicious binary filter incorporated into Procmail that can detect malicious Windows attachments by integrating with a UNIX mail server. The system has three capabilities: detection of known and unknown malicious attachments, automatic propagation of detection models, and the ability to monitor the spread of malicious attachments.

The system filters malicious attachments from emails by using detection models obtained from data mining over known malicious attachments. It leverages research in data mining applied to malicious executables, which allows the detection of previously unseen malicious attachments. These new malicious attachments are programs that are most likely undetectable by current virus scanners because detection signatures for them have not yet been generated. The system also allows for the automatic propagation of detection models from a central server. Finally, the system allows for monitoring and measurement of the spread of malicious attachments. The system will be released under GPL.

1 Introduction

A serious security risk today is the propagation of malicious executables through email attachments. A malicious executable is defined to be a program that performs a malicious function, such as compromising a system’s security, damaging a system, or obtaining sensitive information without the user’s permission. Recently there have been some high profile incidents with malicious email attachments such as the ILOVEYOU virus and its clones. These malicious attachments caused an incredible amount of damage in a very short time.

The Malicious Email Filter (MEF) project provides a tool for the protection of systems against malicious email attachments. A freely available, open source email filter that operates from UNIX to detect malicious Windows binaries has many advantages. Operating from a UNIX host, the email filter could automatically filter the email each host receives. The UNIX server could either wrap the malicious email with a warning addressed to the user, or it could block the email. All of this could be done without the server’s users having to scan attachments themselves or having to download updates for their virus scanners. This way the system administrator can be responsible for updating the filter instead of relying on end users.

The standard approach to protecting against malicious emails is to use a virus scanner. Commercial virus scanners can effectively detect known malicious executables, but unfortunately they cannot detect unknown malicious executables. The reason for this is that most of these virus scanners are signature based. For each known malicious binary, the scanner contains a byte sequence that identifies the malicious binary. However, an unknown malicious binary, one without a pre-existing signature, will most likely go undetected.

We built upon research at Columbia University on data mining methods to detect malicious binaries [5]. The idea is that by using data mining, knowledge of known malicious executables can be generalized to detect unknown malicious executables. Data mining methods are ideal for this purpose because they detect patterns in large amounts of data, such as byte code, and use these patterns to detect future instances in similar data along with detecting known instances. Our framework used classifiers to detect malicious executables. A classifier is a rule set, or detection model, generated by the data mining algorithm that was trained over a given set of training data.

The goal of this project is to design a freely distributed data mining based filter which integrates with Procmail’s pre-existing security filter [1]. This filter’s purpose is to detect both the set of known malicious binaries and a set of previously unseen, but similar, malicious binaries. The system also provides a mechanism for automatically updating the detection models from a central server. Finally, the filter reports statistics on the malicious binaries that it processes, which allows for the monitoring and measuring of the propagation of malicious attachments across email hosts. The system will be released under GPL.

Since the methods we describe are probabilistic, we could also tell if a binary is borderline. A borderline binary is a program that has similar probabilities for both classes (i.e. it could be a malicious executable or a benign program). If it is a borderline case then there is an option in the network filter to send a copy of the executable to a central repository such as CERT. There, it can be examined by human experts. When combined with the automatic distribution of detection models, this can reduce the time a host is vulnerable to a malicious attachment.

The detection model generation worked as follows. The binaries were first statically analyzed to extract byte sequences, and then the classifiers were trained over a subset of the data. This generated a detection model that was tested on a set of previously unseen data. We implemented a traditional, signature-based algorithm to compare its performance with that of the data mining algorithms. Using standard statistical cross-validation techniques, this framework had a detection rate of 97.76%, over double the detection rate of a signature-based scanner.

The organization of the paper is as follows. We first present how the system works and how it is integrated with Procmail. Secondly, we describe how the detection models are updated.
Then we describe how the system can be used for monitoring the propagation of email attachments. At the end, we summarize the research in data mining for creating detection models that can generalize to new and unknown malicious binaries, and report the accuracy and performance of the system.

2 Incorporation into Procmail

MEF filters malicious attachments by replacing the standard virus filter found in Procmail with a data mining generated detection model. Procmail is invoked by the mail server to extract the attachment. Currently the mail server supported is sendmail. This filter first decodes the binary and then examines the binary using a data mining based model. The filter evaluates the binary by comparing all the byte strings found in it to the byte sequences contained in the detection model. A probability of the binary being malicious is calculated, and if it is greater than its likelihood of being benign then the executable is labeled malicious. Otherwise the binary is labeled benign. Depending on the result of the filter, Procmail is used to either pass the mail untouched if the attachment is determined to be not malicious or warn the recipient of the mail that the attachment is malicious.

2.1 Borderline Cases

Borderline binaries are binaries that have similar probabilities of being benign and malicious (e.g. 50% chance it is malicious, and 50% chance it is benign). These binaries can then be analyzed by experts to determine whether they are malicious or not, and subsequently included in the future generation of detection models. A simple metric to detect borderline cases and redirect them to an evaluation party would be to define a borderline case to be a case where the probability it is malicious is above some threshold. There is a tradeoff between the detection rate of the filter and the false positive rate. The detection rate is the percentage of malicious email attachments detected, while the false positive rate is the percentage of the normal attachments labeled as malicious. This threshold can be set based on the policies of the host.

Receiving borderline cases and updating the detection model is an important aspect of the data mining approach. The larger the data set used to generate models, the more accurate the detection models are, because borderline cases are executables that could potentially lower the detection and accuracy rates by being misclassified.

2.2 Update Algorithms

After a number of borderline cases have been received, it may be necessary to generate a new detection model. This would be accomplished by retraining the data mining algorithm over the data set containing the borderline cases along with their correct classification. This would generate a new detection model that, when combined with the old detection model, would detect a larger set of malicious binaries.

The system provides a mechanism for automatically combining detection models. Because of this we can send only the portions of the models that have changed as updates. This is important because the detection models can get very large. However, we want to update the model with each new malicious attachment discovered. In order to avoid constantly sending a large model to the filters, we can just send the information about the new attachment and have it integrated with the original model.

Combining detection models is possible because the underlying representation of the models is probabilistic. To generate a detection model, the algorithm counted the number of times that each byte string appeared in a malicious program versus the number of times that it appeared in a benign program. From these counts the algorithm computes the probability that an attachment is malicious in a method described later in the paper. In order to combine the models, we simply need to sum the counts of the old model with the new information. As shown in Figure 1, in Model A, the old detection model, a byte string occurred 99 times in the malicious class and 1 time in the benign class. In Model B, the update model, the same byte string was found 3 times in the malicious class and 4 times in the benign class. The combination of models A and B would state that the byte string occurred 102 times in the malicious class and 5 times in the benign class. The combination of A and B would be the new detection model after an update.

Model A:
    The byte string occurred in 99 malicious executables
    The byte string occurred in 1 benign executable

Model B:
    The byte string occurred in 3 malicious executables
    The byte string occurred in 4 benign executables

Combined Model:
    The byte string occurred in 102 malicious executables
    The byte string occurred in 5 benign executables

Figure 1: Sample Combined Model resulting from applying the update model, B, to the old model, A.

The mechanism for propagating the detection models is through encrypted email. The Procmail filter can receive the detection model through a secure email sent to the mail server, and then automatically update the model. This email will be addressed and formatted in such a way that the Procmail filter will process it and update the detection models.

4 Methodology for Building Data Mining Detection Models

We gathered a large set of programs from public sources and separated the problem into two classes: malicious and benign executables. Each example in the data set is a Windows or MS-DOS format executable, although the framework we present is applicable to other formats. To standardize our data set, we used McAfee’s [4] virus scanner and labeled our programs as either malicious or benign executables. We split the data set into two subsets: the training set and the test set. The data mining algorithms used the training set while generating the rule sets, and after training we used a test set to test the accuracy of the classifiers over unseen examples.

4.1 Data Set

The data set consisted of a total of 4,301 programs split into 3,301 malicious binaries and 1,000 clean programs. The malicious binaries consisted of viruses, Trojans, and cracker/network tools. There were no duplicate programs in the data set and every example in the set is labeled either malicious or benign by the commercial virus scanner. All labels are assumed to be correct. All programs were gathered either from FTP sites, or personal computers in the Data Mining Lab here at Columbia University. The entire data set is available off our public ftp site ftp://ftp.cs.columbia.edu/pub/mgs28.

3 Monitoring the Propagation of Email Attachments

Tracking the propagation of email attachments would be beneficial in identifying the origin of malicious executables, and in estimating a malicious attachment’s prevalence. The monitoring is done by having each host that is using the system log the malicious attachments, and the borderline attachments, that are sent to and from the host. This logging may or may not contain who the sender or receiver of the mail was, depending on the privacy policy of the host.

In order to log the attachments, we need a way to obtain an identifier for each attachment. We do this by computing a hash function over the byte sequences in the binary, obtaining a unique identifier. The logs of malicious attachments are sent back to the central server. Since there is a unique identifier for each binary, we can measure the propagation of the malicious binaries across hosts by examining their logs. From these logs we can estimate how many copies of each malicious binary are circulating the Internet.

The current method for detailing the propagation of malicious executables is for an administrator to report an attack to an agency such as WildList [9].
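The identifier-and-log scheme described in this section can be sketched as follows. This is only an illustration under stated assumptions: the paper does not name the hash function, so SHA-256 is an arbitrary choice here, and `attachment_id`, `count_sightings`, and the shape of the per-host logs are hypothetical names introduced for the example.

```python
import hashlib
from collections import Counter


def attachment_id(data: bytes) -> str:
    """Return a hex digest that serves as the attachment's identifier.

    SHA-256 is an assumption; the paper only says "a hash function".
    """
    return hashlib.sha256(data).hexdigest()


def count_sightings(host_logs: dict[str, list[str]]) -> Counter:
    """Count, for each identifier, how many distinct hosts logged it.

    host_logs maps a (hypothetical) host name to the list of
    identifiers that host reported to the central server.
    """
    sightings: Counter = Counter()
    for ids in host_logs.values():
        # A host may see the same binary many times; count it once per host.
        sightings.update(set(ids))
    return sightings
```

A central server could then rank identifiers by `count_sightings(...)` to estimate which attachments are most widespread, without needing sender or receiver information from hosts whose privacy policy withholds it.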
The wild list is a list of the propagation of viruses in the wild and a list of the most prevalent viruses. This is not done automatically, but instead is based upon a report issued by an attacked host. Our method would reliably and automatically detail a malicious executable’s spread over the Internet.

4.2 Detection Algorithms

We statically extracted byte sequence features from each binary example for the algorithms to use to generate their detection models. Features in a data mining framework are properties extracted from each example in the data set, such as byte sequences, that a classifier uses to generate detection models. These features were then used by the algorithms to generate detection models. We used hexdump [7], an open source tool that transforms binary files into hexadecimal files. After we generated the hexdumps we had features in the form of Figure 2, where each line represents a short sequence of machine code instructions.

    646e 776f 2e73 0a0d 0024 0000 0000 0000
    454e 3c05 026c 0009 0000 0000 0302 0004
    0400 2800 3924 0001 0000 0004 0004 0006
    000c 0040 0060 021e 0238 0244 02f5 0000
    0001 0004 0000 0802 0032 1304 0000 030a

Figure 2: Example Set of Byte Sequence Features

4.3 Signature-Based Approach

To compare our results with traditional methods we implemented a signature based method. First, we calculated the byte sequences that were only found in the malicious executable class. These byte sequences were then concatenated together to make a unique signature for each malicious executable example. Thus each malicious executable signature contained only byte sequences found in the malicious executable class. To make the signature unique, the byte sequences found in each example were concatenated together to form one signature. This was done because a byte sequence that was only found in one class during training could possibly be found in the other class during testing [2], and lead to false positives when deployed.

Since the virus scanner that we used to label the data set had been trained over every example in our data set, it was necessary to implement a similar signature-based method to compare with the data mining algorithms. There was no way to use an off-the-shelf virus scanner and simulate the detection of new malicious executables, because these commercial scanners contained signatures for all the malicious executables in our data set. In our tests the signature-based algorithm was only allowed to generate signatures over the set of training data to compare them to data mining based techniques. This allowed our data mining framework to be fairly compared to traditional scanners over new data.

4.5 Preliminary Results

To quantitatively express the performance of our method we show tables with the counts for true positives, true negatives, false positives, and false negatives. A true positive, TP, is a malicious example that is correctly tagged as malicious, and a true negative, TN, is a benign example that is correctly classified. A false positive, FP, is a benign program that has been mislabeled by an algorithm as a malicious program, while a false negative, FN, is a malicious executable that has been misclassified as a benign program.

We estimated our results over new executables by using 5-fold cross validation [3]. Cross validation is the standard method to estimate likely predictions over unseen data in data mining. For each set of binary profiles we partitioned the data into 5 equal size partitions. We used 4 of the partitions for training and then evaluated the rule set over the remaining partition. Then we repeated the process 5 times, leaving out a different partition for testing each time. This gave us a reliable measure of our method’s accuracy over unseen data. We averaged the results of these five tests to obtain a good measure of how the algorithm performs over the entire set.
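The evaluation procedure used in this paper, 5-fold cross validation scored by detection rate, false positive rate, and overall accuracy, can be sketched as follows. The rate formulas follow the definitions of TP, TN, FP, and FN given in the text; the function names and the strided way of forming partitions are assumptions made for illustration, since the paper does not say how examples were assigned to partitions.

```python
def rates(tp: int, tn: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (detection rate, false positive rate, overall accuracy)."""
    detection = tp / (tp + fn)            # share of malicious examples caught
    false_positive = fp / (fp + tn)       # share of benign examples flagged
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return detection, false_positive, accuracy


def k_fold_splits(examples: list, k: int = 5):
    """Yield (train, test) pairs, holding out a different partition each time.

    Partitions are formed by striding through the list (an assumption);
    their sizes differ by at most one example.
    """
    folds = [examples[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, test
```

For instance, `rates(1121, 1000, 0, 2180)` reproduces the signature method's reported 33.96% detection rate, 0% false positive rate, and 49.31% overall accuracy.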
4.6 New Executables

To evaluate the algorithms over new executables, the algorithms generated their detection models over the set of training data and then tested their models over the set of test data. This was done five times in accordance with five-fold cross validation.

As shown in Table 1, the data mining algorithm had the higher detection rate of the two methods we tested, 97.76% compared with the signature based method’s detection rate of 33.96%. Along with the higher detection rate, the data mining method had a higher overall accuracy, 96.88% vs. 49.31%. The false positive rate, at 6.01%, was however higher than that of the signature based method, 3.80%.

For the algorithms we plotted the detection rate vs. false positive rate using ROC curves [10]. ROC (Receiver Operating Characteristic) curves are a way of visualizing the trade-offs between detection and false positive rates. In this instance, the ROC curves in Figure 3 show how the data mining method can be configured for different applications. For a false positive rate less than or equal to 1% the detection rate would be greater than 70%, and for a false positive rate greater than 8% the detection rate would be greater than 99%.

4.4 Data Mining Approach

The classifier we incorporated into Procmail was a Naive Bayes classifier [6]. A Naive Bayes classifier computes the likelihood that a program is malicious given the features that are contained in the program. We assumed that there were similar byte sequences in malicious executables that differentiated them from benign programs, and that the class of benign programs had similar sequences that differentiated them from the malicious executables.

We used the Naive Bayes method to compute the probability of an executable being benign or malicious. The Naive Bayes method, however, required more than 1 GB of RAM to generate its detection model.
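A minimal sketch of a count-based Naive Bayes model over byte-sequence lines is given below. It is not the authors' implementation: the class name `CountModel`, the add-one smoothing, and the choice to score only the byte strings present in a binary are assumptions made for illustration. Because the model is nothing more than per-class counts, an update model can be folded in by summing counts, which is the property the paper relies on for distributing updates.

```python
import math
from collections import Counter

LABELS = ("malicious", "benign")


class CountModel:
    """Per-class counts of byte strings and of programs seen."""

    def __init__(self) -> None:
        self.counts = {label: Counter() for label in LABELS}
        self.programs = {label: 0 for label in LABELS}

    def train(self, byte_strings: list[str], label: str) -> None:
        # Count each byte string once per program it appears in.
        self.counts[label].update(set(byte_strings))
        self.programs[label] += 1

    def merge(self, other: "CountModel") -> None:
        # Combining two models is a sum of their counts.
        for label in LABELS:
            self.counts[label].update(other.counts[label])
            self.programs[label] += other.programs[label]

    def log_score(self, byte_strings: list[str], label: str) -> float:
        total = sum(self.programs.values())
        # Add-one smoothing (an assumption) keeps every probability non-zero.
        score = math.log((self.programs[label] + 1) / (total + 2))
        for s in set(byte_strings):
            score += math.log(
                (self.counts[label][s] + 1) / (self.programs[label] + 2)
            )
        return score

    def classify(self, byte_strings: list[str]) -> str:
        # Label malicious only if that class is strictly more likely.
        malicious = self.log_score(byte_strings, "malicious")
        benign = self.log_score(byte_strings, "benign")
        return "malicious" if malicious > benign else "benign"
```

Working in log space avoids numeric underflow when a binary contains many byte strings; the comparison in `classify` mirrors the rule that a binary is labeled malicious when that probability exceeds the probability of it being benign.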
To make the algorithm more efficient we divided the problem into smaller pieces that would fit in memory and trained a Naive Bayes algorithm over each of the subproblems. The subproblem was to classify based on every 6th line of machine code instead of every line in the binary. For this we trained six Naive Bayes classifiers so that every byte-sequence line in the training set had been trained over. We then used a voting algorithm that combined the outputs from the six Naive Bayes methods. The voting algorithm was then used to generate the detection model.

4.7 Known Executables

To evaluate the performance of the algorithms over known executables, the algorithms generated detection models over the entire set of data and then their performance was evaluated by testing over the same examples they had seen during training.

As shown in Table 2, over known executables the methods had the same performance. Each had an accuracy of 100% over the entire data set, correctly classifying all the malicious examples as malicious and all the benign examples as benign. The data mining algorithm detected 100% of known executables because it was merged with the signature based method. Without merging, the data mining algorithm detected 99.87% of the malicious examples and misclassified 2% of the benign binaries as malicious. However, we have the signatures for the binaries that the data mining algorithm misclassified, and the algorithm can include those signatures in the detection model without lowering accuracy over unknown binaries. After the signatures for the executables that were misclassified during training had been generated and included in the detection model, the data mining model had a 100% accuracy rate over known executables.

| Profile Type | True Positives (TP) | True Negatives (TN) | False Positives (FP) | False Negatives (FN) | Detection Rate | False Positive Rate | Overall Accuracy |
|---|---|---|---|---|---|---|---|
| Signature Method | 1121 | 1000 | 0 | 2180 | 33.96% | 0% | 49.31% |
| Data Mining Method | 3191 | 940 | 61 | 74 | 97.76% | 6.01% | 96.88% |

Table 1: These are the results of classifying new malicious programs organized by algorithm and feature. Note the Data Mining Method had a higher detection rate and accuracy while the Signature based method had the lowest false positive rate.

| Profile Type | True Positives (TP) | True Negatives (TN) | False Positives (FP) | False Negatives (FN) | Detection Rate | False Positive Rate | Overall Accuracy |
|---|---|---|---|---|---|---|---|
| Signature Method | 3301 | 1000 | 0 | 0 | 100% | 0% | 100% |
| Data Mining Method | 3301 | 1000 | 0 | 0 | 100% | 0% | 100% |

Table 2: Results of classifying known malicious programs organized by algorithm and feature. The Signature based method and the data mining method had the same results.

Figure 3: Data Mining ROC (detection rate plotted against false positive rate for the Data Mining and Signature Based methods). Note that the Data Mining method has a higher detection rate than the signature method with a greater than 0.5% false positive rate.

5 Data Mining Performance

The system required different time and space complexities for model generation and deployment.

5.1 Training

In order for the data mining algorithm to quickly generate the models, it required all calculations to be done in memory. Using the algorithm took in excess of a gigabyte of RAM. By splitting the data into smaller pieces, the algorithm could be done in memory with a small loss (1.5%) in accuracy and detection rate. The calculations could then be done on a machine with less than 1 GB of RAM. This splitting allowed the model generation to be performed in less than two hours.

5.2 During Deployment

Online evaluation of executables can take place with much less memory required. The models could be stored in smaller pieces on the file system, which would facilitate quicker analysis than having only one large model that needed to be loaded into memory for evaluation of each binary.
Also, by not loading the model into memory during evaluation we avoid the problem of computers with small amounts of memory (128 MB), and avoid taking memory away from the other processes running on the server. Since the algorithm analyzes each line of byte code contained in the binary, the time required for each evaluation varies. However, this can be done efficiently.

6 Conclusions

The first contribution that we presented in this paper was a freely distributed filter for Procmail that detected known malicious Windows executables and previously unknown malicious Windows binaries from UNIX. The detection rate of new executables was over twice that of the traditional signature based methods, 97.76% compared with 33.96%. In addition, the system we presented has the capability of automatically receiving updated detection models and the ability to monitor the propagation of malicious attachments.

One problem with traditional, signature-based methods is that in order to detect a new malicious executable, the program needs to be examined and a signature extracted from it and included in the anti-virus database. The difficulty with this method is that during the time required for a malicious program to be identified, analyzed, and signatures to be distributed, systems are at risk from that program. Our methods may provide a defense during that time. With a low false positive rate the inconvenience to the end user would be minimal while providing ample defense during the time before an update of models is available.

Virus scanners are updated about every month, and 240–300 new malicious executables are created in that time (8–10 a day [8]). Our method would catch roughly 216–270 of those new malicious executables without the need for an update, whereas traditional methods would catch only 87–109. Our method more than doubles the detection rate of signature based methods.

References

[1] John Hardin. Enhancing E-Mail Security With Procmail. Online publication, 2000. http://www.impsec.org/email-tools/procmailsecurity.html

[2] Jeffrey O. Kephart and William C. Arnold. Automatic Extraction of Computer Virus Signatures. 4th Virus Bulletin International Conference, pages 178-184, 1994.

[3] R. Kohavi. A study of cross-validation and bootstrap for accuracy estimation and model selection. IJCAI, 1995.

[4] McAfee. Homepage - McAfee.com. Online publication, 2000. http://www.mcafee.com

[5] MEF Group. Malicious Email Filter Group. Online publication, 2000. http://www.cs.columbia.edu/ids/mef/

[6] D. Michie, D. J. Spiegelhalter, and C. C. Taylor. Machine learning of rules and trees. In Machine Learning, Neural and Statistical Classification, Ellis Horwood, New York, pages 50-83, 1994.

[7] Peter Miller. Hexdump. Online publication, 2000. http://www.pcug.org.au/ millerp/hexdump.html

[8] Steve R. White, Morton Swimmer, Edward J. Pring, William C. Arnold, David M. Chess, and John F. Morar. Anatomy of a Commercial-Grade Immune System. IBM Research White Paper, 1999. http://www.av.ibm.com/ScientificPapers/White/Anatomy/anatomy.html

[9] Wildlist Organization. Virus description of viruses in the wild. Online publication, 2000. http://www.fsecure.com/virus-info/wild.html

[10] K. H. Zou, W. J. Hall, and D. Shapiro. Smooth nonparametric ROC curves for continuous diagnostic tests. Statistics in Medicine, 1997.
