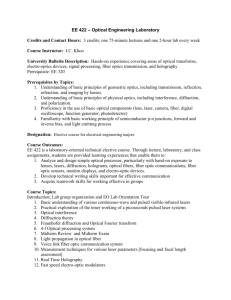

EIBONE Working Group Transmission Technologies
