Case studies in job analysis and training evaluation

advertisement

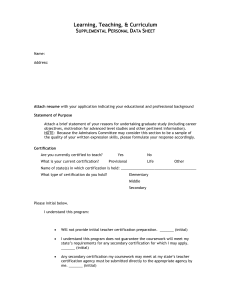

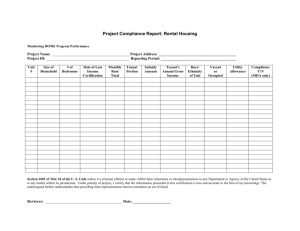

International Journal of Training and Development 5:4 ISSN 1360-3736

Jack McKillip

Job analysis identifies work behaviors and associated tasks that are required for successful job performance. Job analysis is widely recognised as a starting point in a needs analysis for training and development activities. The content validity justification for basing training on a job analysis can be used for training evaluation. Effective training should only have an impact on job tasks significantly related to successful job performance. This article presents two examples of the use of job analysis for training evaluation: evaluation of an information technology certification and evaluation of training for a professional association of librarians.

A job analysis identifies the work behaviors and associated tasks that are required for successful job performance (Harvey, 1990). Once the tasks necessary for a given job are analysed, personnel selection instruments can be constructed and/or training programs developed. The utility of a job analysis does not end with selection and training need assessment, but can be extended to training evaluation. This article illustrates the use of a job analysis for evaluation of training in two settings: an information technology certification, and a potential library certification.

Steps in job analysis

Although its application is flexible, a job analysis usually involves at least four steps (Harvey, 1990). First, work activities or operations performed on the job are elicited widely from job occupants and supervisors. Next, activities and operations are grouped into tasks or duties to simplify and organise statements and to eliminate overlap. Third, the resultant list of job tasks is rated on measures of overall significance to successful performance on the job. Job occupants or supervisors also accomplish this task.
Finally, the knowledge and skills required for successful job performance are identified for development of selection instruments or potential training needs.

As an example, 2,800 computer professionals from 85 countries rated job task statements for the job of system engineer as part of an Internet survey (McKillip, 1998). Overall, 48 statements related six job tasks to each of eight technical areas. Ratings included three scales: importance for successful job completion, difficulty to learn and perform, and frequency of performance. Table 1 presents examples of job task statements and rating scales.

Table 1: Systems engineer job analysis statements and rating scales (McKillip, 1999)

Technical area description:
• Unified directory services such as Active Directory system architecture and Windows NT domains.
• Connectivity between and within systems, system components, and applications. Examples include Exchange Server connectors and SMS senders.
• Data replication such as directory replication and database replication.
• System architecture considerations include the physical and logical location of resources.

Job task statements:
• Analyse the business requirements for the system architecture.
• Design a system architecture solution that meets business requirements.
• Deploy, install, and configure the components of the system architecture.
• Manage the components of the system architecture on an ongoing basis.
• Monitor and optimize the components of the system architecture.
• Diagnose and resolve problems regarding the components of the system architecture.

Significance rating: 5-point importance scale
1. OF LITTLE IMPORTANCE: for tasks that have very little importance in relation to the successful completion of your job.
2. OF SOME IMPORTANCE: for tasks that are of average importance relative to other tasks but are not given high priority.
3. MODERATELY IMPORTANT: for tasks that are of some importance but generally are given low priority.
4. VERY IMPORTANT: for tasks that are important for successful completion of work. These tasks receive higher priority than other tasks, but are not the most important tasks.
5. EXTREMELY IMPORTANT: for tasks that are essential for successful job performance. Such a task must be completed and performed correctly in order to have a satisfactory outcome.

Jack McKillip is Professor of Applied Experimental Psychology at Southern Illinois University, Carbondale, Illinois 62901-6502, USA. Email: mckillip@siu.edu
Blackwell Publishers Ltd. 2001, 108 Cowley Road, Oxford OX4 1JF, UK and 350 Main St., Malden, MA 02148, USA.

Content validity and evaluation

Content validity refers to the extent to which a selection instrument or a training program covers the knowledge and skills required to successfully perform a job. The use of job analysis as the starting point in selection or training provides evidence of content validity for these activities. Goldstein (1993) and McKillip (1997) describe how valid training procedures can be built on a job analysis. The logic is illustrated in Figure 1. The X-axis displays significance for job success and the Y-axis displays training emphasis or need. Crucial training activities focus on knowledge and skills identified in a job analysis as significant to success on the job, i.e., the upper right-hand quadrant of Figure 1. Training on knowledge and skills that are not significant results in a waste of resources that are better directed elsewhere.

Goldstein (1993) urged that the same content validity logic be used for evaluation of training. Effective training should be found to emphasise job tasks significant for successful performance, and not to cover job tasks of low significance. Similarly, McKillip and Cox (1998) used an Importance-Performance matrix like that of Figure 1 to evaluate training.
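The quadrant logic of the matrix can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Task` class, the `quadrant` function, the cut points, the quadrant descriptions, and the sample ratings are all hypothetical and are not taken from Figure 1 or from any of the studies cited.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    importance: float  # job-analysis significance rating (X-axis)
    emphasis: float    # training emphasis or need rating (Y-axis)


def quadrant(task: Task, x_cut: float, y_cut: float) -> str:
    """Place a task in one quadrant of an importance-by-emphasis matrix.

    The cut points are chosen by the analyst (an assumption here);
    the article does not prescribe particular values.
    """
    important = task.importance >= x_cut
    emphasized = task.emphasis >= y_cut
    if important and emphasized:
        return "upper right: significant task, emphasised in training"
    if important:
        return "lower right: significant task, little training"
    if emphasized:
        return "upper left: low-significance task, emphasised in training"
    return "lower left: low-significance task, little training"


# Hypothetical ratings, invented for illustration only.
tasks = [
    Task("design architecture", 4.6, 7.9),
    Task("monitor components", 4.2, 3.1),
    Task("legacy tool upkeep", 1.8, 7.2),
    Task("rare chore", 1.5, 2.0),
]

for t in tasks:
    print(f"{t.name}: {quadrant(t, x_cut=3.0, y_cut=5.0)}")
```

Tasks landing in the upper left correspond to the wasted resources described above, while tasks in the upper right correspond to crucial training activities.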
Job analysis ratings of importance were combined with post-training ratings of training usefulness or coverage of job tasks (performance). A display of tasks along the lower left to upper right diagonal of the figure revealed positive training impact. This use of content validity logic to evaluate training is illustrated in two examples.

Figure 1: Content validity matrix for relating job analysis and training. Adapted from McKillip (1997). Used with permission.

Evaluating an information technology certification (McKillip, 1998)

Microsoft describes the Microsoft Certified Systems Engineer (MCSE) certification on its company web page as relevant for ‘professionals who analyze the business requirements and design and implement the infrastructure for business solutions based on the Windows platform and Microsoft server software. Implementation responsibilities include installing, configuring, and troubleshooting network systems.’ The certification is awarded after an applicant passes a series of core and elective examinations on Microsoft products concerning technical proficiency and expertise in solution design and implementation.

One part of the evaluation of this certification examined whether those who received the certification found it useful for their work on 24 job tasks. Usefulness ratings were correlated with importance ratings from a previous and independently conducted job analysis. A positive evaluation of the certification would be indicated by showing the certification was useful for important job tasks, but not otherwise.

Methodology

The job analysis for the MCSE certification (Educational Testing Service, 1997) included ratings of the importance of 91 job tasks covering eight job areas by 415 MCSEs from 20 countries. A five-point scale was used, from (1) Not important to (5) Extremely important.
From the 91 job tasks, an expert group of MCSEs selected a subset of 24 that covered each of the eight job areas, that were mutually exclusive, and that paralleled the range of importance ratings of the full set of job tasks. During April 1998, 1,671 MCSEs responded to the Internet survey rating each of the 24 job tasks on ‘how useful the certification process was to their own job performance’ on each job task, using a nine-point scale from (1) Not at all useful to (9) Extremely useful. Respondents had been computer professionals for an average of 7.5 years and had held their MCSE certification for 1.5 years. They were overwhelmingly male and the majority was employed in the US (59 per cent). Forty-one per cent of the respondents came from 66 other countries.

Results

Figure 2 uses a scatter diagram to display the importance ratings of 24 job tasks identified from the job analysis (X-axis) with the usefulness ratings from the Internet survey (Y-axis). Twenty-three of the job tasks fell along the lower left to upper right diagonal (note 1), providing impressive support for the effectiveness of the certification. MCSEs rated the certification as most useful for those job areas that were most important. They did not rate the certification as useful for everything that they did: unimportant job areas generated lower usefulness ratings for the certification. The one job task that clearly did not fall along the lower left to upper right diagonal warranted investigation for increased coverage as part of the certification process.

Figure 2: Use of job tasks in evaluation of training. Adapted from McKillip (1998). Used with permission.

Note 1. The correlation between importance as identified in the job analysis and usefulness of certification from the Internet survey was 0.71. This is a large and reliable association.

Evaluating librarian preparation (note 2)

Note 2. Dr. Caryl Cox of Program Evaluation for Education and Communities, 705 W. Elm Street, Carbondale, Illinois 62901 coauthored this study with Dr. McKillip.

Most professional librarians complete a master’s in library science (MLS) degree as part of their professional preparation. Understanding the MLS as a generalist degree, a professional association of librarians evaluated whether further training in the form of a certification was needed to meet the challenge of keeping pace with the evolving demands of their particular type of library work. The evaluation involved a job analysis that identified the job activities and duties of members of the professional association and a survey of its members concerning (1) the importance of the identified job tasks for their professional work and (2) the extent to which the tasks were covered as part of their formal MLS education. Current training would be judged adequate if the tasks that were important for the profession were covered as part of formal study and if unimportant tasks were not covered (i.e., the lower left to upper right diagonal of Figure 1). However, if current training did not cover important tasks (i.e., the lower right quadrant of Figure 1), an association-sponsored certification might be needed.

Methodology

Review of association documents and focus groups with association members and leaders led to the development of 31 job-task statements covering positions held by members of the professional association. These job tasks, along with other questions, were included in a mailed survey of members. Respondents rated the job task statements on ‘important[ce] to your present job as a % librarian’ using a 9-point scale labeled: (1) Not at all, (3) Minimally, (5) Somewhat, (7) Very, and (9) Extremely. Respondents also indicated whether or not the task ‘was covered by academic coursework from an accredited institution’.
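The two survey measures just described can be reduced to one point per job task for a scatter display. The following is a minimal sketch, assuming that importance is summarised by the mean rating and coverage by the percentage of respondents answering yes; the article does not state which summary statistic was used for importance. The task labels, the responses, and the `summarize` function are hypothetical.

```python
from statistics import mean

# Hypothetical responses: (task label, importance on the 1-9 scale,
# whether the task was covered by academic coursework).
responses = [
    ("A1", 9, True), ("A1", 7, True), ("A1", 8, False),
    ("B1", 8, False), ("B1", 9, False), ("B1", 7, True),
]


def summarize(rows):
    """Return per-task mean importance and percentage of respondents covered."""
    by_task = {}
    for task, importance, covered in rows:
        by_task.setdefault(task, []).append((importance, covered))
    summary = {}
    for task, ratings in by_task.items():
        summary[task] = {
            "mean_importance": mean(r[0] for r in ratings),
            # True counts as 1 and False as 0 when summed.
            "pct_covered": 100 * sum(r[1] for r in ratings) / len(ratings),
        }
    return summary


for task, stats in summarize(responses).items():
    print(task, stats)
```

Each task then contributes one (importance, percentage covered) pair, which is the form of data a scatter diagram of importance against coverage requires.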
Three categories of association members were selected by random sampling: those employed in private firms or corporations, those employed in government or court libraries, and those employed in university libraries. Some 527 association members (48 per cent completion rate) responded, closely mirroring characteristics of those sampled on geography and place of employment. Eighty-six per cent of respondents had achieved an MLS or equivalent degree and an equal percentage agreed with the statement: ‘[members of this profession of] librarians should have an MLS or equivalent library degree’. Results reported in the following section were restricted to respondents with an MLS degree.

Results

Figure 3 uses a scatter diagram to display the importance ratings of the 31 job tasks (identified by general area) by the percentage of respondents who had received formal academic training for the task. Clearly, most job tasks do not fall in the lower left and upper right quadrants of the figure! Close inspection shows several meaningful and important patterns. For example, tasks in the area of Reference, Research, and Patron Services (A) follow the effective-training pattern along the lower left to upper right diagonal. Most respondents received formal training on the most important tasks in this area and did not receive formal training on this area’s less important tasks. By contrast, tasks in the areas of Library Management (B) and of Information Technology (C) are concentrated in the lower right quadrant, the ‘Potential Need’ quadrant of Figure 1. If the professional association were to launch a certification, these areas should be explored.

Discussion

Neither training needs assessment nor training evaluation is easy. The logic and effort used on a job analysis to support valid need assessment can also be used for training evaluation.
As the two examples illustrate, Goldstein’s (1993) suggestion holds: the content validity link between job analysis and training needs assessment can fruitfully be extended to link job analysis and training evaluation. Requirements for the link include a thorough job analysis of the position for which training is conducted. Analysis should identify job tasks that are important as well as those that are unimportant for successful job performance, and should not abandon unimportant tasks partway through the job analysis, as is frequently the case (Harvey, 1990). Importance ratings can then be combined with post-training assessments of the usefulness or thoroughness of the training.

Figure 3: Use of job tasks in evaluation of librarian training
Librarian job task areas:
A. Reference, Research and Patron Services
B. Library Management
C. Information Technology
D. Collection Care and Management
E. Teaching
F. Special Knowledge

The pattern of positive association of task importance and training usefulness will not result from simple trainee enthusiasm for the training experience itself. In such a case, trainees’ reactions are likely to be indiscriminately positive, that is, uncorrelated with task importance. When trainees’ judgments follow importance ratings, persuasive evidence of appropriate training design and effective delivery results.

In practice, scatter-diagram displays like Figures 2 and 3 have proven particularly effective in presenting the message of training effectiveness or training need. Clients have quickly grasped the logic and method of evaluation, and easily interpreted the results. In addition, the scatter diagrams are easily constructed by most spreadsheet programs, such as Microsoft Excel. The patterns that result from the scatter diagram not only can give evidence of training effectiveness but can also be used to identify wasted efforts and potential needs for further training.
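The contrast drawn above between discriminating and indiscriminately positive reactions can be checked numerically with a correlation coefficient. The sketch below computes a Pearson correlation by hand; the choice of Pearson's r is an assumption, since the article reports a correlation without naming the coefficient. The `pearson_r` function and all ratings are invented for illustration and are not data from either study.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical mean importance ratings for six tasks (five-point scale).
importance = [1.5, 2.0, 3.0, 3.5, 4.0, 4.5]

# Hypothetical usefulness ratings (nine-point scale) for two cases:
# ratings that track importance, and uniformly high ratings that do not.
discriminating = [2.0, 3.0, 4.5, 5.0, 6.5, 7.5]
indiscriminate = [8.0, 8.5, 7.5, 8.0, 7.5, 8.5]

print("tracks importance:", round(pearson_r(importance, discriminating), 2))
print("uniformly positive:", round(pearson_r(importance, indiscriminate), 2))
```

With these invented numbers the first case yields a strong positive correlation and the second a correlation close to zero, which mirrors the argument that trainee enthusiasm alone would not place tasks along the lower left to upper right diagonal.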
References

Educational Testing Service (1997), An Analysis of the Job of a Microsoft Certified Systems Engineer (Princeton, NJ: Author).
Goldstein, I. L. (1993), Training in Organizations: Needs Assessment, Development, and Evaluation, 3rd ed. (Pacific Grove, CA: Brooks/Cole).
Harvey, R. J. (1990), ‘Job Analysis’, in M. D. Dunnette and L. M. Hough (eds), Handbook of Industrial and Organizational Psychology, vol. 2 (Palo Alto, CA: Consulting Psychologists Press), pp. 71–163.
McKillip, J. (1997), ‘Need Analysis: Process and Techniques’, in L. Bickman and D. Rog (eds), Handbook of Applied Research Methods (Thousand Oaks, CA: Sage), pp. 261–84.
McKillip, J. (1998), Criterion Validity of Microsoft’s Systems Engineer Certification: Making a Difference on the Job. Report to Participants (Redmond, WA: Microsoft Corp. http://www.microsoft.com/trainingandservices/content/downloads/SysEngrCert.doc).
McKillip, J. (1999), 1999 MSCE Job Task Analysis: Report to Participants (Redmond, WA: Microsoft Corp. http://www.microsoft.com/trainingandservices/content/downloads/MCSEFTaskFAnalysis.doc).
McKillip, J. and Cox, C. (1998), ‘Strengthening the Criterion-Related Validity of Professional Certifications’, Evaluation and Program Planning, 21, 191–7.